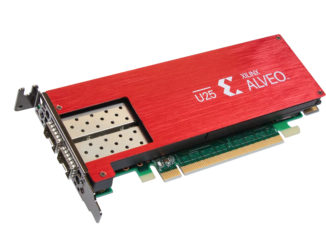

Interest in reconfigurable computing devices, most notably field programmable gate arrays (FPGAs), has noticeably ticked up. While increased velocity around a technology does not necessarily mean it is set to explode, FPGAs appear to be an exception, especially as new potential use cases emerge, hardware and programming environments improve, and the push to find specialized processors for specific workloads continues.

This is not to say that FPGAs are absent from the enterprise, but it is safe to say that their use cases, at least for the last five years, have been limited to very specific purposes. Lately, though, much of the renewed interest in FPGAs has been fed by mainstream news stories about Microsoft’s use of the accelerators to power its Bing search engine and, on the business side, by Intel’s rumored interest in FPGA maker Altera.

More quietly, the interest has also been fed by recent developments that extend the reach of FPGAs into new and emerging markets, including deep learning and analytics. Other contributing factors include extensions to the tooling that make FPGAs easier to use, a growing number of upcoming integrations into the system (IBM’s future plans with OpenPower are one example), and more logic on the FPGA itself to work with.

On the programming side, which is arguably one of the sticking points for wider FPGA adoption (unlike other accelerators with rich development ecosystems, such as Nvidia GPUs with CUDA), there is momentum toward letting programmers design in C or OpenCL rather than with the low-level models that plagued FPGA development in the past. But even with so many signs of progress, FPGAs are still stranded just outside the mainstream.

We have talked with many of the vendors in this relatively small ecosystem, including Altera and Xilinx (the two major suppliers), about what obstacles remain. According to long-time FPGA researcher Russell Tessier, however, the glory days for FPGAs, in terms of reaching the wider market, are still ahead, and new developments across the board will mean broader adoption.

Over his twenty years working with FPGAs, which he still does at the University of Massachusetts (he also had a stint at Altera and founded Virtual Machine Works, which Mentor Graphics acquired), he says FPGAs have made a slow transition from science project to enterprise reality.

“A lot of this is because of key improvements in design tools, with designers better able to specify their designs at a high level in addition to having the vendor tools that can be better mapped to chips.” He adds that, from a sheer volume perspective, the amount of logic inside the device now lets users implement increasingly large functions, which makes FPGAs attractive to a wider base.

Until relatively recently, FPGAs had a reputation problem, and it was not exactly undeserved. Large-scale implementations did require specialists to program and configure, which put FPGAs off-limits in many industries, even though financial services, oil and gas, and a few other segments judged the costs worth paying to gain speed and throughput for specific applications.

Trends over the last few years are making these devices a bit easier to work with, programmatically speaking, says Tessier. Xilinx currently encourages design at the C language level using its Vivado product, and Altera has developed its own OpenCL environment. The key, Tessier says, is that “both companies are trying to create an environment where users can program in more familiar procedural languages like C and OpenCL rather than having to be RTL design experts in Verilog or VHDL. That’s a process that’s still progressing, although there does seem to be more footing in the last few years that will help move things more into the mainstream.”

One of the real enablers for FPGAs is in the works at IBM and others, which are seeking to solve some of the memory and data movement limitations of FPGAs by coupling them tightly with the host processor over a hyper-fast interconnect. This capability might be one of the reasons some were certain the Altera and Intel talks would lead to a big purchase. The market would expand dramatically at that point, and if large companies like Intel and IBM were properly motivated to push the software ecosystem for FPGAs further, the mainstreaming of the FPGA (which will likely not be as significant as that of GPUs, at least not yet) might happen sooner.

“Increased integration with standard core processors is certainly a key here,” Tessier explains. “The barriers in the past have been languages and tools, and as those get better there will be new opportunities. Work with the chip vendors does open some new doors.”

Tessier tells The Next Platform that because of these and other “mainstreaming” trends, the application areas for FPGAs will continue to grow, in large part because the way they are being used is changing. For instance, financial services shops were among the first to use FPGAs for financial trend and stock selection analysis, but the use cases there are expanding now that the segment has bigger devices that can solve larger problems and can be strung together in larger quantities. Beyond finance, new areas such as DNA sequencing, security and encryption, and some key machine learning tasks will likely find uses for FPGAs.

In terms of what lies ahead for FPGA adoption, he notes, “There is a lot of exciting work happening at places like Microsoft with their search engine. And increased integration in servers is also a big thing around the corner. Aside from that, allowing for FPGAs in a cloud environment shows promise. Other than that, there is increasing interest in networking use of FPGAs, which isn’t new, but is gathering fresh momentum, especially with work on partial reconfiguration.”
