Even though Intel is best known in the datacenter as the maker of server processors and their chipsets, the company has increasingly become a platform thinker in the past decade. The company not only wants to supply processors, but also networking and storage, and in the HPC arena, the big platform change coming from Intel – which is being unveiled at this week’s SC15 supercomputing conference in Austin, Texas – is its Omni-Path Architecture interconnect.

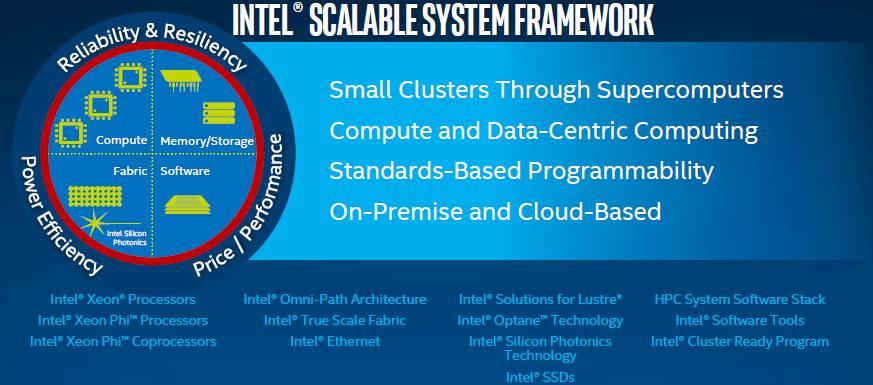

Omni-Path, known internally by the generic code name “Storm Lake,” is a vital ingredient in Intel’s Scalable Systems Framework, which was announced earlier this year as a collection of hardware and systems software components to build parallel systems for running simulation and modeling workloads and increasingly other kinds of data analytics jobs that benefit from running on clusters with low latency and high bandwidth interconnects. This hardware-level stack integration is the foundation for the OpenHPC effort that Intel and a slew of partners announced last week ahead of the SC15 event, which seeks to cultivate a complete and open source software stack for creating and managing HPC clusters and providing them with the specialized operating system kernels, math libraries, and runtimes that are specific to HPC jobs.

In a fractal zoom out repeat of history, Intel is trying to create the conditions in the cluster market that made it so successful inside the server. And there is no reason to believe Intel will not succeed in its goals given the breadth and depth of its technology and partnerships.

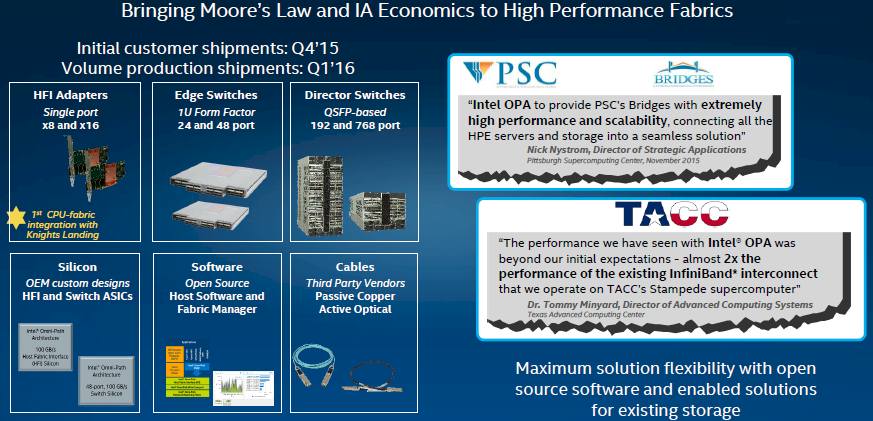

It has been no secret that the Omni-Path interconnect and the “Knights Landing” Xeon Phi processors would be the big announcements from Intel at SC15, and many had expected one or possibly both to be fully and formally launched this week. But, as it turns out, neither Knights Landing Xeon Phi nor Omni-Path switches and adapters are being fully launched this week, complete with full specs, prices, and volume shipments. As we report elsewhere at The Next Platform, the Knights Landing chips have shipped to early adopters and are now expected to launch sometime in the first half of 2016. Intel says that Omni-Path will ramp, both through its own products and from others who are using Intel silicon to make their own gear, in the first quarter of 2016.

As we go to press, the details for Intel’s own Omni-Path switches and adapters are not yet out but should be soon and Intel is not yet supplying pricing for this equipment. But Charles Wuischpard, general manager of the HPC Platform Group within Intel’s Data Center Group, disclosed some of the feeds and speeds of the Omni-Path products in a briefing ahead of SC15 and also gave some insight into where Intel expects Omni-Path uptake to start and how it fits into the Scalable Systems Framework. (We will be getting into the details as soon as we get our hands on them, and will talk about pricing as soon as it is available.)

“One of the reasons we are transcending from being a pure compute provider to taking a more system-wide view is that there are a number of well-known bottlenecks in today’s supercomputing systems that only get worse over time,” explains Wuischpard. “To move the industry and the technology forward, you really have to take more of a systemic view and look at memory, I/O, storage, and the software stack, and you have seen our investments around solving multiple challenges on multiple dimensions.”

What Intel has observed is that customers have divergent infrastructure, where they have different systems for HPC modeling and simulation, visualization, data analytics, and machine learning, and they want an architecture for infrastructure that can support all of these. Moreover, says Wuischpard, HPC workloads continue to migrate to the cloud, so Intel is creating technologies that can be used both on premises and in the cloud – and very probably in a hybrid scenario. (We would say that getting analytics and machine learning workloads tuned up for InfiniBand or Omni-Path and also adopted by public cloud providers will be a challenge, the latter part especially. Hyperscalers will do what they want, and in many cases, we think they will deploy InfiniBand or Omni-Path interconnects where the performance ends justify the breaking of the cardinal rule of homogeneity in their vast datacenters. Baidu is already using InfiniBand for machine learning clusters, for instance.)

“The idea is that we don’t want to build a one-off supercomputer for the top end, but rather an architecture that works for the top end but serves customers at all levels, from customers with small racks back in a closet to the very largest supercomputing centers in the world. And this should be something that can handle multiple workloads, whether they are compute or data centric, do it around a standard programming model, and accommodate on premise and cloud as modes of operation. Ultimately, the world favors integration, which gives reliability and resiliency and should give you much better price/performance. And the cost to move data is becoming as high or higher than compute – especially from the power consumption perspective.”

Intel has been building its Scalable Systems Framework partner ecosystem since the “Aurora” system that it is building for Argonne National Laboratory in conjunction with Cray, which is the system integrator on the 180 petaflops machine. This is essentially the first Scalable Systems Framework machine, and back in July at ISC, Hewlett-Packard said it would build HPC systems under this framework as it renewed its efforts to sell more machines at the high end of the market as well as in the middle and low end, where it already has a big slice of sales. Others have stepped up, including Dell, Fujitsu, Inspur, Colfax International, Lenovo, Penguin Computing, SGI, Sugon, and Supermicro on the hardware front and Altair, Ansys, MSC Software, and Dassault Systemes on the software front. Starting in the first quarter, Intel and these framework partners will be cooking up reference designs to make snapping together complete systems easier and quicker.

“The goal is to build a better system at a better price that is more performant, and in a multi-generational fashion with a better overall quality experience,” Wuischpard says, and he knows a thing or two about this given that he was CEO at cluster provider Penguin Computing for many years.

The new Omni-Path Series 100 adapters and switches are integral to Intel’s Scalable Systems Framework plans. “I think the industry has been waiting for this for some time, and I think anxiously,” Wuischpard says. “This is a 100 Gb/sec solution that is tuned for true application performance, and when we talk about performance, we are really talking about the performance of the application in a parallel fashion.”

A number of HPC customers have already deployed Omni-Path and we will be seeking out their insight to compare it to Intel’s own performance claims of having 17 percent lower latency and 7 percent higher messaging rates than 100 Gb/sec Enhanced Data Rate (EDR) InfiniBand. Intel is also claiming that its Omni-Path switches offer higher port density and lower power consumption compared to EDR InfiniBand, and interestingly, Intel will be selling its own cables as well as certifying third party cables for its Omni-Path gear. And taking a page out of the Oracle playbook, Intel is only offering a premium, 24×7 service level for its Omni-Path gear, which makes the choice of support level a whole lot easier. The idea is to provide premium support at a regular price, by the way.

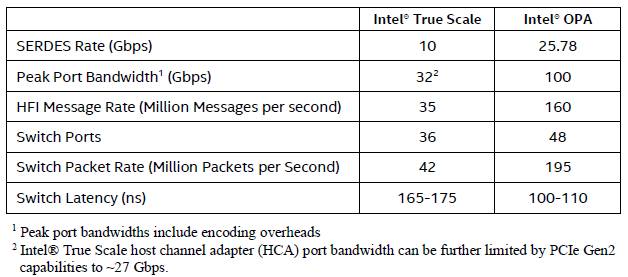

Having a 48-port switch compared to a 36-port switch for InfiniBand switches is a big differentiator, according to Intel.

On a 750-node cluster, Intel says that customers will be able to get 26 percent more compute nodes for the money because of the savings in switching costs compared to EDR InfiniBand. If you read the fine print, Intel’s comparison is for an interconnect fabric with a full bisectional bandwidth Fat-Tree configuration. The Intel Omni-Path setup uses one fully-populated 768-port director switch, while the EDR InfiniBand setup, built on Mellanox Technologies gear, uses a combination of 648-port director switches and 36-port edge switches. Intel priced up Dell PowerEdge R730 compute nodes, grabbed Mellanox pricing from a reseller, and plugged in its own suggested retail pricing for this comparison – data we have not been able to see but will endeavor to get. Mellanox no doubt will have similar comparisons and thoughts about the test setup that Intel is discussing above.
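To see why switch radix matters so much in a comparison like this, here is a minimal sketch of textbook two-tier fat-tree sizing. It is not Intel’s pricing methodology, which we have not seen. The function names are our own, and the model assumes every edge switch dedicates half of its ports to nodes and half to uplinks, which is the usual way to get full bisection bandwidth.

```python
import math


def max_nodes_two_tier(radix: int) -> int:
    """Largest full-bisection two-tier fat tree built from switches of one radix.

    Assumption: each edge switch uses radix/2 ports for nodes and radix/2
    for uplinks, and each spine switch has one link to every edge switch,
    so there can be at most `radix` edge switches.
    """
    return radix * (radix // 2)


def edge_switches_needed(nodes: int, radix: int) -> int:
    """Edge switches required to attach `nodes` hosts at full bisection."""
    return math.ceil(nodes / (radix // 2))


if __name__ == "__main__":
    for radix in (36, 48):
        print(
            f"radix {radix}: up to {max_nodes_two_tier(radix)} nodes in two tiers, "
            f"{edge_switches_needed(750, radix)} edge switches for 750 nodes"
        )
```

Under these assumptions, a 36-port ASIC tops out at 648 nodes in two tiers, which lines up with the 648-port director size cited for the InfiniBand setup, while a 48-port ASIC could in principle reach 1,152. A 750-node cluster therefore spills past a single 648-port director but fits inside a single 768-port one, which is consistent with the configurations in Intel’s fine print.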

As has been the case with Intel’s prior generation of True Scale InfiniBand products, Intel is selling adapters and switches with the follow-on Omni-Path products. But this time around, Intel is also putting the chips for its Omni-Path adapter cards and switches up for sale, too, and it will be interesting to see if any system makers decide to make Omni-Path switches or integrate them tightly into their machines. We suspect that many will, particularly in the HPC and hyperscale communities where bandwidth and low latency are highly valued. Wuischpard disclosed that more than 100 platforms, switches, and adapters are expected to ship from OEM partners in the first quarter of 2016. Admittedly, a lot of these will just be systems that have Intel’s adapter cards certified to work, but there will be interesting engineering that some companies do to embed Omni-Path deeply into their products, much as has been the case for InfiniBand in the storage and database clustering markets.

Compared to prior True Scale InfiniBand products from Intel, Wuischpard says that Omni-Path switching would be much more power efficient and provide better quality of service and error detection and correction, and do so in a manner that does not degrade the performance of the network.

More significantly, Wuischpard says that over 100,000 nodes have already been bid out by Intel and its partners in cluster bids, even though the product is only now being launched and volume shipments are not expected until the first quarter of next year.

“We have won a number of them already,” says Wuischpard, with some of the machines employing Omni-Path being announced this week at SC15 and coming from all three of the major geographies. (The two we know about are the Texas Advanced Computing Center and the Pittsburgh Supercomputing Center.) “When you look at the more than 100,000 nodes in bid, by my back of the envelope calculations that represents 20 percent to 25 percent of the overall market, and this is even before launch. I think the appetite and the interest in the industry is there. I don’t know that we expect to win all of the opportunities, but the fact that there is that level of interest is what we are really looking for. We just want to compete, and I think that we will do so very effectively.”

Omni-Path Hardware: Dash Of Aries, A Splash of True Scale

As we go to press, the detailed feeds and speeds of the Omni-Path switches and adapters are not yet available, but we have been able to gather up some information on the adapters and switches just the same.

Intel plans to integrate the Omni-Path interconnect on its impending “Knights Landing” Xeon Phi processor, providing hooks directly into the fabric from the Xeon Phi without having to go through the PCI-Express bus as other network adapters do. With select Atom and Xeon processors, Ethernet network interfaces are etched onto the processor die, but in the case of the Knights Landing chip, the two 100 Gb/sec Omni-Path ports are integrated at the package level, not at the chip level. This type of integration comes to the same thing from a functional standpoint and allows Intel to make one Xeon Phi chip but provide three distinct types of connectivity: a processor with integrated Omni-Path, a processor with links out to PCI-Express buses allowing customers to use whatever kind of network fabric they wish, and a coprocessor that hooks to any CPU through the PCI-Express bus and uses the connectivity of the server to reach the network fabric.

Eventually, Intel will also offer Omni-Path ports on future Xeon processors, and thus far it has only said that this capability will come with a future 14 nanometer Xeon chip. That could be the “Broadwell” Xeon E5 v4 expected in early 2016 or the “Skylake” Xeon E5 v5 coming in 2017, and we think it will be the latter, not the former. (As we previously reported, we know that some of the Skylake Xeons will have Omni-Path ports as well as a bunch of other interesting features, but we do not know if the Broadwell Xeons will have them.) And while it is possible that the Omni-Path ports could be pushed down into the CPU die with these future Xeons, it is far more likely that Intel does a package-level integration as it has done with the Knights Landing Xeon Phi. This is easier and probably less costly.

The Omni-Path software stack will be compliant with the OpenFabrics Alliance InfiniBand stack, so applications written to speak to Intel’s 40 Gb/sec True Scale InfiniBand adapters and switches will work on the 100 Gb/sec Omni-Path adapters and switches. Interestingly, Omni-Path will have an “offload over fabric” function using the Knights Landing processors, pushing some of the network overhead associated with HPC workloads from the processor to the fabric. (The precise nature of this offload has not been revealed, but we will be hunting that down.)

There is some chatter going on about Omni-Path not supporting Remote Direct Memory Access (RDMA), one of the innovations that gives InfiniBand its low latency edge over Ethernet. (Even after the advent of RDMA over Converged Ethernet, or RoCE, InfiniBand still has a latency edge because Ethernet has to support so many legacy features that slow it down a bit.) Intel gave The Next Platform the following statement with regards to RDMA and Omni-Path: “The claim that Omni-Path Architecture does not support RDMA is not correct. Our host software stack is OFA compliant, so it does support RDMA along with all upper layer protocols (ULPs). That being said, we have an optimized performance path for the MPI traffic called Performance Scaled Messaging (PSM), which has been enhanced from the True Scale implementation, but the I/O traffic will still use other ULPs, which includes RDMA.”

For its Omni-Path server adapters, there are two Omni-Path products at the moment from Intel. The “Wolf River” chip supports up to two 100 Gb/sec ports, each supplying 25 GB/sec of bi-directional bandwidth. This appears to be what Intel is using to bring Omni-Path to the Xeon Phi processors and, we suspect, to the Skylake Xeon E5 chips a little further down the road.

Intel is also making an Omni-Path PCI-Express adapter card, code-named “Chippewa Forest,” that implements a single port running at 100 Gb/sec. (Presumably this is using the Wolf River chip above.) Here is what the x8 and x16 versions of the adapters look like:

It is unclear if Intel will eventually deliver a two-port Omni-Path adapter card, but this also seems likely unless Intel wants to use port count to encourage customers to use its processors (both Xeon Phi and Xeon) that have integrated Omni-Path links or have them buy multiple adapters if they need a higher Omni-Path port count.

On the switch side of the Omni-Path interconnect, the “Prairie River” switch ASIC is designed to push up to 48 ports running at those 100 Gb/sec speeds; it delivers 9.6 Tb/sec of aggregate switching bandwidth, which translates into 1.2 TB/sec of bi-directional bandwidth across those 48 ports when they are humming along. Intel says that the port-to-port latency for the Prairie River ASIC is between 100 nanoseconds and 110 nanoseconds, in this case using the Ohio State Micro Benchmarks v. 4.4.1 tests with 8 byte message sizes. Running the Message Passing Interface (MPI) protocol that provides coherency across multiple nodes in a cluster for most simulation and modeling clusters, the Prairie River ASIC on the Omni-Path switches can run at 195 million messages per second (that is a uni-directional message rate), while the Wolf River Omni-Path adapter chip was measured at 160 million MPI messages per second.
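The bandwidth figures quoted for the adapter and switch chips follow from simple unit conversions, and a short sketch makes the arithmetic explicit. The helper names below are our own, and the only inputs are the port counts, port speeds, and message rates that Intel has disclosed. The per-message interval is just the reciprocal of the message rate; it is not a latency measurement.

```python
def bidirectional_gbps(ports: int, port_gbps: float) -> float:
    """Aggregate bandwidth in Gb/sec, counting both directions of every port."""
    return ports * port_gbps * 2


def gbps_to_gbytes(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


def mean_message_interval_ns(messages_per_sec: float) -> float:
    """Average time between messages, in nanoseconds, at a given message rate."""
    return 1e9 / messages_per_sec


if __name__ == "__main__":
    # One 100 Gb/sec adapter port, both directions, in GB/sec.
    adapter_gbytes = gbps_to_gbytes(bidirectional_gbps(1, 100))
    # 48-port switch ASIC, both directions, in Tb/sec and TB/sec.
    switch_tbps = bidirectional_gbps(48, 100) / 1000
    switch_tbytes = gbps_to_gbytes(switch_tbps)

    print(f"adapter port: {adapter_gbytes} GB/sec bi-directional")
    print(f"switch ASIC: {switch_tbps} Tb/sec, {switch_tbytes} TB/sec")
    print(f"switch: one message every {mean_message_interval_ns(195e6):.2f} ns")
    print(f"adapter: one message every {mean_message_interval_ns(160e6):.2f} ns")
```

The upshot is that the 9.6 Tb/sec and 1.2 TB/sec numbers are the same quantity expressed in bits and bytes, and both count traffic in each direction across all 48 ports.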

Intel has said (finally, as many of us suspected all along) that the Omni-Path interconnect is taking ideas from the True Scale InfiniBand that it acquired from QLogic and the “Aries” interconnect that it acquired from Cray (and which is at the heart of Cray’s XC30 and XC40 product lines) and mixing them in a way to get a 100 Gb/sec fabric that is tuned up for HPC workloads.

Specifically, Omni-Path takes the low-level Cray link layer (the part of the network stack that is very close to the switch and adapter iron) and merges it with the Performance Scaled Messaging (PSM) libraries for InfiniBand that QLogic created to accelerate the MPI protocol. This low-level Cray technology scales a lot further than what QLogic had on its InfiniBand roadmap before being acquired by Intel, and we have heard that the wire transport and host interfaces are better with Omni-Path, too. The point is, the preservation of that PSM MPI layer from QLogic’s True Scale InfiniBand is what makes Omni-Path compatible with True Scale applications, even if it is not technically a variant of InfiniBand.

As you can see from the table above, Omni-Path has some pretty big performance boosts in terms of bandwidth and messaging rates at the adapter and switch levels, and significantly lower latency on the port hops. It is hard to say which factors will be more important for which HPC shops, but it is probably safe to assume that supercomputers need improvement in both bandwidth and latency, and this will be increasingly true as more cores and more memory (and even different types of memory) are added to server nodes and clusters get more powerful. What will matter is how much Omni-Path networking costs relative to 100 Gb/sec Ethernet and InfiniBand alternatives, and with Intel wanting to drive adoption, we can presume that Intel will be aggressive on pricing for its Omni-Path switches and adapters.

Intel has four of its own switches that it will be peddling. The director switches are known by the code-name “Sawtooth Forest,” and they use QSFP cabling and leaf modules. One version has 192 ports in a 7U chassis and another has 768 ports in a 20U chassis. Here is what the 192-port Sawtooth Forest director switch looks like:

And here is what the 768-port behemoth looks like:

The two Intel Omni-Path edge switches that sit in the rack, acting as a go-between connecting servers to the director switches, are known by the code-name “Eldorado Forest” and they both come in 1U enclosures. One edge switch has 24 ports and the other has 48 ports. Here is what the 48-port version, which we expect Intel to push heavily, looks like:

The 24-port version only has the ports on the middle bump and not off to the right or left of the bump; it is not a half-width switch, as you might expect. Other Intel partners may do a modular design allowing for ports to be added after the fact, as some Ethernet switch makers are doing, splitting the difference between fixed port and modular switches with semi-modular units.

Pricing for the Omni-Path switches and adapters has not been announced, but we will endeavor to get it as soon as we can.
