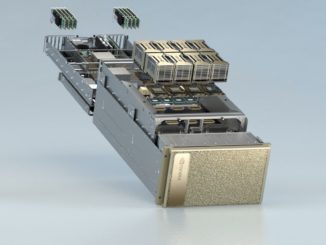

Nvidia has staked its growth in the datacenter on machine learning. Over the past few years, the company has rolled out features in its GPUs aimed neural networks and related processing, notably with the “Pascal” generation GPUs with features explicitly designed for the space, such as 16-bit half precision math.

The company is preparing its upcoming “Volta” GPU architecture, which promises to offer significant gains in capabilities. More details on the Volta chip are expected at Nvidia’s annual conference in May. CEO Jen-Hsun Huang late last year spoke to The Next Platform about what he called the upcoming “hyper-Moore’s Law” era in HPC and supercomputers that will drive such emerging technologies as AI and deep learning and in which GPUs will play an increasingly central role.

As the era of deep learning unfolds, researchers are looking at new ways to improve the underlying infrastructure – which includes the GPUs – to increase the performance of these workloads and run them more efficiently. One area that is being investigated is the use of three-dimensional integrated circuits (3DICs) as system interconnections that can significantly increase bandwidth for the applications. Among those is a group of researchers at the Georgia Institute of Technology, who recently issued a report on a study about the use of 3D stacking technologies in GPU-accelerated deep neural networks.

“Applications which require high bandwidth, such as machine learning, stand to benefit significantly from the heterogeneous 3D integration of high performance computing elements coupled with large quantities of memory,” the authors wrote, adding that “thermal constraints complicate the design of such 3D systems, however, as the areal power density of a 3DIC can be much higher than the power density of the equivalent 2D system, making heat removal and thermal coupling significant challenges in 3D systems.”

In their study, the researchers created a two-tier, air-cooled thermal testbed that includes an Nvidia Tesla K40 GPU to determine how the performance of the GPU in running deep neural network applications is impacted by power consumption and heat generation in a 3D stacking environment. The testbed included a heater/thermometer die on top comprising four independently controlled heaters. The heaters were designed to emulate a variety of components, from low-power memory to high-performance multi-core CPUs, and each heater was connected to two gold pads. The die was mounted in a back-to-back (B2B) fashion with the GPU die, though simulations showed that the thermal difference between the B2B and face-to-back (F2B) configurations – the F2B mode is more common in 3D stacking environments – was small. The package also included a copper heat spreader and other components.

During testing, the researchers ran workloads such as recognition and classifications from four popular neural network applications – LeNet, AlexNet, Overfeat and VGG-16 – through the testbed. The jobs from each neural network were run 25 time back-to-back 25 times, with the researchers recording average GPU temperature, power consumption and computation time. The process was repeated for each benchmark with the power dissipation of the top die put at different settings – zero watts, 16 watts, 24 watts, 30 watts, and 40 watts. As the power dissipation increased, the average GPU temperate rose as well – thought less so when running the LeNet workload, which has smaller computational needs that put less of a demand on the GPU than the other applications. The average GPU power consumption also grows with the top-die power dissipation when running the larger workloads (AlexNet, Overfeat, and VGG-16) until it hits 24 watts, at which point power consumption of the GPU drops significantly.

“The GPU appears to limit its performance in order to remain within its thermal envelope, as the average computation time for each benchmark stays roughly constant until the average GPU temperature approaches 90 degrees C, at which point the computation time dramatically increases,” the authors wrote. “While the average GPU power decreases at high top-die power dissipations, the computation energy increases significantly, due to the increase in computation time.”

The bottom line, according to the researchers, is that GPU-accelerated deep neural networks (DNNs) “could benefit greatly from the high bandwidth and low latency enabled by 3D integration, as DNNs require large sets of model parameters to be fed to the cores of the GPU.” However, the benefits of such a 3D stacking model could be negated to a certain extent by thermal limits. The GPU’s temperature rose as the top-die power dissipation increased, the GPU began limiting its performance when its average temperature neared 90 degrees Celsius – at about a 30 watt top-die power dissipation – so that it wouldn’t go beyond its thermal limits. In the worst-case scenario, the researchers found a 2.6X increase in computation time and a 2.2X jump in computation energy, and “we expect higher top-tier power dissipations to yield worse performance/efficiency degradation.” They argued that the results from the experiments show that what will be needed to make high-performance 3DICs a viable model for such workloads as deep neural networks are aggressive cooling techniques.

What I have usually told folks is that when you are evaluating a good on-line electronics store, there are a few factors that you have to think about. First and foremost, you would like to make sure to find a reputable as well as reliable shop that has received great assessments and scores from other people and business sector experts. This will make certain you are getting through with a well-known store to provide good service and assistance to it’s patrons. Many thanks sharing your opinions on this weblog.

Doubtful that the extra cost in manufacture of a 3D stack chip with only a 2-fold increase in performance is actually worth it. Besides who is using AlexNet or even VGG-16 nowadays? ResNet is the new king in down for CNN architecture and you can build it an enormous quantity of layers that makes VGG-16 look like a tiny network

I think GPU architectures are showing their end of this kind of workload and something new has to emerge