So you are a system architect, and you want to make the databases behind your applications run a lot faster. There are a lot of different ways to accomplish this, and now, there is yet another — and perhaps more disruptive — one.

You can move the database storage from disk drives to flash memory. You can move from a row-based database to a columnar data store that segments data and speeds up accesses to it. And for an even bigger performance boost, you can pull that columnar data into main memory to be read and manipulated at memory speeds rather than disk or flash speeds.

All of the in-memory databases – the “Hekaton” variant of SQL Server from Microsoft, the 12c database from Oracle, the BLU Acceleration add-ons for DB2 from IBM, and the HANA database from SAP are the dominant commercial ones – do the latter. (Spark kind of does the same thing for Hadoop analytics clusters, but the Spark/Hadoop stack is not a relational database, strictly speaking, even if with some overlays it can be taught to speak a dialect of SQL and behave like a very slow relational database with limitations.)

You can shard your database tables and run queries in parallel across a cluster of machines; this is a pain in the neck, but was the only reasonable option for a long time.

You can also shift to any number of NewSQL databases and NoSQL data stores, which sacrifice some of the properties of relational databases (particularly for data consistency) in exchange for speed and scale.

You can move to one of the new GPU-accelerated databases, such as MapD or Kinetica.

Or, you can slip an FPGA into the server node, load up the database acceleration layer from startup Swarm64, and not tell anyone how you made MySQL, MariaDB, or PostgreSQL run an order of magnitude faster – and be able to do so on databases that are an order of magnitude larger. If you wait a bit, and if enough customers ask for it, Swarm64 will even support Oracle database acceleration, and there is even the possibility in the future – provided there is enough customer demand – of getting FPGA acceleration for Microsoft’s SQL Server without any of the limitations that came with the Hekaton in-memory feature.

A 10X improvement in response time, database size, and data ingestion rates on a system running Swarm64 is bound to get the attention of anyone whose database application is being hampered by the performance of the underlying database engine. But the fact that it can be done transparently to popular relational databases in use at enterprises means that Swarm64 has the chance to be extremely disruptive in the part of the server market that is driven by databases.

This helps explain, in part, why Intel shelled out $16.7 billion for FPGA maker Altera back in June 2015 and also why it is keen on making hybrid CPU-FPGA compute engines, starting with a package that combines a fifteen-core “Broadwell” Xeon and an Arria 10 FPGA and eventually moving to a future Xeon chip with the FPGA etched onto the same die as the Xeon cores. The impact that acceleration of relational databases using Swarm64 could have on server shipments is enormous.

Here is how Karsten Rönner, CEO at Swarm64, sizes up the market. The world consumes somewhere on the order of 12 million servers a year, based on the 2015 data that he had on hand. About a quarter of these, or 3 million machines, are used to run relational databases and data warehouses for transaction processing and analytics, and assuming that about half of the workloads are latency sensitive and performance constrained, that is around 1.5 million database servers that might be accelerated by FPGAs. And if the company can deliver a 10X boost in performance and database size with its FPGA acceleration, that could add up to a lot of Xeon processors that Intel does not end up selling.
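Rönner's sizing is a chain of multiplications, so it can be reproduced in a few lines of Python. The first function uses only the fractions quoted above; `xeon_sockets_displaced` is a hypothetical extension that assumes two-socket servers (the configuration Swarm64 currently ships in) and full consolidation at the quoted 10X, so treat its result as a crude upper bound rather than a forecast.

```python
def addressable_db_servers(total_servers: int,
                           db_fraction: float,
                           latency_sensitive_fraction: float) -> int:
    """Servers running relational databases whose workloads are latency
    sensitive, i.e. candidates for FPGA acceleration in Rönner's model."""
    db_servers = total_servers * db_fraction
    return round(db_servers * latency_sensitive_fraction)


def xeon_sockets_displaced(accelerable_servers: int,
                           sockets_per_server: int,
                           speedup: float) -> int:
    """Hypothetical upper bound: sockets no longer needed if every
    accelerable server were consolidated by the full speedup factor."""
    sockets_before = accelerable_servers * sockets_per_server
    sockets_after = sockets_before / speedup
    return round(sockets_before - sockets_after)


# Figures from the 2015 data Rönner cites: 12M servers a year, a quarter
# running databases, half of those latency sensitive.
servers = addressable_db_servers(12_000_000, 0.25, 0.5)
# Assumption: two sockets per server and a 10X consolidation ratio.
sockets = xeon_sockets_displaced(servers, 2, 10)
print(servers, sockets)  # 1500000 2700000
```

Real displacement would be smaller, since not every workload would see the full 10X.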

As we said before, Intel must be pretty afraid of something to have paid so much money for Altera – and to have made such a beeline for it. This is certainly part of it, and Intel clearly wants to control this future rather than be controlled by it.

Swarm64 has dual headquarters in Oslo, Norway and Berlin, Germany, and was founded in 2012 by Eivind Liland, Thomas Richter, and Alfonso Martinez. The company has raised $8.7 million in two rounds of venture funding, has 19 employees, and is now touting the fact that it is partnering with Intel to push its Scalable Data Accelerator into the broader market. The Swarm64 stack is not tied to Altera FPGAs and can run on systems with Xilinx FPGAs, but clearly having Intel in your corner as you try to replace Xeon compute with FPGA acceleration is a plus rather than a minus.

Ahead Of The Hyperscalers

“The challenge of accelerating databases with either FPGAs or GPUs is that you need to push enough data through the analytical engine so that the bandwidth through it is as high as you can possibly make it,” Rönner tells The Next Platform. “For FPGA acceleration on a peripheral card, that is obviously limited to the PCI-Express bus speed, which is one limiting factor. The other element of this is to try to touch as little of the data as is possible because the more you touch it, the more bandwidth you need.”

This is one of the guiding principles in columnar databases, of course, but Swarm64 is not a columnar database. Rather, it is a highly compressed database that keeps active data in the processor main memory and committed data on flash storage. The Lempel-Ziv, or LZ, lossless data compression method is used to scrunch and expand this data, and it is implemented in VHDL on the FPGA for very high bandwidth and very high speed. The FPGAs are also used to accelerate the functioning of the underlying storage engine used in MySQL, MariaDB, and PostgreSQL databases, which is the secret sauce of the Swarm64 stack.
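Swarm64 has not published its FPGA compressor, so the following is only a generic illustration of the LZ77 flavor of Lempel-Ziv coding, written in Python for readability rather than speed: repeated byte runs are replaced by (distance, length) back-references into a sliding window of recently seen data. The window size and match limits are arbitrary choices for this sketch, not Swarm64 parameters. Highly repetitive records such as network telemetry shrink dramatically under this scheme, which is why a hardware LZ engine can stretch both capacity and effective bandwidth.

```python
from typing import List, Tuple, Union

# A token is either a literal byte (int) or a (distance, length) back-reference.
Token = Union[int, Tuple[int, int]]


def lz77_compress(data: bytes, window: int = 4096,
                  min_match: int = 3, max_match: int = 258) -> List[Token]:
    tokens: List[Token] = []
    i, n = 0, len(data)
    while i < n:
        best_len, best_dist = 0, 0
        # Brute-force search of the sliding window for the longest match.
        # A hardware engine would compare many candidates in parallel.
        for j in range(max(0, i - window), i):
            length = 0
            while (length < max_match and i + length < n
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_len, best_dist = length, i - j
        if best_len >= min_match:
            tokens.append((best_dist, best_len))
            i += best_len
        else:
            tokens.append(data[i])
            i += 1
    return tokens


def lz77_decompress(tokens: List[Token]) -> bytes:
    out = bytearray()
    for tok in tokens:
        if isinstance(tok, int):
            out.append(tok)
        else:
            dist, length = tok
            # Copy one byte at a time so overlapping matches
            # (distance < length) expand correctly.
            for _ in range(length):
                out.append(out[-dist])
    return bytes(out)


record = b"src=10.0.0.1 dst=10.0.0.2 proto=tcp\n"
data = record * 100
tokens = lz77_compress(data)
assert lz77_decompress(tokens) == data
print(len(data), len(tokens))
```

Production LZ coders (DEFLATE, LZ4 and the like) replace the brute-force search with hash chains and entropy-code the tokens; the round-trip principle is the same.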

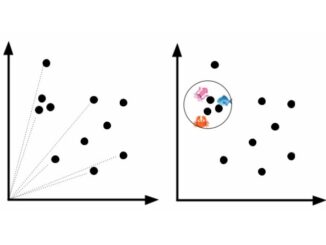

Rönner says that there are two schools of thought when it comes to database acceleration using FPGAs. The first is to literally translate SQL queries into VHDL, a hardware description language used to program FPGAs, and people have done this. But this approach has many problems, not the least of which is that it takes a lot of time to implement, and once it is implemented you cannot change the queries or the database management system. The other approach is to process parts of the SQL query on the FPGA, including filtering and SQL query preprocessing, which reduces the load on the CPU and also reduces the amount of data, and therefore bandwidth, that has to be moved into the CPU for processing. The Swarm64 storage engine is not a columnar store but rather a row store with an indexless structure, yet it only chews on the necessary parts of the data, as a columnar store does. Doing without an index eliminates a lot of the metadata and other overhead associated with a relational database and allows data to be ingested and processed in near real time as it comes in. Swarm64 does store data in its own tables, and they are obviously not in the same format as the indexed tables generated by MySQL, MariaDB, and PostgreSQL.
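The second approach amounts to filter and projection pushdown: evaluate the predicate next to the data and ship only the matching rows and needed columns to the host. The Python sketch below models this with a made-up 64-byte row layout and a plain function (`offload_scan`, a hypothetical name) standing in for the FPGA. It illustrates only the bandwidth arithmetic and says nothing about how Swarm64 actually implements its engine.

```python
import struct
from typing import Callable, Dict, List, Sequence

# Hypothetical fixed-width row: id int64, src_ip uint32, dst_ip uint32,
# bytes_sent int64, payload 40 bytes. "<" means no padding: 64 bytes/row.
ROW = struct.Struct("<qIIq40s")
COLS = ("id", "src_ip", "dst_ip", "bytes_sent", "payload")
WIDTH = {"id": 8, "src_ip": 4, "dst_ip": 4, "bytes_sent": 8, "payload": 40}


def encode_rows(rows: Sequence[tuple]) -> bytes:
    return b"".join(ROW.pack(*r) for r in rows)


def offload_scan(table: bytes, predicate: Callable[[Dict], bool],
                 columns: Sequence[str]) -> List[tuple]:
    """Stand-in for the accelerator: decode rows, apply the filter, and
    keep only the requested columns so only those cross to the host."""
    out = []
    for values in ROW.iter_unpack(table):
        row = dict(zip(COLS, values))
        if predicate(row):
            out.append(tuple(row[c] for c in columns))
    return out


def bytes_to_host(result: List[tuple], columns: Sequence[str]) -> int:
    return len(result) * sum(WIDTH[c] for c in columns)


rows = [(i, i % 256, 7, i * 10, b"x" * 40) for i in range(10_000)]
table = encode_rows(rows)
wanted = ["id", "bytes_sent"]
result = offload_scan(table, lambda r: r["src_ip"] == 42, wanted)
print(len(table), bytes_to_host(result, wanted))  # 640000 624
```

In this toy case the host receives about 0.1 percent of the bytes a full scan would move, which is the effect Rönner describes when he talks about touching as little of the data as possible.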

For the past two years, the company has been peddling its own FPGA card, based on chips from Altera, but now it is partnering with Intel to sell a hardware stack based on the current Arria 10 FPGAs and cards made by Intel, and it is positioned to use the CPU-FPGA hybrids coming later this year as well as the beefier Stratix 10 FPGAs from Intel. The current setup from Swarm64 puts a single Arria 10 FPGA card in a two-socket Xeon server.

The compression and indexlessness of the Swarm64 database storage engine allow a database table to be compressed down to a 64 TB footprint; a “real” MySQL, MariaDB, or PostgreSQL database holding the same data would take up 640 TB of space, just to give you the comparison. That is the maximum database size supported at the moment, and it is sufficient for most use cases, given that most databases in real enterprises are on the order of tens of gigabytes to tens of terabytes. This 640 TB effective capacity limit covers 95 percent of the addressable databases used in the world, according to Rönner.

To scale out the performance of the Swarm64 database storage engine, customers can put multiple FPGAs or more powerful FPGAs into their nodes, and if they need to scale horizontally, they can shard their databases (as is often done at enterprises and hyperscalers) to push the throughput even further. (This sharding doesn’t help reduce latencies as much, of course.) In the future, says Rönner, Swarm64 will implement a more sophisticated horizontal scaling method that has tighter coupling and that will not require sharding of data. Until then, customers can scale up from one, to two, to four, to eight sockets, with a larger and larger main memory footprint to accommodate larger database tables.
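Sharding typically routes each row to a node by hashing its key. In the minimal Python sketch below (the class and function names are hypothetical, and the shards are just in-process dictionaries), point lookups touch a single shard while aggregates must visit every shard, which is why sharding raises throughput more readily than it cuts latency.

```python
import hashlib
from typing import Dict, List


def shard_for(key: str, num_shards: int) -> int:
    # Use a stable hash; Python's built-in hash() for str is randomized
    # per process, so it cannot be used to route rows consistently.
    digest = hashlib.sha256(key.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


class ShardedTable:
    def __init__(self, num_shards: int) -> None:
        self.num_shards = num_shards
        self.shards: List[Dict[str, int]] = [{} for _ in range(num_shards)]

    def insert(self, key: str, value: int) -> None:
        self.shards[shard_for(key, self.num_shards)][key] = value

    def get(self, key: str) -> int:
        # Point lookup: only one shard is consulted.
        return self.shards[shard_for(key, self.num_shards)][key]

    def total(self) -> int:
        # Aggregate: scatter to every shard, then gather the partial sums.
        # On a real cluster the partials run in parallel, and the query
        # still has to wait for the slowest shard.
        partials = [sum(shard.values()) for shard in self.shards]
        return sum(partials)


table = ShardedTable(num_shards=4)
for i in range(100):
    table.insert(f"host-{i}", i)
print(table.get("host-7"), table.total())  # 7 4950
```

Changing `num_shards` remaps most keys under plain modulo hashing, which is one reason resharding is such a pain in practice.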

To prove the performance of the Swarm64 engine, the company took a network monitoring data stream and tested how quickly it could be ingested. It took a two-socket Xeon server with 256 GB of main memory and some flash drives and allocated half of the compute and memory to a MariaDB database. Using the default storage engine, this half-node could ingest and query around 100,000 packets per second of data coming in from network devices spewing their telemetry; switching to the Swarm64 engine boosted the performance of this workload to 1.14 million packets per second, without any changes to the application or to the MariaDB database management system. Contrast this with the FPGA-accelerated Netezza appliance that IBM acquired a few years back, which is based on a proprietary implementation of PostgreSQL that has had trouble keeping up with the pace of open source PostgreSQL development. And consider that you could buy a massive NUMA machine with maybe 12 or 16 or 32 sockets, put the same number of FPGAs into the box as sockets, and create a massively accelerated database that would scale up nearly linearly.
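The ratio behind the order-of-magnitude claim is easy to check, and converting packet rates into a per-packet time budget shows the time available per record shrinking from 10 microseconds to under 1 microsecond. A trivial Python sketch using only the two rates quoted above:

```python
def speedup(baseline_pps: float, accelerated_pps: float) -> float:
    return accelerated_pps / baseline_pps


def budget_us_per_packet(pps: float) -> float:
    # Microseconds available per packet at a sustained ingest rate.
    return 1_000_000 / pps


baseline, accelerated = 100_000, 1_140_000
print(round(speedup(baseline, accelerated), 1))     # 11.4
print(budget_us_per_packet(baseline))               # 10.0
print(round(budget_us_per_packet(accelerated), 3))  # 0.877
```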

Swarm64 is charging $1,000 per month per FPGA to license its software stack; the Linux driver that lets Swarm64 talk to the FPGA and the databases will eventually be open sourced, so in theory you might be able to roll your own and support yourself. Either way, you have to buy your own hardware, including the FPGA accelerators, and while FPGAs are not cheap, no key component of a modern, balanced system is more expensive than main memory. And adding experts to the IT staff who understand database sharding or in-memory databases, and porting applications to these, is not cheap either. In-memory databases like SAP HANA, reckons Rönner, cost ten times as much per unit of performance as a server using Swarm64's software and FPGAs, and the in-memory databases are limited to double-digit terabytes of capacity at that, while Swarm64 can scale pretty much as far as customers want if they are willing to tolerate sharding or pay for a big NUMA box. It is fun to contemplate a cluster of fat NUMA machines, like the 32-socket SGI UV 300 machines from Hewlett Packard Enterprise, each with 64 TB of physical memory and an effective capacity of 640 TB running the Swarm64 engine. A tidy sixteen nodes would yield a database with an effective capacity of more than 10 PB.
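The cluster arithmetic is worth spelling out. The Python sketch below multiplies physical capacity by the roughly 10:1 ratio implied by the 64 TB versus 640 TB comparison, and adds the $1,000 per FPGA per month license under a hypothetical assumption of one FPGA per socket, as in the NUMA scenario sketched earlier. Hardware costs are left out because no figures are given for them.

```python
TB_PER_PB = 1_000


def effective_capacity_tb(physical_tb: float, compression_ratio: float) -> float:
    return physical_tb * compression_ratio


def monthly_license_usd(nodes: int, fpgas_per_node: int,
                        usd_per_fpga_month: int = 1_000) -> int:
    return nodes * fpgas_per_node * usd_per_fpga_month


NODES, SOCKETS = 16, 32                         # sixteen 32-socket NUMA machines
per_node_tb = effective_capacity_tb(64, 10)     # 640 TB effective per node
cluster_pb = NODES * per_node_tb / TB_PER_PB    # 10,240 TB in total
# Assumption: one FPGA per socket, each licensed at the quoted monthly rate.
fee = monthly_license_usd(NODES, fpgas_per_node=SOCKETS)
print(per_node_tb, cluster_pb, fee)  # 640 10.24 512000
```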

At some point, Swarm64 will be able to take advantage of Optane 3D XPoint SSDs and memory sticks and really expand the effective capacity of a node or a cluster of nodes – and is planning with Intel right now on exactly how best to do this.

The company’s roadmap also calls for the Swarm64 database storage engine to use Oracle’s Data Cartridge interface, Oracle's analog to a storage engine, to provide support for Oracle databases, and this would mean that many customers would not need to buy the 12c in-memory features, which cost $23,000 per core on top of the $47,500 per core charge for the Oracle 12c Enterprise Edition database. This is potentially a huge savings for Oracle shops. Similarly, Microsoft is said to use something very similar to a storage engine for SQL Server, and Rönner believes that with a little help from Microsoft, it might take only three or four months to slide Swarm64 underneath it.
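Using the per-core list prices quoted above, the savings scale linearly with core count. The Python sketch below assumes a hypothetical 40-core server and ignores Oracle's processor core factors and any negotiated discounts, so it only illustrates the proportion: skipping the in-memory option trims roughly a third off the database license bill.

```python
EE_PER_CORE = 47_500         # Oracle 12c Enterprise Edition, per core (as quoted)
IN_MEMORY_PER_CORE = 23_000  # 12c in-memory option, per core (as quoted)


def license_cost(cores: int, with_in_memory: bool) -> int:
    per_core = EE_PER_CORE + (IN_MEMORY_PER_CORE if with_in_memory else 0)
    return cores * per_core


cores = 40  # hypothetical server size
with_option = license_cost(cores, with_in_memory=True)
without = license_cost(cores, with_in_memory=False)
saved = with_option - without
print(with_option, without, saved, round(saved / with_option, 3))
# 2820000 1900000 920000 0.326
```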

If all of that comes to pass, then those who charge per-core for their database licenses will see a revenue downdraft just like Intel will for Xeon processors and server makers will for server footprints. But Intel will make at least some of it back in FPGA sales.

All of this raises the question: If hyperscalers are so smart, why didn’t they do FPGA acceleration of storage engines before investing so much effort into flash, sharding, and NoSQL? They must really like having monolithic X86 CPU architectures, but with the Swarm64 driver open sourced, and perhaps by opening up the code to the big hyperscalers, Swarm64 might be able to close some big deals up on high, not just in the enterprise datacenter it is targeting. If nothing else, this will prove that it can be done, and if the idea takes off, hyperscalers will probably just invent it themselves. They tend to do that, even if they do reinvent the wheel a lot.

Not to be mean, but this article is in dire need of a few passes through a copy editor.

Also, I think the quoted per-core Oracle costs for in-memory and Enterprise Edition of 12c are reversed.

What is the point of putting expensive FPGAs into your system when there are SPARC CPUs with SQL accelerators available? …with relative prices similar to regular Xeons.

Btw, Xeon+FPGA on the same die will not happen (at least not for big Xeons, maybe just for small Xeon-D). Intel has already announced that future Xeons will be MCMs.

Interesting approach with FPGAs reminiscent of the Netezza approach – whatever happened to them post-acquisition?

The article also touches on the subject of SQL on Hadoop – there are options other than Spark SQL there, as discussed at https://www.linkedin.com/pulse/hadoops-biggest-problem-how-fix-mark-chopping, which give you a major improvement on “speak a dialect of SQL and behave like a very slow relational database with limitations”!

Q: why didn’t they do FPGA acceleration of storage engines before investing so much effort into flash, sharding, and NoSQL?

A: because they didn’t read the nextplatform.com

Seriously though, Google is capable of designing and producing a SQL Processing Unit, but nobody inside the corporation asked for one.