Despite the best efforts of leading cosmologists, the nature of dark energy and dark matter – which comprise approximately 95% of the total mass-energy content of the universe – is still a mystery.

Dark matter remains undetected despite the many methods employed so far to find it directly. The origin of dark energy is one of the greatest puzzles in physics. Cosmologist Katrin Heitmann, PI of an Aurora Early Science Program effort at the Argonne Leadership Computing Facility (ALCF), and her team are conducting research to shed some light on the dark universe.

“The reach of next-generation cosmological surveys will be determined by our ability to control systematic effects,” Heitmann comments. “Currently there are constraints on cosmological parameters at the few percent level. To reach a more definite conclusion about the cause of the accelerated expansion of the universe, the accuracy of these measurements must be improved. To achieve this goal, researchers have to understand the physics of the universe at a more refined level.”

Even though they are not visible, dark energy and dark matter play the most significant roles in controlling the evolution of the universe. Nevertheless, baryons, the ordinary matter that makes up the visible universe, are also important. Heitmann says that if you restrict yourself to large scales, simulations without baryons provide an adequate description of the observable universe. But now, with vastly improved optical instruments like the Large Synoptic Survey Telescope in Chile, researchers can go even deeper and access more data that will help them accurately describe the stuff of the universe. At this level of description, modeling of baryons is necessary.

Key to these advances is the deployment of new, next-generation high-performance computer systems. These include the 9.65 petaflop Theta supercomputer, which gives scientists experience with the Intel Xeon Phi processors. The next generation of these processors will be integral to the 200 petaflop Aurora supercomputer due to be installed at ALCF in 2018. Theta provides processing power equivalent to that of ALCF’s current Mira system.

The goal of the early science project on Theta is to include baryonic physics in a simulation that captures cosmological scales. This simulation will be in preparation for the arrival of Aurora, which will provide the compute capability needed to resolve the small scales that will be important for interpreting next-generation observations. Including baryonic physics in cosmological simulations poses a major challenge, since the physics is much more complicated than that of dark matter, which in the main current models interacts only gravitationally. “Our goal is to work in concert with very large optical surveys,” Heitmann says. “The simulations are needed to interpret the data, test out new theoretical ideas, and to mitigate possible systematic uncertainties in the data.”

And that means more memory and more processing power, supported by the Intel Omni-Path Architecture (Intel OPA), a next-generation member of the Intel Scalable System Framework. Intel OPA is designed to scale to tens of thousands of nodes. Moving from the earlier Mira supercomputer to Theta and then on to Aurora should bring a factor of 20 increase in performance, enabling the treatment of the complex baryonic physics in cosmological volumes, according to Heitmann.

With Theta, the cosmologists will have the compute power and fast onboard memory needed to conduct detailed simulations that include baryons and baryonic physics. “What we want to do with these next generation machines is to pull out all the stops and do a really large cosmological representation with a lot of complex physics included,” she says. “Although Theta doesn’t have the same capabilities as Aurora, it does provide us with a very nice development system that we can use to create some excellent simulations, baryons included.”

Theta is roughly the same size as Mira – a 10 petaflops machine. “The idea is to have a large chunk of the universe simulated on Theta and include the baryonic simulations as well. We have already done a gravity-only simulation and have observed the creation of large-scale structures. We are now fine tuning the code to actually run the baryonic version of that simulation.”

To really understand the baryonic physics at work in the simulation, researchers have to take into account feedback effects, for example from supernovae or active galactic nuclei. A first-principles simulation of baryons is not possible at this point. The researchers first have to create approximate models that are designed with the architecture of Theta in mind and that provide the optimum environment for processing baryonic physics.

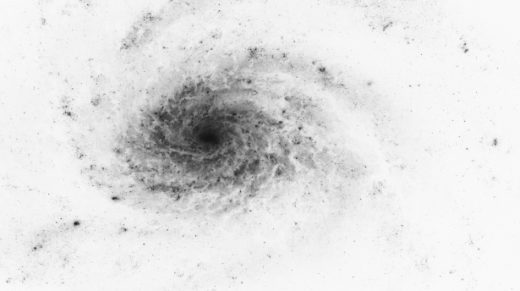

Heitmann notes that when you simulate dark matter, you cannot simulate the individual dark matter particles. She explains, “Not only do we not know what they are, but also the mass of the particles is so small you never have enough of them to conduct the simulation. The solution is to create tracer particles with a mass of 10^9 solar masses, or smaller.” During the simulation, the dark matter starts to clump, forming compact dark matter halos; galaxies live inside these halos. To sufficiently resolve halos, which cover a large range in mass and host galaxies that can be bright or very dim, the number of simulation particles must be significantly increased. The simulation volume must also be large in order to properly represent modern sky surveys. Adding particles increases the amount of memory required, as well as the computational load. Adding more physics comes with a similar price in both memory and computation.
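
To get a rough feel for that price, the particle count and memory footprint can be estimated from the box size and the tracer particle mass. The Python sketch below is only a back-of-the-envelope illustration, not the team's code: the matter density parameter (0.3), the box size (1,000 Mpc/h on a side) and the 32 bytes stored per particle are assumptions chosen for the example, and the function names are hypothetical. The critical density is the standard value of about 2.775 × 10^11 in units of (solar masses/h) per (Mpc/h)^3.

```python
# Back-of-the-envelope estimate of tracer particle count and memory.
# All default parameter values are illustrative assumptions, not
# figures from the simulations described in this article.

# Critical density of the universe in (Msun/h) per (Mpc/h)^3.
RHO_CRIT = 2.775e11


def particles_needed(box_mpc_h, particle_mass_msun_h, omega_m=0.3):
    """Number of equal-mass tracer particles needed to fill a cubic box.

    box_mpc_h            -- box side length in Mpc/h (assumed value)
    particle_mass_msun_h -- tracer particle mass in Msun/h
    omega_m              -- matter density parameter (assumed 0.3)
    """
    total_mass = omega_m * RHO_CRIT * box_mpc_h ** 3
    return total_mass / particle_mass_msun_h


def memory_terabytes(n_particles, bytes_per_particle=32):
    """Memory for particle data alone, in terabytes (10^12 bytes).

    bytes_per_particle is an assumption: for example, three positions
    and three velocities as 4-byte floats plus an 8-byte identifier.
    """
    return n_particles * bytes_per_particle / 1e12


if __name__ == "__main__":
    box = 1000.0  # assumed box side in Mpc/h
    for mass in (1e10, 1e9, 1e8):
        n = particles_needed(box, mass)
        print(f"mass {mass:.0e} Msun/h: {n:.3e} particles, "
              f"{memory_terabytes(n):.2f} TB")
```

Under these assumptions, each factor of ten reduction in tracer mass requires ten times as many particles and ten times the memory, and doubling the box side multiplies both by eight, before any baryonic physics is added.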

Theta is supplying some of that horsepower. One of its tasks is to support the ALCF Early Science Program, which helps selected teams engage in leading edge science and adapt their projects to take full advantage of the extensive parallel processing capabilities offered by Theta and its successors. Part of that work is to solidify libraries and other supercomputer essentials such as compilers and debuggers that support current and future production applications.

Using Theta, the researchers recently completed a gravity-only large-scale simulation. A set of smaller simulations included baryons in their mix.

“Almost everything we do is developed within our team,” says Heitmann. “We do not want to depend on someone else’s software that is not performing as well as it should. For example, some of the libraries were not scaling and we had to write our own I/O to get good performance. We will do this kind of work on Theta and then transfer it to Aurora.”

At this point Heitmann’s team is just about ready to run simulations that include both dark matter and detailed baryonic physics. The team will analyze those simulations to investigate clusters of galaxies. The work on Theta enables the scientists to make good use of Aurora’s exceptional computation abilities once it arrives.

“The more compute power we have, the more details we can include in our simulations. This will bring the researchers closer to depicting what the universe actually looks like without having to construct models of what we think it looks like,” she says.

In the process, by comparing simulations to observations, they may discover anomalies in the accepted depictions of the universe and the venerable Theory of General Relativity, which would cause major upheavals in the scientific community.