The software ecosystem in high performance computing is set to become more complex with the leaps in capability arriving with next-generation exascale systems. Among several challenges is making sure that applications retain their performance as they scale to higher core counts and accelerator-rich systems.

Allinea, a software development and performance profiling company that has been around for almost two decades in HPC, was recently acquired by ARM to add to the company’s software ecosystem story. We talked with one of the early employees of Allinea, VP of Product Development Mark O’Connor, about what has come before and what the software performance road ahead will look like in terms of keeping performance on pace with new machines.

TNP: You’ve been working on HPC software since the early 2000s and now at ARM, you are likely getting a different set of insights about where HPC is going. What are some of the key shifts you have seen in HPC and what was driving those?

O’Connor: Well, I remember one of the first questions I asked when we started writing DDT, the debugging tool, was how many nodes do we need to support? How many cores should we support? What should the GUI look like, and questions of that nature. But within five years we were already scaling it to hundreds of thousands of cores. There was an incredible explosion of parallelism over that period from 2002 on. But other than that pure scaling, it was really quite a homogeneous era, which I think is unusual in HPC’s history, in which basically every cluster was just a collection of Intel processors, until we started seeing GPUs coming in.

When we look at the last couple of years there’s been this explosion of interest in acceleration. We approached the limit of frequency scaling. I think the next ten years are going to be much more exciting.

TNP: What will some of the problems be from a software point of view as parallelization ticks upwards and more, or at least beefier, accelerators come into play?

O’Connor: When I look at the plight of writing software in HPC today, it is clear to me that one of the biggest challenges at the moment is performance portability. A single machine will last maybe three to five years, and the building you put it in will last for maybe ten years or more. Keeping with a particular architecture, like having a straightforward CPU or GPU or accelerators, might be on a ten- or fifteen-year cycle. But the code, the source code, for an application survives for generations of developers, graduate students, and researchers. We’ve got codes that are decades old, with hundreds of man-years poured into them.

When you’re writing an application, you often can’t afford to do a lot of vendor-specific work. You want the application to perform well on today’s architecture, but also to carry over to tomorrow’s. That’s the real challenge, and one of the things we spent a lot of time doing at Allinea is working toward openness and breaking down barriers. The starting point is a focus on producing a clear and consistent experience across all of these different platforms. No one wants to waste all the investment in codes every time a new architecture or system comes along because it will be different. Being able to make sure that the investment in building expertise can be carried on and multiplied in future systems is important to people, and we put a lot into making this possible.

TNP: Let’s talk about performance portability as people eventually move to exascale machines. In some cases, codes will need to be entirely refactored and others will have a great deal of new investment going in. What is the challenge here—what will happen as new choices are on the horizon for those that are going to have to do significant work anyway?

O’Connor: I’m not seeing any particular areas having to rewrite their codes completely. I think it’s more a case of new codes, or perhaps a bit more looking around (for instance, “shall I do this in Python first and try some techniques that are being used elsewhere?”), but there are other things to think about.

It seems like every time we go to another three orders of magnitude in power, there is always panic about whether a protocol will scale. People say, “it won’t work, we’ll have to rewrite everything in this completely different way of doing things,” but so far that hasn’t been true. We don’t have reason to believe it will be true this time either. I would still expect to see a lot of applications running MPI plus OpenMP for exascale.

One of the interesting things being done here now is around task-based parallelism. When you’re prepared to declare to a runtime how your data is laid out in memory, and to define the tasks you want to perform on that data, the runtime can decide where that data goes, including whether it is put on accelerators, and take a lot of the scheduling responsibility from the user. That gives you so many things for free for new codes that are being written. But I would be astonished if anything replaced MPI and OpenMP in the near future, because the amount of investment needed to rewrite some of the mission-critical codes is stupendous. I expect us to find ways of making traditional paradigms work and continue to work for the foreseeable future.
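To make the task-based idea concrete, here is a minimal sketch in Python. It is an illustration only, not something described in the interview and not how any production HPC runtime is implemented. The names `Task` and `run_tasks` are hypothetical. Each task declares which named data items it reads and which one it writes; a tiny scheduler then works out the ordering and runs independent tasks concurrently, so the user never writes the schedule by hand.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Task:
    """A unit of work that declares its data dependencies up front (hypothetical type)."""
    name: str
    reads: Tuple[str, ...]  # names of data items this task consumes
    writes: str             # name of the data item this task produces
    fn: Callable            # called with the values of `reads`, in order


def run_tasks(tasks: List[Task], data: Dict[str, object], workers: int = 4) -> Dict[str, object]:
    """Run each task once its declared inputs exist; independent tasks may overlap."""
    data = dict(data)       # do not mutate the caller's dictionary
    pending = list(tasks)
    running = {}            # maps each in-flight future to its task
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while pending or running:
            # Launch every pending task whose inputs are already available.
            ready = [t for t in pending if all(r in data for r in t.reads)]
            for t in ready:
                pending.remove(t)
                future = pool.submit(t.fn, *(data[r] for r in t.reads))
                running[future] = t
            if not running:
                stuck = ", ".join(t.name for t in pending)
                raise ValueError("unsatisfiable dependencies: " + stuck)
            # Block until at least one task finishes, then publish its output.
            done, _ = wait(list(running), return_when=FIRST_COMPLETED)
            for future in done:
                data[running.pop(future).writes] = future.result()
    return data


if __name__ == "__main__":
    tasks = [
        # "dot" is listed first on purpose: the scheduler, not the list order, decides when it runs.
        Task("dot", ("a2", "b1"), "result", lambda u, v: sum(x * y for x, y in zip(u, v))),
        Task("scale", ("a",), "a2", lambda a: [2 * x for x in a]),
        Task("shift", ("b",), "b1", lambda b: [x + 1 for x in b]),
    ]
    out = run_tasks(tasks, {"a": [1, 2, 3], "b": [4, 5, 6]})
    print(out["result"])  # prints 76
```

In this sketch the "placement" decision is trivial (any free thread). A real task-based runtime would use the same declared reads and writes to decide, for example, whether a task and its data belong on a CPU or an accelerator.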

In terms of changes in the HPC community, I am most encouraged by the work around open standards at the moment, things like finally bringing accelerators into OpenMP 4.0. This is going to allow vendors to be more innovative in what they provide, if they know there’s an open standard they can target, rather than having to also sell an API and another way of writing code on top of that. I think that’s going to open quite a lot of innovation in this space.

Every next step in terms of order of magnitude is hard fought and hard won. To align this with software, we have to remember it is really difficult to predict where the next bottlenecks are going to be on the next architectures and systems until you see them. This is one of the reasons why we had to make sure that we could profile at really large scale, because when you look at the behavior of an application at a small scale and then you look at it again at 10,000 or 100,000 cores, you see totally different sets of bottlenecks.
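The way bottlenecks shift with scale can be illustrated with a deliberately simple cost model. This is a sketch under assumptions that are not from the interview: a fixed amount of total work divided evenly among the cores, plus a communication term that grows with log2 of the core count, roughly as a tree-style collective would. The function names and the constants `total_work_s` and `latency_s` are hypothetical, and the small-scale figure of 100 cores is an arbitrary choice.

```python
import math


def time_breakdown(cores: int, total_work_s: float = 1000.0, latency_s: float = 0.01) -> dict:
    """Toy model: compute time shrinks as 1/cores, communication grows as log2(cores)."""
    compute = total_work_s / cores
    communicate = latency_s * math.log2(cores) if cores > 1 else 0.0
    return {"compute": compute, "communicate": communicate}


def dominant_cost(cores: int) -> str:
    """Name of the largest term in the toy model at this core count."""
    parts = time_breakdown(cores)
    return max(parts, key=parts.get)


if __name__ == "__main__":
    for cores in (100, 10_000, 100_000):
        parts = time_breakdown(cores)
        print(f"{cores:>7} cores: dominant={dominant_cost(cores)}, "
              f"compute={parts['compute']:.4f}s, communicate={parts['communicate']:.4f}s")
```

With these made-up constants, compute dominates at 100 cores while communication dominates at 10,000 and 100,000 cores. Real applications have many more terms (I/O, load imbalance, memory contention), which is why measuring at scale matters more than any model.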

To a large extent, the same is true with debugging. Strictly because of race conditions, when you go to another order of magnitude in scale you find new bugs you didn’t know you had. One-in-a-million chances happen nine times out of ten at the scale of something like Titan. It’s always a challenge. The question really is whether we need new software development paradigms. I don’t think we have the choice to move to a new software development paradigm. I don’t think that’s an option for HPC.
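The "one in a million" remark follows from basic probability. If a bug fires with probability p at each opportunity, and the opportunities are independent (an assumption made here for illustration), the chance of seeing it at least once in n opportunities is 1 - (1 - p)^n. The sketch below computes that; the function names and the values of n are illustrative, not measurements from Titan or any other system.

```python
import math


def prob_at_least_once(p: float, n: int) -> float:
    """Chance that an event with per-trial probability p occurs at least once in n independent trials."""
    # Equivalent to 1 - (1 - p) ** n; log1p/expm1 keep precision when p is tiny.
    return -math.expm1(n * math.log1p(-p))


def trials_needed(p: float, target: float) -> int:
    """Smallest n for which prob_at_least_once(p, n) reaches the target probability."""
    return math.ceil(math.log1p(-target) / math.log1p(-p))


if __name__ == "__main__":
    p = 1e-6  # a "one in a million" race condition
    for n in (1_000, 100_000, 10_000_000):
        print(f"n={n:>10,}  P(at least once)={prob_at_least_once(p, n):.4f}")
    print("opportunities needed for a 90% chance:", trials_needed(p, 0.9))
```

Under these assumptions, a one-in-a-million event needs roughly 2.3 million independent opportunities to show up nine times out of ten. Here n counts opportunities, that is, the number of processes multiplied by how often each one passes through the racy code path, which is why the odds climb so quickly with scale.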

TNP: In the here and now, is there a particular architecture or accelerator that highlights this performance portability problem particularly well? And for Allinea/ARM, do new elements (HBM, 3D XPoint, etc.) create new challenges for moving performance across generations of systems?

O’Connor: Every architecture has its own unique opportunities, and it’s not so much a problem of general portability as it is a challenge in making the most of those architectures. I think when GPUs came out, it was a while before people got a really good handle on how to get the most performance out of them, and we saw a huge improvement in performance over time as people really dug into that more. A part of this, of course, is that when a new architecture comes out, you really need the tool support to be there from the start. And it’s only this year, for example, that we started to see support for showing how much time is spent on each line of source code for GPUs.

We see many similar things in this area moving to manycore, not just dozens, but hundreds of cores. Getting all of these cores to work efficiently with each other, getting memory to be allocated in the right places, making the best use of it, that’s always a challenge. It’s going to be the same with making use of high bandwidth memory and nonvolatile memory as the I/O hierarchy gets deeper. All of these things are really fascinating problems, and the difficulty is when you work in HPC you already have really hard problems to solve, it’s at the limits of what humanity can do, and getting it right on a new architecture is a considerable problem. So we work really hard on our tools to try to keep that issue as simple as we can.

From a broader technology point of view, new system elements are fairly straightforward in terms of supporting new APIs. We are working with all of these partners on that technology to make sure that we can provide a good experience for the user.

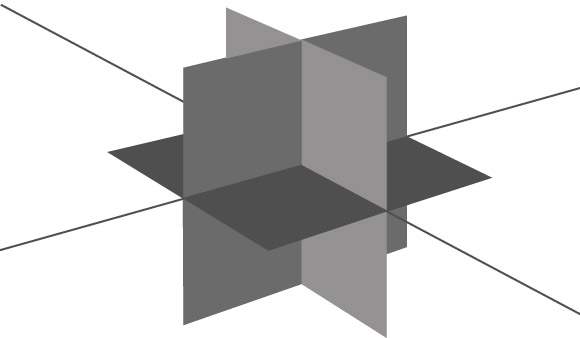

For me, however, the hardest part is thinking, ok, now we’ve got all of this information, how on earth do we expose it so that a human can look at it, understand it, and grasp it intuitively? Working out how to show what one hundred thousand different processes are doing, so that someone can look at one image and see the big picture, that will be interesting. And doing this with a deeper storage hierarchy? It’s going to be even more interesting.

TNP: On a slightly different note to end, now that Allinea is part of ARM, what do you see on the horizon for HPC in terms of processor choice and the software ecosystem that will need to be there for people in HPC to get behind ARM?

O’Connor: I can’t talk about any partners or customers specifically, but there is a lot of interest in ARM, particularly in the US, in fact. I think the industry as a whole is very interested in seeing more freedom of choice. This is one of the reasons Allinea found it very easy to become a part of ARM, and why the two fit: the hardware side has always been about openness and breaking down these barriers, so that if, as a software guy, I write my code targeting an instruction set, then I can run that on hardware from dozens or hundreds of different companies. That’s exactly what we’ve been trying to achieve with Allinea tools in HPC. So being able to continue extending that, with the strong support inside ARM as we continue to focus on cross-platform support, and this culture of openness and building an ecosystem that breaks down the barriers that have stopped people from moving their code, continues to be a lot of fun.
