Someone is going to commercialize a general purpose, universal quantum computer first, and Intel wants to be the first. So does Google. So does IBM. And D-Wave is pretty sure it already has done this, even if many academics and a slew of upstart competitors don’t agree. What we can all agree on is that there is a very long road ahead in the development of quantum computing, and it will be a costly endeavor that could nonetheless help solve some intractable problems.

This week, Intel showed off the handiwork of its engineers and those of partner QuTech, a quantum computing spinoff from the Technical University of Delft and Toegepast Natuurwetenschappelijk Onderzoek (TNO), which as the name suggests is an applied science research firm that, among other things, is working with Intel on quantum computing technology.

TNO, which was established in 1988, has a €500 million annual budget and does all kinds of primary research. The Netherlands has become a hotbed of quantum computing technology, along with the United States and Japan, and its government wants to keep it that way. Hence the partnership in late 2015 with Intel, which invested $50 million in the QuTech partnership between TU Delft and TNO so it could jumpstart its own quantum computing program after sitting on the sidelines.

With this partnership, Intel is bringing its expertise in materials science, semiconductor manufacturing, interconnects, and digital systems to bear to help develop two types of quantum bits, or qubits, which are the basic element of processing in a quantum computer. The QuTech partnership involves the manufacturing of superconducting qubits, but Intel also is working on another technology called spin qubits that makes use of more traditional semiconductor technologies to create what is, in essence, the quantum transistor for this very funky and very parallel style of computing.

The big news this week is that Intel has been able to take a qubit design that its engineers created alongside those working at QuTech and scale it up to 17 qubits on a single package. A year ago, the Intel-QuTech partnership had only a few qubits on their initial devices, Jim Clarke, director of quantum hardware at Intel, tells The Next Platform, and two years ago it had none. So that is a pretty impressive roadmap in a world where Google is testing a 20 qubit chip and hopes to have one running at 49 qubits before the year is out. Google also has quantum annealing systems from D-Wave, which have much more scale in terms of qubits – 1,000 today and 2,000 on the horizon – but according to Intel are not generic enough to be properly commercialized. And if Intel knows anything, it knows how to create a universal computing substrate and scale its manufacturing and its deployment in the datacenters of the world.

“We are trying to build a general purpose, universal quantum computer,” says Clarke. “This is not a quantum annealer, like the D-Wave machine. There are many different types of qubits, which are the devices for quantum computing, and one of the things that sets Intel apart from the other players is that we are focused on multiple qubit types. The first is a superconducting qubit, which is similar to what Google, IBM, and a startup named Rigetti Computing are working on. But Intel is also working on spin qubits in silicon that are very similar to our transistor technologies, and you can expect to hear about that in the next couple of months. These spin qubits build on our expertise in ordinary chip fabrication, and what really sets us apart here is our use of advanced packaging at very low temperatures to improve the performance of the qubit, and with an eye towards scalability.”

Just as people are obsessed with the number of transistors or cores on a standard digital processor, people are becoming a bit obsessed with the number of qubits on a quantum chip, and Jim Held, director of emerging technology research at Intel Labs, says that this focus is a bit misplaced. And for those of us who look at systems for a living, this makes perfect sense. Intel is focused on getting the system design right, and then scaling it up on all vectors to build a very powerful quantum machine.

Here is the situation as Held sees it, and breathe in deeply here:

“People focus on the number of qubits, but that is just one piece of what is needed. We are really approaching this as engineers, and everything is different about this kind of computer. It is not just the devices, but the control electronics and how the qubits are manipulated with microwave pulses and measured with very sensitive DC instrumentation, and it is more like an analog computer in some respects. Then it has digital electronics that do error correction because quantum devices are very fragile, and they are prone to errors and to the degree that we can correct the errors, we can compute better and longer with them. It also means a new kind of compiler in order to get the potential parallelism in an array of these qubits, and even the programs, the algorithms, written for these devices are an entirely different kind of thing from conventional digital programming. Every aspect of the stack is different. While there is research going on in the academic world at all levels, as an engineering organization we are coming at them all together because we know we have to deliver them all at once as a computer. Moreover, our experience tells us that we want to understand at any given point what our choices at one level are going to mean for the rest of the computer. What we know is that if you have a plate full of these qubits, you do not have a quantum computer, and some of the toughest problems with scaling are in the rest of the stack. Focusing on the number of qubits or the coherence time really does a disservice to the process of getting to something useful.”

This is analogous to massively parallel machines that don’t have enough bandwidth or low latency to talk across cores, sockets, or nodes efficiently and to share work. You can cram as many cores as you want in them, but the jobs won’t finish faster.

And thus, Intel is focusing its research on the interconnects that will link qubits together on a device and across multiple devices.

“The interconnects are one of the things that concerns us most with quantum computing,” says Clarke. “From the outset, we have not been focused on a near-term milestone, but rather on what it would take from the interconnect perspective, from the point of view of the design and the control, to deliver a large scale, universal quantum computer.”

Interestingly, Clarke says that the on-chip interconnect on commercial quantum chips will be similar to that used on a conventional digital CPU, although it may be made out of superconducting materials rather than copper wires.

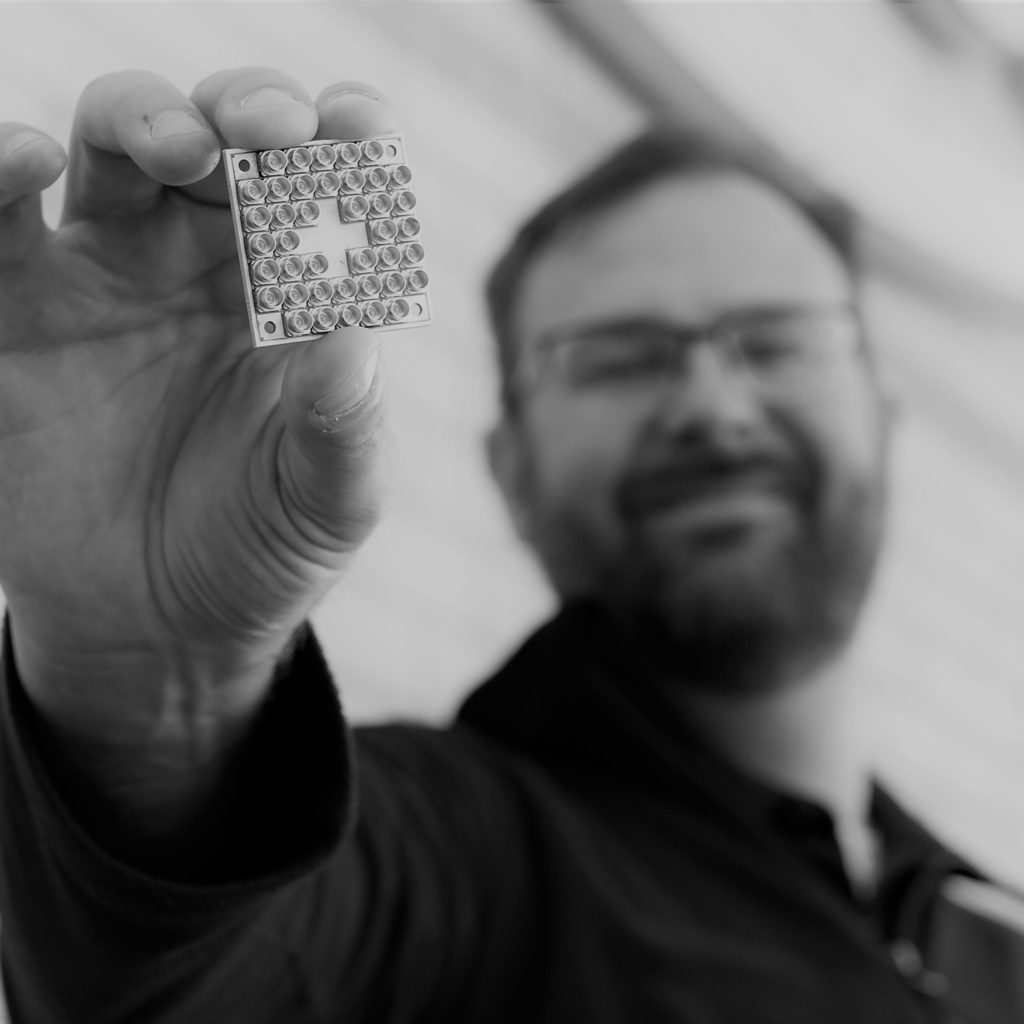

The one used in the superconducting qubit chip that Intel just fabbed in its Oregon factory and packaged in its Arizona packaging facility is a bit ridiculous looking.

Quantum computing presents a few physical challenges, and superconducting qubits are especially tricky. Preserving the quantum states that allow superposition – a kind of multiple, concurrent state of the bits that allows for parallel processing at the bit level, to oversimplify hugely – requires that these analog devices be kept at extremely cold temperatures, and yet they still have to interface with the control electronics in the outside world, crammed into a rack.

“We are putting these chips in an extremely cold environment – 20 millikelvins, and that is much colder than outer space,” says Clarke. “And first of all, we have to make sure that the chip doesn’t fall apart at these temperatures. You have thermal coefficient of expansion. Then you need to worry about package yield and then about the individual qubit yield. Then we worry about wiring them up in a more extensible fashion. These are very high quality radio or microwave frequency chips and we have to make sure we maintain that quality at low temperature once the device is packaged. A lot of the performance and yield that we are getting comes from the packaging.”

So for this chip, Intel has wallpapered one side of the chip with standard coaxial ports, like the ones on the back of your home router. Each qubit has two or more coax ports going into it to control its state and to monitor that state. How retro:

“We are focused on a commercial machine, so we are much more interested in scaling issues,” Held continues along this line of thinking. “You have to be careful to not end up in a dead end that only gets you so far. This quantum chip interconnect is not sophisticated like Omni-Path, and it does not scale well,” Held adds with a laugh. “What we are interested in is improving on that to reduce the massive number of connections. A million qubits turning into millions of coax cables is obviously not going to work. Even at hundreds of qubits, this is not going to work. One way we are going to do this is to move the electronics that is going to control this quantum machine into this very cold environment, not down at the millikelvin level, but a layer or two up at the 4 kelvin temperature of liquid helium. Our partners at QuTech are experts at cryo-CMOS, which means making chips work in this 4 kelvin range. By moving this control circuitry from a rack outside of the quantum computer into the refrigeration unit, it cuts the length of the connections to the qubits.”
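To put rough numbers on Held's point about cabling, here is a back-of-the-envelope sketch in Python. It assumes the minimum of two coax ports per qubit mentioned above; the function name `coax_lines` and the default of two ports are ours for illustration, not anything Intel has published.

```python
# Back-of-the-envelope sketch: coax lines needed if every qubit gets its own ports.
# Assumption: two ports per qubit, the minimum cited in the article ("two or more").

def coax_lines(n_qubits: int, ports_per_qubit: int = 2) -> int:
    """Return the number of coax lines when each qubit is wired individually."""
    return n_qubits * ports_per_qubit

# The 17 qubit chip, a few hundred qubits, and Held's million-qubit example.
for n in (17, 300, 1_000_000):
    print(f"{n:>9,} qubits -> at least {coax_lines(n):>9,} coax lines")
```

The count only grows in step with the number of qubits, but every one of those lines has to run from room temperature down into the refrigerator, which is why moving the control electronics closer to the chip matters.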

With qubits, superposition allows a single qubit to represent two different states, and quantum entanglement – what Einstein called “spooky action at a distance” – allows for the states to scale exponentially as the qubit counts go up. Technically, n quantum bits yield 2 to the n states. (We wrote that out because there is something funky about superscripts in the Alike font we use here at The Next Platform.) The interconnect is not used to maintain the quantum states across the qubits – that happens because of physics – but to monitor the qubit states and maintain those states and, importantly, to do error correction. Qubits can’t be shaken or stirred or they lose their state, and they are extremely fussy. As Google pointed out two years ago at the International Supercomputing Conference in Germany, a quantum computer could end up being an accelerator for a traditional parallel supercomputer, which is used to do error correction and monitoring of qubits. Intel is also thinking this might happen.
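For a feel of what 2 to the n means in practice, the short Python sketch below tabulates the state count for the chip sizes mentioned in this story (17, 20, and 49 qubits). The helper name `basis_states` is ours, and this is plain arithmetic, not a simulation of any real device.

```python
# Illustrative arithmetic only: n qubits span 2 to the n basis states.

def basis_states(n_qubits: int) -> int:
    """Return 2 to the n, the number of basis states for n qubits."""
    return 2 ** n_qubits

# Chip sizes mentioned in the article: Intel's 17, Google's 20 and planned 49.
for n in (17, 20, 49):
    print(f"{n} qubits -> {basis_states(n):,} states")

# Each added qubit doubles the count.
assert basis_states(18) == 2 * basis_states(17)
```

Going from 17 qubits to 49 is less than a threefold increase in qubits, but the state count goes from about 131 thousand to about 563 trillion.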

The fussiness of superconducting qubits is probably one of the reasons why Intel is looking to spin qubits and a more standard semiconductor process to create a quantum computer chip whose state is easier to maintain. The other is that Intel is obviously an expert at manufacturing semiconductor devices. So, we think, the work with QuTech is as much about creating a testbed system and a software stack that might be portable as it is investing in this particular superconducting approach. Time will tell.

And time, indeed, it will take. Both Held and Clarke independently think it will take maybe eight to ten years to get a general purpose, universal quantum computer commercialized and operating at a useful scale.

“It is research, so we are only coming to timing based on how we think we are going to solve a number of problems,” says Held. “There will be a milestone where a machine will be able to tackle interesting but small problems, and then there will be a full scale machine that is mature enough to be a general purpose, widely useful accelerator in the supercomputer environment or in the cloud. These will not be free-standing computers because they don’t do a lot of things that a classical digital computer does really well. They could do them, because in theory any quantum computer can do anything a digital computer can do, but they don’t do it well. It is going to take on the order of eight to ten years to solve these problems we are solving now. They are all engineering problems; the physicists have done an excellent job of finding feasible solutions out of the lab and scaling them out.”

Clarke adds a note of caution, pointing out that there are a lot of physics problems that need to be solved for the packaging aspects of a quantum computer. “But I think to solve the next level of physics problems, we need a healthy dose of engineering and process control,” Clarke says. “I think eight to ten years is probably fair. We are currently at mile one of a marathon. Intel is already in the lead pack. But when we think of a commercially relevant quantum computer, we think of one that is relevant to the general population, and moreover, one that would show up on Intel’s bottom line. The key is that we are building a system, and at first, that system is going to be pretty small but it is going to educate us about all aspects of the quantum computing stack. At the same time, we are designing that system for extensibility, both at the hardware level and at the architecture control level to get to many more qubits. We want to make the system better, and larger, and it is probably a bit premature to start assigning numbers to that other than to say that we are thinking about the longer term.”

It seems we might need a quantum computer to figure out when we might get a quantum computer.