For several years, GPU acceleration paired with Intel Xeon processors was the dominant hardware news at the annual Supercomputing Conference. This year, however, that trend shifted in earnest, with a major coming-out party for ARM servers in HPC and more attention than ever paid to FPGAs as potential accelerators for future exascale systems.

The SC series held two days of rapid-fire presentations on the state of FPGAs for future supercomputers, with insight from academia, vendors, and end users at scale, including Microsoft. To call Microsoft an FPGA user is a bit of an understatement, however, since the company has the largest known installation of FPGAs on the planet, dedicated to both network offload and application acceleration.

Andrew Putnam, one of the founding leads behind Microsoft’s Catapult FPGA program, was among the presenters on the reconfigurable exascale front during SC. While he can see a path to exascale scientific computing for traditional HPC, he reminded everyone that Microsoft’s scale dwarfs that of HPC, a fact that put the conversation about FPGAs at scale into real perspective. “Here at the Supercomputing conference, the largest, fastest four machines are up to 40 or so single or dual socket servers. But in just each one of our datacenters there are between 20,000 and 100,000 dual socket machines with giant Xeon CPUs. And this is not just in one datacenter; we have been steadily adding to our footprint, adding now to six continents in 17 countries.”

Putnam continues, putting HPC’s exascale woes into much tighter perspective. “We [Microsoft] talked about how we had millions of servers a few years ago, so you can only imagine how many we have now. These things burn hundreds of megawatts and our power bill alone is in the hundreds of millions of dollars. We are growing 100% per year in terms of the number of cores in our datacenters, a pace we’ve kept for the last five years. In 2010, we had 100,000 compute instances, today we have millions. Azure storage went from tens of petabytes to exabytes; the datacenter networks went from tens of terabits per second and now to petabits per second,” Putnam says.
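For a sense of what that pace implies, a back-of-the-envelope calculation helps: growing 100% per year means doubling annually, which compounds to a 32-fold increase over five years. The short sketch below works through that arithmetic; the function name is purely illustrative, and no starting core count is assumed because none is given.

```python
def compound_growth(annual_rate: float, years: int) -> float:
    """Return the total growth multiplier after compounding for `years` years.

    annual_rate is a fraction, so 1.0 means 100% growth per year (doubling).
    """
    return (1.0 + annual_rate) ** years


# Doubling every year for five years, per the figures quoted above.
multiplier = compound_growth(annual_rate=1.0, years=5)
print(f"Total growth over five years: {multiplier:.0f}x")
```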

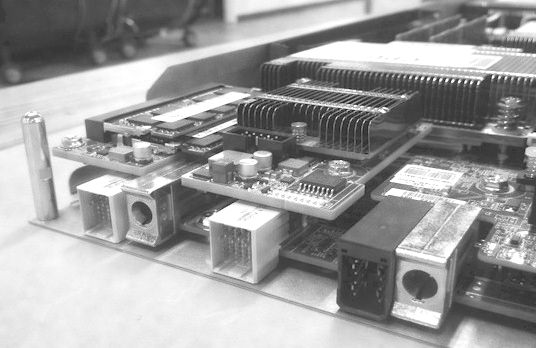

But the real point, beyond the growth, is that every server added since 2015 has a big FPGA sitting in back, hooked into PCIe for traditional compute offload tasks (i.e., taking a piece of code, writing it in RTL, and sending it across the bus) and also sending network traffic straight to the NIC without roping in the CPU. What also makes Microsoft’s use of FPGAs so fascinating, apart from the sheer scale and range of applications, is that the FPGAs can all talk to one another across the company’s vast network of datacenters worldwide. In short, a network of over 100,000 FPGAs is no small feat, and with just a couple of network hops, one FPGA can reach another globally.

All of this FPGA investment in Catapult has meant halving the server spend on the Bing search workflow, Putnam says, and has led to other proof of concept programs like the Microsoft Azure Smart NIC and BrainWave, the company’s deep learning accelerator. And while this is all big efficiency news for Microsoft, Putnam says the hard work on FPGAs is definitely not over, especially for the next stage of adoption at Microsoft and elsewhere.

“The FPGA community has to figure out a way to be able to build on legacy, on work that has come before rather than having to throw everything away and start fresh with a new generation.”

“The biggest problem for FPGAs at scale for supercomputers or hyperscale is not actually that they are hard to program,” Putnam says. He agrees they are not always simple for developers, but the problem is larger and more nuanced than that. “With what programming difficulties there are, people have to go back every time a new generation FPGA comes out and fight the battles they already won over and again.”

The cloud can offer a relatively stable hardware platform to counter this lack of deep roots. “What we really need to be doing is dividing code into hardware microservices so instead of worrying about what application an FPGA is focused on, we send the data to the application and it promises to give everything back without worrying about what is in the middle. This is what we call hardware as a service at Microsoft and there’s a lot of work we can do here.”
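To make the idea concrete, here is a minimal sketch of what a hardware microservice contract might look like from the caller's side. It is not Microsoft's implementation: every name in it (`ChecksumService`, `SoftwareChecksum`, `OffloadedChecksum`, `verify`) is hypothetical, and the "offloaded" backend simply reuses the CPU path so the example runs anywhere. The point is only that the caller depends on the contract, not on which device or FPGA generation sits behind it.

```python
import zlib
from typing import Protocol


class ChecksumService(Protocol):
    """Hypothetical service contract: send bytes in, get a checksum back."""

    def checksum(self, payload: bytes) -> int: ...


class SoftwareChecksum:
    """Plain CPU implementation backed by the standard library."""

    def checksum(self, payload: bytes) -> int:
        return zlib.crc32(payload)


class OffloadedChecksum:
    """Stand-in for an accelerator-backed implementation (assumption).

    A real backend would ship the payload to hardware. Here it falls back
    to the CPU path so the sketch stays runnable without an FPGA.
    """

    def __init__(self, generation: str) -> None:
        self.generation = generation  # illustrative label only

    def checksum(self, payload: bytes) -> int:
        return zlib.crc32(payload)


def verify(service: ChecksumService, payload: bytes, expected: int) -> bool:
    """Caller code: unaware of what sits behind the service."""
    return service.checksum(payload) == expected


data = b"exascale"
expected = zlib.crc32(data)
for backend in (SoftwareChecksum(), OffloadedChecksum("gen-2")):
    print(type(backend).__name__, verify(backend, data, expected))
```

Because `verify` is written against the contract alone, swapping one backend for another, or one hardware generation for the next, would not require touching the calling code, which is the kind of reuse Putnam is arguing for.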

Ultimately, Putnam says that for FPGAs to take off in supercomputing, it is worthwhile to look to an HPC standby for guidance. Fortran, he says, is a great example of where FPGA programmability needs to go: it is something that can be iterated on over years by teams regardless of hardware, time, or place. Code reuse is central to solving at least some of the programmability challenges, both for exascale and for its much larger hyperscale counterpart.
