One of the most interesting and strategically located datacenters in the world has taken a shine to HPC, and not just because it is a great business opportunity. Rather, Verne Global is firing up an HPC system rental service in its Icelandic datacenter because its commercial customers are looking for supercomputer-style systems that they can rent rather than buy to augment their existing HPC jobs.

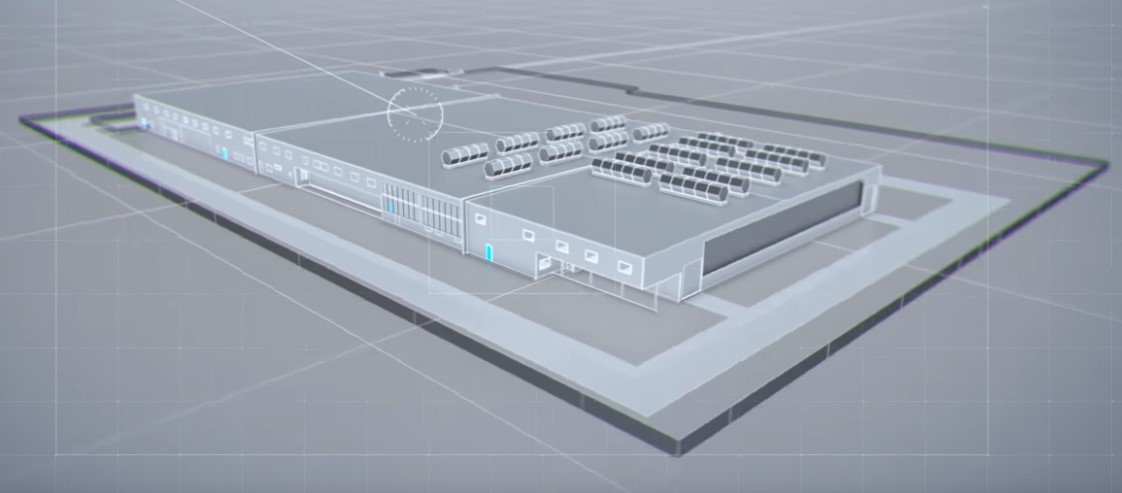

Verne Global, which took over a former NATO airbase and an Allied strategic forces command center outside of Keflavik, Iceland back in 2012 and converted it into a super-secure datacenter, is this week taking the wraps off a new service it calls HPC Direct. The Verne Global facility has well over 100 megawatts of potential capacity to draw on, and thus far it has made data rooms out of about 25 megawatts of that capacity. Dominic Ward, managing director at Verne Global, tells The Next Platform that the company has the capability to scale to over 100 petaflops of compute within the facility, but obviously has not built this capability out because, by our reckoning, it would cost many hundreds of millions of dollars to do that with a reasonable mix of CPU-only and CPU-GPU hybrid clusters.

Up until now, Verne Global has been primarily focused on being a co-location facility where enterprises plunk their systems, storage, and networks in secure data rooms and do what they do. But with the HPC Direct offering, Verne Global is getting a little cloudy and offering to invest in systems and sell dedicated, bare metal capacity to customers who make a relatively long commitment to using that gear. Customers will be billed monthly, on a subscription basis like the other big public clouds that now have HPC-style compute with CPUs and often augmented by GPUs or FPGAs. The HPC service is meant to be an adjunct to the compute and storage that companies already have on site for their core commercial applications, and by selling bare metal HPC slices, Verne Global can help companies avoid a substantial capital outlay as they explore the use of traditional HPC applications or fire up new workloads such as machine learning.

“This is very much a customer-led demand,” says Ward. “High performance computing has always been at the heart of everything that we have done. Each of our customers, regardless of industry or vertical sector, have similar challenges around HPC and they all use HPC in a slightly different way with different application types. But one of the things we observed about the way customers use their compute is that often HPC as a service could augment their existing resources. What we have created is something to cater to this demand. We are not trying to replace anything – although we would be happy to do that if any customers want to do that.”

The very first customers that Verne Global lined up five years ago were in the automotive sector, where there is a lot of computer aided design and computational fluid dynamics work, and in the financial services sector, where big banks and hedge funds run Monte Carlo simulations for risk analytics and investment portfolio management. The company had a pretty fast uptake for various other kinds of scientific simulations, including weather forecasting done by shipping, agricultural, and air transportation companies and various bioinformatics and genetic sequencing applications needed by research organizations in North America and Europe. From this core base, Verne Global has expanded out to cover tens of industries, according to Ward, and has even added some customers from Asia.

Part of the reason for that expansion is that two months ago the TeleGreenland undersea fiber optic cable that links Iceland to North America and Europe was upgraded to 100 Gb/sec and now Verne Global can offer customers more bandwidth at much lower prices. Verne Global has a slew of points of presence (POPs) that link into the redundant multi-terabit/sec telecom network that it accesses from Iceland; the network links terminate right inside the datacenter itself. Electricity comes directly into the datacenter, too, through Iceland’s dual source – hydroelectric and geothermal – power grid. The datacenter is cooled completely by ambient air, and there are redundant mechanical cooling systems in the event that a fluke of weather causes outside air cooling to stop working. The datacenter has backup UPSes and diesel generators that can let it ride out brownouts and keep going in the event of blackouts.

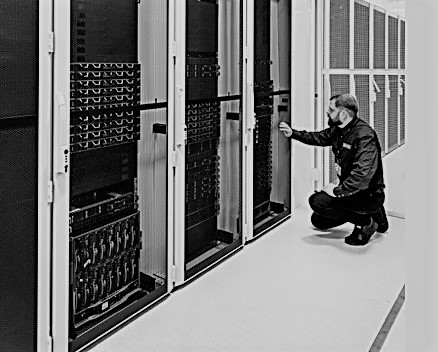

During the beta program of the HPC Direct service, customers used a mix of “Haswell” and “Broadwell” Xeon E5 systems with a variety of configurations tuned to their particular applications. Verne Global is adding “Skylake” Xeon SP nodes now, too. The base setup for the HPC Direct service has Skylake Xeon processors with 14 GB of memory per core allocated to them. The servers have NVM-Express flash cards and flash SSDs for local storage, and link out to the world with dual 10 Gb/sec Ethernet ports and to each other using a dual-rail 100 Gb/sec EDR InfiniBand network. All data on both networks is encrypted by default, and these networks are designed from the get-go for secure multitenancy.

As for Skylake Xeons, some of the computational fluid dynamics codes that Verne Global’s customers want to run on the HPC service do not yet scale as well on these new processors as they do on the earlier Broadwell Xeons, and the added cost of Skylakes is prohibitive to them, too. But Ward is confident that over time the codes will be tuned and run better on Skylakes and then customers will want more oomph if they can get it at a reasonable price.

“Some customers have seen huge performance increases with Skylake Xeons, and I really do believe that the choice of hardware is application driven,” says Ward. “We work with customers to provide them with the hardware that will work most efficiently for their workloads. We are agnostic about architecture, and are very keen to say so.”

Thus far, the demand for GPU accelerated systems has been “relatively light,” according to Ward, but the pace is expected to pick up as more customers jump into machine learning. The company has one customer, called DeepL, that is using a GPU accelerated system that weighs in at 5.1 petaflops and runs machine learning training for natural language processing.

The HPC Direct service does not run on shared infrastructure, which is important for the kinds of customers that Verne Global is attracting. The machines are offered as bare metal, not virtualized, to get maximum performance, and there are no upfront fees to reserve instances. It does take time to place an order and build clusters to specifications, just like it would with any OEM or ODM.

It is tough to compete with the HPC compute at Amazon Web Services and Microsoft Azure, Ward concedes, but once you take into account the cost of moving data around inside the cloud as it is being processed, then the costs on those public clouds skyrocket. Verne Global is not charging for moving data around the infrastructure – linking co-lo systems with adjunct HPC iron is, after all, its sales pitch – and Ward says that when you take all of the costs into account, the HPC Direct service will absolutely be competitive with AWS and Azure, like for like. The company did not release pricing, so we cannot see precisely how HPC Direct stacks up.
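Since no pricing was released, the like-for-like argument can only be sketched, not checked. The snippet below is a minimal illustration of the reasoning: total monthly cost is the compute charge plus a per-gigabyte charge for moving data, and a service with pricier nodes but free data movement wins once enough data is shuffled around. The `Offer` class, both offers, and every dollar figure are hypothetical placeholders, not prices from Verne Global, AWS, or Azure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Offer:
    """A hypothetical monthly HPC offer; every figure is a placeholder."""
    name: str
    compute_per_node_month: float  # USD per node per month (assumed)
    transfer_per_gb: float         # USD per GB of data moved (assumed)


def monthly_cost(offer: Offer, nodes: int, gb_moved: float) -> float:
    """Total monthly cost = compute charge + data movement charge."""
    return nodes * offer.compute_per_node_month + gb_moved * offer.transfer_per_gb


def breakeven_gb(a: Offer, b: Offer, nodes: int) -> Optional[float]:
    """GB moved per month at which offers a and b cost the same.

    Returns None when the per-GB fees are equal or the crossover
    would fall at a negative data volume.
    """
    fee_gap = a.transfer_per_gb - b.transfer_per_gb
    if fee_gap == 0:
        return None
    gb = nodes * (b.compute_per_node_month - a.compute_per_node_month) / fee_gap
    return gb if gb >= 0 else None


# Made-up numbers purely to exercise the functions.
metered_cloud = Offer("metered cloud", compute_per_node_month=1000.0, transfer_per_gb=0.02)
flat_bare_metal = Offer("flat bare metal", compute_per_node_month=1200.0, transfer_per_gb=0.0)

if __name__ == "__main__":
    nodes = 10
    for gb in (0, 50_000, 200_000):
        for offer in (metered_cloud, flat_bare_metal):
            print(f"{offer.name:16s} {gb:>8d} GB: ${monthly_cost(offer, nodes, gb):,.2f}")
    print("break-even GB:", breakeven_gb(metered_cloud, flat_bare_metal, nodes))
```

With real price lists plugged in, the break-even volume is the number to compare against how much data a workload actually moves each month.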

This kind of competition is why we think AWS, Microsoft, Google, and other public cloud players will start ramping up bare metal services. Newfangled HPC and machine learning frameworks and their applications don’t like or need virtualized instances. It is also a wonder that more hyperscale datacenters are not located in Iceland, given the perfect temperature and humidity it offers for outside air cooling and the abundance of renewable energy thanks to the volcanic activity near the surface of the country.

Secure? Not so sure… cf. the Intel ME flaws. Hope the admins have updated all the servers 🙂

Intel has identified security vulnerabilities that could potentially impact certain PCs, servers, and IoT platforms.

Systems using Intel ME Firmware versions 11.0.0 through 11.7.0, SPS Firmware version 4.0, and TXE version 3.0 are impacted. You may find these firmware versions on certain processors from the:

6th, 7th, and 8th generation Intel® Core™ Processor Family

Intel® Xeon® Processor E3-1200 v5 and v6 Product Family

Intel® Xeon® Processor Scalable Family

Intel® Xeon® Processor W Family

Intel Atom® C3000 Processor Family

Apollo Lake Intel Atom® Processor E3900 series

Apollo Lake Intel® Pentium® Processors

Intel® Celeron® G, N, and J series Processors

https://www.intel.com/content/www/us/en/support/articles/000025619/software.html
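For anyone auditing a fleet against the ranges quoted above, a check could look roughly like the sketch below. It is a minimal illustration, not Intel's detection tool: the function names are hypothetical, the ranges are copied from the text above as written, and comparing only the first three numeric fields of an ME version string is an assumption. How the firmware version is read from a given server is out of scope here.

```python
from typing import Tuple

# Ranges as quoted in the advisory excerpt above.
ME_AFFECTED_RANGE = ((11, 0, 0), (11, 7, 0))  # inclusive on both ends
SPS_AFFECTED_PREFIX = (4, 0)
TXE_AFFECTED_PREFIX = (3, 0)


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted version string such as '11.6.27.3264' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def is_affected(component: str, version: str) -> bool:
    """Return True if the firmware version falls in the quoted affected set.

    component is 'ME', 'SPS', or 'TXE' (case-insensitive).
    Assumption: ME versions are compared on their first three fields,
    padded with zeros when fewer are given.
    """
    v = parse_version(version)
    kind = component.upper()
    if kind == "ME":
        low, high = ME_AFFECTED_RANGE
        first_three = (v + (0, 0, 0))[:3]
        return low <= first_three <= high
    if kind == "SPS":
        return v[:2] == SPS_AFFECTED_PREFIX
    if kind == "TXE":
        return v[:2] == TXE_AFFECTED_PREFIX
    raise ValueError(f"unknown firmware component: {component!r}")


if __name__ == "__main__":
    # Example version strings, made up for illustration.
    for comp, ver in [("ME", "11.6.27.3264"), ("ME", "11.8.50.3425"), ("SPS", "4.0.4.1")]:
        print(comp, ver, "affected" if is_affected(comp, ver) else "not in quoted range")
```

A result of "not in quoted range" only means the version is outside the list quoted here; the advisory at the link above remains the authority on what is patched.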