From DRAM to NUMA to memory that is non-volatile, stacked, remote, or even phase-change, the coming years will bring big changes for code developers on the world’s largest parallel supercomputers.

While these memory advancements can translate into major performance leaps, the code complexity these devices create poses big challenges for performance portability in legacy and newer codes alike.

While the programming side of the emerging memory story may not be as widely appealing as the hardware, the work that people like Ron Brightwell, R&D manager at Sandia National Laboratories and head of numerous exascale programming efforts, do to expose as many of those new capabilities to developers as possible is a critical first step toward making full use of new memory devices. And while it is important to give expert HPC developers an inside, abstracted view of the memory hierarchy, it is just as critical to ensure that less seasoned HPC coders have enough of a high-level view to see the data movement prices they will generally pay, while still allowing deeper insight for the pros.

Brightwell explains that as emerging memory systems and architectures are introduced into conventional memory hierarchies, the additional memory complexity, along with existing programming complexity and architectural heterogeneity, makes using HPC systems extremely challenging for developers.

When it comes to emerging memory and system architectures, the biggest concerns application developers in the labs have are portability and sustainability, says Brightwell. “They want to be able to have a programming model that allows them to write code once and have it perform well whatever happens with future architectures or memory systems.”

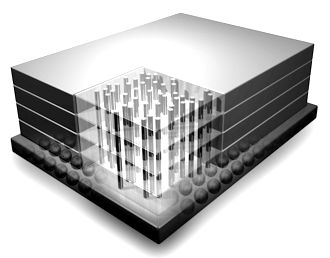

Brightwell’s co-author on a recent paper based on an SC17 conference workshop, Yonghong Yan from the University of South Carolina, says that many of the people he has spoken with are interested in the 3D, non-volatile, and phase change memory on the horizon but want to keep using the existing software and toolchains they are accustomed to rather than write new software. Yan says he is a proponent of OpenMP for now because of its memory management API for dealing with high bandwidth memory, and he advocates building on top of that and other existing models with input from the wider HPC developer community.

“The memory wall challenge — the growing disparity between CPU speed and memory speed — has been one of the most critical and long-standing challenges in computing. For high performance computing, programming to achieve efficient execution of parallel applications often requires more tuning and optimization efforts to improve data and memory access than for managing parallelism. The situation is further complicated by the recent expansion of the memory hierarchy, which is becoming deeper and more diversified with the adoption of new memory technologies and architectures such as 3D-stacked memory, non-volatile random-access memory (NVRAM), and hybrid software and hardware caches.”

“Performance optimization has thus shifted from computing to data access, especially for data-intensive applications. Significant amount of efforts of a user is often spent on optimizing local and shared data access regarding the memory hierarchy rather than for decomposing and mapping task parallelism onto hardware. This increase of memory optimization complexity also demands significant system support, from tools to compiler technologies, and from modeling to new programming paradigms.” Explicitly or implicitly, to address the memory wall performance bottleneck, the development of programming interfaces, compiler toolchains, and applications is becoming memory-oriented or memory-centric.

“Memory-centric programming refers to the notion and techniques of exposing the hardware memory system and its hierarchy, which include NUMA regions, shared and private caches, scratch pad, 3-D stack memory, and non-volatile memory, to the programmer for extreme performance programming via portable abstraction and APIs for explicit memory allocation, data movement and consistency enforcement between memories.”
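To make that definition concrete, here is a minimal Python sketch of what explicit allocation and explicit data movement between memory kinds could look like to a programmer. It is a toy cost model, not any real API: the names `MemoryKind`, `Buffer`, `allocate`, and `move` are hypothetical, and the bandwidth figures are invented purely so that the price of a move is visible.

```python
from dataclasses import dataclass
from enum import Enum


class MemoryKind(Enum):
    """Hypothetical memory tiers; the names are illustrative only."""
    HIGH_BANDWIDTH = "high_bandwidth"  # e.g. 3D-stacked memory
    DRAM = "dram"
    NON_VOLATILE = "non_volatile"


# Invented bandwidths in GB/s. These are assumptions for the sketch,
# not measurements of any real device.
ASSUMED_BANDWIDTH_GBPS = {
    MemoryKind.HIGH_BANDWIDTH: 400.0,
    MemoryKind.DRAM: 100.0,
    MemoryKind.NON_VOLATILE: 10.0,
}


@dataclass
class Buffer:
    """A block of data tagged with the memory kind it currently lives in."""
    data: bytearray
    kind: MemoryKind


def allocate(nbytes: int, kind: MemoryKind) -> Buffer:
    """Explicit allocation: the caller names the tier it wants."""
    return Buffer(bytearray(nbytes), kind)


def move(buf: Buffer, target: MemoryKind) -> float:
    """Explicit data movement; returns the modeled transfer time in seconds.

    The transfer is assumed to be limited by the slower of the two tiers.
    """
    if buf.kind is target:
        return 0.0
    slower = min(ASSUMED_BANDWIDTH_GBPS[buf.kind],
                 ASSUMED_BANDWIDTH_GBPS[target])
    seconds = len(buf.data) / (slower * 1e9)
    buf.kind = target
    return seconds


if __name__ == "__main__":
    # Stage 1 MB from the slow tier into the fast tier before computing on it.
    field = allocate(1_000_000, MemoryKind.NON_VOLATILE)
    cost = move(field, MemoryKind.HIGH_BANDWIDTH)
    print(f"modeled move cost: {cost:.6f} s, now in {field.kind.value}")
```

The point of the sketch is the shape of the interface rather than the numbers: placement and movement are named operations with a visible cost, while the details of each device stay behind the abstraction.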

From the outside, this may seem like an easy enough problem to solve. After all, we already have data-centric programming models that let developers see the data flow, enabling more refined approaches to code that can be both portable and high performance. The same concept applies to MPI, which gives developers an abstracted view of the network. While techniques like exposing the cache, which is normally hidden, have been around for a long time, what Brightwell wants to see is a far more comprehensive effort that extends more broadly into OpenMP, Chapel, CUDA, and OpenACC. Brightwell says that newer efforts like OpenMP memory management for supporting 3D stacked memory and the PMEM library are steps in the right direction, but truly portable performance across current and future architectures and memory hierarchies will require a more concentrated, collective effort across programming model communities (OpenMP, PGAS, etc.).

The challenge for HPC programming models has always been how to expose locality without exposing it explicitly. Going back to the MPI connection in terms of abstraction, that model gives you some understanding of locality from a programmer’s standpoint: with MPI, you know when you are going to do explicit message passing and you know the cost. The challenge around memory-centric programming models is similar: the focus is on how to expose locality from a programming standpoint without explicitly exposing it and resorting to assembly-level programming at the application level, Brightwell says.

“I see opportunities for existing programming approaches to add abstraction layers to be able to do that. We need to present a memory model to the programmer so they can at least understand how it could map to different memory hierarchies out there. Those abstractions are things we need to develop and iterate around,” Brightwell concludes.
