As the list of the most powerful supercomputers on the planet reveals, GPU-accelerated high performance computing is alive and well across many technical and scientific domains. However, despite its status as a classic HPC problem, weather forecasting has been much slower to jump on the GPU bandwagon.

There are solid reasons for this resistance, as NOAA and others have explained. But GPUs might be in the forecast for more weather prediction supercomputers as porting becomes easier and as it becomes possible to exploit the larger memory and compute capabilities of GPUs without constantly moving data back to host (motherboard) memory.

After two decades at The Weather Company, which is now part of IBM, Todd Hutchinson has watched standard numerical weather prediction codes grow, and with them the price-performance demands on the CPU-only supercomputers that power long- and short-range forecasts.

A decade ago, his teams took cues from a separate graphics group at The Weather Company and wondered how a dense code like the Weather Research and Forecasting (WRF) model might get a performance boost.

Now, as Director of Computational Meteorological Analysis and Prediction, Hutchinson is seeing those early ideas pay off: GPUs are speeding up weather prediction while cutting operational costs. But as anyone who has tried to cram big, grizzled code into a computational sports car like the Nvidia Volta GPU knows, getting to the point where the code is ported and running is a serious undertaking.

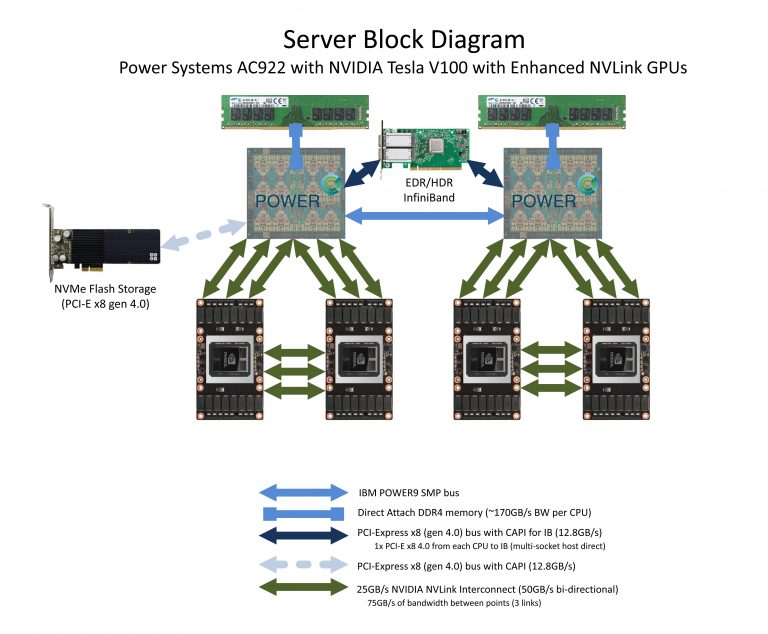

It took his team more than two and a half years to get their new weather prediction model humming on the same kind of system found in the top-ranked Summit supercomputer (IBM Power9 processors driving Volta GPU accelerators, with NVLink for fast processor communication), but the results are well worth the effort.

The GPU-ready code Hutchinson’s team has developed for the InfiniBand-connected, 84-server IBM Power9/Volta system (four GPUs per node) makes finer-resolution forecasts practical at lower cost than the previous CPU-only models at The Weather Company. It reaches three-kilometer resolution and will provide Weather.com and related users with 15-minute forecasts for the current day. That is impressive, since most weather forecasting operates at around 10 kilometers and does not provide hyper-local or fast refreshes.

Those resolutions and refreshes would be possible on CPU-only supercomputers—they just would not be nearly as fast and, when viewed over time, as cost-effective.

“Prior to the IBM acquisition we always looked only at CPU systems but were always hopeful with GPUs on the horizon,” he tells The Next Platform, crediting cooperation with research groups such as the National Center for Atmospheric Research (NCAR), among others, with pushing the long effort forward. After all, it is in the best interest of both a commercial weather entity like The Weather Company and research-focused centers to find the most computationally efficient and cost-effective ways of delivering rich, reliable forecasts.

For HPC shops that must get the price/performance ratio right while meeting 24/7 mission-critical deliverables, a boost on both fronts can make a huge difference. Weather forecasting certainly fits that bill, and Hutchinson approaches hardware choices with a keen eye on operational cost for increasingly complex workloads like this new forecasting product.

The GPU difference is a big one, he says. “A single GPU is about four times as fast as a single Broadwell or Power9 CPU for us in the context of a whole model. When you take that 4X you get great performance, but GPUs are not cheap so we have to account for that cost. When it comes down to it, we are getting a better value on the purchase price of the entire system compared to a CPU-only system. It’s not a huge one in that sense. Where the value really comes from is in the operating costs over time, since the HPC system is smaller than it would have otherwise been. We are looking at 84 servers, and if we were CPU only it would be in the 400-500 server range.”
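To put the quoted server counts in proportion, the short Python sketch below simply divides Hutchinson’s estimated CPU-only range by the 84-server GPU system and counts the GPUs at four per node. It uses only the numbers in the article and makes no attempt to model power, cooling, or floor space, which is where he says the real savings come from.

```python
GPU_SERVERS = 84   # IBM Power9/Volta servers in the production system, per the article
GPUS_PER_NODE = 4  # four Volta GPUs per node, per the article


def server_ratio(cpu_only_servers: int, gpu_servers: int = GPU_SERVERS) -> float:
    """How many CPU-only servers the same workload would need per GPU server."""
    return cpu_only_servers / gpu_servers


# Hutchinson's estimated CPU-only range for the same forecasting workload.
for cpu_only in (400, 500):
    print(f"{cpu_only} CPU-only servers = {server_ratio(cpu_only):.1f}x the GPU system")

total_gpus = GPU_SERVERS * GPUS_PER_NODE
print(f"GPUs in the production system: {total_gpus}")
```

In other words, his estimate implies roughly five to six CPU-only servers for every GPU server, and it is that smaller footprint, rather than the purchase price, that drives the operating-cost argument.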

With these results, one has to wonder why GPUs have not taken deeper root in weather forecasting. Hutchinson points to two relatively recent differentiators. The first is the speed of the GPUs themselves, but getting those speedups requires porting the entire model: hundreds of thousands of lines of code that were written for CPU-only machines.

The model Hutchinson’s team is using is a few years old rather than several decades old, so it does not carry the overhead of organic growth found in older models. WRF is well over half a million lines of code, mostly Fortran. The new model is based on the same ideas but with less code. Even so, getting it up and running on Power9/Volta GPU systems took two and a half years.

For codes like The Weather Company’s, the computational profile is fairly flat, which means the team had to work through many pieces of the model to get real acceleration. In other words, speeding up a single piece of the workflow was not enough; the whole code needed to be optimized. None of this could have been done “easily” without software and compiler technology, OpenACC directives in particular, he says. “It is capable of directing work on the GPUs and makes it much easier to do the port. One of the big differences now is that we used to have to do ports with a custom programming language like CUDA, which can often be more complex and less portable,” Hutchinson explains. While performance might not reach CUDA levels, the time saved porting the entire model is a game-changer.
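The “flat profile” problem is essentially Amdahl’s law. The Python sketch below uses a made-up profile of ten routines that each take 10% of runtime and an assumed 4x per-routine GPU speedup (borrowed loosely from Hutchinson’s whole-model figure); neither number describes the actual model. It compares porting one routine with porting all of them.

```python
def amdahl_speedup(fractions, speedups):
    """Overall speedup when each share of the original runtime gets its own factor.

    fractions: share of original runtime spent in each routine (must sum to 1).
    speedups:  acceleration applied to each routine (1.0 means left on the CPU).
    """
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    new_time = sum(f / s for f, s in zip(fractions, speedups))
    return 1.0 / new_time


# Hypothetical flat profile: ten routines, each 10% of total runtime.
flat = [0.1] * 10

# Port only one routine at an assumed 4x, versus porting every routine at 4x.
one_ported = amdahl_speedup(flat, [4.0] + [1.0] * 9)
all_ported = amdahl_speedup(flat, [4.0] * 10)
print(f"one routine ported:  {one_ported:.2f}x overall")
print(f"all routines ported: {all_ported:.2f}x overall")
```

With a flat profile, accelerating a single routine yields well under 10% overall, while porting everything recovers the full factor. The partial-port case is, if anything, optimistic, since it ignores the cost of moving data between host and GPU memory around the one ported routine.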

Not to diminish all the code footwork, but the IBM Power Systems AC922 (the same basic setup found in top supercomputers like Summit and Sierra), with its Volta GPUs, NVLink, and GPUDirect RDMA capabilities, opened new avenues around old bottlenecks and allowed the team to prove the place of GPUs in weather forecasting models.

GPUs are not known in HPC for their massive memory capacity, but the team worked within these constraints on both the 16GB and 32GB variants of their cards. “As you divide the problem, more pieces get a smaller memory footprint on each GPU board, which is a good thing since the more GPUs we have the less memory we need to utilize each of them,” he says.
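Hutchinson’s point about dividing the problem can be sketched with a simple per-GPU memory estimate. The Python below assumes a hypothetical 4096 x 4096-column domain with 60 vertical levels, 20 three-dimensional fields in double precision, and a two-cell halo (the copies of neighboring tiles’ edge data each GPU keeps for its stencils). None of these sizes come from The Weather Company’s model.

```python
import math


def tile_bytes(nx, ny, nz, nvars, px, py, halo=2, bytes_per_value=8):
    """Bytes one GPU holds for its tile of a px-by-py split, halo cells included."""
    tx = math.ceil(nx / px) + 2 * halo
    ty = math.ceil(ny / py) + 2 * halo
    return tx * ty * nz * nvars * bytes_per_value


def halo_share(nx, ny, px, py, halo=2):
    """Fraction of a tile's columns that are halo copies of neighbors' data."""
    ix, iy = math.ceil(nx / px), math.ceil(ny / py)
    return 1 - (ix * iy) / ((ix + 2 * halo) * (iy + 2 * halo))


# Hypothetical grid: 4096 x 4096 columns, 60 levels, 20 fields, split p x p ways.
for p in (4, 8, 16):
    gib = tile_bytes(4096, 4096, 60, 20, p, p) / 2**30
    share = halo_share(4096, 4096, p, p)
    print(f"{p * p:4d} GPUs: {gib:6.2f} GiB per GPU, halo {share:.1%}")
```

With these made-up sizes, a 16-GPU split needs roughly 9.4 GiB per card, within a 16GB Volta, and each quadrupling of GPU count cuts per-card memory by about 4x. The trade-off is that halo cells grow as a share of each tile, from under 1% at 16 GPUs to about 3% at 256, which means more neighbor communication per unit of work.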

“Memory bandwidth has traditionally been the bottleneck with this code but the way it’s been ported allows an entire stream of processing to run on the GPU without any communication back to the motherboard memory. It’s using the GPU memory for the most part with very little communication back,” Hutchinson says.
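A toy cost model makes the data-residency point concrete. In the Python sketch below, every number (step count, per-step compute time, state size, host-link bandwidth) is a hypothetical placeholder rather than a measurement from The Weather Company’s system. It compares copying the model state between host and GPU memory on every time step with copying it in once before the run and out once after. In OpenACC Fortran, the resident pattern is commonly expressed with a `!$acc data` region wrapped around the time loop, although the article does not say exactly how the team structured theirs.

```python
def run_time(steps, compute_s_per_step, state_bytes, host_link_bytes_per_s, resident):
    """Toy wall-time estimate for a GPU time-stepping loop.

    resident=True:  state copied to the GPU once before the loop and back once after.
    resident=False: state copied to and from host memory on every step.
    """
    one_way = state_bytes / host_link_bytes_per_s
    copies = 2 if resident else 2 * steps
    return steps * compute_s_per_step + copies * one_way


# Hypothetical inputs: 1000 steps, 50 ms of GPU compute per step,
# 8 GB of model state, 50 GB/s between host and GPU memory.
args = dict(steps=1000, compute_s_per_step=0.05,
            state_bytes=8e9, host_link_bytes_per_s=50e9)
print(f"state resident on GPU: {run_time(resident=True, **args):.1f} s")
print(f"copy every step:       {run_time(resident=False, **args):.1f} s")
```

The model ignores overlap of transfers with compute, but it shows why a port that keeps an entire stream of processing on the GPU avoids paying the host-memory round trip on every step.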

“The way we divide the processes is key; we take a region of the world, divide it into sectors that are small and have to communicate with each other. So there might be a grid point over the Boston area that has to talk to a grid point over New York City. Those two pieces of information might be on different GPUs, so in that case the flow between the two would go through NVLink if it’s on a local system, and if it’s on an adjacent system it would go through InfiniBand, so the real bottleneck is in this adjacency in the model.”
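The adjacency issue Hutchinson describes can be illustrated with a 2D tile decomposition. The Python sketch below splits a hypothetical domain into an 8 x 4 grid of tiles (32 GPUs, so eight four-GPU nodes) and counts how many east/north neighbor exchanges stay on one node (NVLink, following his description) versus cross nodes (InfiniBand) under two assumed rank placements. Neither placement is claimed to be the one The Weather Company uses, and periodic boundaries are ignored.

```python
from collections import Counter

GPUS_PER_NODE = 4  # four Volta GPUs per node, per the article


def row_major_node(tx, ty, px):
    """Hypothetical placement: ranks numbered row by row, four per node."""
    return (ty * px + tx) // GPUS_PER_NODE


def blocked_node(tx, ty, px):
    """Hypothetical placement: each node owns a 2 x 2 block of tiles (px must be even)."""
    return (ty // 2) * (px // 2) + (tx // 2)


def link_counts(px, py, node_of):
    """Count east/north neighbor exchanges that stay on a node vs. cross nodes."""
    counts = Counter()
    for ty in range(py):
        for tx in range(px):
            for ox, oy in ((tx + 1, ty), (tx, ty + 1)):
                if ox < px and oy < py:
                    same = node_of(tx, ty, px) == node_of(ox, oy, px)
                    counts["NVLink" if same else "InfiniBand"] += 1
    return counts


print("row-major: ", dict(link_counts(8, 4, row_major_node)))
print("2x2 blocks:", dict(link_counts(8, 4, blocked_node)))
```

In this toy layout, numbering ranks row by row sends 28 of the 52 neighbor exchanges over InfiniBand, while packing 2 x 2 blocks of tiles onto each node cuts that to 20, one illustration of why the way the processes are divided matters.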

The new IBM Global High-Resolution Atmospheric Forecasting System (GRAF) will be the first hourly-updating commercial weather system able to predict something as small as a thunderstorm globally. Compared to existing models, it will provide a nearly 200 percent improvement in forecasting resolution for much of the globe (improving grid spacing from 12 kilometers to 3 kilometers). It will be available later this year.

This approach allows The Weather Company to zoom in to three kilometers over more than 40% of the earth, concentrating on populated areas. Doing this efficiently means a more cost-effective way to deliver a service to Weather.com and associated outlets that will make a discernible difference in same-day forecast specificity, locality, and accuracy.
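Some back-of-the-envelope arithmetic shows why moving from 12 km to 3 km grid spacing, and refining only part of the globe, matter so much for compute. The Python sketch below approximates Earth’s surface as 510.1 million square kilometers, assumes uniform cells, and, purely as a hypothetical, treats the area outside the 40% high-resolution region as 12 km. It also applies the common rule of thumb that a CFL-limited explicit model needs proportionally more time steps as grid spacing shrinks, so cost grows roughly with the cube of the refinement ratio when vertical levels are held fixed.

```python
EARTH_SURFACE_KM2 = 510.1e6  # approximate surface area of Earth in km^2


def columns(spacing_km, fraction=1.0):
    """Approximate horizontal grid columns covering `fraction` of the globe."""
    return fraction * EARTH_SURFACE_KM2 / spacing_km ** 2


def mixed_columns(fine_km, coarse_km, fine_fraction):
    """Columns for a mesh that is fine over `fine_fraction` of the globe and
    coarse elsewhere (a hypothetical two-level simplification)."""
    return columns(fine_km, fine_fraction) + columns(coarse_km, 1.0 - fine_fraction)


def relative_cost(coarse_km, fine_km):
    """Rule of thumb: columns grow with the ratio squared, CFL-limited steps with the ratio."""
    return (coarse_km / fine_km) ** 3


uniform_12 = columns(12)
uniform_3 = columns(3)
mixed = mixed_columns(3, 12, 0.40)
print(f"uniform 12 km: {uniform_12 / 1e6:.1f} M columns")
print(f"uniform 3 km:  {uniform_3 / 1e6:.1f} M columns ({uniform_3 / uniform_12:.0f}x)")
print(f"3 km over 40%: {mixed / 1e6:.1f} M columns ({mixed / uniform_3:.0%} of uniform 3 km)")
print(f"rule-of-thumb cost, 12 km -> 3 km: ~{relative_cost(12, 3):.0f}x")
```

In a real variable-resolution mesh the smallest cells still set the time step, so refining only part of the globe reduces the column count but not the number of steps.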

While single-kilometer resolution in production weather forecasting is still some time away (although it has been demonstrated in some prototypes), it will take far denser compute resources to deliver efficiently. Although GPUs have typically not been the primary choice for production systems at ECMWF and other major weather forecasting centers (with a few exceptions), Hutchinson feels this could start to change.

But then again, those other sites are not fortunate enough to have a parent company that can provide heavy duty supercomputing might at the drop of a hat.
