NVM-Express has been on a steady roll for the past several years, a hot technology in a storage industry that is looking for ways to best address the insatiable demand for higher performance and throughput and lower latency driven by the need to process data faster and more securely. As enterprises adopt modern workloads that leverage such advanced technologies as artificial intelligence, machine learning and data analytics, and as the massive amounts of data being generated continue to grow, traditional SANs are struggling to keep up with demand.

All-flash arrays based on SSDs are increasingly becoming the norm, and the NVM-Express protocol is being leveraged to improve the performance of flash and other non-volatile memory, thanks to such features as up to 64,000 queues per non-volatile memory (NVM) controller and up to 64,000 commands per queue, a simple set of 13 commands, and multi-thread and multi-process NVM access. As readers of The Next Platform know, a broad range of tech vendors – including system OEMs like IBM, NetApp and Dell EMC, as well as component makers such as Intel, Marvell/Cavium, and Mellanox Technologies – are making strong pushes to grow the NVM-Express capabilities in their portfolios and establish themselves in this fast-growing space.

That now includes moving into the NVMe-over-Fabrics (NVMe-oF) space, where the idea is to create a mesh for moving storage commands between nodes using Ethernet or InfiniBand networks and RDMA technology for exchanging main memory data while bypassing the CPU, driving even more performance, throughput and efficiencies.

Many of the same vendors that have gravitated to NVM-Express are now either putting NVMe-oF products and support in place or have announced plans to do so in the near future. There also are several smaller vendors – think Excelero, E8 Storage, Pure Storage, Apeiron Data, and this week Vast Data – that are looking to gain traction against the larger and more established competitors.

Pavilion Data Systems can be included in that bunch. Founded in 2014, the company has raised $33 million in two rounds of funding based on its vision of leveraging NVMe-oF to enable enterprises and HPC organizations to more quickly process, move and analyze data to fuel better business decisions. The company has 65 employees split between its locations in San Jose, Calif., and Pune, India, and management team members have experience with such datacenter tech vendors as Pure Storage, Veritas, Cisco Systems, AMD, Oracle, Broadcom, and Violin Memory.

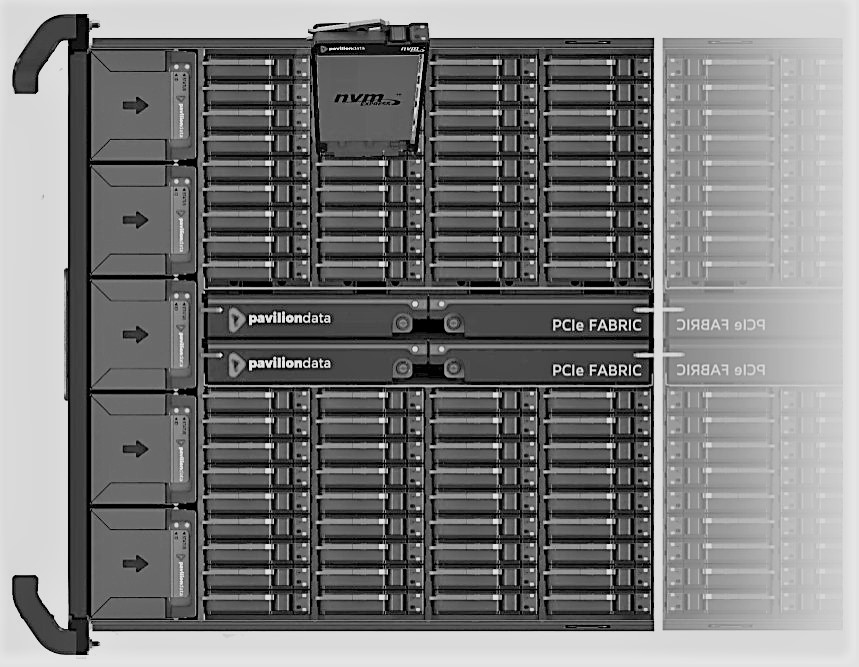

In May 2018, the company announced its first NVMe-oF storage platform, a 4U appliance that offers performance of 20 million IOPS and 20 microsecond latency. Density can range from 14 TB to 1 PB in four rack units, and the platform can scale from four to 40 100 Gb/sec Ethernet ports and from two to 20 active-active controllers. It leverages RAID 6, Intel processors and standard 2.5-inch NVM-Express drives. In November, Pavilion introduced the latest version of the array, which hits write speeds of 60 GB/sec for a single system, or 15 GB/sec per rack unit, with RAID 6 enabled.

“What we’re building is essentially an all-flash array that leverages NVM-Express end to end,” Jeff Sosa, vice president of products at Pavilion, tells The Next Platform. “The really unique thing about it really is the amount of performance density we provide in the platform. For example, in this 4U appliance we can serve 120 Gigabytes a second of bandwidth and nobody else I can think of is close to that.”

A single system can deploy up to 20 storage controllers in an array, and each blade includes four network ports, which brings the number of Ethernet ports in a single array to 40. The hardware uses low-power Intel chips as storage controllers, creating what is essentially a cluster of 20 computers running inside a single array, Sosa says. Each controller has its own memory and its own copy of the OS.

“All the storage functions are coordinated by two management controllers we have in the system,” he says. “Once you’re doing I/O, these separate storage controllers are serving all the I/O directly. When you’re doing management operations, the management controller is setting up the provisioning of volumes and doing other management tasks in the array. We connect all those ten line cards that each have two storage controllers to an NVM-Express drive array of 72 drives. Internally we have a redundant PCIe network that connects all the controllers to all these drives. This is what gives us some of the raw horsepower we need to actually deliver that kind of performance and to completely disaggregate not just the storage media but also all the storage controller software into our array. This is what allows us also to use just the regular in-box NVMe-oF drivers on all the clients because we’ve offloaded everything. We do all the data management in our platform, just like a regular old flash array, just with a lot more performance and a lot more density. And it’s pretty low-cost as well. It’s our enclosure we’ve designed, but all the silicon is off-the-shelf silicon, parts that you can buy from anybody. I mentioned Intel controller CPUs and we use standard PCIe switching components that you can buy. We’ve created our own PCI-Express network with these things internally and then we use off-the-shelf NVM-Express drives as well.”
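The chassis figures quoted so far are easy to cross-check with a little arithmetic. The sketch below is illustrative only: the constant and function names are ours, not Pavilion's, and it assumes that the "blades" and "line cards" mentioned in the article are the same component. The inputs are simply the numbers stated in the text.

```python
# Back-of-the-envelope check of the chassis figures quoted in the article.
# All names are illustrative; only the numbers come from the text.
# Assumption: "blade" and "line card" refer to the same component.

RACK_UNITS = 4             # 4U appliance
LINE_CARDS = 10            # "ten line cards"
CONTROLLERS_PER_CARD = 2   # "each have two storage controllers"
PORTS_PER_CARD = 4         # "each blade includes four network ports"


def chassis_totals(line_cards: int = LINE_CARDS) -> dict:
    """Return controller and Ethernet port counts for a number of line cards."""
    return {
        "controllers": line_cards * CONTROLLERS_PER_CARD,
        "ports": line_cards * PORTS_PER_CARD,
    }


def per_rack_unit(total_gb_per_sec: float, rack_units: int = RACK_UNITS) -> float:
    """Spread a whole-system bandwidth figure evenly across the rack units."""
    return total_gb_per_sec / rack_units


if __name__ == "__main__":
    print(chassis_totals())     # fully populated chassis
    print(per_rack_unit(60))    # quoted write bandwidth with RAID 6 enabled
    print(per_rack_unit(120))   # bandwidth figure quoted by Sosa
```

A fully populated chassis works out to 20 controllers and 40 ports, and 60 GB/sec across 4U is 15 GB/sec per rack unit, both matching the quoted figures. Calling `chassis_totals(2)` gives four controllers and eight ports, which lines up with the entry configuration Sosa describes later in the article.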

Increasingly, enterprises and HPC organizations are running large data analytics workloads and applications that leverage modern databases and analytics tools such as Splunk, Spark, Tableau, MongoDB, Couchbase, and Cassandra, Sosa says.

“Traditional architecture with these over the last ten years has been using direct-attached storage [DAS] and a bunch of servers,” he says. “The performance we’re delivering out of this appliance allows you to disaggregate all that storage into a centralized storage appliance. You could put one of these in per rack, replace all the direct-attached SSDs, get the same or better performance as you were getting from the local SSDs, both in terms of latency and bandwidth, and then get all those other benefits of shared storage. The key is being able to disaggregate the storage but still provide all the performance but not run software on all the hosts, like you would in a software-defined kind of approach.”

The Pavilion appliance supports a broad array of protocols, including RoCE (RDMA over Converged Ethernet) and NVMe over TCP. The appliance can also run NVMe over Fabric using InfiniBand, Sosa says.

Pavilion has seen the use of its appliance expand over the past year. The company has four target segments: scale-out cloud players, financial services (particularly those involved in high-frequency trading), media and entertainment, and HPC. Initially the company focused on rack-scale data environments, replacing SSDs in large-scale cluster databases. However, Pavilion has now begun to see activity in private clouds and HPC. For example, the Texas Advanced Computing Center in Austin is using Pavilion’s appliance with its Wrangler data analysis system, which is based on Dell EMC products, including its rack-scale flash technology. It’s a 10 PB storage system that comprises 120 Intel-based servers for data access and embedded analytics workloads.

“They were looking to have broader access to the central storage than what they were getting with the prior solution,” Sosa says. “The fact we can connect into their InfiniBand network directly and run NVMe over fabric over InfiniBand, it allowed them in that cluster to just get access from any node at any time. It’s completely plugged into the whole network through the switches.”

Some companies initially worry that such performance means the platform is expensive. However, Pavilion allows customers to buy a raw platform and purchase their own SSDs for it, which can also provide greater flexibility by allowing for different media tiers in the same platform.

“We use RAID in groups of 18 drives, but you could have a sort of a read-only tier of flash and then you can also have another higher performance write-intensive tier of flash,” he says. “It’s completely up to the customer. As long as they’re buying SSDs that we’ve qualified – and we’ve qualified pretty much all the major vendors at this point – that gives them a lot of cost advantages, so the cost model ends up being much more similar to what they were doing before anyway, where they’re buying SSDs direct in usually pretty large volumes, so they have pretty aggressive pricing. Then what we do is we basically sell the system in modular chunks. With these line cards, you can start with just two of them. You can start with just four storage controllers and eight ports and leave all the rest of the slots blank. A base configuration like that would cost you around $75,000.”
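To put the 18-drive RAID groups in context, here is a rough capacity estimate for the 72-drive array mentioned earlier. This is a sketch under stated assumptions, not Pavilion's actual layout: it assumes each 18-drive group is a single RAID 6 set that gives up two drives' worth of capacity to parity, with no hot spares or other overhead. The function names and the 4 TB drive size in the example are hypothetical.

```python
# Rough RAID 6 capacity estimate for the drive array described in the article.
# Assumption (not confirmed by the source): every 18-drive group is a single
# RAID 6 set costing two drives' worth of parity, with no hot spares.

TOTAL_DRIVES = 72      # "NVM-Express drive array of 72 drives"
GROUP_SIZE = 18        # "RAID in groups of 18 drives"
PARITY_PER_GROUP = 2   # standard RAID 6 dual parity


def raid_groups(total_drives: int = TOTAL_DRIVES, group_size: int = GROUP_SIZE) -> int:
    """Number of whole RAID groups the drive count divides into."""
    if total_drives % group_size:
        raise ValueError("drive count must be a multiple of the group size")
    return total_drives // group_size


def usable_tb(drive_tb: float,
              total_drives: int = TOTAL_DRIVES,
              group_size: int = GROUP_SIZE) -> float:
    """Capacity left for data once each group's parity is subtracted."""
    data_drives = raid_groups(total_drives, group_size) * (group_size - PARITY_PER_GROUP)
    return data_drives * drive_tb


if __name__ == "__main__":
    print(raid_groups())     # groups in a full 72-drive array
    print(usable_tb(4.0))    # hypothetical 4 TB drives
```

Under these assumptions a full array splits into four groups, and 64 of the 72 drives (about 89 percent) hold data. Mixed media tiers, as Sosa describes, would simply mean running the estimate per group with a different drive size.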