Scalable analytics in the form of Hadoop and Spark seems to be moving toward the end of the Technology Hype Cycle. A reasonable estimate would put the technology on the “slope of enlightenment,” where organizations are steadfastly using Hadoop and Spark to solve real problems or provide new capabilities.

At this stage of the adoption cycle, the hype has been replaced with actual use cases. Often, the successful use cases are not what fueled the initial hype. In the case of Hadoop and Spark, the focus on huge clusters and datasets has shifted to a more pragmatic approach. That is, not every organization needs petabytes of storage and ten thousand cores to find value in its data with Hadoop and Spark.

Indeed, the recent merger of the two “big” Hadoop companies (Cloudera and Hortonworks) indicates the market is maturing and adjusting to the tail of the hype cycle. To be clear, Hadoop and Spark are still viable technologies in the “age of data.”

Start Where the Data Lives

The cloud has become a popular alternative to on-premises data processing. The decision to use the cloud, however, should not be based solely on costing models. There are data movement costs, security issues, and ownership issues (you own the data but not the hard drive). In some situations, these issues don’t matter and in others they are a hard wall between on-premises and the cloud.

The obvious strategy is to analyze the data where it is created. That implies Web-based companies using the cloud should keep and analyze their data in the cloud. Conversely, if data are created on-premises, the analysis should be done locally. A third scenario is developing where data are collected at the “edge” of the datacenter or cloud. Edge data are generated locally, but not necessarily in an on-premises or cloud datacenter. One example is Industrial Internet of Things (IIoT) data, where factory floor information is collected and used to increase productivity. In these scenarios, performing data analytics “in place” at the edge makes the most sense. A recent article at The Next Platform, entitled The Evolving Infrastructure At The Edge, discusses this growing ecosystem.

The Thing About Hadoop and Spark

Before any discussion of Hadoop and Spark at the edge can begin, there is an important point to be made about scalable analytics tools. Market hype is often couched as a “Hadoop versus Spark” technology battle, and the overused “killer technology” argument is frequently part of that discussion. In reality, things that work tend to stick around for any number of reasons. Consider that an estimated 220 billion lines of COBOL are in production today, and COBOL is hardly the language du jour.

A functioning scalable analytics platform is more than a single application and usually provides an array of tools for both analytics and data management. In addition, many real data science applications consist of multiple stages that may require a different tool at each stage. Experienced Data Scientists and Engineers are well aware of the dangers of a single-solution mentality and are more concerned with results than with fashion.

It is Not Necessarily about Large Data

Data happens and information takes work. Estimates are that by 2020, 1.7 megabytes of new data will be created every second for every person in the world. That is a lot of raw data.
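To put the estimate in perspective, the short Python sketch below scales the 1.7 MB/s figure to a single person's daily and yearly output. It assumes decimal units (1 GB = 1,000 MB, 1 TB = 1,000,000 MB) and a 365-day year; it is back-of-the-envelope arithmetic, not a forecast.

```python
# Scale the quoted estimate (1.7 MB of new data per person per second).
# Decimal units are assumed: 1 GB = 1,000 MB and 1 TB = 1,000,000 MB.
MB_PER_PERSON_PER_SECOND = 1.7
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds

def mb_per_person(days: float) -> float:
    """Megabytes of new data one person generates over `days` days."""
    return MB_PER_PERSON_PER_SECOND * SECONDS_PER_DAY * days

per_day_gb = mb_per_person(1) / 1_000            # roughly 146.9 GB per day
per_year_tb = mb_per_person(365) / 1_000_000     # roughly 53.6 TB per year (365 days)
print(f"{per_day_gb:.1f} GB per person per day")
print(f"{per_year_tb:.1f} TB per person per year")
```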

Two questions come to mind: what are we going to do with it, and where are we going to keep it? Big Data is often described by the three Vs (Volume, Velocity, and Variability), and not all three need apply. What is missing is the letter “U,” which stands for Usability. A Data Scientist will first ask: how much of my data is usable? Data usability can take several forms, including quality (is it noisy, incomplete, or accurate?) and pertinence (does it contain extraneous information that will not make a difference to my analysis?). There is also the issue of timeliness. Is there a “use by” date for the analysis, or might the data be needed in the future for some as yet unknown reason? The usability component is hugely important and often determines the size of any scalable analytics solution. Usable data is not the same as raw data.
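As an illustration of the three usability questions, the Python sketch below filters a handful of raw records on quality, pertinence, and timeliness. The record fields (`sensor_id`, `ts`, `temp_c`, `debug`), the valid temperature range, and the 30-day “use by” window are all hypothetical choices made for the example.

```python
from datetime import datetime, timedelta

# Hypothetical raw records; field names and thresholds are illustrative assumptions.
RAW = [
    {"sensor_id": "s1", "ts": datetime(2019, 5, 1, 12), "temp_c": 21.5, "debug": "a"},
    {"sensor_id": "s2", "ts": datetime(2019, 5, 1, 12), "temp_c": None, "debug": "b"},   # incomplete
    {"sensor_id": "s3", "ts": datetime(2019, 5, 1, 12), "temp_c": 900.0, "debug": "c"},  # implausible
    {"sensor_id": "s1", "ts": datetime(2019, 1, 1, 12), "temp_c": 20.0, "debug": "d"},   # stale
]

PERTINENT_FIELDS = ("sensor_id", "ts", "temp_c")  # pertinence: fields the analysis needs
VALID_RANGE = (-40.0, 125.0)                       # quality: plausible sensor range (assumed)
USE_BY = timedelta(days=30)                        # timeliness: "use by" window (assumed)

def usable(records, now):
    """Yield the pertinent fields of records that pass quality and timeliness checks."""
    lo, hi = VALID_RANGE
    for r in records:
        value = r.get("temp_c")
        if value is None or not (lo <= value <= hi):
            continue  # quality: incomplete or implausible reading
        if now - r["ts"] > USE_BY:
            continue  # timeliness: past its "use by" date
        yield {k: r[k] for k in PERTINENT_FIELDS}  # pertinence: drop extraneous fields

clean = list(usable(RAW, now=datetime(2019, 5, 2)))
print(f"{len(clean)} of {len(RAW)} raw records are usable")
```

Only one of the four raw records survives, which is the point of the “U”: the usable subset, not the raw total, is what sizes the analytics problem.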

Next comes the actual storage. As mentioned, data are being collected at unprecedented rates. Many of the large Internet companies have been collecting data for a while, and they can make the case for massive, scalable data repositories. As more organizations begin to accrue more data, one thing has become quite clear: as explained in a recent article on The Next Platform, there is not enough capacity to keep all the expected new data in the cloud. This in-rush of data means that some data will have to be analyzed “in place” at the edge. This requirement is actually good news for the bloated cloud or datacenter, because data can be pre-processed at the edge into smaller, feature-based data sets that are more easily moved.
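A minimal sketch of that edge pre-processing in Python, assuming a hypothetical sensor that produces `(timestamp_s, value)` pairs: high-rate readings are collapsed into per-window summary features (count, mean, min, max), and only those rows would be moved upstream. The 60-second window and the choice of features are illustrative assumptions, not a prescribed method.

```python
import statistics
from collections import defaultdict

def window_features(readings, window_s=60):
    """Collapse (timestamp_s, value) readings into per-window summary features.

    Each output row is (window_start_s, count, mean, min, max); in this sketch
    only these rows, not the raw readings, would be shipped upstream.
    """
    buckets = defaultdict(list)
    for ts, value in readings:
        buckets[int(ts // window_s) * window_s].append(value)
    return [
        (start, len(vals), statistics.fmean(vals), min(vals), max(vals))
        for start, vals in sorted(buckets.items())
    ]

# Hypothetical sensor sampled at 10 Hz for 10 minutes: 6,000 raw readings.
raw = [(i / 10, 20.0 + (i % 50) / 10) for i in range(6000)]
features = window_features(raw, window_s=60)
print(f"{len(raw)} raw readings reduced to {len(features)} feature rows")
```

Here 6,000 raw readings become 10 feature rows; in practice the window length and features would be chosen by the analysis the upstream system needs to run.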

As with many market buzzwords, the term Big Data can have many different meanings. It is frequently associated with massive Hadoop clusters, but it often has more to do with the limitations of conventional databases and data warehouse solutions. It turns out not everyone needs petabytes of storage and ten thousand cores to perform data analytics. Indeed, depending on your data set size, a spreadsheet may work just fine. To be fair, companies with a massive web presence need every bit of scalable processing they can find, and “heroic” Hadoop/Spark clusters make sense for them. In reality, the number of Big Data stalwarts is not that large, and a majority of companies adopting Hadoop/Spark are doing so for reasons beyond the volume of data.

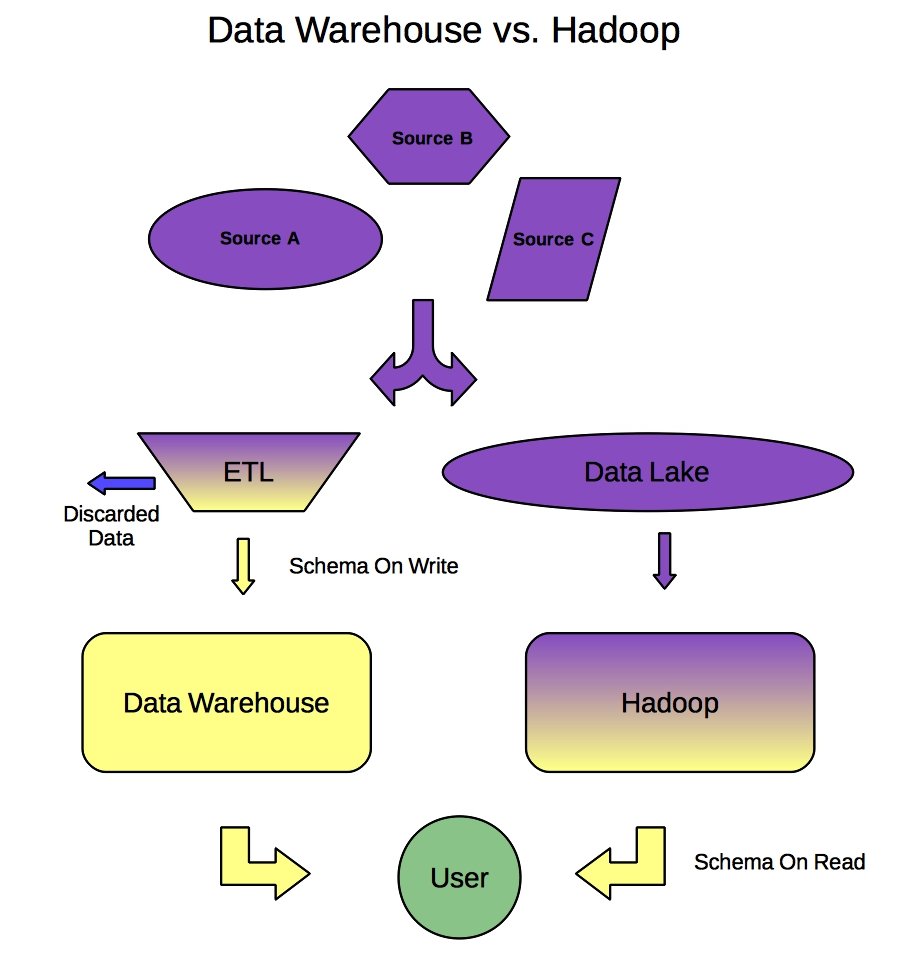

If one looks closely at how Hadoop and Spark are used, the term “Data Lake” usually enters the conversation. A modern data lake is a key part of understanding scalable analytics. A data lake is described with the aid of Figure One. The figure starts with three sources of data (A, B, C) and takes two paths to usability.

The left side of the figure is the traditional data warehouse path, where data are “cleaned” and placed into a highly efficient database. In the cleaning step, data are Extracted from the source, Transformed and/or cleaned, and Loaded into the database (ETL). During the ETL step, data may be discarded because they are considered irrelevant or simply won’t fit into a relational model. This process is called Schema-On-Write because a predefined database schema must be created before the data are committed to the data warehouse.
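The Schema-On-Write path can be sketched in a few lines of Python, using the standard-library `sqlite3` module as a stand-in for the data warehouse. The table schema and the raw input lines are hypothetical; the point is that the schema exists before any data are loaded, and rows that do not fit it are dropped during ETL.

```python
import sqlite3

# The schema is fixed *before* any data are written (schema-on-write).
SCHEMA = "CREATE TABLE readings (sensor_id TEXT NOT NULL, temp_c REAL NOT NULL)"

# Hypothetical raw source lines; the last two do not fit the relational model.
RAW_LINES = [
    "s1,21.5",
    "s2,22.0",
    "s3,not-a-number",
    '{"sensor": "s4", "note": "free text"}',
]

def etl(lines, conn):
    """Extract, Transform, and Load; rows that do not fit the schema are discarded."""
    conn.execute(SCHEMA)
    loaded = discarded = 0
    for line in lines:
        parts = line.split(",")                            # Extract
        try:
            sensor_id, temp = parts[0], float(parts[1])    # Transform/clean
        except (IndexError, ValueError):
            discarded += 1                                 # does not fit the schema
            continue
        conn.execute("INSERT INTO readings VALUES (?, ?)", (sensor_id, temp))  # Load
        loaded += 1
    conn.commit()
    return loaded, discarded

conn = sqlite3.connect(":memory:")
print(etl(RAW_LINES, conn))
```

The call returns `(2, 2)`: two rows are loaded and two are discarded, and the discarded rows are no longer available to any later analysis.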

The right side is a contrasting Hadoop/Spark dataflow where all of the data are placed into a data lake, a huge data storage file system (usually the redundant Hadoop Distributed File System, or HDFS). The data in the lake are pristine and in their original format. Hadoop, Spark, and other tools define how the data are to be used at run-time. This approach is called Schema-On-Read and allows maximum flexibility in how data are used. The ETL step has now become a flexible part of the analytics application. In other words, applications are not limited to a predefined database schema, and the Data Scientist can determine how the raw data are to be used for a given analysis. In addition, there may be times when some or all of the data are “unstructured” and cannot fit into a database schema, yet can yield insights if used properly (e.g., graph data, voice, images, video, text feeds). Finally, a data lake also offers a real-time capability. If the data in-flow velocity is high (one of the three Vs) and a fast turnaround is needed, then bringing the ETL step into the application can provide real-time streaming capabilities.
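For contrast, here is a Schema-On-Read sketch in Python: the hypothetical “lake” below keeps every JSON record exactly as it arrived, and each reader function imposes its own schema only when the data are read. Plain lists and the standard `json` module stand in for HDFS and Spark; this illustrates the idea rather than any Hadoop or Spark API.

```python
import json
from typing import Iterable, Iterator

# The "lake" keeps every record exactly as it arrived (hypothetical examples).
LAKE = [
    '{"sensor": "s1", "temp_c": 21.5, "site": "plant-a"}',
    '{"sensor": "s2", "temp_c": 22.0}',
    '{"sensor": "s3", "comment": "fan noise high"}',
]

def read_temperatures(raw: Iterable[str]) -> Iterator[tuple]:
    """One schema applied at read time: (sensor, temp_c) for records that have both."""
    for line in raw:
        rec = json.loads(line)
        if "temp_c" in rec:
            yield rec["sensor"], float(rec["temp_c"])

def read_comments(raw: Iterable[str]) -> Iterator[tuple]:
    """A different schema over the same raw data: free-text comments."""
    for line in raw:
        rec = json.loads(line)
        if "comment" in rec:
            yield rec["sensor"], rec["comment"]

print(list(read_temperatures(LAKE)))
print(list(read_comments(LAKE)))
```

Because the readers are generators, the same functions could also be applied record by record to data as they arrive, which is the sense in which moving ETL into the application enables streaming. The `site` field is ignored by both readers but remains in the lake for any future analysis.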

The other advantage of a Data Lake is scalability as the data volume grows. Since the lake is a flexible repository and not defined by a database size, it can grow quite large. Allowing for future growth is important because just having data does not necessarily mean you can or will use it for business insight.

A Funny Thing Happened on the Way to Insight

As mentioned, the closer one gets to insight, the smaller the data set that is required. A large part of data science is devoted to cleaning and preparing data. This aspect is frequently the least expected part of Data Science and is often referred to as data munging or data quality control. There are two basic activities in this process:

- Identifying and remediating data quality problems

- Transforming the raw data into what is known as a feature matrix, a task commonly referred to as feature generation or feature engineering
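The two activities can be sketched in Python on a hypothetical machine log: the first function remediates quality problems (exact duplicates and missing values), and the second turns the cleaned events into a small feature matrix with one row per machine. Median imputation and the three features chosen are illustrative assumptions; real projects pick both based on the model being built.

```python
import statistics
from collections import defaultdict

# Hypothetical raw event log: (machine_id, temperature, vibration); None marks a missing value.
EVENTS = [
    ("m1", 70.0, 0.2), ("m1", 72.0, None), ("m1", 71.0, 0.3),
    ("m2", 90.0, 0.9), ("m2", 90.0, 0.9), ("m2", None, 1.1),
]

def remediate(events):
    """Quality step: drop exact duplicates and impute missing values with the column median."""
    unique = list(dict.fromkeys(events))  # drop duplicates, keep first-seen order
    temp_median = statistics.median(e[1] for e in unique if e[1] is not None)
    vib_median = statistics.median(e[2] for e in unique if e[2] is not None)
    return [
        (m, t if t is not None else temp_median, v if v is not None else vib_median)
        for m, t, v in unique
    ]

def feature_matrix(events):
    """Feature step: one row per machine -> [mean_temp, max_temp, mean_vibration]."""
    by_machine = defaultdict(list)
    for m, t, v in events:
        by_machine[m].append((t, v))
    rows = {}
    for m, vals in sorted(by_machine.items()):
        temps = [t for t, _ in vals]
        vibs = [v for _, v in vals]
        rows[m] = [statistics.fmean(temps), max(temps), statistics.fmean(vibs)]
    return rows

X = feature_matrix(remediate(EVENTS))
for machine, features in X.items():
    print(machine, features)
```

Six raw events reduce to two feature rows, a small-scale version of the shrinkage from raw data to feature matrix.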

In the era of Big Data, raw data size is typically measured in terabytes or petabytes, so processing such large datasets in memory (on a single laptop or server) becomes difficult, if not impossible. The size of the original data often requires a scalable method such as Hadoop or Spark. However, the feature matrix is often much smaller than the original data because the data munging process reduces the data set to only what is needed to run a model. It should also be noted that determining the correct or best feature matrix can be a time-consuming process, and the flexibility provided by a Hadoop/Spark data lake can be very helpful here. In general, the analysis moves from a large-volume, low/medium-computation data munging stage to a small/medium-volume, high-computation model development stage. Again, Hadoop and Spark applications are not always about data size.

No Datacenter Needed

Running analytics at the edge usually means living outside of the datacenter. This can include places like a laboratory, office, factory floor, classroom, or even home. As mentioned above, there are situations where large data volume is not the sole driving force for effective analysis.

Quite often, edge system clusters cannot be composed of datacenter nodes. A datacenter provides access to large amounts of (clean) power, is designed to remove exhaust heat, and has a very high tolerance for fan noise. Merely stacking datacenter nodes in a non-datacenter environment may work from a performance standpoint, but may fail other basic requirements such as:

- A low heat signature – datacenter nodes make very good space heaters and may not be suitable for local environments.

- A low noise signature – it should be possible to have conversations and phone calls next to an edge system.

- Acceptable power draw – an edge system must work with the available power. This means taking full advantage of, but not swamping, standard “office/lab” power distribution.

While these requirements may not allow frontline datacenter performance, they provide extreme flexibility in terms of resource placement. It is possible to design and build high performance systems within the heat/noise/power envelope described above.

When Hadoop clusters started to gain traction in the market (around 2012), a typical worker “node” consisted of then-current, state-of-the-art commodity hardware. These suggested worker node requirements are shown in the table below. An economical edge worker node is outlined in the right column.

| Specification | Circa 2012 in the Datacenter | 2019 at the Edge |
|---|---|---|
| CPU | 2×6 cores/threads @ 2.9 GHz, 15 MB cache | 1×6 cores/threads @ 3.7 GHz, 12 MB cache |
| Memory | 64 GB DDR3-1600 ECC | 64 GB DDR4-2666 ECC |
| Disk controller | SAS 6 Gb/s | SATA 6 Gb/s |
| Disks | 12×3 TB SATA II 7200 RPM | 2×4 TB SSD |
| Network controller | 2×1 GbE | 1×10 GbE, 1×1 GbE |

Using the 2019 edge nodes described in the table, it is possible to place an eight-node Hadoop/Spark cluster almost anywhere (that is, 48 cores/threads, 512 GB of main memory, 64 TB of raw SSD HDFS storage, and 10 Gb/s Ethernet connectivity). These systems can be placed in tower or rack-mount cases. The power design fits most US industry, academic, or government standards. For instance, it is possible to run up to twelve edge nodes on a standard 15A electrical circuit (1200 watts max). In addition, a full sixteen-node edge cluster could provide up to 96 cores/threads, 1 TB of main memory, and 128 TB of raw HDFS SSD storage, and would require a 20A circuit (1600 watts max).
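The aggregate figures above follow directly from the per-node column of the table, and both circuit examples work out to an average budget of about 100 W per node. The Python sketch below reproduces that arithmetic. It also estimates usable HDFS capacity, assuming the HDFS default replication factor of three; that replication assumption is not from the article, and the wattage figures are the article's stated budgets rather than measured power draw.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EdgeNode:
    """Per-node figures from the 2019 column of the table."""
    cores: int = 6
    memory_gb: int = 64
    ssd_count: int = 2
    ssd_tb: int = 4

def cluster_totals(nodes: int, node: EdgeNode = EdgeNode(), replication: int = 3):
    """Aggregate resources for a cluster of identical edge nodes.

    `replication` is an assumption: 3 is the HDFS default (dfs.replication),
    so usable HDFS capacity is roughly raw capacity divided by 3.
    """
    raw_tb = nodes * node.ssd_count * node.ssd_tb
    return {
        "cores": nodes * node.cores,
        "memory_gb": nodes * node.memory_gb,
        "raw_hdfs_tb": raw_tb,
        "usable_hdfs_tb": raw_tb / replication,
    }

def watts_per_node(budget_watts: float, nodes: int) -> float:
    """Average power each node may draw to stay within a circuit's stated budget."""
    return budget_watts / nodes

print(cluster_totals(8))    # 48 cores, 512 GB memory, 64 TB raw HDFS
print(cluster_totals(16))   # 96 cores, 1024 GB memory, 128 TB raw HDFS
print(watts_per_node(1200, 12), watts_per_node(1600, 16))  # 100.0 W per node in both cases
```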

As the specification shows, it is possible to stand up quiet, cool, and power-efficient edge clusters with more computing power, and faster storage and networking, than the typical 2012 Hadoop worker node. These systems represent a new class of high performance “No Datacenter Needed” analytics clusters.

The Action Is At The Edge

The advent of edge analytics requires a closer look at data acquisition, processing, and the computing environment. By definition, computing at the edge requires thinking and working outside the datacenter box. The following points may help guide this process.

- Big Data is defined by Volume, Velocity, Variability and Usability

- Hadoop and Spark are not necessarily about data size, but more about the flexibility of a data lake

- Analyzing data in-place at the edge reduces the data-pressure in the datacenter and cloud

- The balance between storage and computation often changes the closer one gets to insights

- Edge hardware must be based on “No Datacenter Needed” designs where the local environment dictates the performance envelope.

The design of edge systems has been a continuing part of the Limulus Project. The Limulus Project tests concepts and designs, and benchmarks commodity-based open-source HPC and Hadoop/Spark clusters for personal and edge computing. Commercial versions of Limulus edge systems are available from the project sponsor, Basement Supercomputing.
