Optical computing, a technology that traces its roots back to the 1930s, is enjoying something of a revival these days. Most of that is a consequence of the rise of machine learning, an application set that is particularly suited to the analog nature of the technology. And because optical computing uses photons instead of electrons, it promises both higher performance and lower power consumption than its digital counterpart.

As we have reported previously, there are a number of startups in this space, including Optalysys, Lightmatter, Fathom Computing, Lightelligence and LightOn. That last one is a little-known French startup that has developed something called an Optical Processing Unit (OPU). The 13-person company was founded in 2016 and has collected at least $3.3 million in venture capital.

While the LightOn technology has yet to be formally released, researchers and other potential customers are already banging on prototype hardware thanks to an arrangement with OVH, a European cloud provider. The OPU prototype, known as “Zeus,” can be accessed on an OVH server equipped with a standard CPU and GPU. The hardware can be programmed with Python and is already supported with Scikit-Learn. Support for other popular machine learning frameworks such as TensorFlow and Keras is supposedly in the works.

According to LightOn co-founder and chief technology officer Laurent Daudet, the affinity of optical computing for machine learning stems from the fact that optics allows you to do Fourier transforms essentially for free. And once you have Fourier transforms, you can do statistical correlations, and that means you can perform pattern matching, which is at the heart of machine learning.
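To make the Fourier-to-correlation step concrete, here is a minimal digital sketch in Python with NumPy. It is not LightOn's method or API; it only illustrates the correlation theorem that the argument relies on: multiplying one spectrum by the complex conjugate of another and transforming back yields the cross-correlation, whose peak marks where a pattern occurs. The function name, the 13-element Barker code used as the pattern, and the noise level are all assumptions chosen for illustration.

```python
import numpy as np


def circular_cross_correlation(signal, template):
    """Circular cross-correlation computed via the correlation theorem.

    Hypothetical helper for illustration: result[k] = sum_n signal[n + k] * template[n],
    with indices taken modulo len(signal).
    """
    n = len(signal)
    padded = np.zeros(n)
    padded[: len(template)] = template  # zero-pad the template to the signal length
    spectrum = np.fft.fft(signal) * np.conj(np.fft.fft(padded))
    return np.real(np.fft.ifft(spectrum))


# Pattern to search for: a length-13 Barker code (chosen because its
# autocorrelation has one sharp peak and very small sidelobes).
template = np.array([1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1], dtype=float)

# Hide the pattern in low-level noise at a known position (illustrative values).
rng = np.random.default_rng(0)
signal = 0.1 * rng.standard_normal(256)
true_offset = 100
signal[true_offset : true_offset + len(template)] += template

scores = circular_cross_correlation(signal, template)
best_offset = int(np.argmax(scores))
print(best_offset)  # 100
```

On a digital machine the two forward transforms and the inverse transform dominate the cost; the appeal of optics, per Daudet, is that the transform itself comes essentially for free.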

Daudet says there were rudimentary neural networks in the 1980s, but at that time Moore’s Law was going full steam and you could hardwire Fourier transform logic into your chips if you so desired. Also, optical computing couldn’t provide the higher precision needed for things like traditional HPC (and still can’t), so the technology wasn’t seen as something that would be generally applicable to the industry. Today, with Moore’s Law on the decline and with lower precision machine learning becoming the central paradigm of information technology, optical computing is enjoying a renaissance.

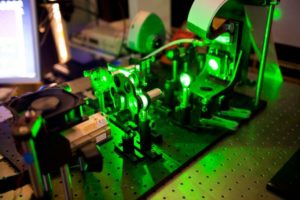

LightOn’s OPU is a relatively simple device. It consists of a laser light source, a light modulator in the form of a video projector chip, a layer of scattering material, and a camera. This allows the system to do the analog equivalent of a vector-matrix multiplication, which is the basis for most neural network processing. The OPU encapsulates the equivalent of a matrix of a million by a million elements (10^12 entries), which would suck up terabytes of memory on a digital system.
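As a rough illustration of the operation being replaced, the Python sketch below estimates the memory a dense million-by-million matrix would need and then runs a scaled-down vector-matrix product with NumPy. The 4-byte element size, the random matrix entries, the binary input vector, and the helper name are assumptions for illustration; the article does not describe the matrix values or how the OPU encodes its input.

```python
import numpy as np


def dense_matrix_bytes(rows, cols, bytes_per_element):
    """Memory needed to store a dense rows x cols matrix (hypothetical helper)."""
    return rows * cols * bytes_per_element


# Full-size case from the text, assuming 4-byte (float32) elements.
full_size = dense_matrix_bytes(10**6, 10**6, 4)
print(full_size / 1e12, "TB")  # 4.0 TB

# Scaled-down digital stand-in: a fixed matrix applied to an input vector.
rng = np.random.default_rng(0)
n_in, n_out = 1000, 2000
weights = rng.standard_normal((n_out, n_in)).astype(np.float32)  # assumed random entries
x = rng.integers(0, 2, size=n_in).astype(np.float32)  # assumed binary input pattern
y = weights @ x  # one output value per matrix row
print(y.shape)  # (2000,)
```

Even at one byte per element the full matrix would occupy a terabyte, which is why the digital equivalent is impractical to hold in memory on a single machine.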

The OPU operates in the kilohertz frequency range, which is orders of magnitude slower than a conventional processor. But since the matrix multiplication is done in parallel fashion, the computation is extremely quick. Daudet says the device can do the equivalent of 10^15 operations per second (that’s petaops) with just a few watts. “It’s many, many orders of magnitude more efficient in terms of operations per watt than what you can do on a standard silicon computer,” says Daudet.
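The petaops figure is consistent with simple arithmetic, sketched below in Python. The assumptions are mine, not LightOn's: a frame rate of exactly 1 kHz (the article only says "kilohertz range") and one multiply-accumulate counted per matrix element per frame.

```python
def equivalent_ops_per_second(rows, cols, frames_per_second):
    """Operations per second if every matrix element contributes one
    multiply-accumulate per frame (an assumed way of counting)."""
    return rows * cols * frames_per_second


# Million-by-million matrix at an assumed 1,000 frames per second.
ops = equivalent_ops_per_second(10**6, 10**6, 1_000)
print(f"{ops:.0e}")  # 1e+15
```

In other words, the slow clock is offset by the 10^12 multiply-accumulates that happen simultaneously on each frame.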

Suitable applications include image classification, recommender systems, graph analysis, anomaly detection, video classification, and natural language processing. LightOn has performed a handful of demonstrations with the technology, including a movie recommender solution, image recognition for digits, and transfer learning that applies new data to a previously trained neural network.

One interesting use case is detecting the point at which patterns change in data streams exhibiting some sort of variance, such as video imagery, stock activity, or weather data. The advantage of the OPU for problems like this is that, unlike digital implementations, the calculation time doesn’t vary with the size of the data (as long as it fits in the million-by-million matrix). In general, the OPU really only shines with these larger data sets, since for smaller problems, GPUs can offer comparable performance.

LightOn hasn’t set a date yet on when the OPU will be generally available to the public and is still looking for more proof points from beta users.
