If you were expecting Nvidia to start talking about its future “Ampere” or “Einstein” GPUs for Tesla accelerated computing soon just because AMD is getting ready to roll out “Navi” GPUs next year and Intel is working on its Xe GPU cards for delivery next year, too, you are going to have to wait a bit longer. Nvidia still has money to squeeze out of the Tesla T4 and V100 accelerators based on the respective “Turing” and “Volta” architectures.

The anticipation is building for competition between Nvidia, AMD, and Intel for accelerated computing, and it won’t be long before Nvidia co-founder and chief executive officer Jensen Huang pulls out the GPU roadmap to show what Nvidia is working on in the future – we expect it around the time of the GPU Technology Conference next spring, when AMD and Intel will also be talking loudly about their GPU compute.

Everyone is impatient now, and that is because new technology drives new markets at an accelerated pace. The rapid-fire cadence of new generations of Tesla GPU accelerators from Nvidia over the past decade got the high performance computing and artificial intelligence sectors of the IT industry used to seeing new compute engines every couple of years. In the early days of accelerated computing, Nvidia was very open with its ambitious GPU roadmaps, but as it has become more established in the datacenter, it has grown more tight-lipped about its future plans. This is the natural conservatism that comes with being the dominant player in its chosen fields of engagement and having current products that are still selling. And as is obvious from AI benchmarks run over the past two years, there is plenty of performance to be gained from improvements in the software stack running on the T4 and V100 GPUs. Much of that low-hanging fruit should be picked at about the same time Nvidia needs to actually start talking about Einstein GPUs, whatever they turn out to be beyond a shrink to 7 nanometer chip processes from Taiwan Semiconductor Manufacturing Corp.

Whatever the plan is, Huang didn’t say much about it on a call this week with Wall Street analysts to go over Nvidia’s financial results for the second quarter of fiscal 2020, which ended in July.

“Data center infrastructure really has to be planned properly, and the build-out takes time,” Huang explained. “And we expect Volta to be successful all the way through next year. Software still continues to be improved on it. In just one year, we improved our AI performance on Volta by almost 2X – about 80 percent. You have to imagine the amount of software that’s built on top of Volta — all the Tensor Cores, all the GPUs connected with NVLink, and then the large number of nodes that are connected to build supercomputers. The software of building these large-scale systems is really, really hard. And that’s one of the reasons why you hear people talk about chips, but they never show up because building the software is just an enormous undertaking. The number of software engineers we have in the company is in the thousands, and we have the benefit of having built on top of this architecture for over one and a half decades. And so, when we’re able to deploy into data centers as quickly as we do, I think we kind of lose sight of how hard it is to do that in the first place. The last time a new processor entered the datacenter was an X86, and you just don’t bring processors in the datacenters that frequently or that easily. And so, I think the way to think about Volta is that it’s surely in its prime, and it’s going to continue to do well all the way through next year.”

So true about bringing a new processor architecture into the datacenter. It might be a glasshouse, but you need a pretty big stone to smash into it. And that is, again, due to the natural conservatism of large enterprises, which cannot take the same kinds of risks as a move-fast-and-break-things hyperscaler that invents its own software stack.

The good news for Nvidia is that after a decade of seeding new markets for GPU compute in its various forms – traditional simulation and modeling in HPC, machine learning in AI, a smattering of database acceleration, and now an expanding data science area that complements these more established sectors – it has a baseline of business in the datacenter that keeps chugging along, with the usual ups and downs that are typical of spending among HPC centers, hyperscalers, and cloud builders.

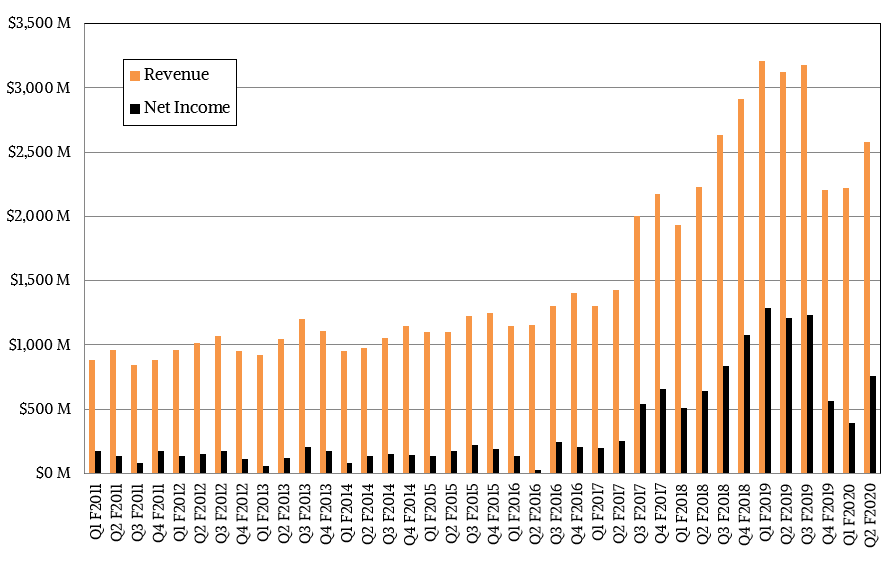

In the second quarter, Nvidia’s overall revenues were down 21.1 percent, to $2.58 billion, with net income cut in half to $552 million as it makes investments in its future GPUs ahead of their launch next year. Research and development spending rose by 17.5 percent to $704 million in the quarter, and that probably includes money allocated for Einstein GPUs, plus a kicker for the NVSwitch interconnect and its related NVLink 3.0 protocol, as well as work it is doing with TSMC to perfect 7 nanometer chip etching and the 5 nanometer processes that will follow it. That increase in R&D spending had an impact on net income, no question about it, but this is table stakes to be in the tech sector.

Revenue and net income in Q2 of fiscal 2020 were not affected by the pending $6.9 billion acquisition of Mellanox Technologies, which has just received approval from regulators in the United States but still needs approval from regulators in Europe and China before it can close.

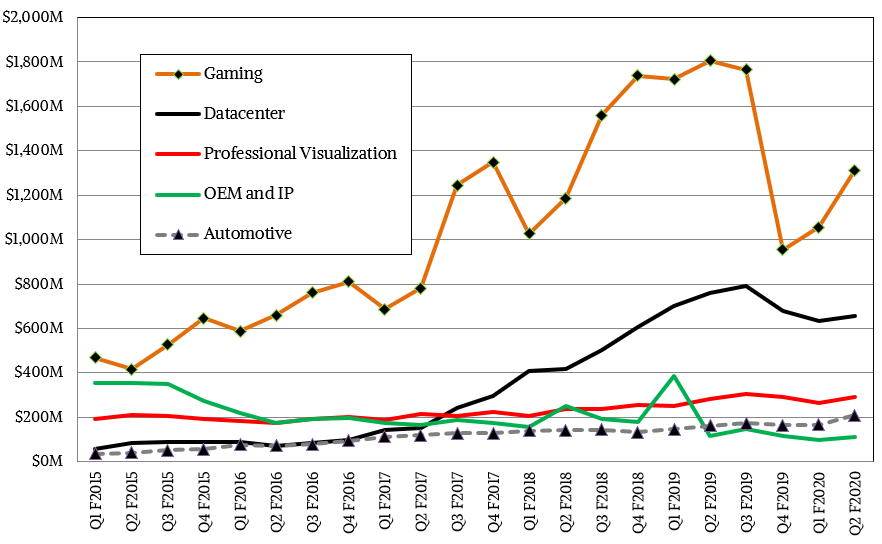

During this same time, Nvidia’s datacenter business did not contract by as much as the overall business did, which has been hurt by a glut of GPUs in the channel, mostly GeForce and Quadro devices that are aimed at gamers and professional graphics users. Datacenter sales in Q2 fell by 13.8 percent to $655 million, and that includes revenues not only from Tesla GPU accelerators and NVSwitch ASICs, but also from whole DGX-1 and DGX-2 systems that Nvidia sells directly to customers. It is not clear how much of that revenue comes from systems, but our guess is that the DGX systems drove about 20 percent of the $2.67 billion in revenues that Nvidia’s datacenter business raked in during the trailing twelve months. That is a substantial sum, and it makes Nvidia one of the largest suppliers of supercomputers in the world.
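To put numbers on that guess, here is a back-of-the-envelope sketch in Python that uses only the figures cited above. It backs out the year-ago quarter implied by a 13.8 percent decline to $655 million, then applies our rough 20 percent DGX share to the $2.67 billion trailing twelve-month total. The 20 percent share is our estimate, not a number Nvidia reports, and the variable names are purely illustrative.

```python
# Back-of-the-envelope check on the Q2 fiscal 2020 datacenter figures.
# All inputs come from the article; the DGX share is an estimate, not reported data.

q2_fy2020_datacenter = 655.0  # $ millions, Q2 fiscal 2020 datacenter revenue
yoy_decline = 0.138           # 13.8 percent year-on-year decline
ttm_datacenter = 2670.0       # $ millions, trailing twelve months
dgx_share_estimate = 0.20     # assumption: rough share of TTM revenue from DGX systems

# Undo the percentage decline to recover the implied year-ago quarter.
implied_q2_fy2019 = q2_fy2020_datacenter / (1 - yoy_decline)

# Apply the estimated DGX share to the trailing twelve-month total.
implied_dgx_revenue = ttm_datacenter * dgx_share_estimate

print(f"Implied Q2 FY2019 datacenter revenue: ${implied_q2_fy2019:,.0f} million")
print(f"Implied TTM DGX system revenue: ${implied_dgx_revenue:,.0f} million")
```

Under those assumptions, the year-ago quarter comes out to roughly $760 million, and DGX systems would account for something like $534 million over the trailing twelve months.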

Chew on that for a minute.

While Nvidia does not provide details, Huang reiterated that its inference compute business is growing, and this is largely due to the success of putting the Tensor Core units in the Turing architecture, which are a key component of its dynamic ray tracing for gaming and rendering, to work as inference engines in hyperscale, cloud, and on premises infrastructure. This inference business is now a “double digit” percentage of the datacenter business – call it somewhere between $300 million and $400 million in the trailing twelve months. This is also a significant business, and it exists because the Tesla T4 accelerators have the inference performance and bang for the buck to compete against CPUs and FPGAs, which have dominated machine learning inference to date.
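Because Nvidia only says “double digit,” the $300 million to $400 million range is our own reading. A quick sketch in Python shows how that range maps back to a percentage, assuming that “double digit” means a share of at least 10 percent and that the base is the $2.67 billion in trailing twelve-month datacenter revenue. The constant and function names are illustrative.

```python
# Translate the $300 million to $400 million inference estimate into a share of
# trailing twelve-month (TTM) datacenter revenue, to sanity-check "double digit".

TTM_DATACENTER = 2670.0           # $ millions, TTM datacenter revenue cited in the article
INFERENCE_RANGE = (300.0, 400.0)  # $ millions, our estimate, not an Nvidia figure


def share_of(part: float, whole: float) -> float:
    """Return part as a fraction of whole."""
    return part / whole


low_share = share_of(INFERENCE_RANGE[0], TTM_DATACENTER)
high_share = share_of(INFERENCE_RANGE[1], TTM_DATACENTER)

# Assumption: reading "double digit" as at least 10 percent sets a revenue floor.
floor_revenue = 0.10 * TTM_DATACENTER

print(f"Estimated inference share: {low_share:.1%} to {high_share:.1%}")
print(f"10 percent floor: ${floor_revenue:,.0f} million")
```

That works out to roughly 11 percent to 15 percent of datacenter sales, comfortably above the $267 million that a bare 10 percent share would imply.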

The FPGAs and CPUs are not giving up this business without a fight, by the way, and in many ways, this is where Nvidia will have to compete harder. It already owns machine learning training with heftier GPUs, and unless and until some architecture that can do both training and inference comes into the field and smashes through the glasshouse, Nvidia will probably maintain its enormous lead in machine learning training, particularly among shops that are doing both HPC and AI, and soon data science and analytics as well. The more general purpose solution will win at clouds and in enterprises, even if it costs a premium, because of its wider utility. This, after all, is what Intel has been doing with its Xeon chips in the datacenter until AMD fielded credible and competitive processors with the new “Rome” Epyc 7002 series of CPUs. Nvidia will face similar pressures as AMD and Intel field credible GPUs, but they will have to get their software acts together to compete against the powerful combination of Tesla accelerators and the CUDA parallel programming environment.

Nvidia’s datacenter business was up 3 percent sequentially, even with the softness in spending among hyperscalers and cloud builders that has hurt all system suppliers in the first half of 2019. The datacenter business actually had a relatively easy compare in Q2 fiscal 2020 because Nvidia had shipped tens of thousands of Volta GPUs for the “Summit” supercomputer at Oak Ridge National Laboratory and the “Sierra” supercomputer at Lawrence Livermore National Laboratory earlier in 2018. That bubble would have made for a very tough compare had it occurred in the prior year’s Q2. Now, as companies install baby versions of these IBM systems as well as DGX iron from Nvidia and clones of it from various OEM and ODM suppliers, Nvidia will have a steady stream of revenue from the datacenter. We don’t expect the kind of explosive growth we have seen in past years, but rather a more gradual rise that is typical of a more mature but still exciting collection of market sectors. There is plenty of room for Nvidia to double and triple and maybe even quadruple its datacenter business, but it is not going to happen overnight.

And it surely is not going to happen without an impressive set of Einstein Tesla GPU accelerators next year to keep AMD, Intel, and Xilinx at bay.
