The European Commission has anted up €3.9 million to create a set of tools and runtime frameworks that will be used to support the exascale supercomputers to be deployed across the continent in the coming decade. The project, known as the European joint Effort toward a Highly Productive Programming Environment for Heterogeneous Exascale Computing (EPEEC), kicked off in October 2018 and is scheduled to run through September 2021.

The end date puts it a couple of years ahead of Europe’s first exascale deployment in 2023, but in line with pre-exascale systems that are expected to start showing up at the beginning of the next decade.

As reflected in the project’s name, EPEEC’s goal is to produce a set of development tools and runtime software that can support both the scale and the heterogeneous nature of exascale supercomputers. Heterogeneity, in particular, has become a central theme of the effort, since the Europeans appear to have settled on the host-accelerator model for future supercomputers. That applies both to the pre-exascale systems, which will be based on CPU-GPU designs, and to the exascale systems, which are slated to incorporate an Arm processor and a RISC-V-based accelerator, along with other specialized hardware components. In this case, heterogeneity also extends to the memory subsystem, which is growing in complexity thanks to innovations like storage-class memory and high-bandwidth memory.

More broadly, EPEEC is supposed to address the full range of software issues deemed critical to HPC, namely execution efficiency, scalability, energy awareness, composability/interoperability, and programmer productivity. The last of these has been a pipe dream of the HPC community for decades, held back by application software that relies on older programming languages (C, C++, and Fortran) and by a set of competing and often conflicting accelerator frameworks (OpenMP, OpenACC, CUDA, OpenCL, and SYCL). That has made programming HPC applications more art than science.

“Using a supercomputer efficiently should not be limited to ninja programmers,” said EPEEC’s technical manager Antonio Peña, leader of accelerators and communications for HPC at the Barcelona Supercomputing Center. “It should be open to domain application developers with a general understanding of parallel programming.”

The idea is that EPEEC will automatically generate compiler directives for applications, giving developers a higher level of abstraction than is normally the case. Developers will also be able to use their preferred programming language (as long as that language is C, C++, or Fortran) and their preferred accelerator programming model (OpenMP, OpenACC, CUDA, or OpenCL). In addition, developers will be able to choose either a global shared memory or a distributed shared memory model.

The EPEEC software will be derived largely from existing European-made tools and runtime libraries, reflecting the same inclination toward domestically produced technology that the EU is pursuing for its exascale hardware. Much of the software development will be undertaken at the Barcelona Supercomputing Center in Spain, with additional partners: Fraunhofer (Germany), INESC-ID (Portugal), INRIA (France), CINECA (Italy), Appentra Solutions (Spain), IMEC (Belgium), Eta Scale AB (Sweden), Uppsala University (Sweden), and CERFACS (France). Even though this is a strictly European effort, all the source code will be available on GitHub, making it accessible to anyone interested in the technology.

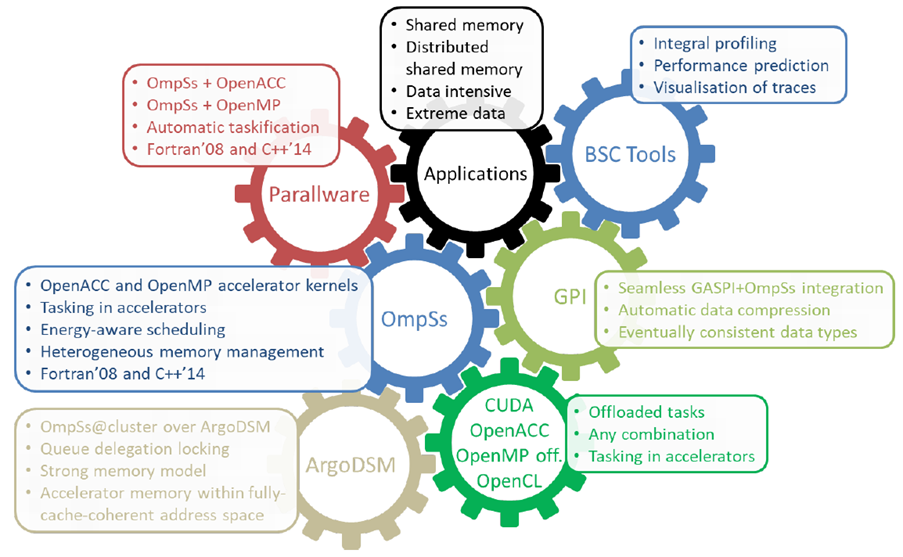

EPEEC will rely on three principal runtime components: OmpSs, a shared-memory programming model based on pragma directives and tasking; GASPI, a partitioned global address space (PGAS) programming model using one-sided communication; and ArgoDSM, a distributed shared memory model that runs atop an RDMA network.

For EPEEC, OmpSs is being enhanced to include accelerator directives and tasking within accelerators. Backends for OpenACC, OpenMP, CUDA, OpenCL, and SYCL will be developed so that the low-level interfaces of these frameworks are encapsulated within OmpSs. A distributed shared memory backend for ArgoDSM will also be developed so that OmpSs applications can be seamlessly spread across cluster nodes. A particular implementation of GASPI, known as GPI, will be supported by OmpSs to offer an alternative to the ArgoDSM distributed shared memory model.

A variety of program development tools from European software vendors and national labs will be supported under the EPEEC effort. Those include Appentra’s Parallelware, a static analysis tool that will help developers convert their serial codes to parallel ones by suggesting directives for OmpSs, OpenMP, and OpenACC. The performance analysis tools Extrae, Paraver, and Dimemas are also being enhanced to support EPEEC’s programming models, and the Mercurium C/C++/Fortran source-to-source compiler is being modified to support the EPEEC runtime functionality.

The project will use five applications that will serve as co-design touchpoints and as technology demonstrators. They were chosen to span a variety of compute-intensive, data-intensive, and extreme-data scenarios and include AVBP (fluid dynamics and combustion), DIOGENeS (nanophotonics/nanoplasmonics), OSIRIS (plasma physics), Quantum ESPRESSO (materials science), and SMURFF (life sciences).

A deeper look into the specific components of the EPEEC programming environment can be found here, along with a pointer to the relevant GitHub repository.
