Every orchestra needs a conductor to keep everyone playing together on pace, and while a good conductor doesn’t need to know how to play every instrument well, they have to know how to play many instruments and also to understand how it all comes together to create a symphony.

This, analogously, is what the director of science at a national supercomputing lab does every day: Orchestrate the science that gets done with the assistance of massive amounts of computation. And while we like massively parallel systems like nobody’s business, we would be the first to admit that the supercomputer is not an instrument so much as the stage where scientists can play out their ideas and see what they sound like, resulting in papers that advance whatever science they happen to be playing and, ultimately, changes in the way we live as we apply that knowledge.

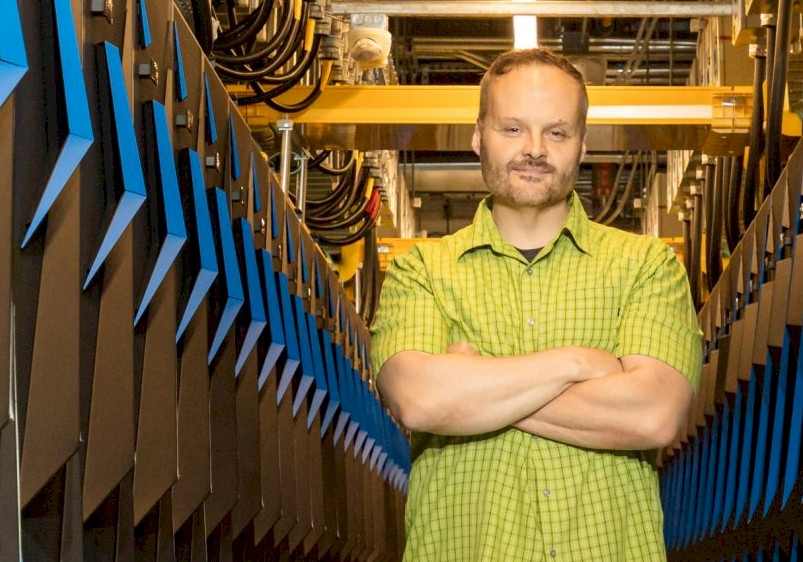

At Oak Ridge National Laboratory outside of Knoxville, Tennessee, that conductor job – the director of science at the Oak Ridge Leadership Computing Facility, or OLCF as the organization and supercomputers like Jaguar, Titan, Summit, and Frontier were called – has just been given to Bronson Messer, who has a long affiliation with the University of Tennessee and with Oak Ridge. Messer’s predecessor, Jack Wells, who is well known to The Next Platform, was named director of Oak Ridge National Laboratory’s newly formed Office of Strategic Planning and Performance Management at the turn of the year, opening up the position that Messer was tapped to fill.

Messer reached out to The Next Platform to have a chat about the challenges of picking the right science to do on exascale-class machines and of recruiting talent to create the software to do science on these behemoth calculating engines, among other things. Messer is a computational astrophysicist who first worked at the lab as a 16-year-old summer intern back in 1987, the decade when technical computing went mainstream and supercomputing became more pervasive. As such, supercomputers have been ever-present in his education and in his work.

Timothy Prickett Morgan: There are always more avenues of research that you might pursue than you can pursue. How do you curate and cultivate the science and the applications underneath it that are going to be necessary for the Frontier exascale-class machine, and how are you preparing to go to 10 exaflops or whatever the next bump up in performance is? We are already at pre-exascale, and a year and a half ago we had a long chat with Jack Wells, your predecessor, about getting code ready for the pre-exascale Summit machine, which had just been installed at the time. Oak Ridge does a lot of the work to make code run pretty on these machines long before the first server node is installed.

Bronson Messer: That’s right. At this point, we have long experience in preparing for a platform that is brand spanking new. Furthermore, we have a lot of experience doing hybrid node architectures. When we started working on applications for Titan back in late 2009, we instantiated something we call the Center for Accelerated Application Readiness, or CAAR. And my standard pun – and I refuse to apologize for it – is that CAAR is our vehicle to drive a handful of key applications long before the machines come into the datacenter.

TPM: You know that you are forcing me to mix metaphors in this story, right?

Bronson Messer: Uh-huh. So we have done CAAR for Titan, we did CAAR for Summit, and we are doing CAAR for Frontier right now. And in each one of those cases, we take a handful of applications and we make sure that they are going to be ready on Day One so that we can actually do science on the machine immediately. There is an obvious benefit from that. When the machine lands on the floor and is accepted, we will have useful work to do immediately.

But it is more than that. There are ancillary things that come along with CAAR that are just as important. We develop a set of best practices that we can then share with other users to tell them exactly how they really want to use the machine. It’s one thing to give your users a description of speeds and feeds and a list and a catalog of programming models and software that are available on the machine. But it is quite another thing to help them learn how to use the machine. And I think CAAR has been instrumental in that in the past with Titan and Summit, and I certainly have every confidence it will be instrumental for Frontier as well.

The other key thing with CAAR is it trains our staff by living right there on the bleeding edge. With a handful of user teams, our staff gets trained up really, really fast. It’s like being thrown into the deep end of the pool over and over and over again with beta software.

TPM: That’s called drowning. Or hazing. Or something.

Bronson Messer: We learn to be strong enough to keep our heads above water.

I think that the key thing about being director of science is that you get to learn a lot of science really, really fast. You have to learn enough science in various disciplines to be able to understand what somebody wants to do and to be able to gauge how important it is to other efforts in the community or at least to know how to formulate the question and then get that answered by somebody else who’s actually an expert, which is – it’s a daunting task. For somebody who is a science groupie like me, it’s a lot of fun.

TPM: What’s your background?

Bronson Messer: I actually was a grad student at the University of Tennessee and did my thesis work at Oak Ridge. I left for a while and went to the University of Chicago, and there I worked in the Flash Center for Computational Science. I’m a computational stellar astrophysicist by training. Or as I tell middle school students – although this joke is completely lost on them now – I do Beavis and Butthead science. I just watch stuff blow up. I am mostly interested in supernova explosions in all their various forms, and astrophysical transients and dense matter. I was at Flash for a few years and when the OLCF was being formed in 2004, I finished up that postdoc and was drawn back by the siren song of the biggest machines in the world. I have been here ever since.

TPM: So with CAAR, Oak Ridge has been down this road before – see what I did there? – with Titan and Summit. Now you are on the long and winding road to Frontier. Is there anything different about the process this time around as we break through the exascale roadblock? Does it take longer to get applications to work across increasingly higher scale, or is it always about the same amount of grief to get it to work?

Bronson Messer: It’s interesting. As we’ve moved from Titan through Summit and now on to Frontier, the number of network endpoints that people have to worry about – the number of MPI ranks, for example – has stayed fairly constant. Factors of two, but not factors of 10. It’s the power of the node that’s become much, much bigger.

TPM: Correct me if I’m wrong, but I always thought people generally tied an MPI rank to a single core, roughly, and with hybrid machines, it was one core per streaming multiprocessor, or SM, on the GPU.

Bronson Messer: No, that’s not the way most people are using the hybrid node architectures that we have. We saw this to a lesser extent on Titan, but let’s take Summit as the baseline. Most people computing on Summit assign a single MPI rank to each GPU. That’s the thing that sort of makes sense.

TPM: How, then, do you allocate the CPU? Do you allocate a bunch of cores off a CPU to each GPU?

Bronson Messer: Correct. I can tell you what we did for the Flash code, for example, as part of the ExaStar project on Summit. On the Power9 CPU, we had a single MPI rank running, then multiple OpenMP threads running over all of the cores. Those cores each fed individual CUDA streams to be able to do chunks of work on the GPU at a time. So you’re actually using the individual cores, using OpenMP, to feed the GPU work constantly. But it’s a single rank per GPU, which is for a lot of reasons the simplest way to think about it.

We had a few people do variations on that theme, where they might have multiple GPUs being used by a single rank. That’s one way. Or multiple ranks using a single device. But never matching a single core up to a rank. That doesn’t work, and people are going to use Frontier the same way they use Summit. The default case will be a single MPI rank per GPU. But now that MPI rank has to do a lot of work. It really is the architecture that’s on the node that presents the excitement.
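To make the layout Messer describes concrete, here is a minimal Python sketch of one MPI rank per GPU, with the node's CPU cores split evenly among the ranks and each core's thread feeding its own stream of work chunks. It is an illustration only, not the Flash or ExaStar code: the names `RankLayout`, `plan_node`, and `assign_chunks` are hypothetical, and the figures of 6 GPUs and 42 usable cores per node are assumptions chosen to resemble a Summit node.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RankLayout:
    rank: int     # node-local MPI rank
    gpu: int      # the single GPU this rank drives
    cores: tuple  # CPU cores running this rank's OpenMP-style threads


def plan_node(gpus_per_node: int, cores_per_node: int) -> list:
    """One MPI rank per GPU; CPU cores are split evenly among the ranks."""
    if gpus_per_node <= 0 or cores_per_node < gpus_per_node:
        raise ValueError("need at least one core per GPU")
    cores_per_rank = cores_per_node // gpus_per_node
    layouts = []
    for rank in range(gpus_per_node):
        first = rank * cores_per_rank
        cores = tuple(range(first, first + cores_per_rank))
        layouts.append(RankLayout(rank=rank, gpu=rank, cores=cores))
    return layouts


def assign_chunks(layout: RankLayout, n_chunks: int) -> dict:
    """Deal work chunks round-robin to the rank's cores.

    Each core stands in for one thread feeding its own stream on the
    rank's GPU. Keys are core ids, values are the chunk ids it submits.
    """
    streams = {core: [] for core in layout.cores}
    for chunk in range(n_chunks):
        core = layout.cores[chunk % len(layout.cores)]
        streams[core].append(chunk)
    return streams


if __name__ == "__main__":
    # Assumed node shape: 6 GPUs, 42 usable cores (illustrative values).
    node = plan_node(gpus_per_node=6, cores_per_node=42)
    for r in node:
        print(f"rank {r.rank} -> GPU {r.gpu}, cores {r.cores[0]}-{r.cores[-1]}")
    print(assign_chunks(node[0], n_chunks=10))
```

With these assumed numbers, each rank owns seven cores and one GPU, so the rank count per node tracks the GPU count rather than the core count, which is the point Messer makes about the number of MPI ranks staying roughly constant while the node gets more powerful.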

The thing that will change for us with Frontier is, of course, scale – exascale is bigger than anybody’s ever done before. But the other thing is that we are going to be roughly coincident with other architectures that also employ hybrid CPU-GPU nodes. When we started with Titan, we were the only game in town – at the high end anyway. And although portable performance for applications was something we were concerned with from the outset, it was something that frankly, we couldn’t do because it didn’t make any sense. We had hardware that was fundamentally different from hardware that any of our users would get to run on elsewhere. That’s not really the case with Frontier.

The important thing is the HIP compatibility layer that AMD’s got going on. We need to evangelize HIP to our user community as an effective way to let them talk to the low-level details of the GPU architecture that they want to use, and I think HIP has already proven itself to be a very effective way to marshal the GPUs. Our experience so far has been that you take code that’s all CUDA, you need an afternoon to run a HIP-ify script to convert all those CUDA commands over to HIP, and then you need another few hours to go through and fix up the things that the script didn’t quite get right. At that level of work, almost any team can do it.
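The two-step workflow Messer describes, an automated rename pass followed by manual cleanup, can be sketched with a toy translator. This is not AMD's tooling: the real HIPIFY tools (`hipify-perl` and `hipify-clang`) cover far more of the API and handle many more cases. The function `toy_hipify` is hypothetical and the mapping table is deliberately tiny, though each pair in it is a genuine CUDA runtime name and its HIP counterpart.

```python
import re

# A handful of CUDA runtime names and their HIP equivalents.
# The table is intentionally incomplete; anything not listed is
# reported back for manual review.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaFree": "hipFree",
    "cudaStream_t": "hipStream_t",
    "cudaStreamCreate": "hipStreamCreate",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Longest names first so a short name never shadows a longer one.
_NAMES = sorted(CUDA_TO_HIP, key=len, reverse=True)
_PATTERN = re.compile(r"\b(" + "|".join(re.escape(n) for n in _NAMES) + r")\b")


def toy_hipify(source: str):
    """Return (converted_source, leftovers).

    Step 1 renames every CUDA name found in the table.
    Step 2 lists identifiers that still start with 'cuda', which are
    the spots a person would have to fix up by hand.
    """
    converted = _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(1)], source)
    leftovers = sorted(set(re.findall(r"\bcuda\w*", converted)))
    return converted, leftovers


if __name__ == "__main__":
    cuda_src = (
        "#include <cuda_runtime.h>\n"
        "cudaMalloc(&d_x, n);\n"
        "cudaMemcpy(d_x, x, n, cudaMemcpyHostToDevice);\n"
        "cudaFuncSetCacheConfig(k, cfg);\n"  # left out of the toy table on purpose
        "cudaFree(d_x);\n"
    )
    hip_src, todo = toy_hipify(cuda_src)
    print(hip_src)
    print("needs manual review:", todo)
```

The leftover list plays the role of the "another few hours" in the quote: the mechanical pass handles the bulk of the calls, and whatever it cannot map is surfaced for a person to resolve.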

TPM: What is the biggest stumbling block you have for this stuff at this point? I’m assuming you’ve got enough expertise at Oak Ridge. Way back in the summer of 2013, I talked to Jeff Nichols, the associate laboratory director for computing and computational sciences, when I visited Oak Ridge to lay my hands on Titan, and Jeff said that the hardest thing was not getting the money for the machine or building the facility. It was trying to keep people coming to Eastern Tennessee, with Google and Facebook and all these other big companies hiring so many smart people. Oak Ridge is still doing great science, and this is the mandate and this is what matters. But it’s still hard, I assume.

Bronson Messer: I would say that problem is certainly still persisting. We still compete for the best talent with a whole wide swath of different industries. I think the thing that attracts people and keeps them doing this kind of work is that they are very excited about actually pushing back the frontiers of knowledge. You know, the rewards in other kinds of careers are fine. But I found that the people who stick around the longest and that actually get the most done are motivated by the work itself. They very much like being part of the team that gets something done. And in this case, that means defining a project and completing a scientific campaign all the way to the paper being published. That is a very attractive prospect for a fair number of people. Most people who get involved in technology have, from their earliest memories, been interested in looking at the universe and trying to figure out how it works.

One of the things I’ve done during the lockdown is talk to middle school students and high school students on Zoom and Skype or Google Hangouts. I always end up talking to high school students who are interested in science, and they are not sure if they should study this kind of science or engineering or whatever. And I always tell them the same thing. Major in math. You will never go wrong and you will never learn too much math for almost anything that’s technical or scientific.