When AI/ML came onto the scene in the supercomputing community, the common wisdom was that it could do much to augment HPC applications but would not be able to replace many of the computationally intensive physics and simulation workloads. While that is still largely the case, some emerging areas show just how large a slice neural networks might take out of traditional simulations.

A recent example that caught our eye comes from KTH Royal Institute of Technology in Sweden, where a convolutional neural network paired with a multilayer perceptron network produced results that held up against traditional supercomputing simulations. There are caveats, but the work shows an expanding role for deep learning in scientific computing domains, even in physics-based simulations, which many have said cannot, by their nature, have an AI angle given the current limitations of neural network complexity and capability.

Here’s one of the reasons why this KTH example holds so much weight: the simulation in question is based on the particle-in-cell (PIC) method, which takes massive computational power and is used at scale on top supercomputers to get a better handle on nuclear fusion, astrophysics, laser/plasma interactions, and so on. These (often) Fortran-based approaches to plasma physics are at the heart of some of the largest-scale research programs on traditional HPC systems, which means any added efficiency is a big deal in terms of time to solution, cost of running the simulation, etc.

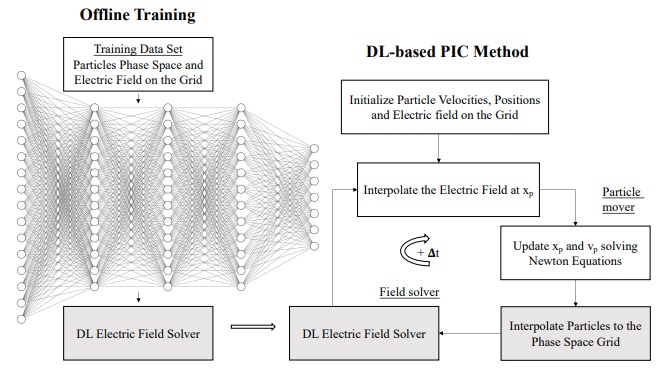

To test the deep learning-based PIC method, the researchers used a standard benchmark, the two-stream instability. In brief, it checks how well a simulation captures the electrostatic instability that develops between two counter-streaming particle beams in the absence of a magnetic field. The deep learning PIC method the KTH researchers developed produced accurate results on this benchmark. Deep learning is used in only one step of the overall workflow, the electric field solver, which, as the authors describe it, is a “simple prediction/inference step involving a series of matrix vector multiplications” that was offloaded to the GPU.
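The description of inference as “a series of matrix vector multiplications” is easy to see in code. Below is a minimal forward pass of a generic MLP in plain Python. The function names, the ReLU activation, the toy weights, and the framing of the input as a flattened phase-space histogram and the output as the field at grid points are all assumptions for illustration, not details of the KTH network.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Layer = Tuple[Matrix, List[float]]  # (weights W, bias b) for one dense layer


def matvec(W: Matrix, x: Sequence[float]) -> List[float]:
    """Dense matrix-vector product: each output is one row of W dotted with x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def relu(h: Sequence[float]) -> List[float]:
    return [max(0.0, a) for a in h]


def mlp_field_solver(layers: Sequence[Layer], features: Sequence[float]) -> List[float]:
    """Hypothetical DL field solver: one matvec plus bias per layer.

    `features` stands in for a flattened phase-space histogram and the
    return value for E sampled on the grid (both assumptions). Hidden
    layers use ReLU; the output layer is linear.
    """
    h = list(features)
    for k, (W, b) in enumerate(layers):
        h = [a + bk for a, bk in zip(matvec(W, h), b)]
        if k < len(layers) - 1:
            h = relu(h)
    return h


# Toy weights, not trained values: 2 inputs -> 3 hidden units -> 1 output.
toy_layers = [
    ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, -1.0]),
    ([[1.0, 1.0, 1.0]], [0.5]),
]
print(mlp_field_solver(toy_layers, [1.0, 2.0]))  # [5.5]
```

There is no iteration to convergence here: the cost is fixed by the layer sizes, which is one reason this step maps well onto a GPU.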

It is disappointing that there is not more detail about the computational performance differences, but these can be inferred from the benchmark and from the relative computational expense of each step in the traditional versus the AI/ML PIC method. Still, the results KTH did publish speak volumes about how AI/ML can slice into traditional physics simulations.

To give a sense of how important the deep learning component is to this new PIC approach, they add that “traditional PIC methods require a linear system that involves more operations than the prediction/inference step” and that “an additional advantage of the DL electric field solver is that it does not need communication when running the DL-based [PIC method] on distributed memory systems as all neural networks can be loaded on each process.”
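For contrast, a traditional field solve means solving a linear system. The sketch below solves a 1D Poisson equation with Gauss-Seidel iteration and then differentiates the potential to get the electric field. Gauss-Seidel, the grounded (phi = 0) boundaries, the normalized units (eps0 = 1), and the function names are illustrative choices, not the authors' solver. Production PIC codes often use FFT-based or multigrid solvers instead. The point is that the work depends on grid size and tolerance rather than being a fixed number of operations.

```python
from typing import List, Sequence, Tuple


def solve_poisson_gauss_seidel(
    rho: Sequence[float], dx: float, tol: float = 1e-10, max_iters: int = 100_000
) -> Tuple[List[float], int]:
    """Solve d^2(phi)/dx^2 = -rho on a 1D grid, phi = 0 at both ends.

    Normalized units (eps0 = 1). Returns the potential and the number of
    sweeps needed for the largest update to drop below `tol`.
    """
    n = len(rho)
    phi = [0.0] * n
    for sweep in range(1, max_iters + 1):
        max_change = 0.0
        for i in range(1, n - 1):
            # Discrete Poisson: (phi[i-1] - 2 phi[i] + phi[i+1]) / dx^2 = -rho[i]
            new = 0.5 * (phi[i - 1] + phi[i + 1] + dx * dx * rho[i])
            max_change = max(max_change, abs(new - phi[i]))
            phi[i] = new  # Gauss-Seidel: updated values are reused immediately
        if max_change < tol:
            return phi, sweep
    return phi, max_iters


def field_from_potential(phi: Sequence[float], dx: float) -> List[float]:
    """E = -d(phi)/dx: central differences inside, one-sided at the ends."""
    n = len(phi)
    E = [0.0] * n
    for i in range(n):
        if i == 0:
            E[i] = -(phi[1] - phi[0]) / dx
        elif i == n - 1:
            E[i] = -(phi[n - 1] - phi[n - 2]) / dx
        else:
            E[i] = -(phi[i + 1] - phi[i - 1]) / (2.0 * dx)
    return E


# Uniform charge density on [0, 1] with 11 grid points (dx = 0.1).
phi, sweeps = solve_poisson_gauss_seidel([1.0] * 11, dx=0.1)
E = field_from_potential(phi, dx=0.1)
print(sweeps, round(phi[5], 6), round(E[2], 6))
```

For this case the exact potential is x(1 - x)/2, so phi at x = 0.5 should come out near 0.125. Even this tiny grid takes many sweeps, and for Gauss-Seidel on a 1D Poisson problem the sweep count grows roughly with the square of the number of grid points.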

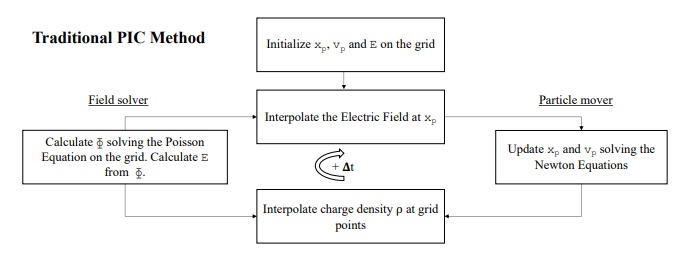

At first glance, the figure suggests that the traditional method has the more streamlined workflow, but each of its steps is highly iterative, often requiring many thousands of passes, which makes it computationally expensive and time-consuming.

Below is the deep learning workflow, which looks more complicated but in fact eliminates many of the time-consuming iterations via a CNN and a multilayer perceptron network.
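Both workflows share the same outer loop; what changes is how the field is computed. The skeleton below is a generic 1D electrostatic PIC cycle in normalized units, not the KTH code: charge deposition to the grid, a field solve passed in as a function, interpolation back to the particles, and a particle push. The simple periodic Gauss's-law integrator, the nearest-grid-point weighting, and all names and parameters are illustrative assumptions. A trained network would take the place of the `field_solver` call, with whatever input it was trained on.

```python
from typing import Callable, List, Sequence, Tuple

FieldSolver = Callable[[List[float], float], List[float]]


def deposit_ngp(x: Sequence[float], n_cells: int, length: float, q: float = -1.0) -> List[float]:
    """Particles -> grid: each particle's charge goes to its nearest grid point."""
    dx = length / n_cells
    rho = [0.0] * n_cells
    for xi in x:
        rho[int(xi / dx + 0.5) % n_cells] += q / dx
    return rho


def gauss_law_periodic(rho: List[float], dx: float) -> List[float]:
    """Simple periodic solver for dE/dx = rho - <rho> (eps0 = 1).

    Subtracting the mean models a neutralizing ion background; the running
    sum is a first-order integration, and the final shift sets <E> = 0.
    """
    mean = sum(rho) / len(rho)
    E, acc = [], 0.0
    for r in rho:
        acc += (r - mean) * dx
        E.append(acc)
    shift = sum(E) / len(E)
    return [e - shift for e in E]


def pic_step(
    x: List[float], v: List[float], dt: float, n_cells: int, length: float,
    field_solver: FieldSolver, qm: float = -1.0,
) -> Tuple[List[float], List[float]]:
    dx = length / n_cells
    rho = deposit_ngp(x, n_cells, length)                    # 1. deposit charge
    E = field_solver(rho, dx)                                # 2. field solve (the step DL replaces)
    E_p = [E[int(xi / dx + 0.5) % n_cells] for xi in x]      # 3. grid -> particles
    v = [vi + qm * e * dt for vi, e in zip(v, E_p)]          # 4. push velocity ...
    x = [(xi + vi * dt) % length for xi, vi in zip(x, v)]    #    ... then position (periodic)
    return x, v


# Evenly spaced particles give uniform charge, zero field, unchanged velocities.
x0 = [i * 0.1 for i in range(10)]
x1, v1 = pic_step(x0, [0.5] * 10, dt=0.1, n_cells=10, length=1.0,
                  field_solver=gauss_law_periodic)
print(v1)
```

Passing the field solver in as a function keeps the rest of the cycle identical, which mirrors the article's point that only one step of the workflow changes.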

“This work is only the first step towards the integration of DL techniques in PIC methods. DL-based PIC methods can be improved in several ways. For instance, more accurate training data sets can be obtained by running Vlasov codes that are not affected by the PIC numerical noise. In this work, we use the NGP [nearest grid point] interpolation scheme for the phase space binning. The usage of higher-order interpolation functions would likely improve the performance of the DL electric field solver as it would mitigate numerical artifacts introduced by the binning,” the authors say.
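The binning the authors mention turns particle positions and velocities into a phase-space image that serves as input to the networks. The sketch below contrasts nearest grid point (NGP) binning, where each particle lands in exactly one bin, with cloud-in-cell (CIC), a standard linear weighting that spreads each particle over neighboring bins and so produces a smoother image. The grid shape, velocity window, clamping at the velocity edges, and function names are assumptions, and CIC is just one example of the higher-order schemes the authors allude to.

```python
import math
from typing import List, Sequence

Grid = List[List[float]]  # grid[j][i]: velocity bin j, position bin i


def bin_ngp(xs: Sequence[float], vs: Sequence[float], nx: int, nv: int,
            length: float, v_min: float, v_max: float) -> Grid:
    """Nearest grid point: each particle adds its full weight to one bin."""
    dx, dv = length / nx, (v_max - v_min) / nv
    grid = [[0.0] * nx for _ in range(nv)]
    for x, v in zip(xs, vs):
        i = int(x / dx) % nx                              # periodic in x
        j = min(max(int((v - v_min) / dv), 0), nv - 1)    # clamp in v
        grid[j][i] += 1.0
    return grid


def bin_cic(xs: Sequence[float], vs: Sequence[float], nx: int, nv: int,
            length: float, v_min: float, v_max: float) -> Grid:
    """Cloud in cell (bilinear): weight is shared among the 4 nearest bin centers."""
    dx, dv = length / nx, (v_max - v_min) / nv
    grid = [[0.0] * nx for _ in range(nv)]
    for x, v in zip(xs, vs):
        sx = x / dx - 0.5                  # position measured from bin centers
        sv = (v - v_min) / dv - 0.5
        i0, j0 = math.floor(sx), math.floor(sv)
        fx, fv = sx - i0, sv - j0
        for di, wx in ((0, 1.0 - fx), (1, fx)):
            for dj, wv in ((0, 1.0 - fv), (1, fv)):
                j = min(max(j0 + dj, 0), nv - 1)          # clamp in v so no weight is lost
                grid[j][(i0 + di) % nx] += wx * wv        # periodic in x
    return grid


# One particle at x = 0.25, v = 0 on a 4 x 4 phase-space grid.
args = ([0.25], [0.0], 4, 4, 1.0, -1.0, 1.0)
ngp, cic = bin_ngp(*args), bin_cic(*args)
print(ngp[2][1], cic[1][0], cic[2][1])  # 1.0 0.25 0.25
```

NGP puts the whole particle in one bin, which is where the binning artifacts come from; CIC splits the same particle evenly across four bins here, while the total weight stays at one.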

Describing the setup, the authors write: “The experiment used a node with two 12-core Intel E5-2690V3 Haswell processors. The computational node has 512 GB of RAM and one Nvidia Tesla K80 GPU card, also used in the training of the networks. The neural networks are implemented using TensorFlow [19] and Keras. On such a system, the training of the MLP network and CNN take approximately 18 minutes and 2 hours, respectively.”

Beyond the new method itself, the bigger story is that as physics-informed neural networks (PINNs) become more sophisticated, work like this could have a game-changing impact on the compute resources required. Much of scientific computing demands high accuracy, which is exactly what PINNs are targeting in multiple areas, even though it is still early days. This work did not use PINNs, but the authors say that doing so would “improve the conservation of total energy and momentum and the performance of the DL-based PIC method.”
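The conservation properties the authors want to improve are straightforward to monitor. The sketch below computes total energy (particle kinetic energy plus electrostatic field energy) and total momentum for a 1D electrostatic system in normalized units (unit particle mass, eps0 = 1). It also defines a hypothetical penalty on how much those quantities change between two time steps, the kind of term a physics-informed loss might add. This is not the authors' formulation; the function names and the equal weighting of the two terms are assumptions.

```python
from typing import Sequence


def total_energy(v: Sequence[float], E: Sequence[float], dx: float,
                 mass: float = 1.0, eps0: float = 1.0) -> float:
    """Kinetic energy of the particles plus electrostatic energy on the grid."""
    kinetic = 0.5 * mass * sum(vi * vi for vi in v)
    field = 0.5 * eps0 * sum(e * e for e in E) * dx
    return kinetic + field


def total_momentum(v: Sequence[float], mass: float = 1.0) -> float:
    return mass * sum(v)


def conservation_penalty(v0: Sequence[float], E0: Sequence[float],
                         v1: Sequence[float], E1: Sequence[float], dx: float) -> float:
    """Hypothetical loss term: squared change in energy plus squared change in momentum."""
    dW = total_energy(v1, E1, dx) - total_energy(v0, E0, dx)
    dP = total_momentum(v1) - total_momentum(v0)
    return dW * dW + dP * dP


# Two counter-streaming particles and a weak field, in normalized units.
print(total_energy([1.0, -1.0], [1.0, 1.0], dx=0.5))  # 1.5
print(total_momentum([1.0, -1.0]))                   # 0.0
```

A real PIC code would also give each macroparticle a statistical weight, which is omitted here for brevity.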

We are watching several examples of PINNs and more generalized AI/ML approaches find a way into traditional HPC simulations. Sometimes it’s as simple as denoising images for simulations, other times the neural networks are doing heavy lifting in a full replacement, although these cases are still quite rare except for a few examples in weather and CFD.

It’s nonetheless important to keep tabs on where AI approaches are going for HPC as it will change the nature of the systems that are deployed at the largest centers. We’ve already seen a large move to GPUs over the last several years, more recently due to AI/ML requirements. But trends like this could also set the stage for more special-purpose AI accelerators designed to do a pared-down, AI-specific version of what GPUs do now.
