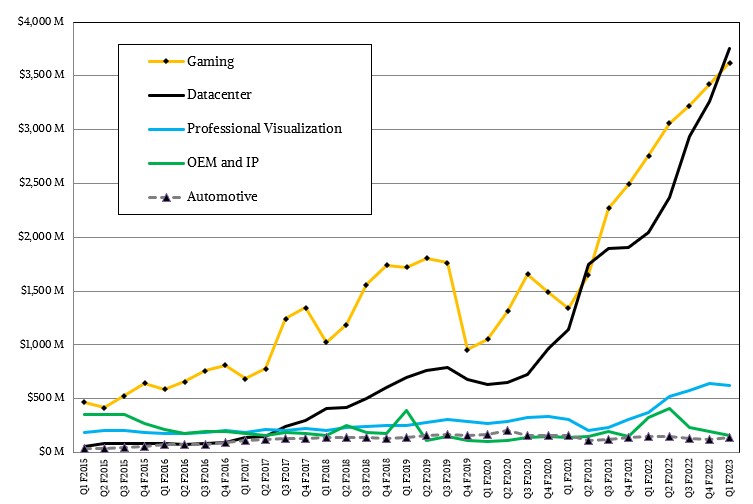

Something that we have been waiting for a decade and a half to see has just happened: The datacenter is now the biggest business at Nvidia. Bigger even than the gaming business for which it was founded almost three decades ago.

The rise of the datacenter business has been no accident. It is the result of very deliberate engineering and investment by Nvidia, and it has been a remarkable thing to watch. The Next Platform was founded in large part to chronicle the rise of the new kinds of platforms that Nvidia has been creating since the first Tesla GPU compute engines and the CUDA development environment for them emerged.

The establishment of Nvidia as a supplier of accelerators for HPC simulation and modeling, and the evolution of AI training and inference from identifying pictures of cats on the Internet, to all kinds of manipulation of data in its many forms, to the creation of new insights that would not be possible with conventional programming, are perhaps only the beginning. Nvidia, like many others, has set its sights on creating immersive worlds – intentionally plural – of the metaverse overlaid upon the physical reality we all inhabit.

There will be much gnashing of teeth over the next week or so, until the next crisis on Wall Street happens, because Nvidia is predicting a weaker second quarter of its fiscal 2023, but none of this matters much in the long run. That weakness is no surprise, given the lockdowns in China and the war in Ukraine, and many IT vendors are feeling the pain there. Case in point: the latest financial results from Cisco Systems, which we discussed recently.

The fact remains that Nvidia has a very strong gaming business and a very strong datacenter business, and it is entering the world of general purpose computing with its “Grace” Arm server chips and that will only expand its total addressable market all that much more. Thanks to its acquisition of Mellanox, it has an interconnect and DPU lineup to match its existing GPU compute engines and its impending CPU compute engines, and of course, it sells systems and clusters as well as the parts that OEMs and ODMs need to make their own.

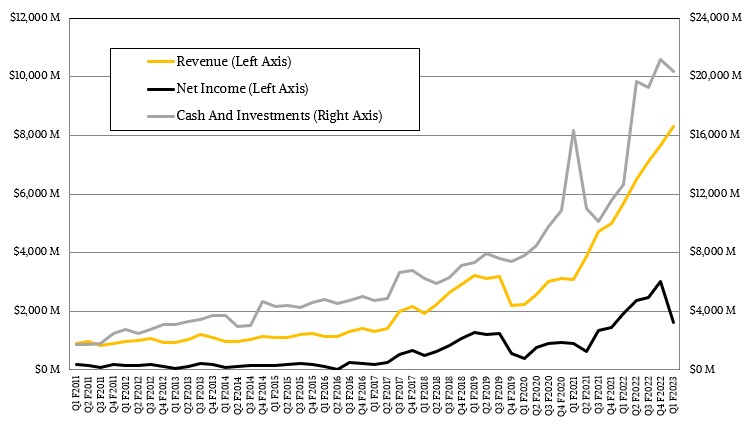

In the quarter ending on May 1, Nvidia’s overall revenues rose by 46.4 percent to $8.29 billion, but net income fell by 15.4 percent to $1.62 billion largely because of a $1.35 billion charge that Nvidia had to pay to Arm Holdings for its failed attempt to acquire it. This may be a small price to pay for the tighter focus that Nvidia will now enjoy. The good news is that Nvidia has $20.34 billion in the bank and a total addressable market of somewhere around $450 billion, as it outlined earlier this week in its presentations from the Computex conference in Taiwan.

During fiscal Q1, Nvidia’s datacenter division posted sales of $3.75 billion, up 83.1 percent, while the gaming division only grew by 31.2 percent to $3.62 billion. It is hard to say if datacenter will remain Nvidia’s dominant business from this point forward, or if the two divisions will jockey for position. A lot depends on the nature and timing of the competition Nvidia increasingly faces in these two markets, and how Nvidia fares as it builds out a broader and deeper datacenter portfolio, including CPUs.

“Revenue from hyperscale and cloud computing customers more than doubled year-on-year, driven by strong demand for both external and internal workloads,” explained Colette Kress, Nvidia’s chief financial officer, in a call with Wall Street analysts. “Customers remain supply constrained in their infrastructure needs and continue to add capacity as they try to keep pace with demand.”

Our model suggests that, of the datacenter revenue in the quarter, $2.14 billion came from the hyperscalers and cloud builders, up 105 percent, while revenue from other customers – academia, government, enterprise, and other service providers – rose by 60 percent to $1.61 billion.
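As a rough sanity check on that split, the year-ago figures implied by the two growth rates can be backed out and recombined to see whether they land near the 83.1 percent growth reported for the datacenter division as a whole. The sketch below uses only the rounded numbers quoted in this story; the helper name `prior_year` is ours, and because the inputs are rounded model estimates, the result should only be expected to come out close to the reported figure, not match it exactly.

```python
def prior_year(current_billions: float, growth_pct: float) -> float:
    """Back out the year-ago value implied by a current value and its growth rate."""
    return current_billions / (1.0 + growth_pct / 100.0)


# Rounded estimates from the text, in billions of dollars.
hyperscale_now, hyperscale_growth = 2.14, 105.0
other_now, other_growth = 1.61, 60.0

# Implied year-ago revenue for each customer group.
hyperscale_then = prior_year(hyperscale_now, hyperscale_growth)
other_then = prior_year(other_now, other_growth)

# Recombine the two groups and compute the blended datacenter growth rate.
datacenter_now = hyperscale_now + other_now
datacenter_then = hyperscale_then + other_then
blended_growth = (datacenter_now / datacenter_then - 1.0) * 100.0

print(f"Datacenter now:  ${datacenter_now:.2f} billion")
print(f"Datacenter then: ${datacenter_then:.2f} billion (implied)")
print(f"Blended growth:  {blended_growth:.1f} percent")
```

The two pieces sum to the $3.75 billion datacenter total, and the blended growth rate comes out at roughly 83 percent, which is consistent with the divisional figure given the rounding in the inputs.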

We used to have a way to see how much revenue the Mellanox business contributed, but that is now very tough to estimate with any kind of accuracy because InfiniBand and Spectrum networking are embedded in Nvidia’s systems and clusters. We have no doubt that the ConnectX network interface business remains strong, and Kress did mention that sales of 25 Gb/sec, 50 Gb/sec, and 100 Gb/sec adapters were strong and were accelerating the business. “Our networking products are still supply constrained though we expect continued improvement throughout the rest of the year,” Kress added.

We have no doubt that the networking unit is larger than when Nvidia closed the Mellanox acquisition two years ago, but can’t say by how much. It could represent 15 percent of total revenues and about a third of datacenter revenues, but we do not have a lot of confidence in that estimate except in the broadest sense, such as over the trailing twelve months. The HPC and AI businesses are inherently choppy, and so is selling into the hyperscalers and cloud builders.
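For what it is worth, the two rough proportions in that guess at least agree with each other. The short sketch below applies them to the quarter's reported totals; the 15 percent and one-third shares are our loose estimates from the paragraph above, not figures Nvidia has disclosed, and the variable names are purely illustrative.

```python
# Reported fiscal Q1 figures from the text, in billions of dollars.
total_revenue = 8.29
datacenter_revenue = 3.75

# Rough, low-confidence shares taken from the estimate above (assumptions).
share_of_total = 0.15
share_of_datacenter = 1.0 / 3.0

# Networking revenue implied by each share.
networking_from_total = share_of_total * total_revenue
networking_from_datacenter = share_of_datacenter * datacenter_revenue
gap = abs(networking_from_total - networking_from_datacenter)

print(f"Implied by share of total:      ${networking_from_total:.2f} billion")
print(f"Implied by share of datacenter: ${networking_from_datacenter:.2f} billion")
print(f"Difference:                     ${gap:.3f} billion")
```

Both routes point to networking revenue somewhere in the neighborhood of $1.25 billion for the quarter, with the same caveats about confidence that apply to the estimate itself.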

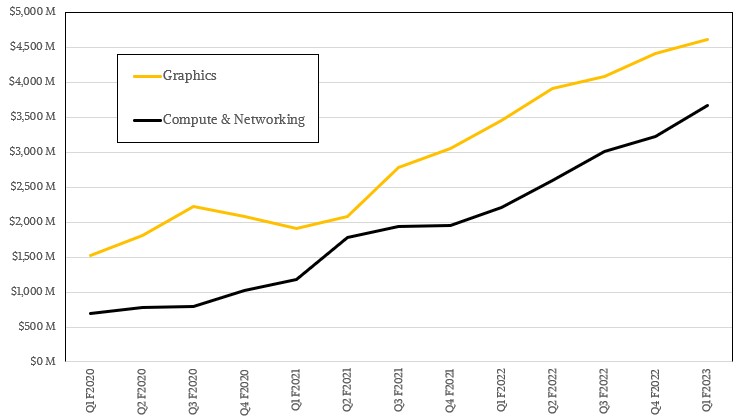

What we can say is that Nvidia’s Compute & Networking group had sales of $3.67 billion, up 66.2 percent in the quarter, while its Graphics group “only” grew by 33.8 percent to $4.62 billion.

Despite the fact that Nvidia is only forecasting $8.1 billion in sales for the second quarter of fiscal 2023, co-founder and chief executive officer Jensen Huang remained sanguine.

“We had a record datacenter business this last quarter,” said Huang on the call. “We expect to have another record quarter this quarter, and we are fairly enthusiastic about the second half. AI and data-driven machine learning techniques for writing software and extracting insight from the vast amount of data that companies have is incredibly strategic to all the companies that we know. Because in the final analysis, AI is about automation of intelligence and most companies are about domain-specific intelligence. We want to produce intelligence. And there are several techniques now that have been created to make it possible for most companies to apply their data to extract insight and to automate a lot of the predictive things that they have to do and do it quickly.”

Huang added that the networking business is “highly supply constrained” and that demand is “really, really high.” The supply of networking products, which relies on components from other vendors and not just the chips that Nvidia has etched, is expected to improve each quarter through the remainder of the fiscal year. The “Hopper” GH100 GPU and its H100 accelerator, which comes in PCI-Express 5.0 and SXM5 form factors, are expected to be available in fiscal Q3 and to ramp closer to the end of the fiscal year, which means December 2022 and January 2023. In the meantime, A100 is the datacenter motor that still owns GPU compute, and companies are buying as many as Nvidia can have made.

And now, we will be looking to see when and if the Compute & Networking group can become larger than the Graphics group. So far, it doesn’t seem likely.
