If quantum computers are going to become a commercial reality sometime down the road – and there’s a lot of money and time going into the effort to make them viable for use by HPC organizations and enterprises – it’s increasingly likely that it will be in combination with classical computers. Essentially, the workloads that can run on a quantum computer will run there; the rest will stay on high-end classical supercomputers.

The systems will be tightly integrated to not only quickly and efficiently move the applications between them but also to address everything from quantum error correction to calibration control to hybrid algorithms. In many ways it becomes an acceleration issue, which is at the core of Nvidia’s DNA.

“We have the shared perspective of quantum machines at Nvidia – and I think with most of the community – that quantum is never going to be quantum-only,” Tim Costa, director of HPC and quantum at Nvidia, tells The Next Platform. “We mean it in two ways. The first is that applications will continue to run large portions of what they do on CPUs as they do today, on GPUs as they do today, for the parts of applications best suited to those. But as quantum processors become more and more capable, some algorithms that can transformatively be accelerated by a quantum processor will move over to a quantum processor. But all these units need to be working together.”

Right now, quantum is a research play. Commercialization is coming, though, Costa says. To run a useful quantum computer, the classical computing side needs to be at a petascale level, in part because such a quantum computer will need to be fault-tolerant, which is going to require hundreds of thousands of perfect logical quantum bits, or qubits, Costa says. Each qubit will also have more than a dozen parameters that must be calibrated independently.

“Leaders in the field have been turning to AI methods and graph neural networks to do that work, which are obviously very well-suited to accelerate computing,” he says. “All that is to say that what we need to be able to do is to really couple large-scale GPU-accelerated supercomputing to quantum computing to both accelerate applications in a transformative manner but also to actually run those quantum computers.”

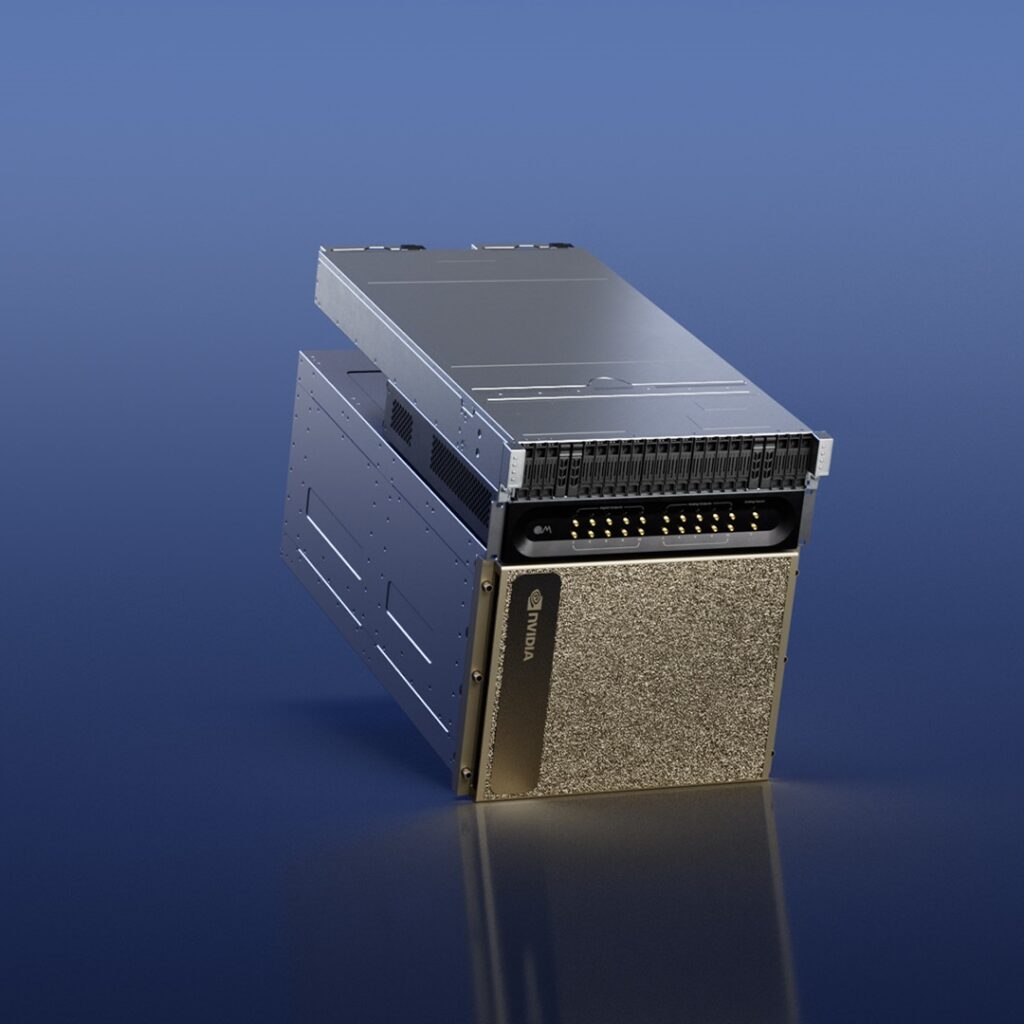

At GTC 2023 this week, Nvidia unveiled DGX Quantum, a system powered by Nvidia’s AI- and HPC-focused Grace-Hopper Superchip and the CUDA Quantum open-source programming model, and including startup Quantum Machines’ OPX+ quantum control platform, to work in a quantum-classical environment. The co-designed system more easily connects Nvidia’s GPUs to a quantum computer to do error correction at extremely high speeds, Nvidia founder and CEO Jensen Huang said during his GTC keynote.

“Error correction over a large number of qubits is necessary for recovering data from quantum noise and decoherence,” Huang said.

The Grace-Hopper system – an integration of Nvidia’s Grace CPU and Hopper GPU – is connected to OPX+ via a PCIe interconnect, enabling sub-microsecond latency between GPUs and quantum processing units (QPUs) on the quantum system. Reducing latency between the classical and quantum systems is key, Costa says. Some groups are looking at Ethernet, but the overall roundtrip between the quantum and classical systems takes as long as, or longer than, the time available for quantum error correction, which leaves no time for actual compute, Costa says.

“You can move the data back and forth, but you’ve done nothing with it in time,” he says. “Not particularly useful. With this system, we were able to get it down to 400 nanoseconds, which is two orders of magnitude better than Ethernet.”
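To put Costa’s numbers side by side, here is a minimal back-of-the-envelope sketch in Python. The 400-nanosecond roundtrip and the 100x gap to Ethernet come from his comments; the 1-microsecond error-correction window is an assumed, purely illustrative figure that does not come from Nvidia or Quantum Machines, and the function name is hypothetical.

```python
# Figures taken from the article.
DGX_QUANTUM_ROUNDTRIP_NS = 400   # roundtrip quoted by Costa
ETHERNET_SLOWDOWN = 100          # "two orders of magnitude"

# Implied Ethernet roundtrip: 400 ns * 100 = 40,000 ns (40 microseconds).
ethernet_roundtrip_ns = DGX_QUANTUM_ROUNDTRIP_NS * ETHERNET_SLOWDOWN

# ASSUMPTION: an illustrative time window for one error-correction
# decision. This number is not from the article.
ASSUMED_QEC_WINDOW_NS = 1_000


def compute_time_left_ns(window_ns: int, roundtrip_ns: int) -> int:
    """Time left for actual computation once data has made the roundtrip.

    Returns 0 when the roundtrip alone uses up (or exceeds) the window.
    """
    return max(0, window_ns - roundtrip_ns)


for name, roundtrip in [
    ("PCIe (DGX Quantum)", DGX_QUANTUM_ROUNDTRIP_NS),
    ("Ethernet (implied)", ethernet_roundtrip_ns),
]:
    left = compute_time_left_ns(ASSUMED_QEC_WINDOW_NS, roundtrip)
    print(f"{name}: roundtrip {roundtrip:,} ns, compute time left {left:,} ns")
```

Under that assumed window, the PCIe link leaves some room for the GPU to do work, while the implied Ethernet roundtrip consumes the whole window before any compute happens, which is the situation Costa describes.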

Grace-Hopper is about speed and scale, with Nvidia saying it delivers up to 10 times higher performance for complex workloads handling terabytes of data. OPX+ is aimed at accelerating the performance of QPUs and quantum algorithms and, like Grace-Hopper, can be scaled depending on need.

“This is scalable in both directions,” Costa says. “You can add more OPX+ when you have to control more qubits on a larger quantum system [and] you can add more Grace-Hopper nodes in order to scale up your GPU compute. You can also couple this system to your existing and very accelerated supercomputing infrastructure. It can also be that gateway to what you’ve already built in terms of adding quantum acceleration to your system.”

The power needed in the classical computing part of this hybrid environment is important to ensure the quantum computing side can do what it needs to do. The problem of error correction for qubits is complex, but there is a lot of work that needs to be done simply to prepare for the quantum system.

“If you have a million qubits and 12 parameters to independently optimize per qubit, that’s going to take a heck of a lot of horsepower as well,” Costa says. “There is that classical compute requirement. I don’t know that I think it’ll be exascale per QPU, that feels pretty large, but it is significant. It’s a great opportunity for Nvidia here, which is one of the reasons we’re chasing it in this collaboration.”
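The scale Costa describes is simple to work out. The Python sketch below just multiplies his two figures, a million qubits and 12 parameters per qubit; the function name and the smaller qubit counts are illustrative assumptions, and the count says nothing about how much compute each parameter takes to optimize.

```python
def calibration_parameters(num_qubits: int, params_per_qubit: int = 12) -> int:
    """Total number of independently tuned calibration parameters.

    The default of 12 per qubit is the figure Costa quotes; the function
    itself is a hypothetical helper for illustration.
    """
    return num_qubits * params_per_qubit


# The million-qubit case is from the article; the smaller sizes are
# assumptions included only to show how the count grows.
for n in (1_000, 100_000, 1_000_000):
    total = calibration_parameters(n)
    print(f"{n:>9,} qubits -> {total:>10,} parameters")
```

At a million qubits that comes to 12 million parameters to optimize, which is the workload Costa has in mind when he talks about horsepower.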

It’s also important to remember that quantum computers are meant to solve problems that can’t be solved now with classical systems, Itamar Sivan, co-founder and CEO of Quantum Machines, tells The Next Platform.

“You’re saying, ‘Well, it sounds quite crazy. We need so much classical compute power to just drive a single quantum processor. It sounds unreasonable,’” Sivan says. “But on the other hand, this goes back to what we’re here to do. Quantum computers are not meant to take problems that we’re solving today and then solve them faster.”

Nvidia also released CUDA Quantum, a platform for building quantum applications in C++ and Python. It initially went into private release in July 2022 under the name QODA and is now open source. Nvidia rebranded it after getting tired of explaining what QODA was, Costa says. It, too, is aimed at hybrid classical-quantum environments, enabling the integration and programming of QPUs, GPUs, and CPUs in a single system.