Any performance comparison across the compute engines used in a datacenter is valid only for a point in time, since new CPUs, GPUs, FPGAs, and other ASICs are always coming into the market at different times.

When AMD launched the “Genoa” Epyc 9004 processors back in November 2022, the Intel “Ice Lake” Xeon SPs had been in the field since April 2021 after many delays, and the “Sapphire Rapids” Xeon SPs that were supposed to compete against the prior AMD “Milan” Epyc 7003 processors (launched in March 2021) were themselves late into the field. After initial pre-launch shipments to the hyperscalers and cloud builders, the Sapphire Rapids Xeon SPs were finally launched in January 2023. But even then, AMD was complaining that it could not get its hands on Intel’s most recent server CPU, and Intel was likewise complaining that it could not get its hands on any Genoa Epycs. So all of the benchmarks each company was showing off compared its most recent CPUs against the competitor’s prior generation.

This is not particularly useful, and it leaves a lot of server buyers and system vendors guessing about which CPUs they should invest in.

Now, Intel has finally gotten its hands on systems using 32-core Epyc 9004 processors – nowhere near the top bin 96-core parts, mind you – and has run some benchmark tests on these machines as well as on its own 32-core Sapphire Rapids CPU, using the Intel compilers on its own chips and AMD’s AOCC compilers on the Epyc chips. These 32-core parts are in the sweet spot of pricing, and they are also a natural fit for software that is licensed in 32-core chunks (as is the case with VMware server virtualization software, for instance).

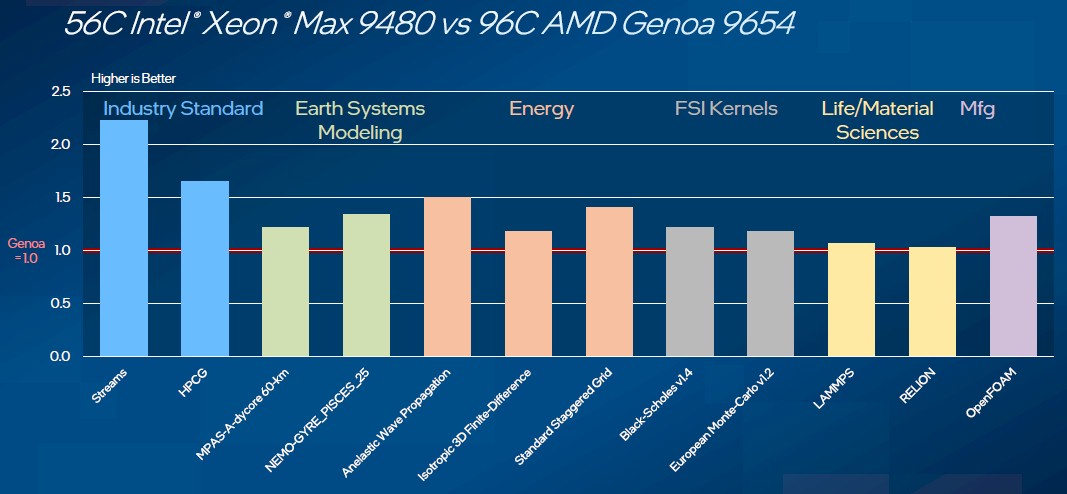

Intel tested certain HPC workloads on these 32-core systems, but for other HPC workloads that are bound by memory bandwidth, Intel pitted its 56-core Max Series CPU, which is based on Sapphire Rapids with HBM2e memory (rather than regular DDR5 main memory), against the 96-core Genoa Epyc chip using DDR5 memory.

The AMD chip has plain vanilla DDR5 memory only, and on the HPC runs with the Max Series CPUs – why can’t Intel just call these the Xeon Max series? – the machines do not have supplemental DDR5 memory added to the servers, which is a possible option. Rather, each Max Series CPU has a relatively small 64 GB of HBM2e stacked memory, which is kinda tight capacity-wise but which delivers 1,230 GB/sec of memory bandwidth. A single Sapphire Rapids Xeon SP socket has about 307 GB/sec of DDR5 memory bandwidth, and a single Genoa Epyc 9004 socket has about 461 GB/sec. So in the comparisons with the Intel Sapphire Rapids Max Series CPUs, Intel has a 2.7X advantage on memory bandwidth, but AMD has a 12X advantage on memory capacity (1.5 TB versus 128 GB across two sockets).

The Genoa chip used in the Intel benchmark runs has a dozen DDR5 memory controllers while the Sapphire Rapids chip only has eight, so on memory bound workloads on systems not using the HBM2e memory, the resulting AMD systems have a significant potential performance advantage.
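The per-socket DDR5 figures above follow from channel count times transfer rate. Here is a minimal sketch of that arithmetic, assuming DDR5-4800 memory moving 8 bytes per transfer on each channel and treating the dozen Genoa memory controllers as twelve channels; the 1,230 GB/sec HBM2e number is simply taken from the text. These are theoretical peaks, not measured results.

```python
# Peak theoretical DDR5 bandwidth per socket = channels x transfer rate x 8 bytes.
# Assumes DDR5-4800 (4,800 MT/s) and a 64-bit (8-byte) data path per channel,
# ignoring ECC bits and real-world efficiency losses.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, mts: int = 4800) -> float:
    """Peak bandwidth in decimal GB/sec for one socket."""
    return channels * mts * 1e6 * BYTES_PER_TRANSFER / 1e9


spr_ddr5 = peak_bandwidth_gbs(8)     # Sapphire Rapids: eight DDR5 channels
genoa_ddr5 = peak_bandwidth_gbs(12)  # Genoa: twelve DDR5 channels
max_hbm2e = 1230.0                   # Max Series HBM2e figure quoted in the text

print(f"Sapphire Rapids DDR5: {spr_ddr5:.1f} GB/sec")  # 307.2
print(f"Genoa DDR5:           {genoa_ddr5:.1f} GB/sec")  # 460.8
print(f"HBM2e vs Genoa DDR5:  {max_hbm2e / genoa_ddr5:.2f}X")  # 2.67X
```

The 12-to-8 channel ratio is where the 50 percent bandwidth edge for Genoa comes from when both machines run their DIMMs at the same speed.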

If Intel wanted to push it, as we pointed out in January in Building The Perfect Memory Beast, Intel could have added regular DDR5 memory and CXL extended memory on some of the PCI-Express slots, yielding 1,729 GB/sec of aggregate memory bandwidth (1.69 TB/sec) and matching the AMD machine’s 1.5 TB of capacity: 1 TB of regular DDR5 memory spread across the two Max Series processors, 128 GB of HBM2e memory on the two processors, and the remaining 384 GB in CXL memory DIMMs hanging off PCI-Express lanes. Admittedly, programming across those three memory tiers would be tricky.
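A quick check that the three tiers add up to the AMD machine’s capacity. This sketch uses 1,024 GB per TB, which is also the convention that turns 1,729 GB/sec into the 1.69 TB/sec figure; the tier sizes are the ones from the hypothetical configuration described above.

```python
# Capacity tiers in the hypothetical maxed-out two-socket Max Series configuration.
tiers_gb = {
    "DDR5 (two sockets)": 1024,
    "HBM2e (two sockets)": 128,
    "CXL memory on PCI-Express": 384,
}

total_gb = sum(tiers_gb.values())
print(f"Total capacity: {total_gb} GB = {total_gb / 1024:.1f} TB")  # 1536 GB = 1.5 TB

# The quoted aggregate bandwidth, expressed with binary (1,024) units
print(f"1,729 GB/sec = {1729 / 1024:.2f} TB/sec")  # 1.69 TB/sec
```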

On those server workloads where Intel can bring to bear its wider AVX-512 vector engines, its new AMX matrix engines, or any number of the accelerators for I/O, encryption, data management, and so forth, the Sapphire Rapids chips can hold their own or beat the Genoa Epycs.

It is not a coincidence, of course, that Intel is doing this the day before AMD is widely expected to launch its “Genoa-X” cache-boosted variant of the Genoa lineup as well as its 128-core “Bergamo” Epyc CPU, the former primarily aimed at HPC workloads and the latter aimed mostly at hyperscalers and cloud builders. Intel wants to be part of the conversation at the moment when AMD is going to shift the conversation.

Intel is sharing this preliminary performance data with The Next Platform, and therefore we are sharing it with you. Take it as you will, and realize that our purpose is to present all of the information we can gather on compute engine performance, and that we always recommend that, before you buy a lot of any CPU, you run your own benchmark tests on real systems to see how they stack up.

The Systems Under Test

Intel secured systems using the Genoa processors from Supermicro, which is unusual in the market in that it makes motherboards and other components for building systems as well as selling finished servers and fully integrated rack-scale systems. The Intel machine used in the generic workload tests had a pair of Intel Xeon SP-8462Y+ processors, each with 32 cores running at 2.8 GHz and a 300 watt thermal design point (TDP). This Sapphire Rapids server, a Supermicro SYS-221H-TNR, had 1 TB of main memory: 16 slots, each with 64 GB of DDR5 memory running at 4.8 GHz.

The AMD Epyc server had a pair of 32-core Epyc 9354 chips, which run at 3.25 GHz and which have a 280 watt TDP. That’s 16.1 percent more clocks and 6.7 percent lower wattage compared to the 32-core Sapphire Rapids chip. These AMD processors were plugged into a Supermicro AS-2025HS-TNR server, with its 24 memory slots configured with 64 GB of memory running at 4.8 GHz for a total of 1.5 TB of main memory. That gave the AMD machine a 50 percent memory capacity and memory bandwidth advantage, core for core.
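The percentages in the paragraph above can be reproduced from the stated specs. A minimal sketch, using base clocks and TDPs rather than measured power, and assuming (as the text does) that both machines run their DIMMs at the same speed so that bandwidth scales with channel count:

```python
# Core-for-core comparison of the two 32-core test systems, using the
# base clocks, TDPs, and memory configurations quoted in the text.
intel = {"ghz": 2.8, "tdp_w": 300, "dimms": 16, "dimm_gb": 64, "channels_per_socket": 8}
amd = {"ghz": 3.25, "tdp_w": 280, "dimms": 24, "dimm_gb": 64, "channels_per_socket": 12}


def pct_change(new: float, old: float) -> float:
    """Percentage difference of new relative to old."""
    return (new / old - 1.0) * 100.0


clock_pct = pct_change(amd["ghz"], intel["ghz"])
tdp_pct = pct_change(amd["tdp_w"], intel["tdp_w"])
capacity_ratio = (amd["dimms"] * amd["dimm_gb"]) / (intel["dimms"] * intel["dimm_gb"])
bandwidth_ratio = amd["channels_per_socket"] / intel["channels_per_socket"]

print(f"AMD clock advantage: {clock_pct:+.1f}%")  # +16.1%
print(f"AMD TDP difference:  {tdp_pct:+.1f}%")  # -6.7%
print(f"Capacity ratio: {capacity_ratio:.2f}X, bandwidth ratio: {bandwidth_ratio:.2f}X")  # 1.50X, 1.50X
```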

The storage and networking on the systems under test were not disclosed, but we surmised that a couple of flash cards and a couple of 10 Gb/sec Ethernet ports, plus perhaps 100 Gb/sec Ethernet ports for the HPC workloads, were used on the systems. We checked in with Intel to find out the specifics, and indeed, wherever possible, the machines had a single 1.92 TB flash drive from Samsung and an NVM-Express M.2 boot drive. Most of the machines do use one or two 10 Gb/sec Ethernet ports, with 100 Gb/sec Ethernet ports where appropriate.

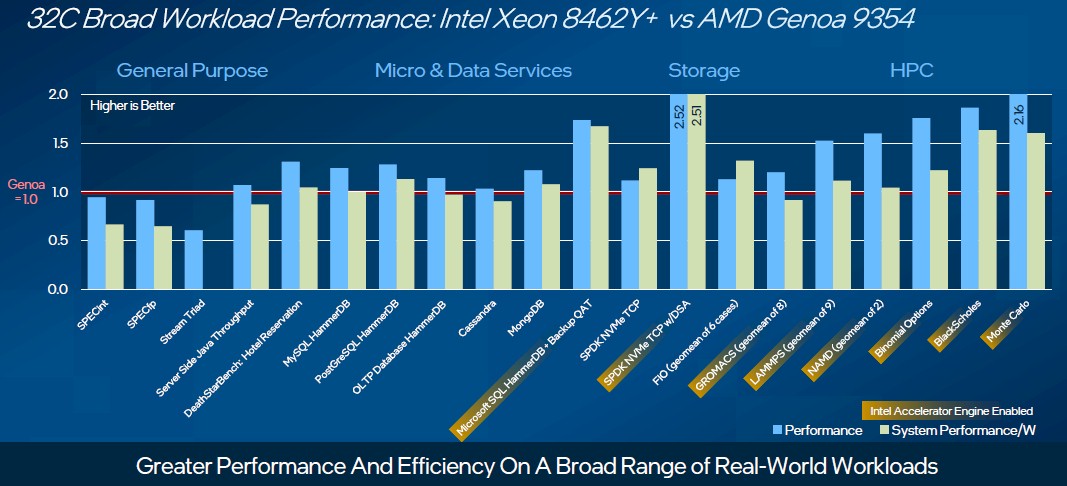

And now, without further ado, here is how the 32-core machines stack up against each other on the generic workloads, according to Intel:

As you can see, on the generic SPEC integer and floating point performance tests, the 32-core Sapphire Rapids chips deliver close to the same performance, but because they burn more watts, their system performance per watt is not as competitive. And it is no surprise that on the STREAM Triad memory bandwidth test, the Xeon SP-8462Y+ chip does not do as well as the AMD Epyc 9354. It is not clear why Intel did not show the system performance per watt bar in this chart, but it is probably on the order of 40 percent that of the AMD Epyc 9354. (We wish Intel gave out the actual performance numbers on all of these tests, not just relative performance.)
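For readers unfamiliar with STREAM Triad, here is a minimal sketch of what the kernel computes and how STREAM credits bytes to it: three 8-byte words per element (two reads and one write). This pure-Python version is only illustrative; it is bounded by the interpreter rather than by DRAM, so the printed GB/sec figure says nothing about the Intel or AMD results. The real benchmark runs the same loop in C or Fortran across all cores.

```python
import time
from array import array

# STREAM Triad: a[i] = b[i] + q * c[i] over arrays of 8-byte doubles.
# STREAM counts 3 words per element (read b, read c, write a).
WORD_BYTES = 8


def triad(a: array, b: array, c: array, q: float) -> None:
    """Reference Triad loop; real STREAM runs this in compiled, threaded code."""
    for i in range(len(a)):
        a[i] = b[i] + q * c[i]


def triad_bytes(n: int) -> int:
    """Bytes STREAM counts for one Triad pass over n elements."""
    return 3 * WORD_BYTES * n


n = 200_000
a = array("d", [0.0]) * n
b = array("d", [1.0]) * n
c = array("d", [2.0]) * n

start = time.perf_counter()
triad(a, b, c, 3.0)
elapsed = time.perf_counter() - start

# Interpreter-bound here, so this number is far below any memory system's limit.
print(f"{triad_bytes(n) / 1e6:.1f} MB counted, {triad_bytes(n) / elapsed / 1e9:.3f} GB/sec")
```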

What is clear from this chart is that the Sapphire Rapids chips can get within spitting distance of the AMD Genoas, core for core. AMD, of course, has more cores that it can bring to bear, but clock speeds scale down and memory bandwidth gets spread across more cores, so it is important not to simply multiply the performance of a 32-core chip by 3X to estimate the performance of a workload on a 96-core Genoa part. The result will depend on how sensitive the workload is to memory capacity and memory bandwidth as well as to compute capacity.
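One rough way to bracket the scaling, as a hypothetical two-ceiling model rather than anything Intel or AMD publishes: compute-bound work scales with cores times clock, while bandwidth-bound work is capped by the twelve DDR5 channels that the 32-core 9354 and the 96-core 9654 share. The sketch uses the base clocks quoted in this article (3.25 GHz and 2.4 GHz) and ignores turbo, cache, and power effects.

```python
# Hypothetical two-ceiling model for scaling a 32-core Genoa result to the
# 96-core part. Compute-bound work scales with cores x base clock; bandwidth-bound
# work is capped by the memory channels both parts share. Real workloads land in between.


def scale_estimate(cores_from, ghz_from, cores_to, ghz_to, bw_from_gbs, bw_to_gbs):
    """Return (compute-bound ratio, bandwidth-bound ratio) for moving between parts."""
    compute_ratio = (cores_to * ghz_to) / (cores_from * ghz_from)
    bandwidth_ratio = bw_to_gbs / bw_from_gbs
    return compute_ratio, bandwidth_ratio


naive = 96 / 32
# Epyc 9354: 32 cores at 3.25 GHz; Epyc 9654: 96 cores at 2.4 GHz; both 12 x DDR5-4800.
compute, bandwidth = scale_estimate(32, 3.25, 96, 2.4, 460.8, 460.8)

print(f"Naive core-count scaling: {naive:.2f}X")  # 3.00X
print(f"Compute-bound estimate:   {compute:.2f}X")  # 2.22X
print(f"Bandwidth-bound estimate: {bandwidth:.2f}X")  # 1.00X
```

Even this crude model shows why the naive 3X multiplier overstates the gain for most workloads.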

For workloads where Intel can bring acceleration to bear – the QAT accelerator for database backups, DSA with SPDK for NVM-Express flash storage, and AVX-512 for financial services and technical computing HPC applications – a 32-core Intel chip can beat a 32-core AMD chip, and for certain workloads, it looks like a 32-core part could give a 96-core Genoa part a run for its money. A 56-core or 60-core Sapphire Rapids chip could meet or beat a 96-core Genoa on storage and HPC workloads. We wish Intel had tested top bin parts, but Intel says it cannot get its hands on the most capacious Genoa parts.

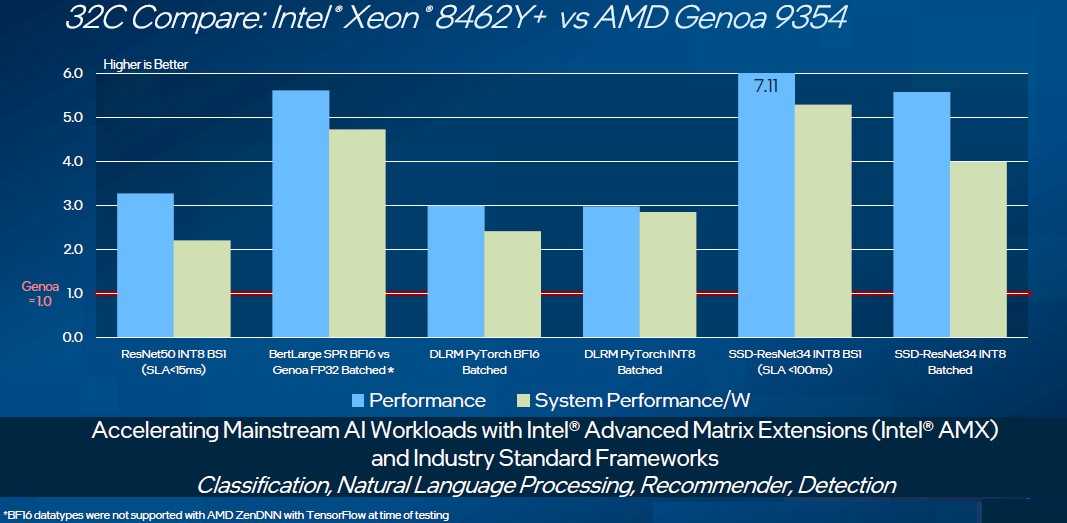

Here is how the Xeon SP-8462Y+ system stacks up against the AMD Genoa 9354 on AI inference workloads, which shows the benefits of the AMX matrix math unit added with the Sapphire Rapids chips:

Intel’s 32-core chip has a 3X to more than 7X performance advantage on a variety of AI inference workloads, although the gap narrows a little on a performance per watt basis because the Intel chips run hotter. (None of these Intel charts talk about pricing, of course.) Now we know why AMD will eventually be adding those hard-coded DSP-based AI engines from its Xilinx FPGAs to the Epyc line of CPUs in the “Turin” generation. . . .
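To see why the gap narrows only a little, divide the performance ratio by the power ratio. This is a minimal sketch that assumes TDP (300 watts for the Xeon, 280 watts for the Epyc) as a stand-in for power draw; Intel’s charts are based on system power, which this sketch does not have, so the exact figures will differ.

```python
# Converting a raw performance ratio into a performance-per-watt ratio.
# Assumption: TDP stands in for measured power; Intel's charts use system power.
INTEL_TDP_W = 300
AMD_TDP_W = 280


def perf_per_watt_ratio(perf_ratio: float, power_a: float, power_b: float) -> float:
    """Perf/watt of A relative to B, given A's performance relative to B."""
    return perf_ratio / (power_a / power_b)


for perf in (3.0, 7.0):
    ppw = perf_per_watt_ratio(perf, INTEL_TDP_W, AMD_TDP_W)
    print(f"{perf:.1f}X performance -> {ppw:.2f}X performance per watt")
# 3.0X -> 2.80X, 7.0X -> 6.53X
```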

FYI: The Intel performance on the BERT-Large test was so high because BF16 data types were not yet supported in AMD’s ZenDNN software stack for Genoa, and that gap will no doubt close once that software is updated.

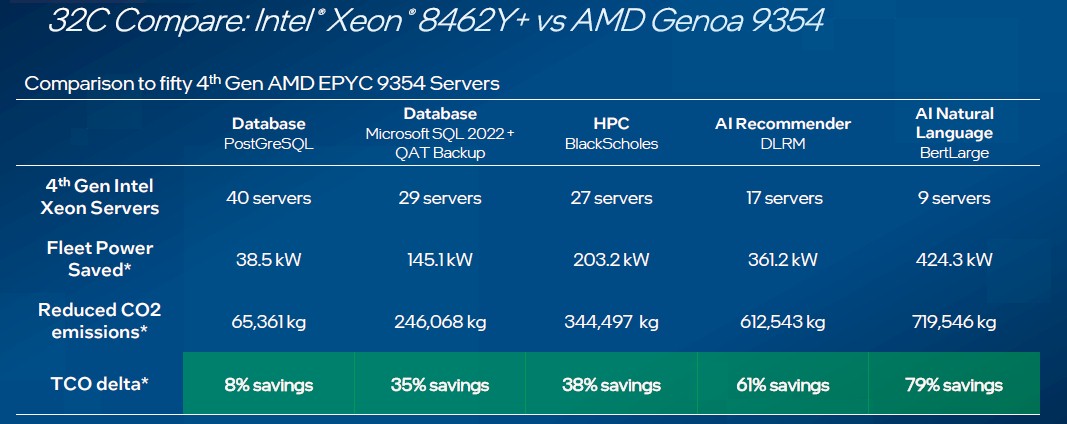

The way to think about the performance advantages, according to Intel, is to see how many two-socket 32-core Intel Sapphire Rapids servers it takes to support a given workload compared to a two-socket 32-core AMD Genoa server, and here is how Intel does this math:

The chart above assumes total cost of ownership over four years, with electricity costs set at 10 cents per kilowatt-hour and a datacenter power usage effectiveness (PUE) of 1.6, which is not all that great but is still seen in a lot of datacenters. (A PUE of 1.2 or 1.1 is becoming more common in new datacenter builds as electricity costs soar.)
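The server-count and electricity pieces of this kind of TCO math can be sketched as follows. The workload size, per-server throughput, and 1,000 watt power draw below are hypothetical placeholders, not Intel’s inputs; only the four years, 10 cents per kilowatt-hour, and PUE of 1.6 come from the chart. A full TCO model would also include server acquisition and other costs, which this sketch leaves out.

```python
import math

# Assumptions from Intel's chart: four years, $0.10 per kWh, PUE of 1.6.
HOURS_PER_YEAR = 24 * 365
YEARS = 4
PRICE_PER_KWH = 0.10
PUE = 1.6


def servers_needed(workload_units: float, units_per_server: float) -> int:
    """Whole servers required to cover a workload at a given per-server throughput."""
    return math.ceil(workload_units / units_per_server)


def energy_cost(servers: int, watts_per_server: float) -> float:
    """Facility electricity cost over the period, scaling IT power by PUE."""
    kwh = servers * watts_per_server / 1000 * PUE * HOURS_PER_YEAR * YEARS
    return kwh * PRICE_PER_KWH


# Hypothetical example: 10,000 units of work, 1,000 units and 1,000 W per server.
n = servers_needed(workload_units=10_000, units_per_server=1_000)
print(n, f"${energy_cost(n, 1_000):,.2f}")  # 10 $56,064.00
```

A faster server shrinks the fleet through `servers_needed`, while a hotter one raises the bill through `energy_cost`; the comparison Intel draws depends on which effect wins.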

That leaves HPC workloads that are not compute bound but memory bandwidth bound, and for those we have a comparison of a two-socket system using the 56-core Xeon Max 9480 against a similar system equipped with a pair of 96-core AMD Epyc 9654 processors. Take a look:

As we pointed out above, the Max Series CPU system has 128 GB of total HBM2e memory and 2.4 TB/sec of aggregate memory bandwidth, with cores that run at 1.9 GHz, while the AMD Genoa 9654 processors run at 2.4 GHz and have a total of 1.5 TB of main memory but only around 921 GB/sec of memory bandwidth. The AMD system’s cores run at a 26.3 percent higher clock speed than those of the Sapphire Rapids Max CPU, and it also has 1.7X as many cores. But the Intel system has 2.7X more memory bandwidth across its cores, and clearly, these things wash against each other. We would have expected the Sapphire Rapids Max CPU to deliver better oomph on the STREAM Triad and HPCG tests.
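The “wash” is easier to see side by side. A minimal sketch, assuming 1,230 GB/sec of HBM2e per Max socket and 460.8 GB/sec of DDR5 per Genoa socket (the per-socket figures used earlier in this article), with base clocks only:

```python
# Two-socket systems in the memory-bound HPC comparison, using figures from the text.
intel_max = {"cores": 2 * 56, "ghz": 1.9, "bw_gbs": 2 * 1230.0}
amd_9654 = {"cores": 2 * 96, "ghz": 2.4, "bw_gbs": 2 * 460.8}

core_ghz_ratio = (amd_9654["cores"] * amd_9654["ghz"]) / (intel_max["cores"] * intel_max["ghz"])
bw_ratio = intel_max["bw_gbs"] / amd_9654["bw_gbs"]
bw_per_core = {
    name: system["bw_gbs"] / system["cores"]
    for name, system in (("Xeon Max 9480", intel_max), ("Epyc 9654", amd_9654))
}

print(f"AMD aggregate core-GHz advantage: {core_ghz_ratio:.2f}X")  # 2.17X
print(f"Intel memory bandwidth advantage: {bw_ratio:.2f}X")  # 2.67X
for name, value in bw_per_core.items():
    print(f"{name}: {value:.1f} GB/sec per core")  # 22.0 and 4.8
```

Roughly 2.2X more raw compute on one side against roughly 2.7X more bandwidth on the other is why neither machine runs away with these tests.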

We wonder now how a Genoa-X system with vertical cache and a Genoa-Antares CPU-GPU system based on the Instinct MI300A compute engine with shared HBM3 stacked main memory will do on all of these memory bandwidth sensitive workloads.

Hopefully it won’t take another seven months to find out.

Initial Sapphire Rapids and Genoa supply stats run to date as of 6.3.23;

Sapphire Rapids;

MAX = 0.00069% at $1K AWP currently $11590 only 9480 5 core shows in channel

Platinum = 10.3% at $8811.28

Gold = 41.4% at $1766.56 subject XCL gold SKUs return to secondary + 15% prior 8 weeks

Silver = 14.2% at $692.16

W34xx_ = 2.2% at $2950.27

W24xx_ = 31.6%* at $1096.63

Full line $1K AWP = $2198.97

*Note channel secondary shows increasing trade-in W32xx_ @ and < 16C and W22xx all SKUs + 112% and 31% respectively prior eight weeks. Tim, outside of servers themselves, how about a report on SR accelerated development system options? Specifically the W: is it a box? Are they cards?

SR by core grade run to date;

| Cores | Share |
|---|---|
| 60 | 1.14% |
| 56 | 2.34% |
| 52 | 0.54% |
| 48 | 4.68% |
| 44 | 0% |
| 40 | 0% |
| 36 | 1.40% |
| 32 | 10.97% |
| 28 | 3.01% |
| 24 | 18.6% |
| 20 | 6.09% |
| 18 | 1.14% |
| 16 | 12.58% |
| 12 | 13.58% |
| 10 | 7.63% |
| 8 | 8.7% |
| 6 | 7.63% |

Note: strategically, Intel fills the SI channel with 12- to 24-core Gold 55xx and Silver 44xx parts, which equal 33.2% of total volume run to date.

Genoa;

| Cores | Share |
|---|---|
| 128 | tbd |
| 112 | tbd |
| 96 | 30.15% |
| 84 | 4.56% |
| 64 | 21.6% |
| 48 | 5.61% |
| 32 | 20.62% |
| 24 | 6.8% |
| 16 | 10.66% |

Strategically AMD continues to focus on top core count product @ 64C +

2P = 72.3%

1P = 17.6%

X = tbd

F = 1%

$1K AWP = $7143.43

See my Seeking Alpha comment line for related reporting;

https://seekingalpha.com/user/5030701/comments

Mike Bruzzone, Camp Marketing

“…because BF16 data types were not yet supported in the AMD Genoa AMD ZenDNN software stack yet”

Intel has bf16 support in the AMX matrix operations. AMD’s avx512 bf16 performance should still trail. Intel has presented comparisons of AMX with their own avx512 bf16 performance that you could use as a reference.

Great benchmarks and analysis! It seems that Sapphire Rapids is a proper contender for upcoming ’bouts of the Battle Royale … can’t wait to see Aurora slide into its glass slippers and moozy its gluteus maximuses on over to the Ballroom Blitz! El Capitan’s swashbuckling MI300A (with HBM if I remember) should retake the crown of this pirate HPC tango contest soon after Aurora, but the Xeons should still provide a nice break in metaphors and plotlines.

Also, it seems that Xeon Max with AMX and HBM could compete well with Fugaku on HPCG (better oomph expected as the article astutely notes!) — something to watch …

Putting aside the imminent release of the Genoa-X parts, it’s worth noting that Intel didn’t use AMD’s best 32 core chip in this comparison. The 9374F clocks nearly 20% faster than the 9354 featured in these tests. Most likely Intel’s performance gap would shrink but their efficiency gap would grow.

Intel also supports a gen3 “Crow Pass” Optane on SPR, but it looks like future memory expansion could be via the pcie/CXL in concert with IPUs. Emerald Rapids is rumored to have some extension to the SPR CXL, and Granite Rapids and Sierra Forest are rumored to implement the full CXL 2.0. Sierra Forest is coming in 1H 2024, according to Intel in their last earnings call.

https://videocardz.com/newz/intel-birch-stream-platform-details-for-future-granite-rapids-and-sierra-forest-cpus-leak-out