The National Center for Supercomputing Applications at the University of Illinois fired up its Delta system back in April 2022, and now it has been given $10 million by the National Science Foundation to expand that machine with an AI partition, appropriately called DeltaAI, that is based on Nvidia’s “Hopper” H100 GPU accelerators.

There are thousands of academic HPC centers around the world that have fairly modest to pretty big systems, and collectively they probably comprise – here is a wild guess because we have never seen such data – somewhere around two-thirds of the HPC capacity in the world. And that’s a wild guess because the Top500 supercomputing list is full of machines that do not do HPC as their day (and night) jobs and isn’t long enough to capture the details of all of the machines at these academic research centers.

It is with this in mind that we ponder what $10 million buys in terms of capacity these days.

The original Delta machine, which we profiled here and which cost $10 million as well, uses a mix of Apollo 2500 CPU nodes and Apollo 6500 CPU-GPU nodes from Hewlett Packard Enterprise, all lashed together with the “Rosetta” Slingshot Ethernet interconnect created by Cray and now controlled by HPE. There were 124 Apollo 2500 nodes with a pair of 64-core AMD “Milan” Epyc 7763 CPUs, 100 Apollo 6500 nodes with one of the same Milan CPUs and four 40 GB Nvidia “Ampere” A100 accelerators, and another 100 Apollo 6500 nodes with four Nvidia Ampere A40 accelerators, which are good for rendering, graphics, and AI inference. All of these machines had 256 GB of memory, which is a low amount for AI work but, on the two-socket CPU nodes, a reasonable if somewhat light ratio of 2 GB per core. (3 GB per core is better, and 4 GB per core is preferred.) The Delta system also had a testbed partition based on the Apollo 6500 enclosures that had a pair of the Milan CPUs and eight 40 GB Nvidia A100 SXM4 GPUs interlinked with NVSwitch fabrics and able to access 2 TB of main memory. This was clearly aimed at AI workloads.
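The memory-per-core ratios behind that assessment are easy to reproduce. Here is a minimal sketch that uses only the node descriptions above; the function name `gb_per_core` and the treatment of 2 TB as 2,048 GB are our own assumptions for illustration.

```python
def gb_per_core(memory_gb: float, sockets: int, cores_per_socket: int) -> float:
    """Return main memory per CPU core for a single node."""
    return memory_gb / (sockets * cores_per_socket)

# Apollo 2500 CPU node: two 64-core Milan CPUs, 256 GB
cpu_node = gb_per_core(256, sockets=2, cores_per_socket=64)

# Apollo 6500 GPU node: one 64-core Milan CPU, 256 GB
gpu_node = gb_per_core(256, sockets=1, cores_per_socket=64)

# Testbed node: two 64-core Milan CPUs, 2 TB (assumed here to be 2,048 GB)
testbed_node = gb_per_core(2048, sockets=2, cores_per_socket=64)

print(f"CPU node:     {cpu_node:.0f} GB per core")
print(f"GPU node:     {gpu_node:.0f} GB per core")
print(f"Testbed node: {testbed_node:.0f} GB per core")
```

By this arithmetic, the 2 GB per core figure applies to the two-socket CPU nodes, while the single-socket GPU nodes land at the preferred 4 GB per core.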

If you do all of the math on those CPUs and GPUs in the Delta system, you get a combined 6 petaflops across the vector engines that HPC workloads are tuned for and 131.1 petaflops for FP16 operations across the CPU vector engines and the GPU matrix math engines without sparsity turned on for AI workloads.

NCSA has not participated in the Top500 rankings of supercomputers since Cray built the hybrid CPU-GPU “Blue Waters” system back in 2012. That Blue Waters machine had a peak 13.1 petaflops at FP64 double precision, cost a whopping $188 million, and had 49,000 Opteron processors and 3,000 Nvidia GPUs. Delta had less than half the performance of Blue Waters on FP64 work, but 10X the oomph on FP16 math. (You would just quarter fill a vector to kluge FP16 support on the older machine.) And it obviously burns a lot less power and takes up a lot less space.
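For those who want to check the comparison, here is a rough sketch using only the peak figures and prices quoted above. It rests on one assumption of ours: reading the "quarter fill a vector" remark to mean that Blue Waters would run FP16 at its FP64 rate. The variable names are illustrative.

```python
# Peak figures and prices as quoted in this article
blue_waters_fp64_pf = 13.1
blue_waters_cost_musd = 188.0

delta_fp64_pf = 6.0
delta_fp16_pf = 131.1
delta_cost_musd = 10.0

# Assumption: quarter-filling a 64-bit vector lane with one FP16 value
# means FP16 throughput on Blue Waters equals its FP64 throughput.
blue_waters_fp16_pf = blue_waters_fp64_pf

fp64_ratio = delta_fp64_pf / blue_waters_fp64_pf
fp16_ratio = delta_fp16_pf / blue_waters_fp16_pf

# Millions of dollars per peak FP64 petaflops
blue_waters_cost_per_pf = blue_waters_cost_musd / blue_waters_fp64_pf
delta_cost_per_pf = delta_cost_musd / delta_fp64_pf

print(f"Delta vs Blue Waters, FP64: {fp64_ratio:.2f}X")
print(f"Delta vs Blue Waters, FP16: {fp16_ratio:.1f}X")
print(f"Blue Waters: ${blue_waters_cost_per_pf:.2f}M per FP64 petaflops")
print(f"Delta:       ${delta_cost_per_pf:.2f}M per FP64 petaflops")
```

Under that reading, the FP64 ratio comes out a bit under one half and the FP16 ratio almost exactly 10X, which matches the comparison above.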

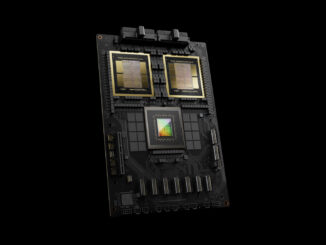

The $10 million DeltaAI upgrade, about which we know very little, looks like it is populating a bunch of those Apollo 6500s with newer “Hopper” H100 SXM5 GPU accelerators and hooking them into the Slingshot network. The award was announced here by the NSF and was announced there by the NCSA, and there is not a lot of detail. So we hauled out the Excel spreadsheet once again.

The DeltaAI award says: “The compute elements of DeltaAI will provide more than 300 next-generation Nvidia graphics processors delivering over 600 petaflops of half-precision floating point computing, distributed across an advanced network interconnect for application communications and access to an innovative, flash-based storage subsystem.”

When we do the math on that, if you have 38 servers with eight H100 SXM5 GPU accelerators, that is 304 GPUs and 601.6 petaflops peak at FP16 on the H100 Tensor Cores with sparsity on. So, assuming eight-GPU nodes, that is the smallest configuration that hits the datapoints for a DeltaAI configuration. The budgeting works out, too, if you assume NCSA got about 30 percent off on the GPUs and only burned 10 percent of the $10 million on networking. The DeltaAI partition, by the way, will add another 10.6 petaflops of FP64 vector performance on the CPUs and GPUs in those 38 nodes. So the total performance of the Delta+DeltaAI machine will be 16.6 petaflops peak at FP64 and 732.8 petaflops peak at FP16 precision for AI work.
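The node-count reasoning can be sketched in a few lines. This assumes eight GPUs per node and Nvidia's datasheet peak of 1,979 teraflops per H100 SXM5 for FP16 on the Tensor Cores with sparsity; the function name `smallest_node_count` is ours, and the real DeltaAI configuration has not been disclosed.

```python
H100_FP16_SPARSE_TF = 1979.0  # datasheet peak per H100 SXM5, FP16 Tensor Core, sparsity on
GPUS_PER_NODE = 8             # assumption: eight-way HGX-style nodes

def smallest_node_count(min_gpus: int, min_petaflops: float):
    """Find the fewest nodes giving MORE than min_gpus GPUs and min_petaflops of FP16."""
    nodes = 1
    while True:
        gpus = nodes * GPUS_PER_NODE
        petaflops = gpus * H100_FP16_SPARSE_TF / 1000.0
        if gpus > min_gpus and petaflops > min_petaflops:
            return nodes, gpus, petaflops
        nodes += 1

# The award text: "more than 300" GPUs and "over 600 petaflops" at half precision
nodes, gpus, fp16_pf = smallest_node_count(300, 600.0)
print(f"{nodes} nodes, {gpus} GPUs, {fp16_pf:.1f} petaflops FP16")

# Combined FP64 peak from the rounded figures quoted in this article
total_fp64_pf = round(6.0 + 10.6, 1)
print(f"Delta + DeltaAI FP64 peak: {total_fp64_pf} petaflops")
```

Any larger node count also satisfies the award's wording, so 38 is the smallest fit, not the only one. Adding the rounded FP16 figures quoted here (131.1 and 601.6) gives 732.7 petaflops; the 732.8 total presumably carries unrounded spreadsheet values.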

In the original “Delta” article (as noted by Eric), the machine had one MI100 node (among a bunch of A40 and A100 nodes, with EPYCs). That was possibly leveraged to get a competitive discount on the H100 upgrade (lest nVidia wanted them to go the MI300 route …) — nice Chicago-style poker bluff if it was!

Still, a mildly steeper discount (just a nudge really) could have helped NCSA-Delta pass the all-CPU Toki-Sora (A64FX, 16.6PF) more cleanly, and possibly even HiPerGator (17.2PF of EPYC+A100), and further establish the need for “everyone” to upgrade to the Hopper Stomper … could this be a case of “missed it by just *that* much”?