If Microsoft has the half of OpenAI that didn’t leave, then Amazon and its Amazon Web Services cloud division need the half of OpenAI that did leave – meaning Anthropic. And that means Amazon needs to pony up a lot more money than Google, which has also invested in Anthropic but which also has its own Gemini LLM, if it hopes to have more leverage – and get the GPU system rentals in return.

We live in strange times. Microsoft investing $13 billion in OpenAI – with a $10 billion promise last year – and now Amazon making good on its promise to invest $4 billion in Anthropic by kicking in the second tranche of $2.75 billion is a brilliant way to buy a stake in any AI startup. You get access to the startup’s models, you get a sense of their roadmap, and you get to be the first one to commercialize their products at scale.

As we have pointed out before, we would love to see how Microsoft and OpenAI and Amazon and Anthropic are booking these investments as well as licensing of LLMs and rental of machinery to train and run them in production as part of products. There is a danger of this looking like roundtripping, where the money just moves from the IT giant to the AI startup as an investment and then back again to the IT giant. (This kind of thing used to happen in the IT channel from time to time.) It would be enlightening to see how these deals are really structured. But there is a likelihood that they are really minority stakes in the AI startups for enormous sums and an actual exchange of goods and services on the part of both parties.

Hey, if you could get away with that, you would. And if you are Amazon/AWS or Microsoft/Azure, you would give Anthropic or OpenAI big bags of cash knowing full well that the vast majority of it would come back as sales of reserved GPU instances in the cloud. Well, in the case of OpenAI, some of that money may be used to make custom ASICs for AI inference and training ... which Microsoft is already doing itself with its Maia 100 line of chips.

Laissez Les Bon GPU Temps Rouler!

How much money are we talking about to train these AI models? A lot.

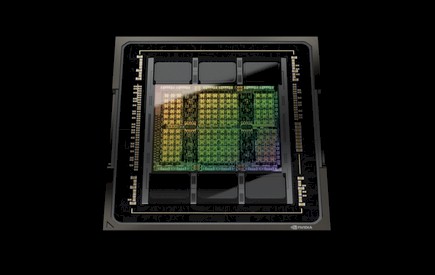

In his opening keynote at the GTC 2024 conference last week, Nvidia co-founder and chief executive officer Jensen Huang said that it took 90 days and 15 megawatts of juice to train the 1.8 trillion parameter GPT-4 Mixture of Experts LLM from OpenAI. The system used was a cluster of SuperPODs based on the “Hopper” H100 GPUs using InfiniBand outside of the node and NVLink 3 inside of the node to create an eight-GPU HGX compute complex.

Microsoft does not yet provide pricing on its ND H100 v5 instances, but it is $27.197 per hour for the eight-GPU ND96asr A100 v4 instance on demand and $22.62 per hour for a one year reserved “savings plan.”

We have to use AWS pricing as a guide to get Microsoft pricing for H100 instances. We do not believe that pricing will be substantially different between the two cloud builders for the H100 instances, even if they were for the A100s. There is too much demand and too much competition for them to get too far out of whack with each other.

On AWS, the p4de.24xlarge A100 instance, which is based on the same eight-way HGX A100 complex as the Microsoft ND96asr A100 v4 instance, costs $40.96 per hour on demand and $24.01 per hour with one year of reserved capacity. For the newer p5.48xlarge instance, which was launched last July and is based on essentially the same architecture but with an eight-GPU HGX H100 compute complex, we think it costs $98.32 per hour on demand, and we think a one-year reserved instance costs $57.63 per hour; we know that a three-year reserved price for this instance is $43.16 per hour.
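The one-year reserved estimate for the p5.48xlarge can be reproduced by applying the A100 instance's reserved-to-on-demand ratio to the H100 on-demand price. Here is a minimal sketch of that arithmetic, assuming that is how the estimate was reached; the function and variable names are ours, not anything from AWS.

```python
def estimate_reserved(on_demand_new: float, on_demand_ref: float, reserved_ref: float) -> float:
    """Scale a new instance's on-demand price by a reference instance's reserved/on-demand ratio."""
    return on_demand_new * (reserved_ref / on_demand_ref)

# Hourly prices quoted in the text, in dollars.
P4DE_ON_DEMAND = 40.96      # p4de.24xlarge (A100), on demand
P4DE_RESERVED_1YR = 24.01   # p4de.24xlarge (A100), one-year reserved
P5_ON_DEMAND = 98.32        # p5.48xlarge (H100), on demand

# Assumption: the H100 instance gets the same reserved discount as the A100 instance.
p5_reserved_1yr = estimate_reserved(P5_ON_DEMAND, P4DE_ON_DEMAND, P4DE_RESERVED_1YR)
print(f"Estimated p5.48xlarge one-year reserved price: ${p5_reserved_1yr:.2f} per hour")
```

If the real discount on H100 capacity differs from the A100 discount, the estimate moves with it.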

On AWS, you need 1,000 of these HGX H100 nodes to train GPT-4 1.8T MoE in 90 days. If you had 100 nodes, it would take about 900 days, and if you had 3,000 nodes, or around 24,000 H100 GPUs, it would take around 30 days. Time is definitely money here. With 1,000 nodes, a cluster of 8,000 H100 GPUs using the AWS p5.48xlarge instances would cost $124.5 million for those 90 days at the estimated one-year reserved price. If Microsoft holds its pricing advantage over AWS, then its instances will be 5.8 percent cheaper, or $117.3 million. To train GPT-4 1.8T MoE in 30 days instead of 90 days, you are talking about $351.8 million.
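The rental figures are node count times hours times hourly rate. The sketch below reproduces them under stated assumptions: the $57.63 rate is the estimated one-year reserved price, the Microsoft discount is taken from the A100 one-year prices quoted earlier, and the $351.8 million figure only falls out if the 3,000-node cluster is billed at the discounted rate for the full 90 days. That last point is our reading of the numbers, not something the text states, and all names are ours.

```python
HOURS_PER_DAY = 24

def rental_cost(nodes: int, days: int, rate_per_node_hour: float) -> float:
    """Total rental bill: node count x wall-clock hours x hourly rate per node."""
    return nodes * days * HOURS_PER_DAY * rate_per_node_hour

AWS_H100_RESERVED = 57.63  # $/hour, estimated one-year reserved p5.48xlarge price

# Microsoft's discount relative to AWS, from the A100 one-year prices in the text.
azure_discount = 1 - 22.62 / 24.01

aws_90_days = rental_cost(1_000, 90, AWS_H100_RESERVED)
azure_90_days = aws_90_days * (1 - azure_discount)

# Assumption: the 3,000-node cluster is paid for over the same 90-day window.
big_cluster = rental_cost(3_000, 90, AWS_H100_RESERVED) * (1 - azure_discount)

print(f"Microsoft discount: {azure_discount:.1%}")
print(f"1,000 nodes on AWS: ${aws_90_days / 1e6:.1f} million")
print(f"1,000 nodes at the discounted rate: ${azure_90_days / 1e6:.1f} million")
print(f"3,000 nodes at the discounted rate: ${big_cluster / 1e6:.1f} million")
```

Billed purely by node-hours, 3,000 nodes for 30 days would cost the same as 1,000 nodes for 90 days, so the premium for speed comes from how long the bigger cluster has to be paid for.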

That is the cost for one model, one time. OpenAI and Anthropic – and others – need to train many LLMs at large scale for a long time. They need to test out new ideas and new algorithms. At these prices, $4 billion only covers the cost of training around three dozen 2 trillion-ish parameter LLMs in a 90 day timeframe. As time goes on, and the Anthropic customer base grows and Amazon’s usage of Claude LLMs grows, inference will be a bigger part of that budget.

So you can see why anyone training generative AI at scale needs a partner with lots of hardware and deep pockets. Which explains why Google, Microsoft, AWS, and Meta Platforms will dominate the leading edge of models, and why Cerebras Systems needs a partner (G42) with deep pockets to help it build $1 billion worth of systems to prove out the architecture of its systems. As you can see, $1 billion or $4 billion or $13 billion doesn’t actually go very far on the cloud. And that is why Microsoft and AWS are taking stakes in OpenAI and Anthropic.

Here is another thing to consider: It only costs around $1.2 billion to own a machine with 24,000 H100 GPUs, so if you run the GPT-4 1.8T MoE model four times on a cloud, you might as well just buy the hardware yourself. Oh wait, you can’t. The hyperscalers and cloud builders have had a lock on GPU supplies; they are at the front of the Nvidia GPU allocation line, and you most certainly are not.

If you do the math on the cost of a 24,000 H100 system, that works out to around $46 per HGX H100 instance per hour to own it. Obviously, that hourly price does not include the cost of the datacenter wrapping around the machine, the power and cooling for that machine, the management of the cluster, or the systems software to make it ready to run LLMs.
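The roughly $46 figure can be reproduced if the purchase price is spread over a single year of wall-clock hours. The one-year window is our assumption, chosen because it matches the number in the text; longer windows are shown only for comparison, and the function name is ours.

```python
HOURS_PER_YEAR = 365 * 24

def owned_cost_per_node_hour(system_cost: float, gpus: int, gpus_per_node: int, years: float) -> float:
    """Hardware purchase price per node, spread evenly over every hour in the window."""
    nodes = gpus / gpus_per_node
    return system_cost / nodes / (years * HOURS_PER_YEAR)

SYSTEM_COST = 1.2e9   # dollars, from the text
GPUS = 24_000         # H100 GPUs, from the text
GPUS_PER_NODE = 8     # one HGX H100 complex per node

# Assumption: one year reproduces the article's figure; three and four years are illustrative.
one_year = owned_cost_per_node_hour(SYSTEM_COST, GPUS, GPUS_PER_NODE, years=1)
for years in (1, 3, 4):
    cost = owned_cost_per_node_hour(SYSTEM_COST, GPUS, GPUS_PER_NODE, years)
    print(f"{years}-year window: ${cost:.2f} per node per hour")
```

As the paragraph above says, none of these numbers include the datacenter, power, cooling, management, or systems software.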

Now, with the recently launched “Blackwell” GPUs, Huang told CNBC that these devices would cost between $30,000 and $40,000, which we presume is a list price from Nvidia, not the street price, which is almost certainly going to be higher. We think that the list price of the H100 was around $21,500, and that the top-end B200 will deliver around 2.5X the performance at the same precision. That is 2.5X the oomph for 1.9X the price, which is only a 26 percent improvement in price/performance at the same precision on the tensor cores. (We still don’t know what is going to happen on the CUDA cores in Blackwell.) Obviously, if you shift from FP8 to FP4 precision for inference, then it is a price/performance increase of 63 percent.
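The price/performance percentages can be checked by comparing dollars per unit of performance. This is a sketch under two assumptions of ours: the B200 is priced at the top of the quoted range ($40,000), and dropping from FP8 to FP4 doubles throughput, taking the speedup from 2.5X to 5X.

```python
def price_perf_improvement(old_price: float, new_price: float, speedup: float) -> float:
    """Fractional drop in dollars per unit of performance when moving to the new part."""
    old_cost_per_perf = old_price / 1.0      # old part is the 1.0X performance baseline
    new_cost_per_perf = new_price / speedup
    return 1 - new_cost_per_perf / old_cost_per_perf

H100_PRICE = 21_500   # estimated list price from the text
B200_PRICE = 40_000   # assumption: top of the $30,000 to $40,000 range

price_ratio = B200_PRICE / H100_PRICE
same_precision = price_perf_improvement(H100_PRICE, B200_PRICE, speedup=2.5)
# Assumption: halving precision from FP8 to FP4 doubles throughput.
fp4_inference = price_perf_improvement(H100_PRICE, B200_PRICE, speedup=5.0)

print(f"Price ratio: {price_ratio:.1f}X")
print(f"Same precision: {same_precision:.0%} better price/performance")
print(f"FP8 to FP4: {fp4_inference:.0%} better price/performance")
```

A B200 priced lower in the quoted range would make both improvements larger.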

All of this explains why the cloud builders and the dominant AI startups are not-so-strange bedfellows. As part of the deal, Anthropic is going to port its Claude family of LLMs to the Trainium and Inferentia custom ASICs designed by AWS for its internal use and for cloud customers. AWS contends that it can drop the cost of running generative AI by 50 percent or more compared to GPUs, and that is clearly going to help not only drive adoption of generative AI on the Bedrock service, which now has over 10,000 customers and which just went into production in October 2023, but give parent Amazon an LLM – Claude 3 and later generations – and a hardware platform it controls that it can use to add GenAI to its many applications and business lines.

While we are thinking about the cloud builders and their AI startup LLM partners, we wonder if there is not another kind of financial back-and-forth going on.

If we were OpenAI or Anthropic, we would not only want to license our LLMs to Microsoft and AWS, but we might also ask for a revenue sharing deal as GenAI functions are added to applications. This might be hard to manage and police, of course, but we think ultimately token generation counting is going to have to be done so revenue can scale with use. We do not think OpenAI or Anthropic is foolish enough to give their cloud partners cheap, perpetual licenses to their LLMs. But, then again, with GPU hardware being so hard to come by at scale, maybe they had no choice for these special cases.