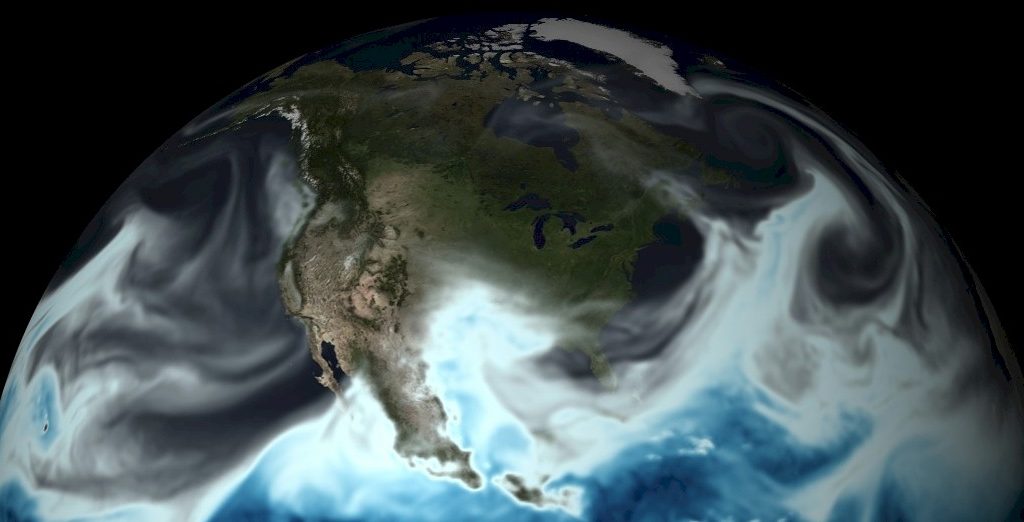

All of the weather and climate simulation centers on Earth are trying to figure out how to use a mixture of traditional HPC simulation and modeling with various kinds of AI prediction to create forecasts for both near-term weather and long-term climate that have higher fidelity and go out further into the future.

The National Oceanic and Atmospheric Administration in the United States is no exception, but it is unique in that it has just been allocated $100 million from Congress and the Biden administration, through the Bipartisan Infrastructure Law and the Inflation Reduction Act, to upgrade its research supercomputers at its HPC center in Fairmont, West Virginia.

That sounds like a lot of money for an HPC system, and it is, but this $100 million represents only a fraction of the money that Congress has allocated towards weather and climate issues through these two laws.

The Bipartisan Infrastructure Law, signed by President Biden in November 2021, has $108 billion to support transportation and related infrastructure, including weather and climate forecasting and mitigation, over five years. NOAA has been allocated three tranches of funds under this law: $904 million for climate data and services, $1.47 billion for natural infrastructure projects to rebuild American coastlines, and $592 million to steward fisheries and other protected resources.

The Inflation Reduction Act also included $2.6 billion for rebuilding and fortifying coastal communities and $200 million for climate data and services.
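To put the $100 million in context, here is a quick tally of the NOAA line items named above. This is a back-of-the-envelope sketch that only sums the figures quoted in this article; it assumes the line items do not overlap and that the $100 million HPC upgrade is paid out of them rather than on top of them, which NOAA has not spelled out.

```python
# Back-of-the-envelope tally of the NOAA allocations quoted in this article,
# in millions of US dollars. Assumption: the line items do not overlap and the
# $100 million HPC upgrade is paid out of them rather than on top of them.
BIL_TO_NOAA = {
    "climate data and services": 904,
    "natural infrastructure (coastlines)": 1_470,
    "fisheries and protected resources": 592,
}
IRA_TO_NOAA = {
    "coastal communities": 2_600,
    "climate data and services": 200,
}
HPC_UPGRADE = 100  # research supercomputer upgrade at Fairmont


def percent_of(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


bil_total = sum(BIL_TO_NOAA.values())
ira_total = sum(IRA_TO_NOAA.values())
combined_total = bil_total + ira_total

print(f"BIL to NOAA: ${bil_total:,} million")  # $2,966 million
print(f"IRA to NOAA: ${ira_total:,} million")  # $2,800 million
print(f"HPC upgrade share: {percent_of(HPC_UPGRADE, combined_total):.1f}%")  # 1.7%
```

By this rough count, the supercomputer money is less than 2 percent of the NOAA line items in the two laws.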

NOAA has both production and research systems, and they are funded separately. In the latest rounds of funding, General Dynamics Information Technology (GDIT) has been the primary contractor. NOAA’s Weather and Climate Operational Supercomputing System (WCOSS) got its latest twin pair of HPC systems, nicknamed “Cactus” and “Dogwood,” in June 2022. These machines drive the national and regional weather forecasts for the National Weather Service, which in turn feeds forecast information to AccuWeather, The Weather Channel, and myriad other weather services.

The new “Rhea” supercomputer will be installed at the NOAA Environmental Security Computing Center (NESCC) in Fairmont, which operates under the auspices of the Research and Development HPC System (RDHPCS) office.

The Rhea system will be a follow-on to the “Hera” system installed at the Fairmont datacenter in June 2020. Hera is a Cray CS system with a total of 63,840 cores and a peak FP64 performance of 3.27 petaflops, of which 2 petaflops came from GPU accelerators. Hera was also integrated by General Dynamics, and it was one of five research supercomputers that NOAA operated at the time, with the others at HPC centers in Boulder, Colorado; Princeton, New Jersey; Oak Ridge, Tennessee; and Mississippi State University in Starkville, Mississippi.

Four years ago, those five research systems had an aggregate performance of 17 petaflops. Most of that capacity came from CPUs, with a healthy dose of GPU nodes either inside these clusters or alongside them. Hera has a Lustre file system built by DataDirect Networks (DDN) with 18.5 PB of capacity.

A statement put out by NOAA says that Rhea will add about 8 petaflops of total compute (presumably at FP64 precision) to the organization’s current capacity of about 35 petaflops across those five research facilities. We have learned from sources inside NOAA that another machine, nicknamed “Ursa,” is expected to be installed sometime this winter. We also learned that, depending on how the supply chain and the new datacenter being built in Fairmont – which is just a few miles down Interstate 79 from Prickett’s Fort State Park, constructed by some ambitious New Jersey and Delaware folk at the confluence of Prickett’s Creek and the Monongahela River in 1774 – treat NOAA, the organization will have around 48 petaflops of aggregate capacity after Rhea and Ursa are installed.
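NOAA has not said how big Ursa is, but the figures above let us back it out by subtraction. The sketch below is our own arithmetic on the rounded numbers quoted in this article, not anything NOAA has stated, so the implied Ursa figure is only good to a petaflop or two.

```python
# Rough capacity bookkeeping for NOAA's research systems, in FP64 petaflops.
# All inputs are the approximate figures quoted in this article; the Ursa
# number is inferred by subtraction, not something NOAA has stated.
CAPACITY_2020_PF = 17.0         # aggregate across the five research sites in 2020
CURRENT_PF = 35.0               # "about 35 petaflops" today
RHEA_PF = 8.0                   # "about 8 petaflops" added by Rhea
AFTER_RHEA_AND_URSA_PF = 48.0   # "around 48 petaflops" once both are installed


def implied_ursa_pf(current: float, rhea: float, target: float) -> float:
    """Capacity Ursa must add for current + Rhea + Ursa to reach target."""
    return target - current - rhea


ursa = implied_ursa_pf(CURRENT_PF, RHEA_PF, AFTER_RHEA_AND_URSA_PF)
growth = AFTER_RHEA_AND_URSA_PF / CAPACITY_2020_PF

print(f"Implied Ursa contribution: ~{ursa:.0f} PF")  # ~5 PF
print(f"Growth since 2020: ~{growth:.1f}x")          # ~2.8x
```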

The Fairmont datacenter was built in 2010, and as part of the $100 million allocation, GDIT is subcontracting the construction of a modular datacenter at NESCC. We presume the new site will be designed so that additional modular datacenters can be added over time, with the machines interconnected as needed. iM Data Centers is a subcontractor to GDIT for this part of the project.

NOAA has been vague about the performance of the Rhea machine, and for good reason. The machine will be installed next year and will hopefully be operational in the fall of 2025. But as we know, supply chains are still a little wonky, and parts can change or be in short supply and delay projects. The people at NESCC don’t want to jinx anything or make promises that are too precise.

What we can tell you is that Rhea will be based on servers from Hewlett Packard Enterprise – possibly Cray CS chassis and racks like the existing Hera system, but NOAA is not saying – and the primary compute nodes will be two-socket servers using the 96-core versions of the impending “Turin” Epyc 9005 processors from AMD. Each Rhea node will have 768 GB of memory, or 4 GB per core across its 192 cores, which is pretty decent for an HPC system, and the nodes will be interlinked with 200 Gb/sec (NDR200) ports on a Quantum-2 InfiniBand fabric from Nvidia. This network will use a fat tree topology with full bisection bandwidth.
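For readers wondering what “full bisection bandwidth” implies for the network, here is a minimal sketch of how a non-blocking two-tier fat tree (leaf and spine) is sized: every leaf switch sends as many links up to the spines as it has node-facing ports, so traffic between any two halves of the machine is never oversubscribed. The switch radix of 64 ports and the 1,000-node count are hypothetical placeholders; NOAA has not disclosed Rhea’s node count or how its fabric is cabled, and the spine count here is simple port counting.

```python
import math
from dataclasses import dataclass


@dataclass
class FatTree:
    leaves: int
    spines: int
    max_nodes: int  # largest node count this two-tier design can reach non-blocking


def size_two_tier_fat_tree(nodes: int, radix: int) -> FatTree:
    """Size a non-blocking (full bisection) two-tier fat tree.

    Each leaf switch splits its ports evenly: radix // 2 down to nodes and
    radix // 2 up to spines, so uplink bandwidth equals downlink bandwidth.
    """
    if radix < 2 or radix % 2:
        raise ValueError("radix must be an even number >= 2")
    down = radix // 2
    # A fully built two-tier tree has `down` spines, each with `radix` ports,
    # so it can hold up to `radix` leaves of `down` nodes each: radix**2 / 2.
    max_nodes = radix * down
    if nodes > max_nodes:
        raise ValueError(f"{nodes} nodes need a third tier at radix {radix}")
    leaves = math.ceil(nodes / down)
    # Port counting only: total leaf uplinks divided by ports per spine,
    # assuming the uplinks can be spread evenly across the spines.
    spines = math.ceil(leaves * down / radix)
    return FatTree(leaves=leaves, spines=spines, max_nodes=max_nodes)


# Hypothetical example: 1,000 nodes on 64-port switches.
print(size_two_tier_fat_tree(nodes=1_000, radix=64))
# FatTree(leaves=32, spines=16, max_nodes=2048)
```

The design choice that buys full bisection is the even split of each leaf’s ports; a cheaper, oversubscribed fabric would give each leaf more node-facing ports than uplinks.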

A subset of the Rhea nodes will be equipped with Nvidia “Hopper” H100 GPUs with 96 GB of memory. NOAA is not being precise about how the flops will shake out with Rhea. But with Hera, the ratio of CPU compute to GPU compute was 1.27 petaflops to 2 petaflops, and if that ratio holds for Rhea’s roughly 8 petaflops, then there will be about 3.1 petaflops of Turin CPU compute and 4.9 petaflops of H100 GPU compute at FP64 precision.
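The CPU and GPU estimate above is a simple proportional split. The sketch below reproduces it under the same assumption, namely that Rhea keeps Hera’s CPU-to-GPU peak flops ratio, which is our guess and not a NOAA specification.

```python
# Split Rhea's ~8 PF (FP64) between CPUs and GPUs, assuming it keeps Hera's
# CPU:GPU peak-flops ratio. This is an assumption, not NOAA's spec.
HERA_CPU_PF = 1.27  # Hera's 3.27 PF total minus its 2 PF of GPU compute
HERA_GPU_PF = 2.0
RHEA_TOTAL_PF = 8.0


def split_by_ratio(total: float, cpu_part: float, gpu_part: float) -> tuple[float, float]:
    """Divide total in proportion cpu_part : gpu_part."""
    whole = cpu_part + gpu_part
    return total * cpu_part / whole, total * gpu_part / whole


cpu_pf, gpu_pf = split_by_ratio(RHEA_TOTAL_PF, HERA_CPU_PF, HERA_GPU_PF)
print(f"Turin CPUs: ~{cpu_pf:.1f} PF, H100 GPUs: ~{gpu_pf:.1f} PF")
# Turin CPUs: ~3.1 PF, H100 GPUs: ~4.9 PF
```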

For Rhea’s storage, NOAA is tapping DDN for a Lustre parallel file system, as it did with Hera. As at many HPC centers these days, NOAA is also going to take a relatively small all-flash NFS array from Vast Data out for a spin.

It is not clear precisely how the $100 million granted to NOAA under the two laws mentioned above is being allocated. We presume that this funding includes the cost of the Rhea system, the modular datacenter to house it, and the preparation of the site for a few more modular datacenters to eventually be built there. It probably also covers electricity and cooling for a certain number of years, and it might include the cost of the Ursa system as well. We think $100 million should buy a fair amount of flops across CPUs and GPUs, and we only hope that NOAA can do the hard work of integrating AI into weather and climate models to make these forecasts better in terms of resolution and reach into the future.

This is what supercomputers are for, and we think NOAA should have gotten a lot more money to build even larger clusters to push the weather and climate envelopes. But in an uncertain economic and political world, NOAA has reason to be grateful, and this is a good start.