Brad McCredie likes engines, and more importantly, he likes to make them go fast. His love of Dodge Challengers – he owns several, including a Hellcat – is one manifestation of his need for speed. So is his work steering the design of IBM Power CPUs over the decades and, in the past several years, helping AMD deliver its two most recent generations of Instinct GPUs as its corporate vice president of GPU platforms.

As far as we know, AMD has just turned in a gangbuster year for GPU sales, thanks in large part to the adoption of its “Aldebaran” MI200 series and, more recently, its “Antares” MI300 series. We expect AMD to have sold more than $5 billion in GPU accelerators into the datacenter in 2024, somewhere close to 10X the revenues it derived from GPUs in 2023. AMD has not yet put out a forecast for datacenter GPU sales for 2025, but we expect it to do so when it reports its fourth quarter 2024 financial results on February 4. We would not be surprised to see revenues this year double or triple, given the demand for accelerators and the dearth of supply.

We sat down recently with McCredie to talk some shop on accelerator engines and what may be in store for future AMD GPUs.

Timothy Prickett Morgan: On the most recent Top500 supercomputer rankings from November 2024, if you just look at new machines added to the list, which is how I am starting to analyze the Top500 these days, systems with the combination of AMD GPUs and AMD CPUs represented 72 percent of peak theoretical FP64 performance. Machines using a variety of CPUs plus Nvidia GPUs represented 27.2 percent of the peak FP64 oomph. This was the first time we have seen AMD beat Nvidia, and this was due in large part to the “El Capitan” supercomputer at Lawrence Livermore and its smaller siblings.

On the prior June 2024 rankings, a little more than 54 percent of the new capacity at FP64 precision came from machines using a combination of Nvidia “Grace” CPUs and “Hopper” GPUs, and other machines using a variety of CPUs and Nvidia GPUs comprised another 25.6 percent of the new FP64 capacity.

Is this back and forth between Nvidia and AMD a leading indicator that the upper echelons of the HPC market will normalize towards half and half at some point?

Brad McCredie: I think that HPC capability does need to be invested in. You have to design in double precision and double precision data paths. You have to do things to support the HPC ecosystem. You can give the HPC ecosystem something else and tell them to deal with it, and I think that’s happened with other vendors in the past. We are choosing to design for HPC customers. And if you look at our roadmap, we have really robust double precision math all the way out.

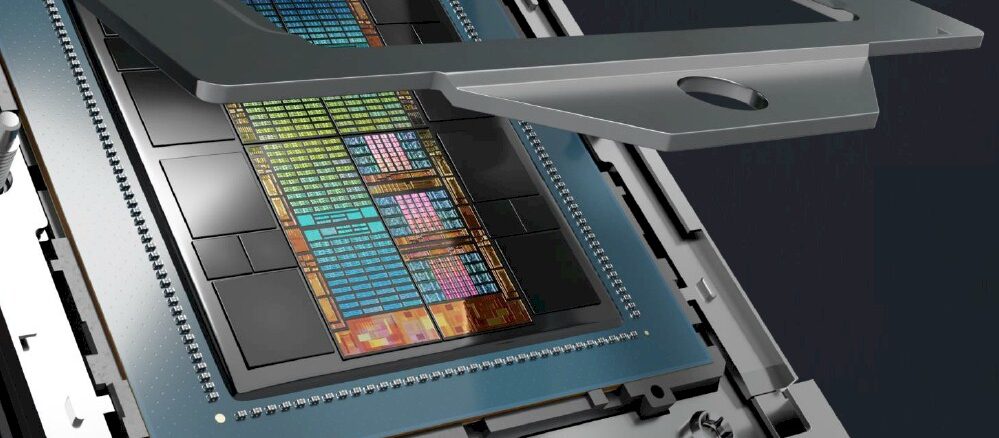

By the way, our chiplet architecture makes that decision easier for us. We are not building large, monolithic chips, and this gives us a lot of flexibility to cater to different needs. There are going to be a couple of things that will be very interesting as you start looking forward. HPC is so important in the world, and the machines need to be Swiss Army knives. They have got to do AI, they have got to do HPC, and they have got to do both well. With the chiplet architecture, we can actually design these machines with various ratios of these two types of compute.

TPM: Are you going to re-architect for that? Because current GPU designs do not do this. They have vector and matrix math in specific capacities in a chiplet and you scale up the chiplets in a socket. You could break these two into separate chiplets and dial them up and down separately. . . .

Brad McCredie: We are shifting pieces of the architecture to enable us to have that flexibility.

TPM: Interesting. My observation, at least this time around, would be that AMD is designing a device that’s great at HPC as we know it – 64-bit precision and 32-bit floating point – and that can do a good job on AI. By contrast, Nvidia is designing an AI processor that is good enough for HPC.

A “Blackwell” B200 delivers 45 teraflops at FP64 precision on the tensor cores – and we don’t even know what the vectors in the CUDA cores do in terms of FP64, or how many CUDA cores are on each Blackwell die. A single “Hopper” H100 does 33.5 teraflops on its CUDA vector cores and 67 teraflops on its tensor cores. Nvidia has FP8 and FP4 flops going through the roof for AI workloads, but has cut back on FP64 radically in the Blackwell socket compared to the Hopper socket.

Brad McCredie: Our take is that if you have the right chiplet strategy, you don’t have to polarize that decision. I think not having to make that choice is the key.

TPM: Well, the Instinct MI300X has 81.7 teraflops of FP64 on the vectors and 163.4 teraflops on the tensor cores, so that is a big advantage for straight HPC workloads.

I don’t think Nvidia is as worried about the HPC community because Hoppers are still available, much as the “Kepler” K80 remained available when the “Maxwell” GPUs, the first accelerators that Nvidia created for AI workloads, came out more than a decade ago.

Brad McCredie: I think you have to give the HPC community the hardware that they need to do their computations. So you have to look at the data formats between AI and HPC. There’s a difference there. The system structures are changing as well between the two. And I think some flexibility there is important as well.

I think everybody loves having the network hanging off of the accelerator. I think we need some flexibility between a flatter, broader network that HPC likes compared to the pod architecture that AI likes. Creating some flexibility between these options is something we are going to be looking at to enable systems optimized for various workloads.

TPM: Can you ramp up the amount of Infinity Fabric coming off of the GPUs? Because that is an area where I wish there was more. Looking at the benchmarks, that bandwidth coming off the accelerator seems to be more important for AI training than AI inference. NVLink and NVSwitch didn’t seem to help all that much on certain AI inference workloads in the tests you have done compared to using Infinity Fabric.

In any event, I can envision that one of the dials you might turn on a chiplet design is to put a lot more Infinity Fabric on a training GPU than on an inference GPU, keeping everything else more or less the same, so you can cross-link more devices and have a bigger memory domain and higher bandwidth links between devices. Or is that just too much change?

Brad McCredie: AI is obviously an extremely large market, and there are a lot of things going on in AI. More and more papers are being written about it, and you really can break inference into prefill and decode – and they have different compute needs. The general thought is that decode is much more memory bandwidth heavy and prefill is much more compute heavy. Each one has various memory bandwidth and compute needs . . . .

TPM: . . . so you can make a workflow on a device or a workflow across different devices on a system board. You pour the data in one end, it gets accelerated here when it needs it, and then it gets passed over to there . . .

Brad McCredie: Well, interesting things are happening is all I will say. And I think this will all have an impact on architectures as we go forward.
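To put rough numbers on McCredie’s point that decode leans on memory bandwidth while prefill leans on compute, here is a back-of-envelope roofline sketch. It is our own simplification, not AMD’s analysis: it assumes a dense layer with FP16 weights (2 bytes each), counts one multiply-add (2 flops) per weight per token, ignores activations and the KV cache, and uses a hypothetical ridge point of 250 flops per byte to stand in for an accelerator’s peak flops divided by its memory bandwidth. The function names are illustrative.

```python
# Back-of-envelope roofline sketch (hypothetical model, not AMD's analysis).
# Assumptions: dense layer, FP16 weights (2 bytes each), weights read once per
# pass, 2 flops (one multiply-add) per weight per token; activations and the
# KV cache are ignored, which understates decode's memory traffic.

def weight_intensity(tokens_per_pass: int, bytes_per_weight: float = 2.0) -> float:
    """Flops performed per byte of weights read in one pass over the layer."""
    flops_per_weight = 2.0 * tokens_per_pass
    return flops_per_weight / bytes_per_weight


def bound_by(intensity: float, ridge_point: float) -> str:
    """Below the ridge point a kernel is memory-bound; at or above it, compute-bound."""
    return "memory bandwidth" if intensity < ridge_point else "compute"


if __name__ == "__main__":
    RIDGE = 250.0  # hypothetical flops/byte; real accelerators differ
    phases = [("decode, one token", 1), ("prefill, 2,048-token prompt", 2048)]
    for phase, tokens in phases:
        ai = weight_intensity(tokens)
        print(f"{phase}: {ai:.0f} flops/byte, bound by {bound_by(ai, RIDGE)}")
```

Decoding one token at a time does roughly 1 flop for every byte of weights it reads, far below the assumed ridge point, while prefill reuses each weight across every token in the prompt. Batching many decode requests together raises their intensity, which is one reason the two phases can call for different ratios of compute to memory bandwidth.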

TPM: I also think it is time to break the tyranny of having the memory so close to the GPU using electric wires. I would love for more memory to be able to be hooked into a GPU from a slightly farther distance using optical links rather than having to pay the higher costs of stacking that memory up and keeping it a few millimeters away. Thoughts?

Brad McCredie: It’s a challenge, to be sure. If you think about how much we’re packing into a GPU, we have blown through the reticle limit on the base silicon. So all of these devices are getting bigger, and cooling is becoming critical.

TPM: Which is why I said that I want the distance between the memory and the compute device to get larger, so we don’t have these secondary heating effects that can corrupt the memory.

Brad McCredie: What you are describing is a hairball, and it is not the first time the conversation has been had. [Laughter]

That was not an insult. I know that you know. But if you look at the things that have advanced computing in the past, I think we could have a great discussion debating how much performance came from the transistor speeding up versus how much came through integration. I think it would be a very good discussion. On CPUs, the caches used to be separate, and then the northbridge and the southbridge came onto the die. How much of the performance came from just getting all those components onto the same die, versus from the transistor wiggling faster?

I think betting against integration and keeping things close is a tough bet to place. I think we’re going to still continue to find more stacking. I think we’re going up instead of out, but don’t get the wrong idea, I don’t have anything written in stone tablets. There’s still going to be a place for faster, lower power interconnects, no matter what. The question is: Where are we going to draw the dividing lines and where are we going to put the interfaces?

TPM: That decision will be driven by money. . . .

Brad McCredie: That decision is going to be driven by performance, which, to me, still translates straight to money.

TPM: OK, here’s a question that has nothing specific to do with technology. You steered Power CPU design at IBM for a long time, and you have steered a few generations of AMD GPUs. Are you having fun?

Brad McCredie: Yes, I am having fun. Obviously, building CPUs is cool, but there was so much code that had gone before and all these legacy things that hamstrung our efforts. But this AI workload is incredible, and every bit of network bandwidth, network latency, compute capacity and performance, and memory capacity and performance – everything you put into the design gets consumed. I have never seen a workload like this.

TPM: Is it getting harder to make these compute engines?

Brad McCredie: Look at all of the microcosms for our industry. Obviously technology scaling – Moore’s Law and blah, blah, blah – did it get harder? Yes, and the process guys had to make FinFET 3D transistors. But this industry is just so amazing. Of course we have to find new ways to do things even at the higher levels. But, you know, we are bringing in more networking, and that is because we are parallelizing more for the first time. We finally have got a really parallel workload.

We are going for the 3D packaging to get more silicon area into a space and then bringing in the cooling. I don’t know if harder is the right word, but we are doing it in different ways. But here is the thing. As my college professor used to always say: “Always stay close to performance, Brad, because everybody always needs to go faster.” And so every day, we are just finding new ways to go faster.
