It is often said that companies – particularly large companies with enormous IT budgets – do not buy products, they buy roadmaps. No one wants to go to the trouble of optimizing software for something that turns out to be a one-off product, forcing them to port code and tune it for another device.

It happens.

And thus, having future product roadmaps is as important for chip makers trying to get people to buy their compute engines as a demonstrated ability to have had a roadmap in the past and to have steered the products to market on it without going off the road.

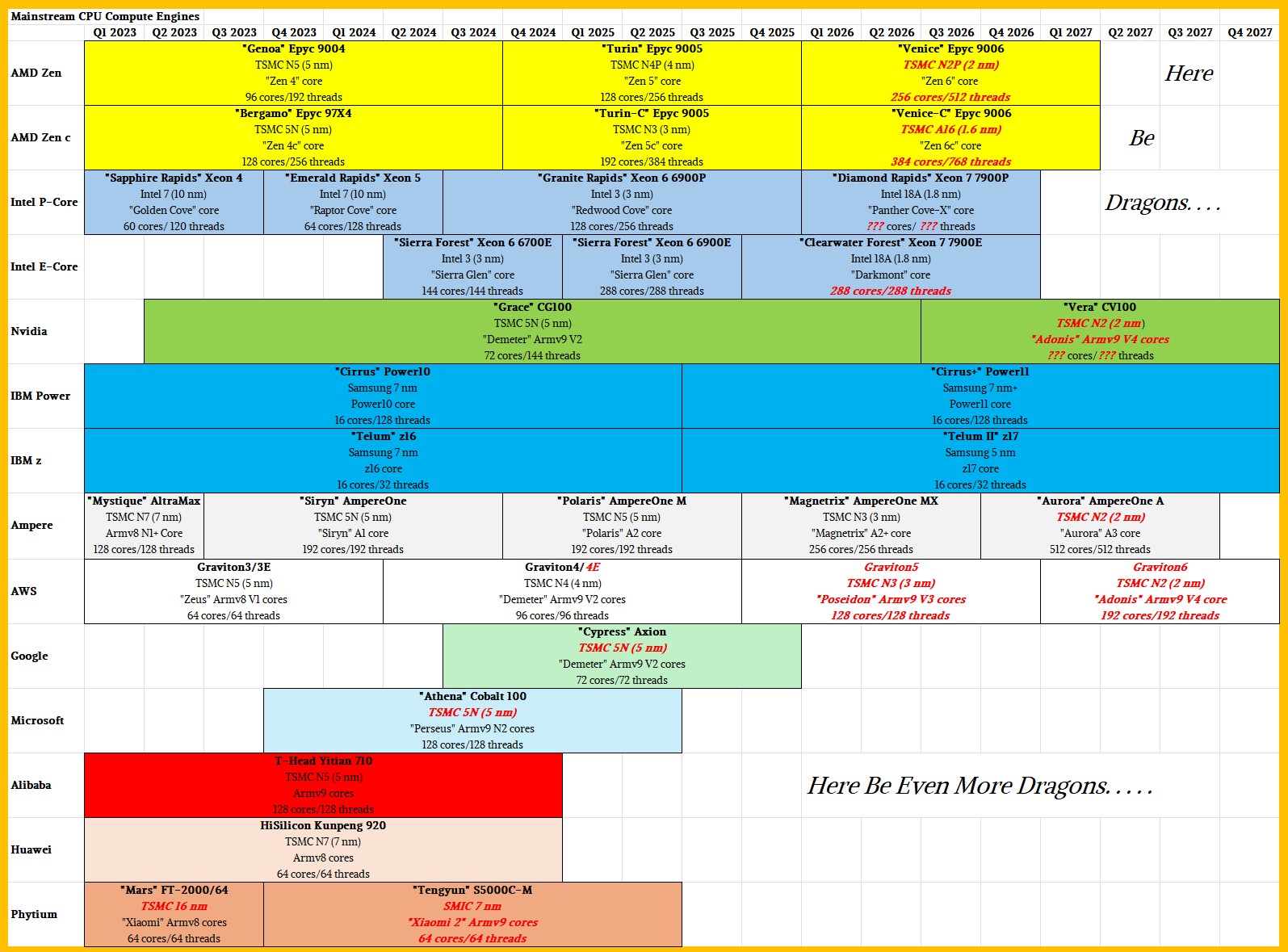

This being the beginning of the year, and datacenter compute engines being near and dear to our hearts, we took some time and put together a master set of roadmaps for CPUs, GPUs, and AI accelerators that stretches from 2023 through 2027 inclusive. Today, we are going to look at the mainstream CPUs that are in the market today and that are coming down the pike. We will look at GPUs and custom AI accelerators separately.

To keep things relatively simple, our CPU road atlas – well, that is what a collection of roadmaps is, right? – does not include specialty processors like the ones that AMD and Intel have created for the telecom and service provider markets, or in the case of AMD, the Epyc X variants with vertical L3 cache memory, or in the case of Intel, the Xeon variants that have HBM memory instead of plain vanilla DDR5 memory.

We have rounded the launch dates of processors to their nearest calendar quarter to keep it simple, and in the case of future products with vague launch dates, we have done our best to estimate when we think they will come out. Where we have a sense that a compute engine will be available for a long time – Nvidia’s “Vera” Arm server CPUs or IBM’s impending “Cirrus+” Power11 and “Telum II” z17 processors – we run it out to the future margin. We have also rebranded the Intel Xeon line back to Xeon 4 for “Sapphire Rapids” and to Xeon 5 for “Emerald Rapids” because we are sick of trying to remember which one is fourth gen and which one is fifth gen. Enough.

There are a lot of salient characteristics we could try to cram into these charts, but we stuck with the basics: The codename and name of the CPU, the process its compute cores are etched with and the foundry they are etched in, the type of cores they have, and the number of cores and threads for the top bin part in the family. At some point, we will expand this to add peak integer and floating point performance, probably a SPEC rating for the top bin part if we can get one.

The X86 processor is still the volume leader, and that is why the Epyc CPUs from AMD and the Xeon CPUs from Intel are at the top of this table.

Both AMD and Intel have most of their mainstream server CPUs in the field for 2025, but Intel has a few more to get out the door in Q1 2025 and there is a chance that AMD might surprise us with a “Turin-X” variant with vertical cache aimed at HPC workloads.

The only new mainstream server CPU expected this year is the “Clearwater Forest” Xeon 7 from Intel, and that is expected at the end of this year. If the plan pans out, Clearwater Forest will be the first CPU from Intel to use its 18A (1.8 nanometer RibbonFET) manufacturing process. The rumor mill has it that this Xeon 7 E-core part – short for energy efficient, and based on a variant of the Atom cores called “Darkmont” that are missing simultaneous multithreading and AVX-512 vector math units, among other things – will have up to 288 cores across four tiles, double that of the Xeon 6 “Sierra Forest” 6700E chip it will replace in the Intel lineup.

The Xeon 6 6900 series has a doubled up E-core Sierra Forest variant, called the Xeon 6 6900E as you might expect, that is due in Q1 2025 with up to 288 cores across two compute tiles. The high end "Granite Rapids" P-core version of the Xeon 6, the Xeon 6 6900P that was announced in September 2024 ahead of the initial Sierra Forest chips, has 128 P-cores in a socket. It will be followed by lower bin Xeon 6 6700P parts that peak at 86 cores and by even lower bin 6500P and 6300P parts that we have not heard much about as yet; all of these are also expected in the first quarter of this year.

The Zen 5 (regular core) and Zen 5c (skinnier core with less cache) variants of the Turin processors are going to carry AMD through 2025. No one expects “Venice” Zen 6 and Zen 6c CPUs for the datacenter until 2026, and we are thinking they should be ramping early next year. It is reasonable to expect a significant IPC boost with the Zen 6 and Zen 6c cores and we reckon that AMD will use a process shrink to 2 nanometers to double up the cores and maybe boost the clocks a little bit.

By next year, if this CPU atlas shows anything, it will not be unusual for a server CPU to have hundreds of cores. It will be interesting to see what Intel will do as it shrinks the transistors, and therefore the size of the cores, using its 18A process in 2026 with "Diamond Rapids" Xeon 7 P-core processors. We think Intel needs to have core count parity with AMD Venice Epyc 9006 processors, which should mean doubling up to 256 cores.

One of the big wild cards in the server CPU space is what Nvidia might do with its future "Vera" CV100 processor, expected in 2026. Nvidia was first out the door using Arm Ltd's "Demeter" Neoverse V2 core in a chip design with its "Grace" CG100 processor launched in Q2 2023. And we think it will very much want to be at the bleeding edge of Arm CPU cores with the Vera processors. That might mean skipping the "Poseidon" V3 core and jumping to the "Adonis" V4 core from Arm. For fun, that is what we guessed. No matter what, we think Nvidia is less focused on core counts with Vera than on NVLink ports and lots of bandwidth. The company is already pairing two "Blackwell" B200 GPU accelerators with a single Grace chip in the latest GB200 Grace-Blackwell superchip, and it might go so far as a one to four ratio with the future "Rubin" GPUs and their Vera CPUs. Accomplishing this might only require doubling up the cores. (Clearly, 72 V2 cores are enough for a pair of Blackwells, so why wouldn't 144 of the V3 or V4 cores be enough for a quad of Rubins?)
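The parenthetical above is a constant-ratio argument: keep the number of CPU cores per attached GPU the same as in Grace-Blackwell and scale the core count with the GPU count. Here is a minimal sketch of that arithmetic, assuming the cores-per-GPU ratio is the thing worth preserving (our guess, not an Nvidia design rule); the function and constant names are hypothetical. Note that it ignores the fact that V3 or V4 cores should each do more work than a V2 core, which would argue for needing fewer of them.

```python
# Grace-Blackwell today: one Grace CPU with 72 Neoverse V2 cores feeds two Blackwell GPUs.
GRACE_CORES = 72
GPUS_PER_GRACE = 2


def cores_needed(gpus_per_cpu: int,
                 cores_per_gpu: float = GRACE_CORES / GPUS_PER_GRACE) -> int:
    """Cores one CPU needs to keep the same cores-per-GPU ratio as Grace-Blackwell.

    Hypothetical helper: assumes per-core performance is unchanged across generations.
    """
    return round(cores_per_gpu * gpus_per_cpu)


print(cores_needed(2))  # 72, the Grace-Blackwell pairing
print(cores_needed(4))  # 144, a guessed Vera-Rubin one to four pairing
```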

Just for fun, and to show what a different world it is that Big Blue operates in, we added the Power and z processors to the middle of the Arm pack. We expect "Cirrus+" Power11 and "Telum II" z17 processors before the end of this year to boost the performance of the Power Systems and System z mainframe lines. The Power11 chip, which we will cover shortly, is all about increasing memory bandwidth and memory capacity compared to the Power10 chip that has been in the field for nearly four years now. The z17 processor has a bunch of tweaks, including an integrated DPU to speed up I/O operations for mainframes, which we covered back in August 2024. Both of these chips are etched by Samsung, IBM's processor fab partner, and both have sixteen cores on a socket and are implemented in machines that have four sockets in a node, four nodes in a single system image, and tens of terabytes of main memory hanging off of them.
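Multiplying out that topology shows where IBM's scale comes from: not from big sockets but from lashing many modest ones into one shared-memory machine. A minimal sketch of the arithmetic, assuming every socket in a fully configured system is populated with a sixteen-core part; the helper name is hypothetical.

```python
# Topology figures for Power11 and Telum II machines, as described above.
CORES_PER_SOCKET = 16
SOCKETS_PER_NODE = 4
NODES_PER_SYSTEM = 4  # nodes lashed into a single system image


def cores_in_system_image(cores_per_socket: int, sockets_per_node: int, nodes: int) -> int:
    """Total cores visible to one operating system image (hypothetical helper)."""
    return cores_per_socket * sockets_per_node * nodes


total = cores_in_system_image(CORES_PER_SOCKET, SOCKETS_PER_NODE, NODES_PER_SYSTEM)
print(total)  # 256
```

That is the same 256 cores we think Intel needs to cram into a single Diamond Rapids socket, which is one way to see why big iron is truly different.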

Big iron is truly different.

With that, back to the Arm’s race and what we expect will be a fairly regular cadence coming out of Ampere Computing, which put its latest roadmap out back in July 2024. The company thinks it can double up core counts every year, hitting 512 cores in a single socket by 2027. If history is any guide, then Ampere should be ramping production of its 256-core server CPU through 2025 for volume shipments that begin in the second half of this year, and it is looking ahead to that 512-core “Aurora” AmpereOne chip for the end of 2026 and ramping through 2027.

Amazon Web Services has not said much about its plans for its Graviton line of Arm server CPUs, and frankly we were expecting some sort of upgrade bump as 2024 was coming to an end. Perhaps a deep bin sort Graviton4E with some extra performance, as happened with the Graviton3E a couple of years back. We don't expect AWS to start talking about a Graviton5 until the end of 2025, and we conjecture that it might talk about a Graviton6 perhaps in early 2027.

AWS could get more aggressive with core counts, but we think it will not be more aggressive than we have shown with the Arm Neoverse cores or the TSMC processes to etch chips. A lot depends on how quickly AWS wants to shift its compute fleet away from X86 platforms. The faster it wants to move, the higher the core counts will go, mitigated, of course, by the desire to not have to manage too many NUMA domains for software on the processors.

We have put placeholders on this CPU road atlas for Google's Axion, Microsoft's Cobalt, Alibaba's Yitian, Huawei Technologies' Kunpeng, and Phytium's S5000C-M Arm processors.

We don't have roadmaps for these families of server CPUs, much less actual specifications for the processors shown. There are not a lot of details on them, and that is no accident. Microsoft, Google, and Alibaba are not interested in selling their Arm server chips to others, and any roadmaps might be reserved only for the largest customers for their Arm instances on their clouds. The Huawei Technologies and Phytium chips are behind the Chinese firewall, and very little is actually known about plans to update them.

There are a few others who might actually make this CPU road atlas, such as Tenstorrent, Ventana, Esperanto, Meta Platforms, SiPearl, and Fujitsu.

This is a good start. If you have any insight, share.

Nobody does these really useful things like you!

Really appreciate it!

Well, thank you.

So what about the Oracle tax for all these large core count systems? This was a big issue for us a couple of years ago; we explicitly bought four-core systems to run ESXi and Oracle DBs on them just to keep down licensing costs. This is a fun roadmap, but it's totally focused on the max-cores-at-all-cost end of the CPU families.

For any per-core charged software, you need the strongest cores you can get, and the fewest of them, to lift the load. Hence, Power iron still exists.

Great content and analysis.

Thank you, really.

Greetings from Italy.