With the months-long blip in manufacturing that delayed the “Blackwell” B100 and B200 generations of GPUs in the rear view mirror, and with nerves calmer now that the potential threat posed by the techniques used in the AI models of Chinese startup DeepSeek is better understood, Nvidia’s final quarter of its fiscal 2025 and its projections for continuing sequential growth in fiscal 2026 will bring joy to Wall Street.

It remains to be seen if Nvidia will be able to meet the demand for Blackwell compute engines. These include its GB200 superchip, which marries its “Grace” CG100 Arm server CPU to a pair of its Blackwell B200 GPU accelerators and which is used in its GB200 NVL72 rackscale system, as well as the more traditional B100 and B200 GPUs used in its NVL8 server nodes and in clones of these machines from all the major OEMs and ODMs.

But for now, Nvidia is trying to ramp up Blackwell shipments fast, and it is taking a slight margin hit in doing so, so that customers who have been waiting to build AI clusters based on Blackwell GPUs can get their hands on them sooner.

In a call with Wall Street analysts going over the numbers, Colette Kress, Nvidia’s chief financial officer, said that the Blackwell ramp began in earnest in Q4, which ended in January. This is a big improvement over the mere 13,000 GPU samples for Blackwell that Nvidia shipped to partners in the third quarter of fiscal 2025, which ended in October 2024. In Q3 F2025, the “Hopper” H200 platform drove “double digit billions” in datacenter revenues for Nvidia, and we learned today that the H200 platform kept growing sequentially from that point in Q4 F2025. In the third quarter, Nvidia said that it shipped “billions of dollars” (plural) in Blackwell systems, and now we learn from Kress that in the final quarter of fiscal 2025, Nvidia sold more than $11 billion in Blackwell components and systems. We think that it is probably around $2.1 billion for Q3 and $11.3 billion for Q4, which is a factor of 5.4X growth sequentially.

Nvidia is still selling plenty of its first generation Hopper GPUs, the H100s that were launched in the spring of 2022, that started shipping at the end of that year, and that exploded in sales as the GenAI boom took hold at the hyperscalers and cloud builders. (This is roughly analogous to Nvidia’s fiscal 2024.) Since the final quarter of fiscal 2023 (ended January 2023 and almost completely overlapping with calendar 2022), Nvidia has grown its datacenter compute revenue in the double digits sequentially every quarter, but the growth rate is trending down over the past two years after the initial GenAI explosion.

Mind you, having a sequential growth rate for nine quarters that averages 42.7 percent is absolutely insane, and even an average of 21.3 percent sequential growth is stunning. We live in an IT world where a few points of sequential growth and maybe high single digits to low double digits of year on year growth counts as doing well. So even if we are hitting the limits of large numbers on this GenAI boom, or if this is a local flattening before a new enterprise GenAI boom takes off, these are still remarkable numbers that Nvidia is turning in as it makes the most complex systems ever invented.

In the quarter ended in January, Nvidia’s Datacenter division had $35.58 billion in sales, up 93.3 percent year on year and up 15.3 percent sequentially.

Within this, Datacenter compute – meaning CPU and GPU compute engines – accounted for the vast majority of revenues, at $32.56 billion, up 116 percent year on year but up only 17.8 percent sequentially. Datacenter networking comprised $3.02 billion in sales, down 9.2 percent year on year and down 3.3 percent sequentially. Kress said that datacenter networking would return to growth in the first quarter of fiscal 2026, but did not elaborate on how InfiniBand was doing and how Spectrum-X was doing. She did say that with the Blackwell generation, the AI clusters using NVL8 nodes – what we used to call DGX when it had the Nvidia brand on it and HGX when it was just the system boards and OEMs and ODMs built the shells around them – were tending to use InfiniBand interconnects, but that larger AI clusters, and particularly those built using the NVL72 rackscale systems tended to use Spectrum-X Ethernet interconnects.

Kress also added that for the current shipments of Blackwell, it was common for an AI cluster to have 100,000 or more GPUs – which is one of the reasons why Ethernet was chosen over InfiniBand. (The other is that hyperscalers and cloud builders like to use Ethernet, and only used InfiniBand because they had to. Now RDMA over Ethernet works well enough, and the BlueField 3 DPUs can help deal with congestion control and adaptive routing, which were missing from Ethernet but part of InfiniBand from the beginning, to yield most of the performance benefits of InfiniBand without having to leave the Ethernet fold.)

Given all of this, our model shows InfiniBand sales are on the downswing and Ethernet sales are on the rise, and the two should cross over in the next quarter or so.

Our best guess is that InfiniBand sales were down 41 percent to $1.68 billion in Q4 F2025 and Ethernet (with a smidgen of other stuff) rose by 2.8X to $1.34 billion.

For the full fiscal 2025 year, datacenter compute drove $102.2 billion in sales at Nvidia, up 2.6X compared to fiscal 2024, with InfiniBand rising by 32.3 percent to $8.84 billion and Ethernet/Other hitting $4.15 billion, up by 3.1X. If you do the math on that, datacenter compute was 78.3 percent of Nvidia’s total sales in fiscal 2025, with InfiniBand making up 6.8 percent and Ethernet/Other making up 3.2 percent. The overall Datacenter division had $115.19 billion in sales, up 2.4X.
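For readers who want to check that math, here is a minimal sketch in Python. It uses only figures quoted in this article (the InfiniBand and Ethernet/Other splits are our estimates, and the $130.5 billion total is Nvidia's full-year revenue); the variable names are just for illustration.

```python
# Fiscal 2025 figures in billions of US dollars, as quoted in this article.
# The InfiniBand and Ethernet/Other values are estimates, not reported numbers.
segments = {
    "Datacenter compute": 102.2,
    "InfiniBand": 8.84,
    "Ethernet/Other": 4.15,
}
total_sales = 130.5  # Nvidia's total revenue for fiscal 2025

# The three pieces should add up to the Datacenter division total.
datacenter_total = sum(segments.values())

# Each piece as a percentage of Nvidia's total sales.
shares = {name: 100 * value / total_sales for name, value in segments.items()}

for name, pct in shares.items():
    print(f"{name}: {pct:.1f} percent of total sales")
print(f"Datacenter division: ${datacenter_total:.2f} billion")
```

The three pieces sum to the $115.19 billion Datacenter total, and the shares round to the 78.3, 6.8, and 3.2 percent cited above.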

As the charts make abundantly clear above, Nvidia is a datacenter company that has a good business in gaming and some hobbies in automotive and professional graphics. Yes, the combined revenues that Nvidia will get in fiscal 2026 for in-car and in-datacenter compute and networking for the automotive sector will break $5 billion. Which is a lot of money for a lot of companies – including Nvidia only a few years ago. But it is a trickle in a faucet out in the middle of a torrential downpour of GenAI money.

Kress said on the call that datacenter compute and datacenter networking would both grow sequentially, and that Nvidia expected Q1 F2026 revenues of $43 billion, plus or minus 2 percent, which is 9.3 percent growth at the midpoint. In Q4, the Datacenter division had 90.5 percent of revenues, and we think that share will only rise higher. Our guess is that Datacenter will bring in $39.5 billion, which is 92 percent of overall revenues in Q1 F2026 and 11 percent sequential growth and 75.1 percent year on year growth. If all of fiscal 2026 goes like the first quarter, the business will follow a curve we know well.
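The guidance arithmetic can be sketched the same way. This is a rough check, not a model: the inputs are the figures quoted in this article, the $39.5 billion Datacenter number is our guess rather than anything Nvidia said, and the variable names are illustrative.

```python
# Figures in billions of US dollars, as quoted in this article.
q4_total = 39.33            # Q4 F2025 total revenue
q4_datacenter = 35.58       # Q4 F2025 Datacenter division revenue
q1_guidance_mid = 43.0      # Q1 F2026 guidance midpoint
guidance_band = 0.02        # plus or minus 2 percent
q1_datacenter_guess = 39.5  # our guess, not Nvidia guidance

# Guidance range implied by the band around the midpoint.
guidance_low = q1_guidance_mid * (1 - guidance_band)
guidance_high = q1_guidance_mid * (1 + guidance_band)

# Sequential growth at the midpoint, and the Datacenter share of revenue.
midpoint_growth = 100 * (q1_guidance_mid / q4_total - 1)
q4_datacenter_share = 100 * q4_datacenter / q4_total
q1_datacenter_share = 100 * q1_datacenter_guess / q1_guidance_mid
datacenter_seq_growth = 100 * (q1_datacenter_guess / q4_datacenter - 1)

print(f"Guidance range: ${guidance_low:.2f}B to ${guidance_high:.2f}B")
print(f"Midpoint sequential growth: {midpoint_growth:.1f} percent")
print(f"Datacenter share, Q4: {q4_datacenter_share:.1f} percent")
print(f"Datacenter share, Q1 guess: {q1_datacenter_share:.1f} percent")
print(f"Datacenter sequential growth, Q1 guess: {datacenter_seq_growth:.1f} percent")
```

The Q1 Datacenter share works out to a bit under 92 percent before rounding, which is why the figure above is stated as 92 percent.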

In a boom, IT product revenues triple. Then they double. Then they slow down to 75 percent growth. Then they slow down to 50 percent growth, then 25 percent, then 15 percent, which is a healthy multiple over gross domestic product growth. Then they are down in the single digits as a market matures – still ahead of GDP, but not 100X or 25X as happened for a good many years.

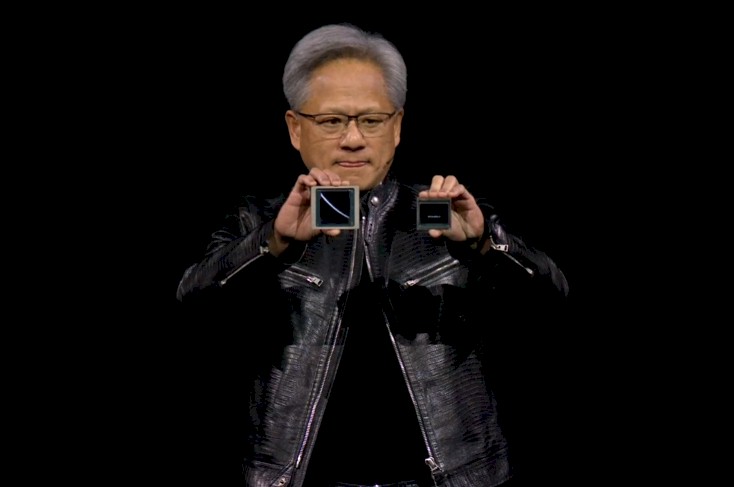

If something dramatic changes in a market – as certainly happened with AI in late 2022 – then the cycle can spin up again. And perhaps this is precisely what Nvidia co-founder and chief executive officer, Jensen Huang, is counting on. The hyperscalers and clouds perfect GenAI, but the enterprises make it pervasive.

This is why Huang was totally unflustered by the DeepSeek revelation that you could train a good model on fewer GPU cards through lots of tricks, including doing more reinforcement learning on the front end of the training of a foundation model and only activating a very small range of parameters on any given response from a Mixture of Experts model. (There were some other clever networking tricks to hide latencies in collective operations that DeepSeek came up with for its V3 and R1 models.)

Here is the point: When you are thinking that models will soon require hundreds of thousands to millions of times more computing, seeing a 4X or even a 10X reduction on compute needs for current models doesn’t make things worse. It brings the future, where much more compute is needed to make better models anyway, within reach sooner.

This is what Nvidia is focused on, as Huang explained on the call when talking about test time compute or long thinking or chain of thought reasoning, which is basically having a collection of models ponder a response, mull it over, and feed answers into other models as prompts or to analyze the differences between responses to come up with what appears to be a more thoughtful overall response. This is akin to the difference between 5 year olds who blurt things out and 25 year olds who think before opening their mouths. (A 50 year old knows you asked the wrong question, and a 75 year old is just amused by all of this questioning.)

“The amount of tokens generated – the amount of inference compute needed – is already 100X more than the one-shot capabilities of large language models,” explained Huang. “And that’s just the beginning. This is just the beginning. The idea that the next generation could have thousands of times better and even hopefully, extremely thoughtful simulation-based and search-based models – that could require hundreds of thousands or millions of times more compute than today – is in our future.”

Later on in the call, when pressed about how much visibility Nvidia has into future demand, Huang had this to say:

“Another way to think about that is we have really only tapped consumer AI and search, and some amount of consumer generative AI, advertising, recommenders, and kind of the early days of software,” Huang said. “The next wave is coming – agentic AI for enterprise, physical AI for robotics, and sovereign AI as different regions build out their AI for their own ecosystems. And so each one of these are barely off the ground, and we can see them. We can see them because, obviously, we are in the center of much of this development and we can see great activity happening in all these different places and these will happen.”

So, maybe this is just a local plateauing and the market will boom again and again to higher levels. The funny thing is that even AI can’t predict this future.

Anyway, to finish out the financial analysis, in the fourth quarter of fiscal 2025, Nvidia had a total of $39.33 billion in sales, and brought 56 percent of revenues to the bottom line, which is $22.09 billion. Revenues rose by 77.9 percent and net income rose by 79.8 percent. For the full year, sales were up 114.2 percent to $130.5 billion, and net income rose by 2.45X to $72.88 billion. Nvidia ended the year with $43.21 billion in cash and investments in the bank.
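As a quick sanity check on those closing figures, the sketch below computes the net margin and backs out the year-ago revenue implied by the growth rates quoted above. The helper `implied_prior` is a hypothetical name used only for this illustration, and because the growth rates are rounded, the implied year-ago values are approximate.

```python
# Figures in billions of US dollars, as quoted in this article.
q4_revenue, q4_net_income = 39.33, 22.09
fy_revenue, fy_net_income = 130.5, 72.88

# Share of revenue that reached the bottom line.
q4_net_margin = 100 * q4_net_income / q4_revenue
fy_net_margin = 100 * fy_net_income / fy_revenue


def implied_prior(current, growth_pct):
    """Back out the year-ago value implied by a percentage growth rate."""
    return current / (1 + growth_pct / 100)


# Year-ago revenue implied by the rounded growth rates (approximate).
prior_q4_revenue = implied_prior(q4_revenue, 77.9)
prior_fy_revenue = implied_prior(fy_revenue, 114.2)

print(f"Q4 net margin: {q4_net_margin:.1f} percent")
print(f"Full-year net margin: {fy_net_margin:.1f} percent")
print(f"Implied year-ago Q4 revenue: ${prior_q4_revenue:.2f} billion")
print(f"Implied fiscal 2024 revenue: ${prior_fy_revenue:.2f} billion")
```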