If you are a neocloud – and there seem to be more of these popping up like mushrooms in a moist North Carolina spring in the mountains – then you are going to need a pricing edge and a niche offering to compete with the big clouds and rival neoclouds. This is why TensorWave, which just raised $100 million in Series A funding and which will use the money to build out its second wave of AMD-only infrastructure, will be interesting to watch.

TensorWave was founded in Las Vegas in December 2023 by Darrick Horton, Jeff Tatarchuk, and Piotr Tomasik.

Horton did a stint at the famous Skunk Works at Lockheed Martin working on plasma physics for nuclear fusion after getting degrees in physics and mechanical engineering at Andrews University. In October 2017, Horton founded VaultMiner Technologies, a cryptocurrency mining company based in the datacenter hub of Cheyenne, Wyoming, and served as its chief technology officer. (Not geologic mining or data vaulting or both, as we might have hoped and guessed. Imagine a data vault in a mountain that was also a crystal mine. . . .) As best we can figure, VaultMiner was a big adopter of FPGAs and eventually transitioned its infrastructure to become the VMAccel FPGA cloud service, which has been used across a variety of AI, genomics, weather modeling, and quantitative finance applications.

Tatarchuk is listed as a co-founder of VMAccel and was chief business development officer at a company called Let’s Rolo for a short stint, where he met Tomasik, a serial entrepreneur with strong ties to Las Vegas. Tatarchuk is chief growth officer and Tomasik is president and chief executive officer at TensorWave. Prior to starting TensorWave with Horton and Tatarchuk, Tomasik was founder and chief technology officer at Influential, an “influencer marketing” firm that created AI tools to help companies promote their wares on social media. Tomasik is also a general partner in the 1864 Fund, which is a startup accelerator for Nevada that makes $10 million seed rounds to promising companies located in the state.

Money is the name of the game when it comes to the neoclouds, and you have to raise big rounds, and fast.

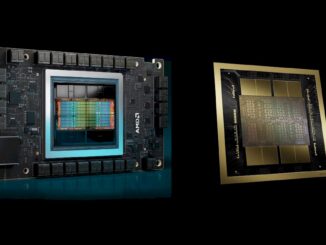

In October 2024, TensorWave raised $43 million through what is called a “simple agreement for future equity,” or SAFE, and this money was used to expand the team, secure datacenter capacity, and buy thousands of AMD’s “Antares” Instinct MI300X GPU accelerators, which debuted in December 2023, the same month TensorWave was founded. Nexus Venture Partners led the SAFE round, with Maverick Capital, Translink Capital, Javelin Venture Partners, Granite Partners, and AMD Ventures participating. Unlike a loan or a convertible note, a SAFE does not create debt, and unlike a priced equity round, it pushes the conversion to equity and the valuation of the company out to a future date, usually set by a triggering event such as the next priced funding round.
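To make the deferred conversion concrete, here is a minimal sketch of how a SAFE typically converts when a priced round closes, assuming the SAFE carries both a valuation cap and a discount and that the holder gets whichever price is lower. The function names, the cap price, the discount, and the share price are all hypothetical; TensorWave’s actual SAFE terms have not been disclosed, and real cap prices depend on share counts that this sketch simply takes as an input.

```python
from typing import Optional


def safe_conversion_price(round_price: float,
                          cap_price: Optional[float] = None,
                          discount: Optional[float] = None) -> float:
    """Per-share price at which a SAFE converts in a priced round (hypothetical model).

    round_price: price per share paid by new investors in the triggering round.
    cap_price:   per-share price implied by the SAFE's valuation cap, if it has one.
    discount:    fractional discount to the round price (0.20 = 20 percent), if any.
    The SAFE holder converts at whichever eligible price is lowest.
    """
    candidates = [round_price]
    if cap_price is not None:
        candidates.append(cap_price)
    if discount is not None:
        candidates.append(round_price * (1.0 - discount))
    return min(candidates)


def safe_shares(investment: float, conversion_price: float) -> float:
    """Shares issued to the SAFE holder when the triggering round closes."""
    return investment / conversion_price


if __name__ == "__main__":
    # Made-up terms for illustration only, not TensorWave's:
    # $1.00 per share in the new round, $0.80 cap price, 10 percent discount.
    price = safe_conversion_price(1.00, cap_price=0.80, discount=0.10)
    print(price)  # 0.8, because the cap beats the 10 percent discount
    print(safe_shares(1_000_000, price))
```

The point of the model is that nothing about price or ownership is fixed until the triggering round sets `round_price`, which is exactly the valuation that a SAFE defers.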

This week, TensorWave raised $100 million in Series A funding, which means the company sold equity to get the cash and that there is also a valuation of some sort on TensorWave. It is unclear if the Series A funding was the trigger for equity conversion for the SAFE investment last year. What is clear is that AMD Ventures and hedge fund Magnetar Capital led the Series A round, with Maverick Silicon and Nexus Venture Partners kicking in some more dough and Prosperity7 joining the party. (Prosperity7 is, of course, the $3 billion venture capital fund of Aramco Ventures, which is itself a division of Saudi Arabia’s Aramco oil company.)

The Series A funds will be used, in part, to cover the costs of a new AI training cluster with 8,192 Instinct MI325X GPUs. The MI325X is a gussied up MI300X with more HBM memory capacity and bandwidth but the same raw floating point and integer performance, and is therefore still a variant of the “Antares” family as far as we are concerned. Its 256 GB of memory capacity (up 33 percent compared to the 192 GB on the MI300X) and 6 TB/sec of bandwidth (up 13.2 percent) have real value because they help the GPU get more work done, and in a greater proportion than the memory increases themselves, because GPUs are all memory bound thanks to the dearth of HBM stacks on the market, even after Micron Technology joined Samsung and SK Hynix in making them. When Nvidia went from the H100 to the H200 GPU accelerator, it nearly doubled the memory with the same “Hopper” GPU chip in both, and that nearly doubled the performance. So we think the effective performance of the MI325X should be around 30 percent higher than that of the MI300X on the same AI workloads at the same GPU count. (It would have been even higher had the MI325X come out with 288 GB of HBM memory as expected.)
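The capacity and bandwidth uplifts quoted above are simple ratios, and this short sketch reproduces them. The 5.3 TB/sec MI300X bandwidth is AMD’s published peak figure and is our assumption here, since the text gives only the percentage. The sketch checks the hardware arithmetic only; the 30 percent effective-performance estimate is a judgment call and is not computed from these numbers.

```python
# Generation-over-generation HBM uplift, MI300X -> MI325X.
# Capacities come from the text; the 5.3 TB/sec MI300X bandwidth is AMD's
# published peak figure and is an assumption, not stated in the article.

MI300X = {"hbm_gb": 192, "bw_tb_s": 5.3}
MI325X = {"hbm_gb": 256, "bw_tb_s": 6.0}


def pct_increase(old: float, new: float) -> float:
    """Percentage increase going from old to new."""
    return (new / old - 1.0) * 100.0


capacity_gain = pct_increase(MI300X["hbm_gb"], MI325X["hbm_gb"])
bandwidth_gain = pct_increase(MI300X["bw_tb_s"], MI325X["bw_tb_s"])
print(f"capacity +{capacity_gain:.1f}%, bandwidth +{bandwidth_gain:.1f}%")
# prints: capacity +33.3%, bandwidth +13.2%
```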

That should also mean that the MI325X sells for about 30 percent more than the estimated $22,500 street price we have for the MI300X, which means it probably costs a little under $30,000. With 8,192 of them in the latest TensorWave cluster, which is being installed in a datacenter in Las Vegas, the GPUs alone will cost around $240 million. Add a Pensando DPU for each GPU, as we have confirmed TensorWave is doing, wrap a server around eight of these MI325X cards with two top-of-the-line “Turin” Epyc 9005 CPUs, toss in some flash drives, use maybe 400 Gb/sec Ethernet switches from a whitebox vendor to interlink the nodes, and keep the whole network cost (cables, switches, and DPUs) at 20 percent of the total, and the AI server node plus its share of networking costs comes to around $360,000. Last time we checked, 8,192 divided by 8 comes to 1,024 nodes, and that means the cost of the new AI cluster at TensorWave should be in the range of $365 million.
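The cost estimate chains a few multiplications, so here is a back-of-envelope sketch that makes each step explicit. Every input is an estimate taken from the paragraph above, not a price from AMD or TensorWave, and the constant names are our own. With the rounded $360,000 node cost, the final product comes to about $368.6 million.

```python
# Back-of-envelope cost model for the 8,192-GPU MI325X cluster.
# All inputs are the article's estimates, not AMD or TensorWave prices.

MI300X_STREET_PRICE = 22_500      # estimated MI300X street price, USD
MI325X_PREMIUM = 0.30             # assumed ~30 percent price uplift over MI300X
GPUS = 8_192
GPUS_PER_NODE = 8
NODE_COST_WITH_NETWORK = 360_000  # node plus its share of networking, USD

mi325x_price = MI300X_STREET_PRICE * (1 + MI325X_PREMIUM)
gpu_spend = GPUS * mi325x_price
nodes = GPUS // GPUS_PER_NODE
cluster_cost = nodes * NODE_COST_WITH_NETWORK

# Networking (cables, switches, DPUs) is held at ~20 percent of each node's cost.
network_per_node = 0.20 * NODE_COST_WITH_NETWORK

print(f"MI325X price  ~${mi325x_price:,.0f}")        # ~$29,250
print(f"GPU spend     ~${gpu_spend / 1e6:,.1f}M")    # ~$239.6M
print(f"nodes          {nodes:,}")                   # 1,024
print(f"network/node  ~${network_per_node:,.0f}")    # ~$72,000
print(f"cluster total ~${cluster_cost / 1e6:,.1f}M") # ~$368.6M
```

Swapping in a different street price or network share is a one-line change, which is the main reason to write the estimate down this way.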

By its own account, TensorWave had an annual revenue run rate of $5 million in 2024 and is looking at $100 million in 2025. Counting the SAFE round and the Series A, it has raised a total of $143 million. The company has already built an MI300X cluster that cost maybe somewhere around $75 million – that’s a wild guess, but an informed one.
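Putting the disclosed funding next to the two cluster estimates shows why the financing question matters. This is a rough sketch under loud assumptions: it treats the MI300X cluster as paid for out of the money raised, and it ignores operating costs, revenue, and any debt. All figures are the article’s estimates, and the variable names are ours.

```python
# Rough funding-gap arithmetic using the article's estimates (all USD).
# Assumes the MI300X cluster was paid for from the money raised, and
# ignores operating costs, revenue, and any debt financing.

SAFE_ROUND = 43e6
SERIES_A = 100e6
MI300X_CLUSTER_EST = 75e6     # "a wild guess, but an informed one"
MI325X_CLUSTER_EST = 365e6    # the article's estimate for the new cluster
RUN_RATE_2024 = 5e6
RUN_RATE_2025_TARGET = 100e6

total_raised = SAFE_ROUND + SERIES_A
left_after_mi300x = total_raised - MI300X_CLUSTER_EST
shortfall = MI325X_CLUSTER_EST - left_after_mi300x
growth = RUN_RATE_2025_TARGET / RUN_RATE_2024

print(f"raised to date      ${total_raised / 1e6:.0f}M")       # $143M
print(f"after MI300X build  ${left_after_mi300x / 1e6:.0f}M")  # $68M
print(f"unfunded portion    ${shortfall / 1e6:.0f}M")          # $297M
print(f"run-rate growth     {growth:.0f}x")                    # 20x
```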

Where is the money coming from to build this new MI325X cluster? Are the founders coming up with it? Are there loans using the GPUs as collateral? Is AMD giving TensorWave deep discounts? (This seems unlikely given its need to show profits and the demand in the market.) Did the Saudis kick in money outside of the Series A?

Inquiring minds want to know, and we hope to talk to TensorWave soon to find out how this all works both financially and technically, given that the neoclouds are charging a lot less for GPU capacity than the big clouds like Microsoft Azure, Amazon Web Services, and Google Cloud.

TensorWave is charging $1.50 per GPU-hour for MI300X capacity and $2.25 per GPU-hour for MI325X capacity. If you want to create a cluster of GPU systems – what the company calls an enterprise cluster – or add WEKA file system storage outside of the in-node flash storage that comes with bare metal instances, you have to call for custom pricing. The company’s “Maverick” inference service, which runs Llama 3.3 70B on a single GPU and DeepSeek R1 671B on a single node, costs $1.50 per GPU-hour with unlimited queries on dedicated GPUs. The inference service at TensorWave can run all kinds of models, so don’t think these are the only two.
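List pricing also bounds what the new cluster could earn. This sketch multiplies the $2.25 per GPU-hour MI325X price by the GPU count and the hours in a year, at a few hypothetical utilization levels, and compares the result with the $365 million build estimate. The utilization levels are assumptions, the payback figure ignores power, facilities, staff, and financing costs, and enterprise clusters sold at custom pricing would change the math.

```python
# Hypothetical revenue ceiling for the MI325X cluster at TensorWave's list price.
# Utilization levels are assumptions; payback ignores all operating costs.

GPUS = 8_192
PRICE_PER_GPU_HOUR = 2.25      # MI325X list price from the article, USD
HOURS_PER_YEAR = 24 * 365
CLUSTER_COST_EST = 365e6       # the article's build-cost estimate, USD


def annual_revenue(utilization: float) -> float:
    """Annual rental revenue at list price for a utilization between 0 and 1."""
    return GPUS * PRICE_PER_GPU_HOUR * HOURS_PER_YEAR * utilization


for u in (1.0, 0.7, 0.5):
    rev = annual_revenue(u)
    payback_years = CLUSTER_COST_EST / rev
    print(f"utilization {u:.0%}: ${rev / 1e6:,.1f}M/yr, "
          f"payback ~{payback_years:.1f} years before opex")
```

At full utilization the cluster would bring in about $161.5 million a year at list price, so even the ideal case takes more than two years to cover the hardware alone.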
