Not all important supercomputers are on the twice-a-year Top500 rankings of machines. In fact, many important architectures are built, tested, and used in production for real-world and useful work – often in academic settings – below the thresholds of entry on the list each June and November.

Such has been the case with the pair of Isambard machines designed by the GW4 collective – that’s the universities of Bath, Bristol, Cardiff, and Exeter – in the United Kingdom, which were shepherded into being by HPC luminary Simon McIntosh-Smith, principal investigator for the Isambard project and a professor of HPC at the University of Bristol. But with the Isambard 3 system, slated to be installed this year and getting most of its floating point oomph from a compute engine that Nvidia calls a “superchip” and that is based on a pair of its 72-core “Grace” Arm server CPUs, it looks like the Isambard line will break into the big time as well as push the architectural envelope for Arm-based supercomputing.

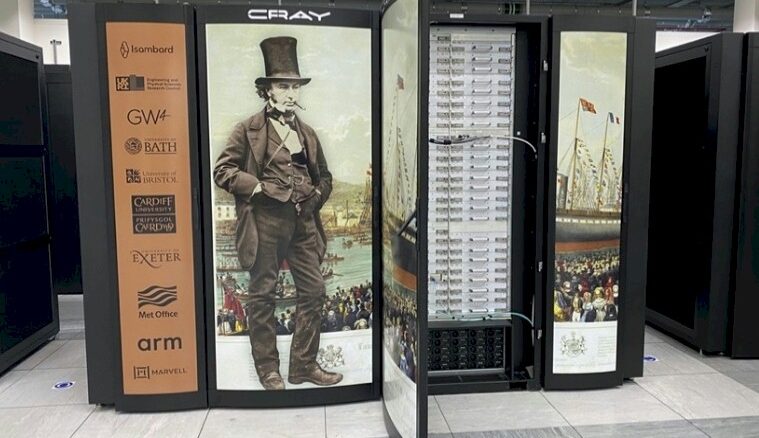

The first Isambard machine was unveiled in March 2017, and McIntosh-Smith and his team were the first in the HPC community to put the Arm architecture through its paces, comparing performance across various CPUs and Nvidia GPU architectures. (The Isambard line is aptly named after Isambard Kingdom Brunel, who designed bridges, tunnels, railways, and steamships during the Industrial Revolution, and, also aptly, in the Germanic flavor of the Norse language, “Isambard” means “iron bright.”)

Isambard 1 was built by the then-independent Cray using its “Cascade” XC systems and their “Aries” interconnect to lash together nodes based on Marvell/Cavium ThunderX2 Arm CPUs with a total of over 10,000 cores. This was done through £3 million in funding, or about $3.6 million at the time. With the Isambard 2 expansion in March 2020, the system added another 11,000 cores based on the Fujitsu A64FX processor, integrated by Hewlett Packard Enterprise and using an InfiniBand interconnect, with £4.4 million (about $5.6 million) in funding, £750,000 of that being kicked in by Marvell and Arm, who benefitted greatly from all of that benchmarking that proved Arm was ready to do HPC work.

With Isambard 3, McIntosh-Smith has been able to corral £10 million (about $12.3 million at current exchange rates between the US dollar and the British pound) to upgrade the facility at Bristol & Bath Science Park with a modular datacenter that has rear door liquid cooling on its racks to keep the infrastructure from burning up. The contract with Hewlett Packard Enterprise is to fill up one of these modular datacenters with what he called “whitebox” Nvidia servers with Grace-Grace superchips and have them lashed together with HPE’s Cray Slingshot 11 interconnect, plus a testbed for “Hopper” H100 GPUs from Nvidia paired with Grace CPUs.

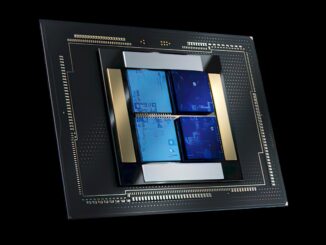

The Isambard 3 machine comes with two partitions. One is based entirely on Grace-Grace and has 384 of these superchips across six racks of HPE Cray XD2000 machinery (that’s 64 Grace-Grace superchips per rack) to bring 55,296 Arm cores to bear. The Grace chip uses the Neoverse “Demeter” V2 cores from Arm Holdings, which are based on the Armv9 architecture, as we surmised back in August 2022. The other partition in Isambard 3 has a much smaller 32 nodes using the Grace-Hopper superchips in a one-to-one ratio between CPU and GPU. (The Nvidia architecture does not require companies to just deploy superchips; they can connect anywhere from one to eight GPUs to a Grace CPU as they see fit.)
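For readers who want to check the core count, here is a minimal Python sketch of the arithmetic, using only the rack, superchip, and core figures quoted above. The function and constant names are ours, purely for illustration.

```python
# Figures quoted in the article for the Grace-Grace partition.
RACKS = 6
SUPERCHIPS_PER_RACK = 64
CPUS_PER_SUPERCHIP = 2   # a Grace-Grace superchip pairs two Grace CPUs
CORES_PER_CPU = 72       # each Grace CPU has 72 Arm cores


def grace_partition_totals(racks=RACKS,
                           superchips_per_rack=SUPERCHIPS_PER_RACK,
                           cpus_per_superchip=CPUS_PER_SUPERCHIP,
                           cores_per_cpu=CORES_PER_CPU):
    """Return (superchips, cores) for the CPU-only partition."""
    superchips = racks * superchips_per_rack
    cores = superchips * cpus_per_superchip * cores_per_cpu
    return superchips, cores


superchips, cores = grace_partition_totals()
print(f"{superchips} superchips, {cores:,} Arm cores")
```

The result matches the 384 superchips and 55,296 cores cited for the partition; the 32 Grace-Hopper nodes are not included in this count.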

The Isambard 3 nodes will have 256 GB of LPDDR5 memory per node.

“The neat thing about the Grace-Grace superchips is that they are only about this big,” McIntosh-Smith says, holding up an A5-sized paper notebook. “And that is for two sockets and all of the memory, which is basically the entire server apart from the network interface. And because it is LPDDR5 and it is low power, the other cool thing – pun intended – is that it is more energy efficient and therefore you can have more channels so you get more bandwidth. So we can get closer to HBM-like bandwidth with DDR-like pricing. This is a neat sweet spot that Nvidia is exploiting, and I don’t know why others are not doing this.”

We pointed out that last week, Meta Platforms unveiled its MTIA inference chip, and it is using LPDDR5 memory. But that is the only other one we have seen.

The precise performance metrics for the Isambard 3 Grace-Grace partition are not yet available, but McIntosh-Smith tells The Next Platform that the machine will have 6X the flops and 6X the memory bandwidth of the Isambard 2 system and that it will be rated at 2.7 petaflops peak for the CPU partition. And the machine, including storage, will all fit within a 400 kilowatt thermal envelope, with 270 kilowatts of that being for the Grace compute nodes.
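As a rough back-of-the-envelope check, the quoted peak and power figures can be broken down per superchip, per core, and per watt. The Python sketch below is ours, not a published specification: it assumes the 2.7 petaflops is spread evenly over the 384 Grace-Grace superchips and 55,296 cores, and that the 270 kilowatts applies to those same nodes, which the article does not spell out.

```python
# Figures quoted in the article.
PEAK_PFLOPS = 2.7        # peak rating for the CPU partition
COMPUTE_POWER_KW = 270   # power attributed to the Grace compute nodes
TOTAL_POWER_KW = 400     # whole machine, including storage
SUPERCHIPS = 384
CORES = 55_296


def derived_metrics():
    """Derive per-unit figures from the quoted totals (peak, not measured)."""
    peak_flops = PEAK_PFLOPS * 1e15
    return {
        # Assumption: peak is spread evenly over the Grace-Grace superchips.
        "tflops_per_superchip": peak_flops / SUPERCHIPS / 1e12,
        "gflops_per_core": peak_flops / CORES / 1e9,
        # Assumption: the 270 kW covers exactly the nodes behind the peak figure.
        "peak_gflops_per_watt": peak_flops / (COMPUTE_POWER_KW * 1e3) / 1e9,
        "watts_per_superchip": COMPUTE_POWER_KW * 1e3 / SUPERCHIPS,
        "compute_share_of_power": COMPUTE_POWER_KW / TOTAL_POWER_KW,
    }


for name, value in derived_metrics().items():
    print(f"{name}: {value:.3f}")
```

Under those assumptions, the numbers work out to roughly 7 teraflops per superchip, a bit under 49 gigaflops per core, and about 10 peak gigaflops per watt of compute power. These are ratios of peak ratings to a power envelope, not measured efficiency results.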

“People are just thinking about Grace-Hopper, but the thing is, Grace is super-competitive with what AMD and Intel have over the next year or two,” says McIntosh-Smith. “I don’t think people have realized what a good CPU it is.”

It will be the task of the GW4 collective to prove just that, in fact. We look forward to the benchmark test results.

The “Shasta” Cray EX server design does not have a way to incorporate Grace-Grace or Grace-Hopper nodes as yet, which is why it is coming in the Cray XD form factor and using whitebox motherboards from Nvidia. Every Cray EX deal we have seen to date has the Slingshot interconnect, and that stands to reason since HPE wants to squeeze all the money it can out of the investment that Cray made in the “Rosetta” interconnects and its own network interface cards. McIntosh-Smith says that the choice was between 200 Gb/sec or 400 Gb/sec InfiniBand or 200 Gb/sec Slingshot 11, and the GW4 collective chose Slingshot because 200 Gb/sec was enough and it was cheaper than InfiniBand.

It is not clear who bid on the Isambard 3 contract. Nvidia has been a prime contractor for a few machines, but generally does not do it even though it can. In the European market, the biggies are HPE and Atos (now Eviden) and maybe Lenovo. We suspect that the bid came down to HPE and Atos and that HPE won given that the last two machines had Cray or HPE as prime contractor.

One last thing. The British government has just committed £900 million ($1.08 billion) to building an exascale supercomputer by 2026, which it needs to do because the United Kingdom is not part of the European Union. (And to be fair, the UK should have always had an exascale system of its own, based on the size of its scientific research and industrial economy.) Within that £900 million, says McIntosh-Smith, is around £100 million earmarked for short-term, relatively quick deployment of AI infrastructure, and the GW4 collective is looking at getting a piece of that action for a full-on Isambard AI machine dedicated specifically to AI training. Think of this as an AI accelerator for Isambard 3.

“There’s nothing official about that,” says McIntosh-Smith. “We reckon that you could put a pretty big GPU system into two modular datacenters alongside the one we have for Isambard 3. But it wouldn’t need that much space, because systems are so dense these days, and it might use a couple of megawatts.”

If Isambard-AI comes to pass, it sounds like it will be a lot higher up on the Top500 rankings.

Yummy! It’ll be great to finally see these CPUs grace the inner sockets of live HPC hardware … as they’ve been quite elusive thus far (as noted by Thomas Hoberg in the “Google invests heavily in GPU compute” TNP story). Hopefully they won’t be delayed by the “sky freaking high” mountains of gold coins that nVidia needs to painstakingly bulldoze into its enormous coffers due to the AI-induced boom in GPU accelerator demand (covered in the other TNP article published today, on etherband and infininet, or vice-versa). 64KB of L1 instruction cache (and another 64KB for L1 data cache), as found in these V2 Neoverses, is the way to go for HPC (not so sure that more channels of LPDDR5 can compensate for no HBM, but am willing to learn!).

64KB, shm64KB! Just yesterday, Sally Ward-Foxton reported on Axelera (French) putting 4MB of L1 on its RISC-V matrix-vector multiply (MVM) accelerator (no OS) — they apparently refer to that as “in-memory compute”, seeing how each ALU/MVM is bathed in sizeable fast cache RAM (that 4MB). It is a rather surprising arch. (as suggested, I think, by Mark Sobkow in the Meta Platforms MTIA TNP piece) but the French appetite knows no bounds (and yet they somehow remain quite slim)!

It’s because the French savor each bite thoroughly….

Speaking of cool French tech., Liam Proven (not in Prague) wrote a very good piece on successful application of exo-cortices (and a bit on exo-skeleta too) ( https://www.theregister.com/2023/05/26/experimental_brain_spine_interface/ ) that features French Clinatec’s (in Grenoble) WIMAGINE implants for Brain Computer Interface (BCI) — very high-tech stuff, human-pilot-tested in Switzerland (with a SuPeRb open-access paper in Nature), and backed-by (get this):

“Recursive Exponentially Weighted N-way Partial Least Squares Regression […] in Brain-Computer Interface”

Waouh! Viva le biomath francaise, and bioengineering!