The national supercomputing centers in the United States, Europe, and China are not only rich enough to build very powerful machines, but they are rich enough, thanks to their national governments, to underwrite and support multiple and somewhat incompatible architectures to hedge their bets and mitigate their risk.

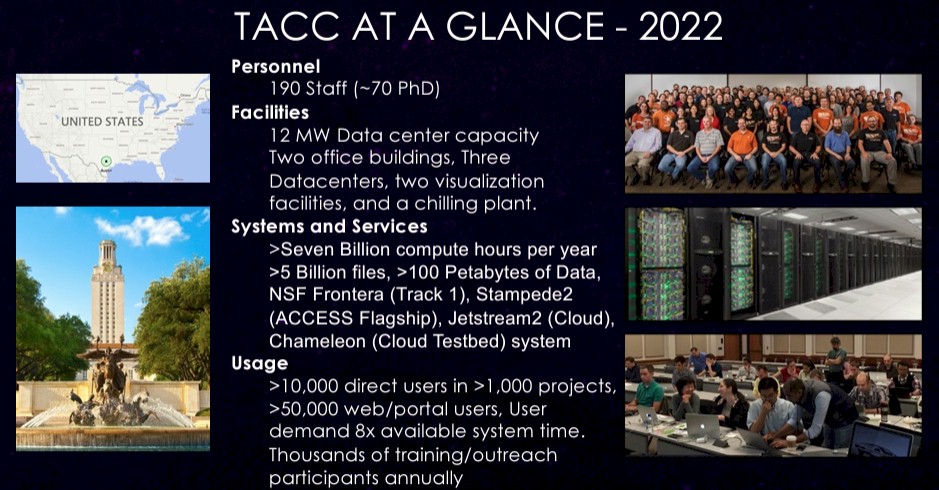

In the United States, the National Science Foundation, working alongside of the Department of Energy, likes to keep its options open as we have seen in the pages of The Next Platform over the past decade, and through the Texas Advanced Computing Center at the University of Texas, which is the flagship facility for the NSF, there is even enough appetite and funding to provide multiple architectures across different vendors inside of TACC.

But ultimately, one compute engine has been chosen for the workhorse system at TACC, and with the recent firing up of the “Vista” hybrid CPU-GPU cluster at the facility, the stage is now set for a three-way horse race between Intel, AMD, and Nvidia to be the compute engine supplier for the future “Horizon” supercomputer whose mandate is to be 10X faster than the current “Frontera” all-CPU supercomputer, which cost $60 million to build and which was installed in 2019.

Before issues with the Intel product lines and the coronavirus pandemic, TACC had expected for a phase two follow-on to Frontera, possibly with some kind of accelerator delivering a lot of or most of its computational capability, to be delivered in 2021, but instead TACC upgraded its companion “Lonestar” and “Stampede” systems and just kept chugging away on the 8,008 two-socket “Cascade Lake” Xeon SP nodes that have a combined 448,448 cores and 38.7 peak petaflops of performance.

The “Lonestar 6” machine installed in 2021 is based on AMD’s “Milan” Epyc 7763 processors, has 71,680 cores providing 3 petaflops of peak F64 oomph for $8.4 million.

Stampede 3 was installed last year and will be in production shortly. The Stampede 3 machine kept 1,064 of the Intel “Skylake” Xeon SP nodes and 224 “Ice Lake” Xeon SP nodes used in the prior Stampede 2 system and added 560 nodes based on Intel’s “Sapphire Rapids” Max Series CPUs, which have HBM2e memory, combining for a total of 137,952 cores (including some experimental nodes using Intel’s “Ponte Vecchio” GPU Max Series accelerators, and just under 10 peak petaflops at FP64 precision.

With the Vista system, Nvidia is getting in on the action. The Vista machine has 600 superchips, which combine a 72-core “Grace” CG100 Arm server CPU with a “Hopper” GH100 GPU accelerator in a coherent memory space. The vector engines on the H100 GPUs alone deliver 20.4 petaflops of peak FP64 performance, and you can double that up to 40.8 petaflops at FP64 on the matrix math units embedded in the H100. Basically, Vista has as much raw oomph as Frontera – provided you can port codes from CPUs to GPUs, of course. So in theory it would only take ten Vistas lashed together to yield the raw performance of 10X times Frontera – which is the goal of the future Horizon supercomputer expected to be housed in a new Leadership Class Computing Facility that TACC is building on the outskirts of Austin in conjunction with co-location datacenter operator Switch.

The facility, known as The Rock, is the fifth prime datacenter site operated by Switch, joining datacenters it operates in Reno, Las Vegas, Atlanta, and Grand Rapids. Here is what the portion of the Austin site where the Horizon supercomputer will be located will look like:

And here is what The Rock datacenter complex being built by Switch will look like:

TACC has taken a very long view into designing the future Horizon supercomputer and the LCCF facility that will house it and other future supercomputers. The NFS funded the initial design work for $3.5 million back in September 2020, and right now NSF is seeking somewhere between $520 million and $620 million to fully fund the LCCF between 2024 and 2027 (those are US government fiscal years that end on July 31 of that year). But in the same document, there is a table that shows a total of $656 million in spending out through F2029. Of this amount, $40 million per year is allocated to operate the LCCF.

The Horizon system is a big part of that budget, but not as much as you might be thinking. Dan Stanzione, associate vice president for research at the University of Texas and executive director of TACC, gave us some insight into the thinking at TACC when we spoke about the Stampede 3 and impending Vista machine before it was announced at the SC23 supercomputing conference in Denver last year. At the time, we said if we were Stanzione, we would be buying some Grace-Grace and some Grace-Hopper machines and get all three of the compute engine vendors in a bidding war, and all he did was laugh.

Presumably because that is exactly the plan.

But actually designing the Horizon system that will be the first machine in the LCCF is not trivial given the diversity of workloads that the NSF.

“We know for the applications that we are profiling for Horizon, 40 percent are in good shape for GPUs at this point,” Stanzione tells The Next Platform. “But that means 60 percent of our big science applications are not. So I have made a commitment that we will have a significant CPU component for Horizon, even though I am going to invest the dollars along roughly the same split as the apps. So 40 percent GPU dollars, which means around 80 percent GPU flops because they’re four or five times cheaper in terms of peak flops.”

Our best guess was that Horizon would be somewhere around the same cost as the “Blue Waters” machine that Cray built for the National Center for Supercomputing Applications at the University of Illinois back in 2011, which cost $188 million and which represented a high water mark for spending on a single system by the NSF. And Stanzione confirmed that the cost of the Horizon system, which we be built in 2025 and operational in 2026, would ne in that ballpark and was “nothing to sneeze at” even compared to the $500 million that the “Frontier” system at Oak Ridge National Laboratories cost when it was installed two years ago or the $400 million that the impending “El Capitan” system being brought up right now at Lawrence Livermore National Laboratory cost. (Those are system costs minus non-recurring engineering, or NRE, costs.)

That leaves the matter of that 10X performance bump compared to Frontera for the applications that currently run on it.

Lars Koesterke, one of the 190 researchers at TACC, put together a presentation on Frontera and Horizon back in March 2023, which we found today while poking around for this story. This one lays out how central TACC has become to HPC in the United States:

The LCCF is rated at 15 MW, by the way, compared to the 12 MW that the current facility at the UT campus is rated at. But there is a lot of room to grow at that switch datacenter outside of Austin, and no hassle about trying to bring more power to the current TACC location.

Also please process this: 7 billion compute-hours per year and 5 billion files. That is its own kind of hyperscale. And having tens of thousands of users and thousands of projects to manage is no joke. We have said it before and we will say it again: In some ways, the hyperscalers have it easy. They manage a few workloads at massive scale. But managing orders of magnitude higher workload counts is its own special kind of nightmare when you are trying to push performance to its absolute limits. And TACC is probably the best HPC center in the world at doing this, with 99.2 percent uptime and 95.4 percent utilization across 1.13 million jobs delivered in the twelve months prior to the presentation put together by Koesterke.

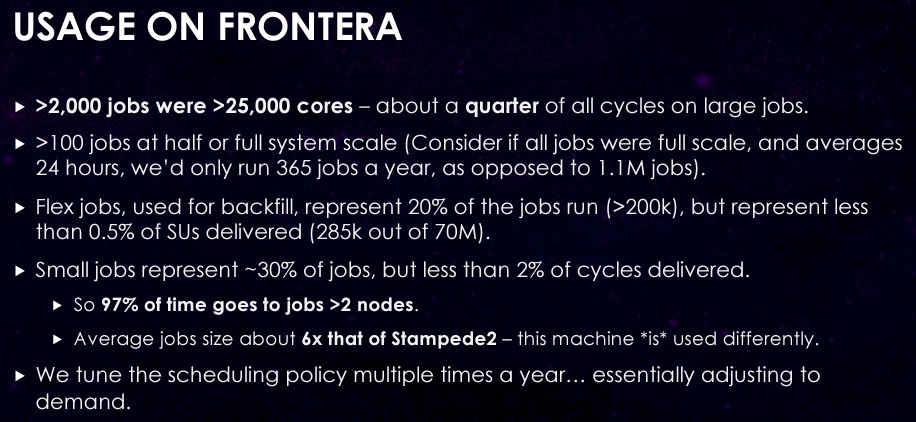

This is the nightmare that Stanzione’s team has to manage at TACC on its flagship machine:

It is the craziest game of Tetris in the world, and that the workload managers can make this happen at all is a testament to human genius.

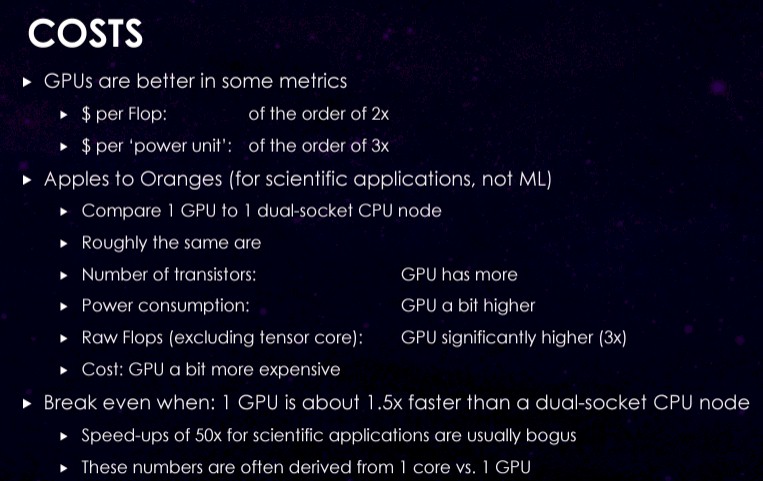

Here is how TACC is thinking about costs as it plots out the architecture for the future Horizon system:

There is some real world, cold water on the face for the HPC crowd to contemplate.

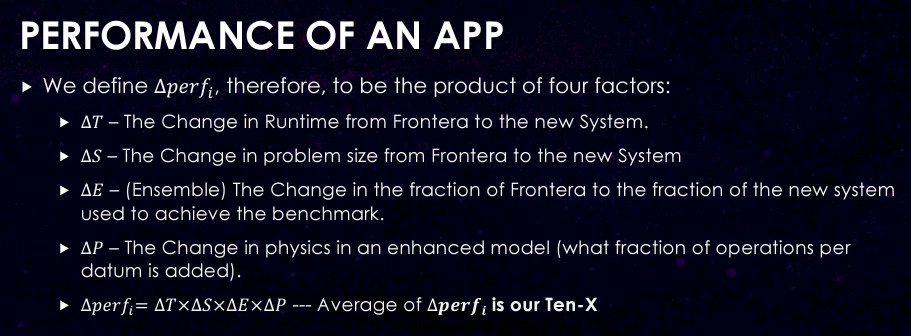

All of this will feed into the design of Horizon, which we think will have a mix of CPU-CPU nodes and CPU-GPU nodes, and one that is designed explicitly to boost the performance of applications by that goal of 10X over Frontera. Koesterke says that there are four factors that TACC looks at when it comes to application performance, and we quote:

- Did the runtime change? (An analog to Strong Scaling –run the same problem in less time).

- Did the problem size change? (An analog to Weak Scaling –run larger problems in fixed time)

- Did we use more or less of the total resource? (An analog to Throughput).

- Did the Physics change? (No good analog).

And that brings us to the actual deltas that TACC will use to drive the Horizon design:

It’s going to average out as the multiplicative of those four factors above, and not all applications will multiply at the same rate over those four factors even on the same CPU and GPU hardware. The goal, over what we presume are the 20 “Characteristic Science Applications” or CSAs that Koesterke went over, which span astronomy and astrophysics, biophysics and biology, computational fluid dynamics, geodynamics and earth systems, and materials engineering, is to get 10X in whatever ways make sense for each individual application given the nature of the code and its mapping to the iron.

The point is, it is not necessarily as simple as building a 400 petaflops CPU-GPU machine, or mix of CPU-only and GPU-accelerated nodes that adds up to 400 petaflops, and calling it a day. TACC has many different codes and many difference customers, unlike the other US national labs, which often have a handful of key codes and lots of money to port code across architectural leaps. While Frontera has a certain number of those capability-class workloads, these do not dominate.

We look forward to seeing how NSF and TACC map the hardware to the jobs. We can all learn a lot from how Horizon will be built.

Be the first to comment