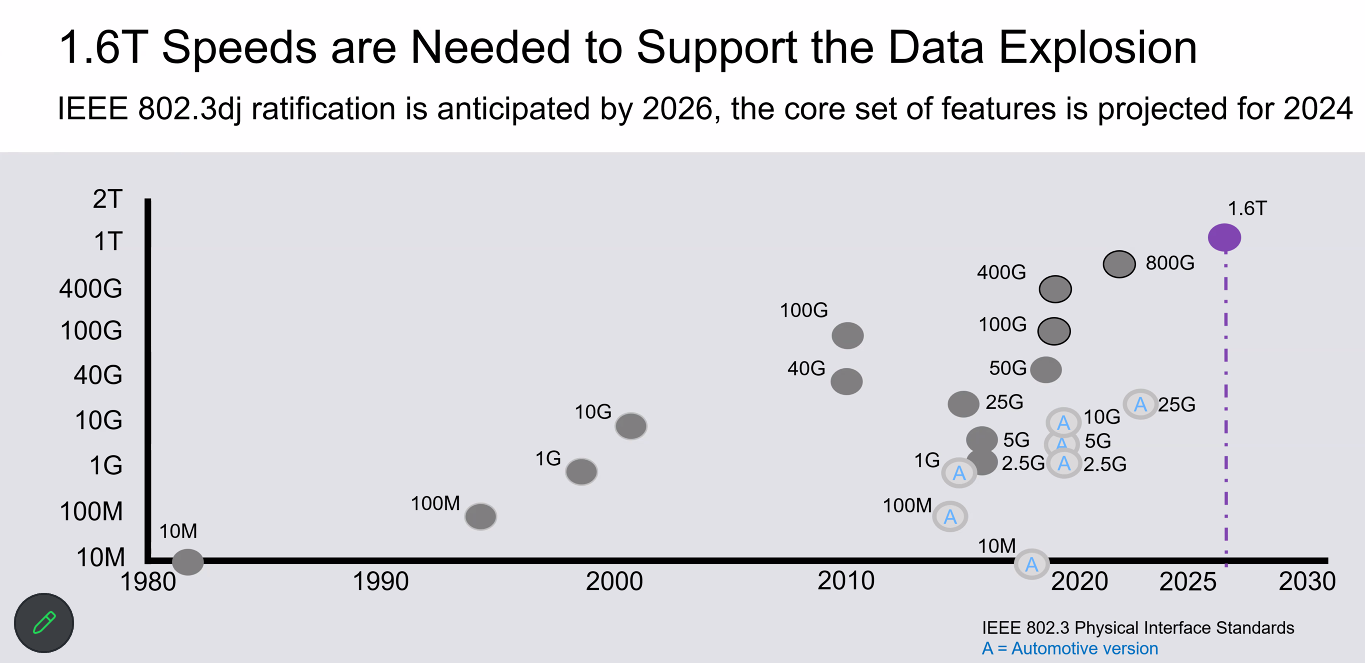

The Ethernet roadmap has had a few bumps and potholes in the four and a half decades since the 10M generation was first published in 1980. Remember the long gap between 10 Gb/sec and 100 Gb/sec, and the 40 Gb/sec bridge between the two that represented the first time we didn’t have a 10X jump in performance? Now, we see 2X and 4X jumps regularly, and frankly it is not enough of a jump to keep up with the most advanced workloads like HPC and AI. But doubling or quadrupling performance is hard, and we have to take the capacity jumps when and where we can get them.

The Ethernet roadmap has tracked the explosion of data brought on by the cloud, the Internet of Things (IoT), and, most recently, the rise of machine learning, with generative AI giving it the latest boost and, we would argue, the biggest boost we have seen in decades. Given that, it’s not surprising that seemingly everything in IT now has “AI” connected to it, much as happened when Internet technology, then virtualization, and then cloud came onto the scene.

However, when it comes to the need for faster and more power-efficient connectivity in hyperscale datacenters and among hardware developers, AI is moving to the front of the drivers fueling the rapidly rising demand, says Michael Posner, vice president of product management for Synopsys’ high performance computing IP solutions.

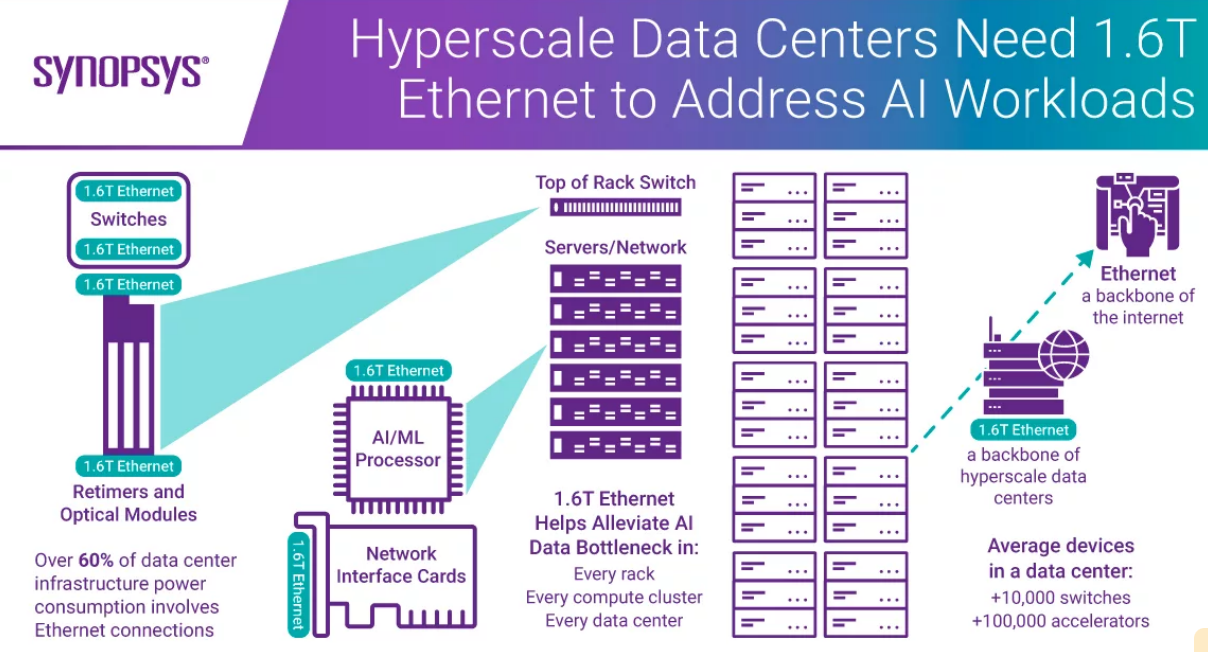

“AI requires more processing power, more computational power, but also it is really stressing the interconnect, either the interconnect between datacenter units from a server to an accelerator or to the switch or top-of-the-rack switch,” Posner tells The Next Platform. “That is now challenging datacenter connectivity. Hyperscalers today are addressing this challenge by scaling. They’re taking their existing infrastructure, they’re building new datacenters based on it and that is obviously balancing the load. It’s predicted that those datacenters are going to grow by 3x over about six years. But what that doesn’t do is address some of the base-level challenges.”
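As a rough aid to reading the growth figure Posner cites, the sketch below converts "3x over about six years" into an equivalent compound annual rate. Treating the growth as smooth compounding is our assumption, not something stated in the quote, and the function name is purely illustrative.

```python
def annual_growth_rate(multiple: float, years: float) -> float:
    """Compound annual growth rate implied by growing `multiple`-fold in `years`.

    Assumes smooth year-over-year compounding, which is a simplification.
    """
    if multiple <= 0 or years <= 0:
        raise ValueError("multiple and years must be positive")
    return multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Figures from the quote: roughly 3x growth over about six years.
    rate = annual_growth_rate(3.0, 6.0)
    print(f"Implied annual growth: {rate:.1%}")
```

Under that assumption the figure works out to roughly 20 percent growth per year, which is why scaling out with unchanged hardware would also scale the power bill at about the same pace.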

Those challenges in particular are power consumption and latency. We find this hard to believe, but Ethernet switches, adapters, and cables (including retimers and optical modules) make up about 60 percent of the power consumption in datacenters that house increasingly disaggregated architecture comprising servers, storage, and networking that needs to be tied together. All this explains the need for accelerated innovation around the interconnect technology. If hyperscalers continue scaling with the same hardware, it’s also going to mean scaling their power profiles. New hardware is needed, Posner says.

Those are driving factors behind the ongoing development by the IEEE of 1.6T Ethernet. Ratification of the standard is expected in 2026, but a baseline set of features is being completed this year through the IEEE 802.3dj task force.

That’s where Synopsys comes in. The company offers electronic design automation (EDA) software for systems and silicon and earlier this month reported a 21 percent year-over-year jump in quarterly revenue to almost $1.65 billion. That came weeks after the company put up $35 billion to buy Ansys, the number-one supplier of HPC simulation software for modeling objects in the real world. It’s a busy company.

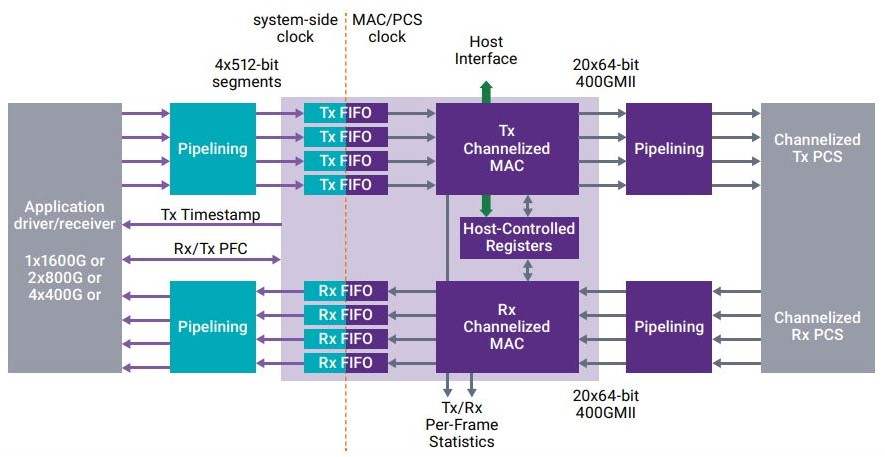

This week, Synopsys is rolling out a 1.6T Ethernet IP package that includes multi-channel and multi-rate MAC and PCS Ethernet controllers that support up to 1.6 Tb/sec; 224 Gb/sec Ethernet PHY signaling that can be customized to support chip-to-chip, chip-to-module, and copper cable connections; and accelerated verification.

According to Synopsys, this package addresses the challenges faced by hyperscalers and cloud builders and their interconnect partners. The collective IP is simulated to cut the overall Ethernet power consumption by half compared with what is in datacenters now and the controllers reduce latency by 40 percent and area by up to 50 percent – thanks to Synopsys’ patented algorithms in the IP – over existing multi-rate 800 Gb/sec IP controllers. The controllers ensure backward compatibility, supporting not only 1.6 Tb/sec but also 200 Gb/sec, 400 Gb/sec, and 800 Gb/sec Ethernet, and the subsystem is customizable to users’ needs.

“The reason [Ethernet] is so popular is it’s an established technology,” Posner says. “Datacenters’ interconnectivity is all based on it. We’ve now got demand for additional data, so by going to 1.6T Ethernet, you’re doubling the bandwidth of the link. You then need to think about how that’s going to be achieved. Scaling a datacenter doesn’t address it. To scale for the future, you need to change your silicon. You need to change the underlying architecture. This Ethernet IP is not tailored to a specific application. It’s not tailored to be a switch or a server. It is a general-purpose 1.6T Ethernet solution, which can be configured and tailored to that application.”

The goal is to give hyperscalers some breathing room while the pace of innovation, particularly around predictive and generative AI, speeds up. It’s clear that datacenters are going to have to keep improving at a similar cadence just to keep pace. The introduction of 1.6 Tb/sec Ethernet ports does that, at least for now, Posner says. It doubles the bandwidth of what is available in most datacenters today. That’s important, given the hockey-stick trend that the growth of data generation is on. In 2020, the amount of data created, copied, and consumed hit 64.2 zettabytes; next year that number is expected to reach 181 ZB, stretching from on-premises datacenters to the cloud and edge.

The thing to remember is that Ethernet has had a long life up to this point and it will continue on into the future, with 1.6 Tb/sec just being the latest step in the journey, he says.

“It’s a huge step, just as going from 10BASE-T Ethernet to 100BASE-T was a huge step,” Posner says, and we will remind everyone that the jump from 10 Gb/sec to 100 Gb/sec was really three steps: first to 40 Gb/sec using 10 Gb/sec signaling, then to 100 Gb/sec using 10 Gb/sec signaling, which was largely ignored by the hyperscalers and cloud builders, and finally to 100 Gb/sec using 25 Gb/sec signaling, which offered lower power and lower cost. “1.6T addresses the challenges that are faced today and then gives us breathing room for the future. This is technology that also has a very long lifespan. 800G based on 112G Ethernet PHYs has been around for five years and it’s predicted that design starts will continue for another five years. The infrastructure will continue to evolve, hopefully in parallel to the demands. This is a way just to get a little bit ahead of the power profile and reduce the latency.”
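The relationship between port speed and per-lane signaling rate mentioned above comes down to simple division, and a small sketch makes the lane counts explicit. The nominal payload rates of 100 Gb/sec and 200 Gb/sec for "112G" and "224G" PHYs are our assumption here (the raw signaling rate includes coding overhead), and the function and table names are illustrative only.

```python
def lanes_needed(port_gbps: int, lane_gbps: int) -> int:
    """Number of electrical lanes of `lane_gbps` needed for a `port_gbps` port."""
    if port_gbps % lane_gbps:
        raise ValueError("port speed is not a whole multiple of lane speed")
    return port_gbps // lane_gbps


# (label, port speed in Gb/sec, nominal per-lane payload rate in Gb/sec)
# Assumption: 112G-class and 224G-class PHYs carry about 100 and 200 Gb/sec
# of Ethernet payload per lane, respectively.
GENERATIONS = [
    ("40G on 10G signaling", 40, 10),
    ("100G on 10G signaling", 100, 10),
    ("100G on 25G signaling", 100, 25),
    ("800G on 112G-class PHYs", 800, 100),
    ("1.6T on 224G-class PHYs", 1600, 200),
]


def lane_table() -> dict:
    """Map each generation label to its lane count."""
    return {name: lanes_needed(port, lane) for name, port, lane in GENERATIONS}


if __name__ == "__main__":
    for name, lanes in lane_table().items():
        print(f"{name}: {lanes} lanes")
```

Seen this way, 100 Gb/sec on 25 Gb/sec signaling needed far fewer lanes than the same port speed on 10 Gb/sec signaling, which is consistent with the lower power and cost noted above, and 1.6T keeps the same lane count as 800G while doubling the rate per lane.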

Which means, once again, that the hyperscalers and cloud builders and their interconnect partners are blazing the trail that will eventually turn into a four-lane highway for the other enterprises in the world who don’t quite need 1.6T yet. Except in their AI and HPC systems, of course.

It’s good to see Synopsys bringing competition to this space, especially with a 50 percent reduction in power consumption and a 40 percent reduction in latency. I wonder how this compares with the designs from Marvell, which seems to be the current leader ( https://www.nextplatform.com/2023/03/07/setting-the-stage-for-1-6t-ethernet-and-driving-800g-now/ , https://www.nextplatform.com/2023/05/15/the-future-of-ai-training-demands-optical-interconnects/ , https://www.nextplatform.com/2023/09/27/ai-means-re-architecting-the-datacenter-network/ ).