Chip makers and partners Intel and Micron Technology unveiled their jointly developed and manufactured 3D XPoint memory three weeks ago to much fanfare, but it is still perhaps sinking in to system architects and future system buyers how dramatic a move this is and how much it will change the memory hierarchy in systems and the applications that ride on it.

Both companies have been very tight-lipped about the change in the bulk material processes that will be used to create 3D XPoint memory, which can split the difference between volatile DRAM and non-volatile NAND flash in terms of performance, addressability, and cost. To put it simply, 3D XPoint will have about 1,000 times the performance of NAND flash, 1,000 times the endurance of NAND flash, and about 10 times the density of DRAM. Intel is not yet giving away specific performance details, but Bill Leszinske, director of strategic planning and business development at Intel’s NVM Solutions Group, tells The Next Platform that the performance will be closer to DRAM than to NAND flash. That is an important hint: latency somewhere on the order of nanoseconds, compared to microseconds for NAND flash and milliseconds for disk drives. The interesting part about 3D XPoint is that it will be bit addressable, like DRAM, and non-volatile, like NAND flash. The cost of capacity will also land somewhere between that of DRAM and NAND flash, but where that price slider ends up depends on demand, the manufacturing ramp, and other factors.
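To make the nanosecond/microsecond/millisecond tiers concrete, here is a small sketch using round, assumed order-of-magnitude latencies (100 ns for DRAM, 100 microseconds for NAND flash, 10 ms for disk). These are illustrative placeholders, not Intel or Micron figures, and the helper names are hypothetical. It also assumes, for illustration only, that the "1,000 times the performance" claim applies to access latency.

```python
# Round, assumed latencies in seconds; order-of-magnitude placeholders, not vendor specs.
LATENCY_S = {
    "DRAM": 100e-9,        # ~100 ns (assumed)
    "NAND flash": 100e-6,  # ~100 microseconds (assumed)
    "disk drive": 10e-3,   # ~10 ms (assumed)
}


def slowdown(baseline: str, other: str) -> float:
    """How many times longer an access to `other` takes than one to `baseline`."""
    return LATENCY_S[other] / LATENCY_S[baseline]


def latency_from_speedup(reference_s: float, speedup: float = 1000.0) -> float:
    """Latency implied by a claimed speedup over a reference medium.

    Assumes the "1,000 times the performance" claim applies to access latency.
    """
    return reference_s / speedup


if __name__ == "__main__":
    for medium, seconds in LATENCY_S.items():
        print(f"{medium:>10}: {seconds * 1e9:>12,.0f} ns")
    implied = latency_from_speedup(LATENCY_S["NAND flash"])
    print(f"Implied 3D XPoint latency: {implied * 1e9:,.0f} ns")
```

With these placeholder numbers, a 1,000x improvement over NAND lands in the same range as DRAM, which is consistent with Leszinske's "closer to DRAM" hint; real parts will differ.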

Perhaps the most important thing that Leszinske confirmed, if it was not already obvious given the announcement a few weeks ago, is that Intel’s search for an intermediate technology in the memory hierarchy, which everyone knows we need, is over.

“We absolutely see 3D XPoint and Optane technology as filling that gap between DRAM and NAND from a time and latency perspective,” says Leszinske. “We are betting heavily on it, and there is a ton of memory solutions out there to replace DRAM and NAND. We have made our decision after doing broad research after a long period of time that this is right and we are going to bring it to production. It fills the need that exists in the memory hierarchy.”

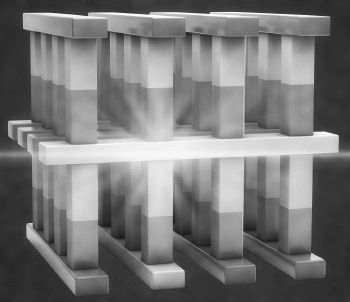

Precisely what 3D XPoint memory is, neither Intel nor Micron will say, but many believe that it is a variant of phase-change memory, with innovations in materials and a 3D stacking technique that allows memory cells to be layered; that layering, along with traditional process scaling, increases the density of the memory chips. The initial 3D XPoint memory chips that Intel and Micron are producing in their joint fab in Lehi, Utah have 128 Gbits of capacity and use two layers of cells; that works out to 16 GB of capacity per chip.
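The capacity arithmetic is simple but easy to slip on, since chip capacity is quoted in bits and module capacity in bytes. The sketch below converts the 128 Gbit die to gigabytes and, purely as a hypothetical example, counts how many such dies a device of a given size would need. The per-layer split assumes both layers hold equal shares, which neither company has stated, and the raw chip count ignores ECC and spare area.

```python
import math

BITS_PER_BYTE = 8


def gbit_to_gbyte(gbits: float) -> float:
    """Convert gigabits to gigabytes (same prefix convention on both sides)."""
    return gbits / BITS_PER_BYTE


def per_layer_gbit(chip_gbit: float, layers: int) -> float:
    """Capacity per layer, assuming the layers hold equal shares (an assumption)."""
    return chip_gbit / layers


def chips_needed(target_gbyte: float, chip_gbit: float = 128) -> int:
    """Raw die count for a target capacity, ignoring ECC and spare area (hypothetical)."""
    return math.ceil(target_gbyte / gbit_to_gbyte(chip_gbit))


if __name__ == "__main__":
    print(gbit_to_gbyte(128))       # 16.0 GB per 128 Gbit die
    print(per_layer_gbit(128, 2))   # 64.0 Gbit per layer, if split evenly
    print(chips_needed(128))        # 8 dies for a hypothetical 128 GB device
```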

The big reveal at the Intel Developer Forum this week is that 3D XPoint memory technologies from Intel will be sold under the Optane brand. Optane technology refers to the set of controllers, firmware, and drivers that Intel creates to turn 3D XPoint memory chips into solid state drives or server memory sticks, the two form factors it said were coming first from Intel. Micron will sell its own 3D XPoint products, and may even sell raw 3D XPoint chips; Intel has no plans to do so, according to Leszinske. The two companies collaborate on design and manufacturing, but compete in the storage marketplace.

The Optane-branded SSDs will be the first 3D XPoint products to appear from Intel, according to Leszinske, and thus far all the company will say is that they will be coming sometime in 2016. Intel also divulged at IDF that a future and unnamed Xeon server processor will have the capability of using Optane memory DIMMs. Intel did not say what Xeon processor would come with such support, but as The Next Platform divulged back in May, the future “Purley” server platform based on “Skylake” Xeon processors was expected to have a new memory architecture that included memory that was four times as dense as DRAM, lower cost than DRAM, and 500 times faster than NAND while also offering persistence. This sounds an awful lot like 3D XPoint memory sticks to us, perhaps with less aggressive comparisons than Intel and Micron used at the 3D XPoint launch a few weeks ago.

We also suspect that 3D XPoint memory will be featured prominently in the future “Aurora” supercomputer at Argonne National Laboratory, which will sport the massively parallel “Knights Hill” Xeon Phi processor, but Leszinske was unable to comment on this. As we reported back in May, the Aurora system will have more than 7 PB of memory, which Intel characterized as a mix of high bandwidth memory on the processor package, local memory, and persistent memory. We would bet that this persistent memory is 3D XPoint, now known as Optane. The question is: what form factor will it take?

Form Follows Function

There will be options for Aurora and other systems of all shapes and sizes. Intel is getting ready to sell 3D NAND flash memory (which it also makes in conjunction with Micron) that can be put onto the M.2 “gum stick” form factor that is used in consumer devices and, in a few cases, in storage arrays and servers for boot drives and flash acceleration. (Microsoft has M.2 flash in its Open Cloud Server designs that were donated to the Open Compute Project.) The U.2 form factor, formerly known as SFF-8639, is used with 2.5-inch flash SSDs to mimic the form factor of legacy disk drives. And there are also normal PCI-Express flash cards that plug into server slots. Intel makes NAND flash products in all of these form factors today, and Leszinske said it would be reasonable to expect 3D XPoint to eventually come to all of them, too.

Intel and Micron have some experience in ramping up production of 2D and now 3D NAND flash, and they are obviously trying to predict demand for 3D XPoint and meet it. The Lehi foundry has been producing 3D XPoint wafers, and Leszinske says that the same foundries that make 2D and 3D NAND flash chips can make 3D XPoint chips.

“As demand takes off, we have the ability to convert existing Micron factories, and when we announced 3D NAND, we mentioned that we can run 3D NAND or 3D XPoint in our own factories if we chose to do so,” says Leszinske. “Obviously we look a lot out into the demand and make estimates because the time to retrofit is not overnight. We can meet demand, and we have a plan on how we will go and do that.”

Memory processes and logic processes are different, so this is no easy conversion for Intel to make. With logic circuits like CPUs, a chip either works or it doesn’t, so the processes used to make logic devices are more precise and, as a result, inherently a little bit slower in terms of manufacturing capacity. With memory chips, it is all about cost, so you put redundant capacity in the chips so that when chunks of it don’t work right, you ignore the bad bits (literally and figuratively) and try to whip as many wafers through the fab as fast as you can. Leszinske did not say this, but CPU and memory chips use different wafer baking processes, too; it would be interesting to see them align to the benefit of Intel and Micron.
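The redundancy idea can be illustrated with a toy model in which a memory die carries a few spare rows, and defective rows found at test time are remapped onto them. This is a generic sketch of row-redundancy repair with hypothetical class names and made-up sizes, not a description of how Intel or Micron actually repair 3D XPoint dies.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryArray:
    """Toy memory array with spare rows for repairing defects (hypothetical model)."""

    rows: int                # normal, addressable rows
    spare_rows: int          # redundant rows held in reserve
    remap: dict[int, int] = field(default_factory=dict)

    def repair(self, bad_rows: set[int]) -> bool:
        """Remap each defective row to a spare row.

        Returns False when there are more defects than spares, in which case
        the die cannot be fully repaired (it would be scrapped or binned).
        """
        if len(bad_rows) > self.spare_rows:
            return False
        # Spare rows are numbered after the normal rows: rows, rows + 1, ...
        self.remap = {bad: self.rows + i for i, bad in enumerate(sorted(bad_rows))}
        return True

    def physical_row(self, logical_row: int) -> int:
        """Row actually accessed for a logical address after repair."""
        return self.remap.get(logical_row, logical_row)


if __name__ == "__main__":
    die = MemoryArray(rows=1024, spare_rows=4)
    print(die.repair({3, 700}))   # True: two defects, four spares
    print(die.physical_row(3))    # 1024 (first spare)
    print(die.physical_row(5))    # 5 (healthy row, untouched)
```

The trade-off the paragraph describes shows up directly: more spare rows cost die area but let more imperfect dies ship, which favors throughput over per-chip perfection.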

It seems that XPoint will reach “ultrabooks” in 2016. An XPoint launch seems likely later in 2016, but it might be worth postponing a laptop purchase to get 16 or 32 GB of XPoint for holding the OS.

Or put 3D XPoint and DRAM together on a DIMM package and give the old lappy some boost. Or a new mobile motherboard specification could incorporate XPoint modules into the motherboard via a dedicated channel. Add to that some much cheaper SLC NAND-based SSDs to fill the drive bay on the laptop. XPoint and its competing technologies will give NAND a very short time in the limelight before NAND gets pushed down the list just like HDDs. So long TLC NAND, and most likely MLC(2) NAND, with SLC NAND the only option for SSDs after XPoint and similar technologies change the market dynamics. Ultrabooks I could do without; I’d rather have a regular form factor laptop that can run the CPU/APU/SoC without so much thermal throttling.

APUs and CPUs are too thermally restricted in ultrabooks, and it’s a shame to have a 35-watt part so thermally constrained in those thin-and-light abominations that it is only allowed to run at 15 watts.

It’s more sad than cute to see such a set of utter misunderstandings of what NAND flash is and where it is going.

Hint: SLC NAND isn’t the cheaper option. TLC (and soon QLC for vNAND) is.

As for “pushing NAND down the list”, don’t expect to see anything do that for at least 7-8 years. Remember, phase-change memory has been around since 1967; it doesn’t suddenly go from an also-ran to the leading technology.

Sad only in that the NAND flash manufacturers have taken to calling TLC NAND by the moniker MLC(3) in an effort to distance themselves from TLC’s data retention problems! Those problems were so bad that the controllers on TLC-based NAND products were so busy with error correction that the SSDs’ read performance became relatively worse than even an HDD’s. So now the NAND producers’ marketing materials state only MLC(3) instead of TLC. Technically, anything above SLC can be, and is, referred to as MLC(#), meaning 2, 3 (TLC), or more bits per cell, but NAND for long-term storage will always remain suspect, more so with the cell nomenclature shenanigans. Cross-point will in fact be at the top, forcing the NAND manufacturers to go all SLC NAND to obtain read/write margins even remotely comparable to the superior read/write speeds of Cross-point and similar technologies. And Cross-point memory is bit addressable, so there is no more having to read an entire block just to change a single byte’s value, as with NAND flash. It will be SLC NAND only, and still an uphill struggle against Cross-point and other newer technologies, for the makers of NAND flash going forward.
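The block-versus-bit-addressable point can be put in numbers with a toy model of how many bytes a medium must physically program to change a few bytes. The granularities below are assumptions: a 4 KiB NAND page, a 64-byte cache line as a realistic unit for memory-bus writes, and an idealized 1-byte unit standing in for bit addressability. The model ignores NAND erase blocks (which are much larger than pages) and flash translation layer remapping, and nothing in the article states 3D XPoint’s actual internal write granularity; the helper names are hypothetical.

```python
# Assumed write granularities in bytes; real devices vary.
NAND_PAGE = 4096   # assumed NAND program unit: a whole page is written at once
CACHE_LINE = 64    # typical CPU cache line, a realistic unit for memory-bus writes
BYTE = 1           # idealized byte-addressable medium


def bytes_written(update_size: int, granularity: int, offset: int = 0) -> int:
    """Bytes the medium must program to change `update_size` bytes at `offset`."""
    if update_size < 1:
        raise ValueError("update_size must be at least 1 byte")
    first_unit = offset // granularity
    last_unit = (offset + update_size - 1) // granularity
    return (last_unit - first_unit + 1) * granularity


def write_amplification(update_size: int, granularity: int, offset: int = 0) -> float:
    """Ratio of bytes physically written to bytes the program asked to change."""
    return bytes_written(update_size, granularity, offset) / update_size


if __name__ == "__main__":
    for name, unit in [("NAND page", NAND_PAGE), ("cache line", CACHE_LINE), ("byte", BYTE)]:
        print(f"{name:>10}: 1-byte update writes {bytes_written(1, unit)} bytes")
```

Note the `offset` parameter: an update that straddles a unit boundary touches two units, so even a small write can cost twice the granularity.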

I expect the price per GB of the new memory to be much closer to RAM prices than to SSD prices, so I find it weird that the higher-capacity application will arrive first.

Also that figure about getting 500 times faster than RAM was probably misunderstood or misquoted.

What really interests me is how this will be used on DIMMs and how it will interface with the CPU like NVMe.

With the Skylake Purley CPUs, they must be planning to put it as close to the processor as possible to get the most bandwidth and the least latency, so I wonder if the controller will be on-die or on-package.

I also wonder if Kaby Lake is pushing the 10nm Cannonlake chips back because Intel will integrate XPoint controllers on the Cannonlake E3s as well. That’s probably not the reason, but I wonder where else the XPoint DIMMs will end up.

Nothing too weird or interesting there: it will use not just the DIMM form factor but also the DIMM protocols and interface, so it will look just like a RAM stick to the OS. I guess they will only add something in the SPD info to indicate that it is NVM (not NVMe, which is an SSD interface) for future systems that care about that difference.

How future systems will make use of that non-volatility is a different question. I expect they will be able to store the registers and other internal CPU state and resume operation right where they powered off, without having to dump all of RAM to disk.

But doing that on command, like the current hibernate commands, does not seem all that impressive. Dumping/reloading the RAM takes under a second with today’s SSD speeds so the NVM benefit doesn’t seem big here. What will actually be a huge breakthrough is if it’s able to do it as part of normal CPU operation, so it is able to resume after any unexpected power loss. It will be interesting to see future developments in this regard.
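Whether dumping or reloading RAM fits in under a second depends on how much memory is actually in use and on the drive’s sustained bandwidth; the estimate is a simple division. The sketch below uses hypothetical drive speeds and assumes a full, uncompressed image moved at a steady sequential rate. Real hibernation implementations can write only in-use pages and may compress them, so the figures are an upper bound on data moved in each direction.

```python
GIB = 2**30  # bytes in a gibibyte


def transfer_seconds(ram_gib: float, bandwidth_gb_s: float) -> float:
    """Seconds to move `ram_gib` GiB at a sustained `bandwidth_gb_s` (decimal GB/s)."""
    return ram_gib * GIB / (bandwidth_gb_s * 1e9)


def max_ram_gib(budget_s: float, bandwidth_gb_s: float) -> float:
    """Largest RAM image (GiB) that fits in `budget_s` seconds at that bandwidth."""
    return budget_s * bandwidth_gb_s * 1e9 / GIB


if __name__ == "__main__":
    # Hypothetical drive speeds; sustained sequential throughput is assumed.
    for bw in (0.5, 2.0):
        print(f"{bw} GB/s: 8 GiB takes {transfer_seconds(8, bw):.2f} s; "
              f"1 s budget fits {max_ram_gib(1.0, bw):.2f} GiB")
```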

Re: “What will actually be a huge breakthrough is if it’s able to do it as part of normal CPU operation, so it is able to resume after any unexpected power loss. It will be interesting to see future developments in this regard.” I wonder if future architectures will leverage this capability to reduce power usage and extend battery life through intelligent micro power management much like a hybrid car will shut off at a stop light and instantly resume when the pedal (or key) is depressed.

My personal view is that AI software is about 20 years behind the hardware. In reality, I would say the human brain is an engineering feat, but quite knowable. I would guess it is equivalent to about 128 GB, mostly in prediction-tree-like arrangements. At 7 petabytes, the supercomputer mentioned would have about 56,000 times the capacity of the human brain. Maybe it can put some manners on the human species.
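The “about 56,000” figure can be checked directly. The 7 PB comes from the article’s description of Aurora (“more than 7 PB”), and the 128 GB brain estimate is the commenter’s own guess. The ratio shifts depending on whether decimal or binary prefixes are used, and the quoted figure sits between the two.

```python
PETA, GIGA = 10**15, 10**9   # decimal prefixes
PEBI, GIBI = 2**50, 2**30    # binary prefixes


def capacity_ratio(big: int, small: int) -> float:
    """How many times `small` fits into `big` (both in bytes)."""
    return big / small


# 7 PB for the Aurora system, from the article; 128 GB is the commenter's own estimate.
decimal_ratio = capacity_ratio(7 * PETA, 128 * GIGA)
binary_ratio = capacity_ratio(7 * PEBI, 128 * GIBI)

if __name__ == "__main__":
    print(decimal_ratio)  # 54687.5
    print(binary_ratio)   # 57344.0
```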