There is a misconception out there that the hyperscalers of the world are so rich that they always have the shiniest new toys in their datacenters. This is not correct. Hyperscalers are restrained by the same laws of physics and the same rules of accounting and budgeting as the rest of the IT sector.

That said, they do push up against boundaries earlier, and how they react to those boundaries is inherently interesting because these large organizations are a kind of leading indicator for directions in which large enterprises might eventually head when they hit a wall or a ceiling in some aspect of their infrastructure. This is why we watch Facebook very closely – or, to be more precise, as closely as the company allows anyone to watch it.

The social network was not the first hyperscaler to design its own server and storage infrastructure as well as datacenters – Google was first, followed by Amazon and then probably Microsoft – but Facebook was the first of the upper echelon to open source its server, storage, datacenter, and now switch designs through the Open Compute Project that it founded in April 2011. Opening up servers and storage servers was relatively easy, given the ubiquity of both X86 processors and the Linux operating system, but cultivating an open ecosystem of network switches that can scale across the 100,000-node datacenters that Facebook operates to support its 1.6 billion users has taken more time, and Facebook is still largely using switches made by Cisco Systems, Arista Networks, and others because of this.

Over time, unless vendors adopt Open Compute form factors, disaggregate the switch hardware from the network operating system, and allow switches to be managed precisely like servers, this will change. And we think that large enterprises that are tired of paying for Cisco’s 65 percent gross profit margins – even though they may love Cisco’s switches – will keep a keen eye on what Facebook is doing with its networks, what Google has been doing for almost a decade, and what Amazon Web Services has been doing for almost as long.

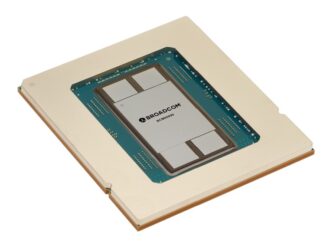

As Facebook network operations chief Najam Ahmad told The Next Platform in great detail back in March, the company is adamant about breaking up the elements of the switch and individually opening them up, in effect making switches look more like servers and less like the sealed black boxes that switch makers peddle today. As Ahmad explained, Facebook is all Ethernet and uses a lot of 40 Gb/sec in the spine of its networks, with the leaves providing 40 Gb/sec uplinks and 10 Gb/sec downlinks to the servers, which have 10 Gb/sec network interface cards. (More than 60 percent of servers in the world, by contrast, are still using 1 Gb/sec Ethernet ports, if you can believe it, which is why network ASIC vendor Broadcom is still rolling out improved Trident-II+ chips to make 10 Gb/sec networking cheaper and easier for enterprises.)

The Wedge top-of-rack and 6-pack aggregation switches developed by Facebook for its own datacenters, which we detailed back in February, are based on an earlier generation of Broadcom Trident-II switch chips. The neat thing about the Wedge and 6-pack designs is that they are all based on essentially the same Wedge system boards. The 6-pack modular switch is just a network enclosure that uses eight Wedge switches as line cards and four more Wedge boards to create a fabric linking those line cards together.

Each Wedge board has a single Trident-II chip for switching and can handle 1.28 Tb/sec of switching capacity in duplex mode at Layer 2 or Layer 3, with a forwarding rate of 1.44 billion packets per second. The Wedge also has a Panther+ microserver created by Facebook using a single four-core Intel Atom C2550, which is used to run parts of the network stack and is what makes the switch updatable like a server and amenable to Facebook’s Kobold and FBAR system management tools. While the Trident-II ASIC can drive 32 ports at 40 Gb/sec speeds, Facebook is only pushing it to 16 ports. (The reason seems to be that Facebook wants to keep half the bandwidth to expose in the aggregation switch.) The company uses four-way splitter cables to chop each 40 Gb/sec port down to the 10 Gb/sec that each server network interface needs. Each line card and fabric card in the 6-pack aggregation switch is a pair of Wedge boards glued together, sharing work.
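The port arithmetic in this paragraph is easy to check. The sketch below uses only the figures quoted above; the variable names are ours rather than anything from Facebook’s tooling, and the server-link count is an upper bound that assumes all 16 ports in use are split four ways, which is not something Facebook has stated (some of those ports presumably serve as 40 Gb/sec uplinks).

```python
# Back-of-envelope port math for the original Wedge.
# Figures come from the article; names are illustrative only.
ASIC_PORTS = 32        # 40 Gb/sec ports the Trident-II can drive
PORT_SPEED_GBPS = 40
PORTS_IN_USE = 16      # ports Facebook actually uses on the Wedge
SPLIT_WAYS = 4         # four-way splitter cable per 40 Gb/sec port


def aggregate_gbps(ports: int, speed_gbps: int) -> int:
    """Total bandwidth across a set of identical ports, in Gb/sec."""
    return ports * speed_gbps


# 32 x 40 Gb/sec matches the 1.28 Tb/sec switching capacity quoted for the chip.
asic_capacity_gbps = aggregate_gbps(ASIC_PORTS, PORT_SPEED_GBPS)

# Each split leg runs at a quarter of the port speed.
link_speed_gbps = PORT_SPEED_GBPS // SPLIT_WAYS

# ASSUMPTION: every port in use is split; the real number of server links is
# lower if some ports are kept whole as uplinks.
max_server_links = PORTS_IN_USE * SPLIT_WAYS

print(f"ASIC capacity: {asic_capacity_gbps / 1000:.2f} Tb/sec")
print(f"Server links (upper bound): {max_server_links} x {link_speed_gbps} Gb/sec")
```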

Facebook engineers Jasmeet Bagga and Zhiping Yao announced in a recent blog post that the company has deployed thousands of Wedge switches in Facebook datacenters, and shared some experience of building a switch like a server and treating it like one in the field. And just to make it clear to Cisco, Arista, and others that this is not some ruse to try to get deep discounts, the Facebook engineers said this: “As exciting as the Wedge and FBOSS journey has been, we still have a lot of work ahead of us. Eventually, our goal is to use Wedge for every top-of-rack switch throughout our datacenters.”

That is a lot of iron that is going to get displaced – easily tens of thousands of current switches, and many times that in lost future business.

Earlier this year, Ahmad hinted that Facebook was looking ahead to 100 Gb/sec speeds to complement the 25 Gb/sec and 50 Gb/sec ports it would eventually be adding to servers. Only a few weeks later, Facebook unveiled its “Yosemite” microserver at the Open Compute Summit using Intel’s ARM-blunting, single-socket Xeon D processor. This microserver aggregates and shares four 25 Gb/sec ports across the servers and uplinks to the top-of-rack switch using a single 100 Gb/sec link. If you have that link in a server, you need a matching one in the switch.

Enter the Wedge 100, which will support 32 ports running at 100 Gb/sec speeds. Facebook did not divulge which network ASIC it is using, but it could employ the Tomahawk chip from Broadcom, the Spectrum chip from Mellanox Technologies, or the XPliant chip from Cavium Networks to build the Wedge 100. In fact, you can bet that the design was created precisely so the switch ASIC and the compute elements are modular so they can be swapped out at will. Again, this is the point of an open, modular design.

The Tomahawk ASIC has over 7 billion transistors, which is almost as many as on the Intel “Knights Landing” Xeon Phi parallel processor, which weighs in at over 8 billion and which is the fattest device we have seen. The Tomahawk has SerDes circuits that run at 25 GHz, 2.5X that of the prior Trident-II ASICs, and this matches up to the 25 Gb/sec, 50 Gb/sec, and 100 Gb/sec port speeds that Tomahawk switches support natively. (You can run ports in 10 Gb/sec and 40 Gb/sec modes for compatibility.) The Tomahawk chip can drive 3.2 Tb/sec of switching bandwidth, too, which is again a factor of 2.5X over the Trident-II. As for latency, the Trident-II did a port-to-port hop in about 500 nanoseconds, and the Tomahawk does it in around 400 nanoseconds. So the latency gains are not huge, but still significant. (In a hyperscale datacenter, the distribution of latencies across the nodes across the datacenter is probably more important than latency inside of a rack anyway, but every little bit helps and consistency helps even more.)
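To put the generational jump in one place, here is a small sketch that recomputes the ratios from the numbers quoted above. The 10 GHz figure for Trident-II is implied by the 2.5X claim rather than stated outright, the latencies are the approximate values given, and the dictionary keys are our own labels.

```python
# Trident-II versus Tomahawk, using only figures quoted in the article.
# Bandwidth is kept in Gb/sec so the ratios are exact.
trident2 = {"serdes_ghz": 10, "bandwidth_gbps": 1280, "latency_ns": 500}
tomahawk = {"serdes_ghz": 25, "bandwidth_gbps": 3200, "latency_ns": 400}

serdes_ratio = tomahawk["serdes_ghz"] / trident2["serdes_ghz"]
bandwidth_ratio = tomahawk["bandwidth_gbps"] / trident2["bandwidth_gbps"]

# Fraction of the port-to-port hop time that Tomahawk shaves off.
latency_cut = (trident2["latency_ns"] - tomahawk["latency_ns"]) / trident2["latency_ns"]

print(f"SerDes speedup:    {serdes_ratio:.1f}X")
print(f"Bandwidth speedup: {bandwidth_ratio:.1f}X")
print(f"Latency reduction: {latency_cut:.0%}")
```

Bandwidth scales by 2.5X while port-to-port latency drops by roughly a fifth, which is the gap the paragraph above is pointing at.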

Facebook is being a bit secretive about the Wedge 100 top-of-rack switch and the almost certain successor to the 6-pack aggregation switch that will be derived from it. But clearly, with more bandwidth coming out of the server and into the top-of-rack, it is going to need to do something to boost capacity across the spine layer of its network. Hopefully, whatever Facebook does won’t take quite so long to get approved by the Open Compute Project and commercialized by contract manufacturer Accton, which sells the Wedge under its Edge-Core brand.

What we really want to know is what the operational and capital benefits are to using Wedge versus other switches, and hopefully there will be enough real-world customers soon to try to figure that out.

Other Switch Makers Contribute, Too

By the way, these current and future Facebook switches are not the only ones that have been accepted by the Open Compute Project or are currently under review. You can see the list of machines here.

Accton has created its own leaf switch, the AS5712-54X, which pairs the Trident-II ASIC with an Atom C2538 processor and has 48 10 Gb/sec downlinks and six 40 Gb/sec uplinks.

Alpha Networks has a nearly identical, OCP-compliant switch with the same basic feeds and speeds, plus an aggregation switch based on the Trident-II that has 32 40 Gb/sec ports.

Mellanox has contributed two switches based on its SwitchX-2 silicon (which implements 10 Gb/sec, 40 Gb/sec, and 56 Gb/sec Ethernet as well as, through the golden screwdriver, 56 Gb/sec FDR InfiniBand): One provides 48 10 Gb/sec downlinks and a dozen 40 Gb/sec uplinks with an Intel Celeron 1047UE coprocessor for the SwitchX-2 ASIC, and the other has 36 40 Gb/sec ports with the same basic motherboard. These two Mellanox switches were just accepted by the OCP in October. Accton has another switch in review, one that has 32 100 Gb/sec ports that uses multiple Trident-II ASICs.
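One way to compare the leaf switches above is the ratio of downlink to uplink bandwidth, commonly called the oversubscription ratio. That framing is ours, not something the vendors or the OCP listings quote; the sketch below just applies it to the port counts given above, with a hypothetical helper function.

```python
from fractions import Fraction


def oversubscription(down_ports: int, down_gbps: int,
                     up_ports: int, up_gbps: int) -> Fraction:
    """Downlink bandwidth divided by uplink bandwidth, as an exact ratio."""
    return Fraction(down_ports * down_gbps, up_ports * up_gbps)


# Accton AS5712-54X: 48 x 10 Gb/sec down, 6 x 40 Gb/sec up.
accton = oversubscription(48, 10, 6, 40)

# Mellanox SwitchX-2 leaf: 48 x 10 Gb/sec down, 12 x 40 Gb/sec up.
mellanox_leaf = oversubscription(48, 10, 12, 40)

for name, ratio in [("Accton AS5712-54X", accton), ("Mellanox leaf", mellanox_leaf)]:
    print(f"{name}: {ratio.numerator}:{ratio.denominator}")
```

By this measure, the Mellanox leaf, with twice the uplinks, has as much bandwidth going up as coming down, while the Accton design carries twice as much downlink as uplink capacity.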

While these are all interesting, they are the past, not the future. With the ASICs that support the 25G standard offering more bandwidth for less money and a lot less heat, it is hard to imagine why an enterprise would go with any 10 Gb/sec or 40 Gb/sec switch when 25 Gb/sec or 50 Gb/sec options are available. It will all come down to money, of course, but the hyperscalers are driving down the cost of these switches and we think that enterprises should ride that and have lots of capacity in their networks. Let something else be the bottleneck for the next couple of years.

With this in mind, we are far more interested in the Accton OCP AS7500 switch, which is based on the top-end XPliant CNX 88091 switch ASIC from Cavium Networks. This ASIC does adhere to the 25G standard that the hyperscalers forced upon the IEEE when they were unhappy with existing 100 Gb/sec technologies based on 10 GHz lanes in the SerDes. This puppy has a Xeon D-1548 processor, which means it won’t get as swamped as that Atom processor did in the Wedge switch (which the Facebook engineers talked about in their blog post). The compute side of this Accton XPliant switch has 4 GB of main memory plus 8 GB of flash for the Xeon D, and it also has that Atom chip with a similar chunk of memory and flash, plus a slew of FPGAs for accelerating certain network functions. To our eyes, it looks like you could drop a Broadcom Tomahawk switch chip into these like an engine swap in a muscle car. In any event, this switch is designed to be a top-of-rack switch or a leaf switch in a leaf/spine network, and it supports 32 ports running at 100 Gb/sec. These ports can be split down into 25 Gb/sec or 50 Gb/sec links with breakout cables.

About the only thing this switch is missing is 200 Gb/sec uplinks. You are going to have to wait a bit for those.

It will be interesting to see how the Open Compute switches based on 25G ASICs stack up on features and price against the ones from Arista Networks, Dell, Hewlett-Packard Enterprise, and Mellanox, which we have covered separately.

Bootnote: We did finally hear back from Facebook in response to some of our questions about Wedge 100, specifically from Omar Baldonado, software engineering infrastructure manager at the company. We asked about the prospect of using Wedge 100 in a future aggregation switch as well as how Wedge 100 and a future 6-pack 100 might ramp across Facebook’s datacenters.

“The 100G design is challenging because we’re now at the forefront of 100G technologies – both in terms of the chip and the optics,” explains Baldonado by email. “In comparison, when we did the 40G Wedge, the chip and optics were relatively mature. Right now, we’re focused on getting 6-pack into the same production scale as Wedge, and FBOSS as a software stack runs across our growing platform of network switches: Wedge, 6-pack, and now Wedge 100. We’re still in the beginning phases with Wedge 100. There would be a number of difficult technical challenges associated with making a more powerful 6-pack based on the 100G Wedge.”

Baldonado was not at liberty to say how many 6-pack switches had been deployed at Facebook, so we presume it is not that many.

As for when Facebook might have only homegrown switches in its datacenters, we suggested that Wedge 40 and 6-pack 40 would need a year or so of testing and then would take years to roll out across all of its infrastructure. Here is what he said: “Currently, we use thousands of Wedge 40 switches in production and expect to continue using it for a quite a while. We’re still in the early phases with Wedge 100 before it will be ready for our next generation of datacenters, but your assumption is not way off base.”

Facebook is not going to disclose what ASICs and CPUs it is using in the Wedge 100 design, but it did say that the point of the open networking effort was to disaggregate the components and allow for different ones to be swapped in and out.
