For a long time now, researchers have been working on automating the process of breaking up otherwise single-threaded code to run on multiple processors by way of multiple threads. Results, although occasionally successful, eluded anything approaching a unified theory of everything.

Still, there appears to be some interesting success via OpenMP. The good thing about OpenMP is that its developers realized that what is really necessary is for the C or Fortran programmer to provide just enough hints to the compiler that say “Hey, this otherwise single-threaded loop, this sequence of code, might benefit from being split amongst multiple threads.” It looks to me like they are right.

In this series, we have been talking about how to create multi-threaded applications to take advantage of multi-threaded hardware. (See The Essentials Of Multiprocessor Programming, What Is SMP Without Shared Memory?, and The Essence Of Multi-Threaded Applications for the details.) We have talked about theory and practice, and this last piece is more practice.

Being the sort of engineer/programmer who occasionally thinks that even programming in assembler is fun, we decided to take a good hard look at whether the simplifying abstractions being provided by OpenMP for C and Fortran were all that much “better” than the more primitive abstractions that C# provides for multi-threaded programming.

Before we go on, aside from our tendency to prefer thinking in terms of the lower-level primitives, we want to stress that there is nothing inherently wrong with either approach. If it does what you want, does it in a maintainable fashion, and does it within performance expectations, then it is a good approach. But I add the following: Please understand why you chose one approach over the other.

And perhaps it goes without saying, this short write up is not a definitive overview of OpenMP; that is done quite well enough both here and there, and from Intel here and there.

Additionally, for what we are going to write about here, the scope is limited to using a shared-memory, cache-coherent, symmetric multiprocessor (SMP) for the hardware and a single multi-threaded process representing a single address space for the storage model. We understand that this is also the scope of OpenMP. The essence of what we will be covering here is the question of how easily can we take our typical mental model of a single-threaded application and find and implement opportunities for allowing it to actually execute for much of its time under multiple threads.

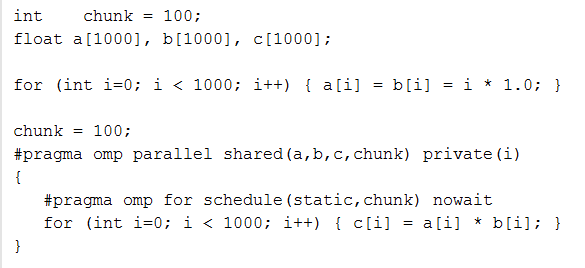

OpenMP is at least partly about providing multi-threading hints to the compiler. So how does it do that and what does the compiler do with such hints? We start by looking at the following short snippet of code. If executed by a single thread, all that it would be doing is multiplying the contents of two 1,000-entry arrays and putting the results in a third. This code would work fine and probably execute quite fast even with one thread, but for reasons of example only, this code is telling the compiler that part of this program might best be supported with multiple threads, ten of them, each processing 100 entries.

Using a special compiler, one capable of recognizing the meaning of the two #pragma statements,

- The first defines the complete scope of the code which is to be executed by the multiple threads (i.e., all of the code between the subsequent braces).

- The second points at the loop which is to be split amongst the threads.

As you may have seen by glancing at those earlier links, there is actually quite a bit more power to OpenMP, but this simple example demonstrates the essence of it.

Now a few more related observations:

- Starting with the code immediately after the first #pragma’s second/ending brace, processing returns again to just one thread. Something, compiler driven, enables that to happen.

- There is a basic assumption here that there is not really any communication between the threads. Each thread is working with its own private portion(s) of what appears to be a set of shared arrays.

- We have included a NOWAIT directive on the second #pragma. If I had not, and often you do want this default, then all threads synchronize with the last iteration of the parallel loop; all threads must have completed before the single-threaded code is allowed to continue past this point.

- The overhead of thread creation before the loop and thread destruction afterward is not cheap. For this application to have benefitted from this overhead, each “chunk” of loops should take a lot more time than it takes for this overhead.

Clearly, this code made it very easy to say what you intended in terms of multi-threading this loop. And, again, the OpenMP “language” – and language made up of these #pragmas, API calls, and environment variable controls – allows some pretty considerable additional control over the hints you want/need to provide.

But what is really going on under the covers? And what would it take if it were done via other means?

- The parallelism starts off with the creation of nine threads. The loop will be processed by ten threads, the current thread plus these nine more new ones.

- Post-creation, each thread starts its execution with what is essentially the first instruction of the loop. The compiler arranges for the address of this code to be passed to each thread at its creation.

- Each thread is provided, via the compiler, parameters addressing that thread’s own private portion of the three arrays.

- The compiler generates code to make each thread dispatchable independent of any other. Execution of any one begins when a processor becomes available and the system’s Task Dispatcher dispatches the thread to that processor. It is all asynchronous. A further point: Execution is asynchronous and might include multiple waits, say to read parts of the array and associated data into memory.

- When each thread completes, that thread essentially informs some structure that it had completed and then, if it is not the original Master thread, kills itself off (or perhaps returns to a system “available thread queue” for later reuse). Only when all have completed, the Master thread is allowed to continue execution.

So let’s look at a simple animation that, including the thread creation/destruction, demonstrates roughly the same thing, and repeatedly executes this function as though using a loop outside of the above code. That outside “loop” might actually be driven by incoming work requests from the outside world. This animation starts with only the Master thread and the array(s). When each Worker thread receives a “start” request, it begins execution, processing its portion of the array. (For simplification of the animation and for continuity with what is to come, we have only the Worker threads processing the array(s).)

As each Worker thread completes, completing asynchronously, it informs the Master thread. Although this completion could be implemented via a message, it could equally well have been implemented via an atomic counter (more on this later).

Back to the OpenMP code snippet above, with the completion of this code snippet, we are supposed to return to single-threaded processing; the Worker threads are no longer needed. Suppose, though, that we have an external something repeatedly driving this (e.g., an outer loop), no matter how this driver is implemented, the (re)start of this code snippet means we again need another set of Worker threads, to be later removed at the end. This approach is certainly not unreasonable, but there is a cost. Sure, we can save time by multithreading this loop, but what of the overhead time in the Master thread to start up the multithreading support? If the time for even a single thread to execute the entire loop is on a par with this multi-threaded startup overhead, there would seem to be no real benefit of multithreading here. When possible it would seem prudent to avoid this overhead as in this next animation in which there is no thread construction or destruction.

The OpenMP’s #pragmas imply that there exists this start/stop overhead with each execution. Instead, we need to move this overhead outside and do it just once. To do that, we am going to fall back to C#’s multithreading support, although the same can be done with C/C++ using pthreads as described here and there.

It happens that most of the C# support that we need for this application can be found in the preceding article, The Essence Of Multi-Threaded Applications. Just as in the code snippets found there, we will be creating the threads and their message queues, start execution of the threads, and share data.

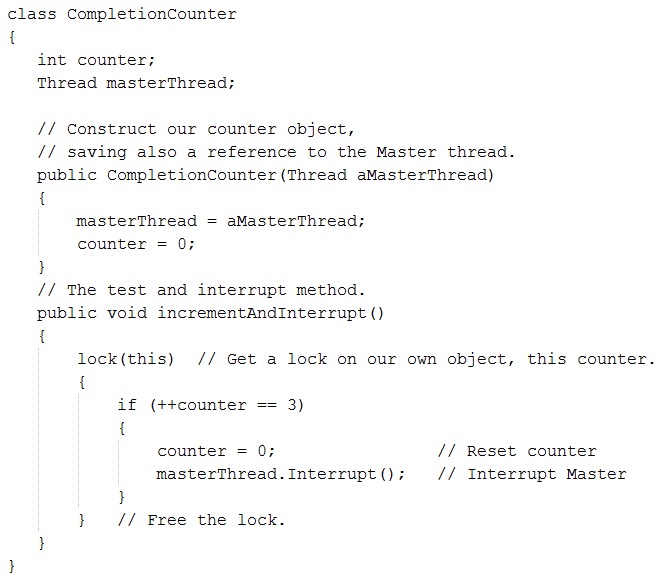

There is, though, something new here; in our animation, the Worker threads tell the Master thread that they are complete by way of a counter. When that counter reaches a value of three, only then does the Master thread return to a dispatchable state for the next iteration. Doing so is straightforward. What we are going to do is, via a common method, have each Worker thread increment and then test that counter. Only when the counter reaches the value three does one of the Worker threads send an interrupt request to a sleeping Master thread; the interrupt wakes the Master thread, returning it to processing.

So let’s start with putting the Master thread to sleep. (For more, see Pausing and Resuming Threads.) This is all that it takes:

Thread.Sleep(Timeout.Infinite);

We are telling the Master to sleep indefinitely until it receives an interrupt.

(By the way, do not use while (shared_Counter < 3) { … } within the Master thread for what we are about to do. The Sleep removes the Master thread from the processor, which is what we want; we don’t want the Master staying on a processor just for the purpose of polling for completion. This while loop just continues to execute, with the result being that this polling Master thread is competing with your Worker threads for an available processor.)

The actual code the Master thread would be executing would look something like the following:

try { Thread.Sleep(Timeout.Infinite); }

catch (ThreadInterruptedException) { ReturnMasterToWork(); }

The incoming interrupt from the last of the Worker threads results in the Thread.Sleep perceiving an exception. The exception is caught by the catch block code, resulting in the Master returning to work there. Nice and clean. This is all that it took for the Master to wait for the Worker thread’s completion.

The corresponding interrupt, produced by a Worker thread, is not much more than having the Work execute the following:

masterThread.Interrupt();

This is a Thread.Interrupt method, meaning that the Worker threads each need a reference to the Master thread’s own Thread object. That would need to come from the Master thread itself as in the following executed by the Master:

Thread masterThread = Thread.CurrentThread;

and where the object reference masterThread would have been made available to the Worker threads.

So the common code used for advancing the counter – also in shared memory – and executed by each Worker thread would drive something along the lines of the following:

- Get a Lock on or over the counter.

- Increment the counter.

- If the counter becomes 3, zero the counter and interrupt the Master Thread.

- Free the Lock on the counter.

In C#, getting a lock means getting a lock on an object. Your mental model for the counter, though, is likely to be a simple primitive integer which is not an object. So our little routine becomes implemented as a Class around such an integer counter – making it an object – as in:

The lock is implicitly freed upon leaving the lock’s bracketed code.

At application start, the Master thread would construct this shared counter as in

CompletionCounter completionCtr = new CompletionCounter(Thread.CurrentThread);

to initialize it to zero. Later, as each of the Worker threads ends processing, each Thread would execute something like the following:

completionCtr.incrementAndInterrupt();

calling the above code, and passing to it the handle of the Master thread. It does not matter the order in which these threads execute this method since only the one advancing the counter to it maximum value will interrupt the sleeping Master thread. After this, the Worker threads just go back and wait on their queue, after first getting themselves a lock – via a Monitor.Enter – on that queue.

And that’s it. It might have taken a while to get here, but putting the pieces together was actually fairly straightforward. Hopefully, you will have also noticed that there really are only a few concepts that need to be kept in mind. So, get going; go out there and arrange for all of those many processors to be kept busy.

Related Stories

The Essentials Of Multiprocessor Programming

What Is SMP Without Shared Memory?

The Essence Of Multi-Threaded Applications

o ERLANG / http://www.erlang.org

o ELIXIR / elixir-lang.org

o RUST / http://www.rust-lang.org

o LLVM / http://www.llvm.org