Machine learning is a rising star in the compute constellation, and for good reason. It has the ability to not only make life more convenient – think email spam filtering, shopping recommendations, and the like – but also to save lives by powering the intelligence behind autonomous vehicles, heart attack prediction, etc. While the applications of machine learning are bounded only by imagination, the execution of those applications is bounded by the available compute resources. Machine learning is compute-intensive, and it turns out that traditional compute hardware is not well-suited for the task.

Many machine learning shops have approached the problem with graphics processing units (GPUs), application-specific integrated circuits (ASICs) – for example, Google’s Tensor Processing Unit (TPU) – or field-programmable gate arrays (FPGAs) – for example, Microsoft’s investment in FPGAs for Azure and Amazon’s announcement of FPGA instances. Graphcore says these don’t provide the necessary performance boosts and suggests a different approach. The company is developing a new kind of hardware purpose-built for machine learning.

In a presentation to the Hadoop Users Group UK in October, Graphcore CTO Simon Knowles explained why current offerings fall short. ASICs represent a fixed point in time – once the chip is fabricated, its logic is fixed for good. The field of machine learning is relatively young and is still evolving rapidly, so committing to a particular model or algorithm up front means missing out on improvements for the life of the hardware. FPGAs can be updated, but only by reprogramming the hardware each time. GPUs are designed for high-performance, high-precision workloads, whereas machine learning tends to need high performance at low precision.
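To make the precision point concrete, here is a minimal Python sketch (our own illustration; the article contains no code) that computes the same dot product, the basic operation inside a neural-network layer, twice: once with 64-bit operands and once with every operand rounded to 16-bit half precision using the standard library's `struct` format `"e"`. The weights and activations are random, hypothetical values, and only the operands are rounded while the sums still run in 64-bit arithmetic, so this does not model any particular chip.

```python
import random
import struct

def to_half(x: float) -> float:
    """Round a float to the nearest IEEE 754 half-precision (16-bit) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def dot(a, b):
    """Dot product: the basic multiply-accumulate work of a neural-network layer."""
    return sum(x * y for x, y in zip(a, b))

rng = random.Random(42)
n = 1000
# Hypothetical weights and activations drawn uniformly from [-1, 1].
weights = [rng.uniform(-1.0, 1.0) for _ in range(n)]
activations = [rng.uniform(-1.0, 1.0) for _ in range(n)]

full = dot(weights, activations)
# Store every operand in 16 bits; the products are still summed as 64-bit floats.
reduced = dot([to_half(w) for w in weights], [to_half(a) for a in activations])

print(f"64-bit operands: {full:.6f}")
print(f"16-bit operands: {reduced:.6f}")
print(f"absolute error:  {abs(full - reduced):.2e}")
print(f"bytes per value: {struct.calcsize('<d')} vs {struct.calcsize('<e')}")
```

For inputs in this range, the error introduced by 16-bit storage is typically small compared with the result itself, while each stored value takes a quarter of the space. That trade-off is what low-precision hardware sets out to exploit.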

CPUs and GPUs are excellent at deterministic computing – in which a given input always yields a single, predictable output – but they fall short in probabilistic computing. What we know as “judgment” is probabilistic computing: producing approximate answers when data is missing or when there is not enough time or energy to compute an exact one.
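The distinction can be illustrated with a toy problem: the probability that two dice sum to 10 or more. In this sketch (our own example, not one from Graphcore or Knowles), one function answers deterministically by enumerating every outcome, while the other answers approximately from a limited number of random samples. The `budget` parameter is a hypothetical stand-in for limited time or energy.

```python
import random
from itertools import product

def exact_probability() -> float:
    """Deterministic: enumerate all 36 outcomes of two dice; same answer every run."""
    hits = sum(1 for a, b in product(range(1, 7), repeat=2) if a + b >= 10)
    return hits / 36

def estimated_probability(budget: int, rng: random.Random) -> float:
    """Probabilistic: spend a fixed sample budget and return an approximate answer."""
    hits = sum(1 for _ in range(budget) if rng.randint(1, 6) + rng.randint(1, 6) >= 10)
    return hits / budget

rng = random.Random(7)
print(f"exact: {exact_probability():.4f}")
for budget in (10, 100, 10_000):
    # A larger budget (more time or energy) usually gives a closer approximation.
    print(f"budget {budget:>6}: {estimated_probability(budget, rng):.4f}")
```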

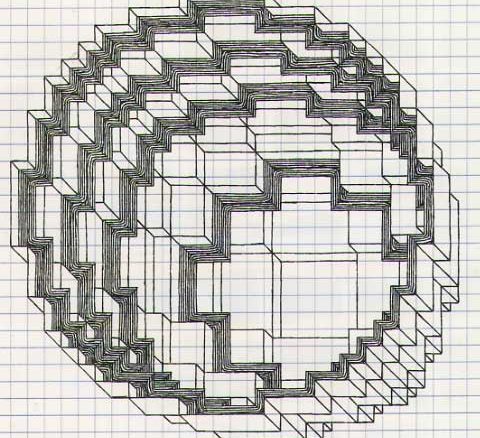

Graphcore is betting that hardware purpose-built for machine learning is the way to go. By designing a new class of processor – what it calls the Intelligence Processing Unit (IPU) – the company aims to give machine learning workloads better performance and efficiency. Instead of focusing on scalars (CPUs) or vectors (GPUs), the IPU is specifically designed for processing graphs. Graphs in machine learning are very sparse, with each vertex connected to only a few other vertices. Graphcore estimates that the IPU provides a 5x performance improvement for general machine learning workloads and 50-100x for some applications, such as autonomous vehicles. By comparison, GPU performance for machine learning, according to Knowles, increases at a rate of 1.3-1.4x every two years; at that pace, a 5x gain would take roughly 10 to 12 years of GPU progress.
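Sparsity matters because, if such a graph is stored as a dense adjacency matrix, almost every entry is zero, and hardware built around dense vectors and matrices spends most of its effort on values that contribute nothing. A common software representation for sparse graphs is compressed sparse row (CSR) storage. The sketch below is a generic illustration of that idea on a hypothetical ring graph. It says nothing about how the IPU itself stores or processes graphs, and the function names are our own.

```python
from typing import List, Tuple

def build_csr(num_vertices: int, edges: List[Tuple[int, int]]) -> Tuple[List[int], List[int]]:
    """Store a directed graph in compressed sparse row (CSR) form.

    Neighbours of vertex v are col_idx[row_ptr[v]:row_ptr[v + 1]], so memory
    grows with the number of edges rather than with num_vertices ** 2.
    """
    adjacency: List[List[int]] = [[] for _ in range(num_vertices)]
    for src, dst in edges:
        adjacency[src].append(dst)
    row_ptr, col_idx = [0], []
    for neighbours in adjacency:
        col_idx.extend(sorted(neighbours))
        row_ptr.append(len(col_idx))
    return row_ptr, col_idx

def aggregate(row_ptr: List[int], col_idx: List[int], values: List[float]) -> List[float]:
    """One message-passing step: each vertex sums the values of its neighbours.

    The work done is proportional to the number of edges, not num_vertices ** 2.
    """
    return [
        sum(values[u] for u in col_idx[row_ptr[v]:row_ptr[v + 1]])
        for v in range(len(row_ptr) - 1)
    ]

# Hypothetical example: a ring of 1,000 vertices, each linked to its two neighbours.
n = 1000
ring = [(v, (v + 1) % n) for v in range(n)] + [(v, (v - 1) % n) for v in range(n)]
row_ptr, col_idx = build_csr(n, ring)
density = len(col_idx) / (n * (n - 1))  # fraction of possible directed edges present
result = aggregate(row_ptr, col_idx, [float(v) for v in range(n)])
print(f"edges stored: {len(col_idx)}, density: {density:.4f}")
```

Each vertex touches only its two neighbours, so one aggregation step reads 2,000 stored edges instead of scanning a 1,000-by-1,000 matrix.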

Graphcore’s focus is on improving the speed and efficiency of the probabilistic computation needed for machines to exhibit what might be called intelligence. Knowles described intelligence as the combination of four capabilities. First, condensing experience (data) into a probability model. Second, summarizing that model. Third, predicting the likely outputs given a set of inputs. Lastly, inferring the likely inputs given an output. With the IPU, Graphcore hopes to be on the cutting edge. “Intelligence is the future of all computing,” Knowles told the group. “It’s hard to imagine a computing task that cannot be improved by [intelligence].”
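The four capabilities Knowles listed map onto familiar operations on a probability distribution. This toy sketch is our own illustration, not Graphcore’s method: it condenses a small, made-up set of (input, output) observations into an empirical joint distribution, summarizes it as marginal distributions (one possible choice of summary among many), predicts outputs with conditional probabilities, and infers inputs by conditioning in the other direction, which is equivalent to applying Bayes’ rule. Exact fractions keep the numbers easy to check.

```python
from collections import Counter
from fractions import Fraction

# Hypothetical "experience": observed (input, output) pairs.
experience = [
    ("cloudy", "rain"), ("cloudy", "rain"), ("cloudy", "rain"), ("cloudy", "dry"),
    ("sunny", "dry"), ("sunny", "dry"), ("sunny", "dry"), ("sunny", "rain"),
    ("sunny", "dry"), ("sunny", "dry"),
]

# 1. Condense experience into a probability model: the empirical joint distribution.
counts = Counter(experience)
total = sum(counts.values())
joint = {pair: Fraction(c, total) for pair, c in counts.items()}

def marginal(position: int) -> dict:
    """2. Summarize the model as the marginal distribution of inputs (0) or outputs (1)."""
    result = {}
    for pair, p in joint.items():
        result[pair[position]] = result.get(pair[position], Fraction(0)) + p
    return result

def predict(inp: str) -> dict:
    """3. Predict likely outputs given an input: P(output | input)."""
    p_in = marginal(0)[inp]
    return {out: p / p_in for (i, out), p in joint.items() if i == inp}

def infer(out: str) -> dict:
    """4. Infer likely inputs given an output: P(input | output), i.e. Bayes' rule."""
    p_out = marginal(1)[out]
    return {i: p / p_out for (i, o), p in joint.items() if o == out}

print(predict("cloudy"))
print(infer("dry"))
```

With this data, `predict("cloudy")` gives rain a probability of 3/4, and `infer("dry")` gives sunny a probability of 5/6.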

Yet we don’t use the word “betting” lightly above. Graphcore exited stealth mode at the end of October with the announcement of a $30 million funding round led by Robert Bosch Venture Capital GmbH and Samsung Catalyst Fund. While the IPU technology sounds promising, it will not go to market until sometime in 2017 and so has not yet established itself in real-world deployments. It remains to be seen whether the industry will accept a new processor paradigm. As Michael Feldman noted on episode 148 of “This Week in HPC”, the trend has been away from HPC-specific silicon and toward commodity processors.

However, Intel’s purchase of Nervana Systems and Google’s development of the Tensor Processing Unit suggest that major tech companies are willing to look at purpose-built chips for their deep learning efforts. If Graphcore can deliver on its stated performance, the lower power and space profile of the IPU may be enough to drive adoption – particularly in power- and space-constrained environments like automobiles.

It is likely that GPUs will suffer the same fate as CPUs did: in application areas they were never designed for in the first place, they will be overtaken by more specialized architectures that are a better fit for the purpose, just as GPUs overtook the CPU for graphics processing. With the end of silicon miniaturization now very near, the pressure is even higher than it was back then.

Yeah, hopefully Nvidia will realize this, too, before it’s too late, and also before it ruins GPUs for gaming by chasing machine learning (the two require quite different architectures if you want maximum efficiency out of them).