Emerging “Universal” FPGA, GPU Platform for Deep Learning

In the last couple of years, we have written and heard about the usefulness of GPUs for deep learning training as well as, to a lesser extent, custom ASICs and FPGAs. …

This month Nvidia bolstered its GPU strategy to stretch further into deep learning, high performance computing, and other markets, and while there are new options to consider, particularly for the machine learning set, it is useful to understand what these new arrays of chips and capabilities mean for users at scale. …

As a former research scientist at Google, Ian Goodfellow has had a direct hand in some of the more complex, promising frameworks set to power the future of deep learning in coming years. …

With machine learning taking off among hyperscalers and others who have massive amounts of data to chew on to better serve their customers, and with traditional simulation and modeling applications scaling better across multiple GPUs, all server makers are in an arms race to see how many GPUs they can cram into their servers to make bigger chunks of compute available to applications. …

When it comes to leveraging existing Hadoop infrastructure to extend what is possible with large volumes of data and various applications, Yahoo is in a unique position: it has the data and, just as important, it has a long history with Hadoop, MapReduce, and other key tools in the open source big data stack, all close at hand and staffed with seasoned experts. …

For Google, Baidu, and a handful of other hyperscale companies that have been working with deep neural networks and advanced applications for machine learning well ahead of the rest of the world, building clusters for both the training and inference portions of such workloads is kept, for the most part, a well-guarded secret. …

The future of hyperscale datacenter workloads is becoming clearer, and as that picture emerges, one thing stands out: those workloads are heavily driven by a wealth of non-text content, much of it streamed in for processing and analysis from an ever-growing number of users of gaming, social network, and other web-based services. …

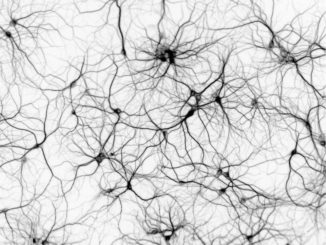

Back in the late 1980s, while working in the Adaptive Systems Research Department at AT&T Bell Labs, deep learning pioneer Yann LeCun was just starting down the path of implementing brain-inspired machine learning concepts for image recognition and processing, an effort that would eventually lead to some of the first realizations of these technologies in voice recognition for calling systems and handwriting analysis for banks. …

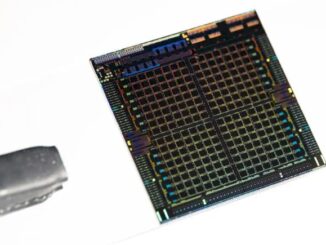

Chip maker Nvidia has come a long way in transforming its graphics processors so they can not only be used to drive screens, but also to work as efficient and powerful floating point engines to accelerate modeling and simulation workloads. …

No matter how elegant and clever the design is for a compute engine, the difficulty and cost of moving existing – and sometimes very old – code from the device it currently runs on to that new compute engine is a very big barrier to adoption. …

All Content Copyright The Next Platform