The Art Of System Design As HPC And AI Applications Diverge

Predicting the future is hard, even with supercomputers. And perhaps especially when you are talking about predicting the future of supercomputers. …

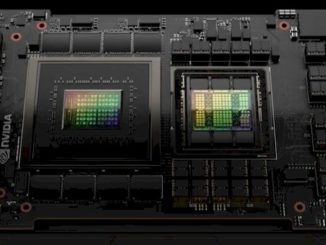

You can’t be certain about a lot of things in the world these days, but one thing you can count on is the voracious appetite for parallel compute, high bandwidth memory, and high bandwidth networking for AI training workloads. …

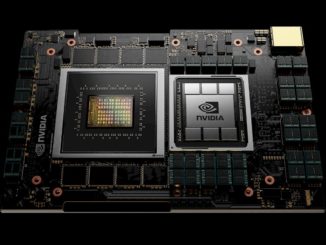

Imagine, if you will, that Nvidia had launched its forthcoming “Grace” Arm server CPU three years ago instead of early next year. …

There are many interpretations of the word venado, which means deer or stag in Spanish, and this week it gets another one: A supercomputer based on future Nvidia CPU and GPU compute engines and, quite possibly, Nvidia’s interconnect as well, if Los Alamos National Laboratory can convince Hewlett Packard Enterprise to support InfiniBand interconnects in its capability-class “Shasta” Cray EX machines. …

Within a year or so, with the launch of the “Grace” Arm server CPUs, it will not be heresy for anyone at Nvidia to believe, or to say out loud, that not every workload in the datacenter needs to have GPU acceleration. …

There are a lot of things that compute engine makers have to do if they want to compete in the datacenter, but perhaps the most important thing is to be consistent. …

While Marvell’s ThunderX family of server-class processors might not have taken high performance computing by storm from the outset, where there was interest and demand, it was fierce and committed. …

There has been talk and cajoling and rumor for years that GPU juggernaut Nvidia would jump into the Arm server CPU chip arena once again and actually deliver a product that has unique differentiation and a compelling value proposition, particularly for hybrid CPU-GPU compute complexes. …

All Content Copyright The Next Platform