The Art Of System Design As HPC And AI Applications Diverge

Predicting the future is hard, even with supercomputers. And maybe especially when you are talking about predicting the future of supercomputers. …

You can’t be certain about a lot of things in the world these days, but one thing you can count on is the voracious appetite for parallel compute, high bandwidth memory, and high bandwidth networking for AI training workloads. …

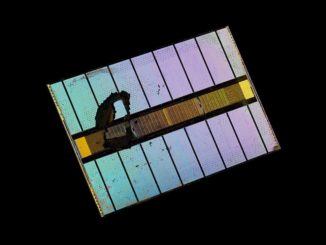

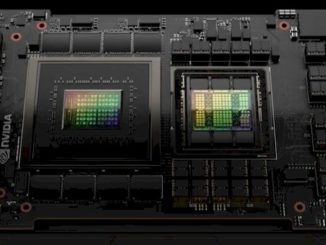

In March, Nvidia introduced its GH100, the first GPU based on the new “Hopper” architecture, which is aimed at both HPC and AI workloads, and importantly for the latter, supports an eight-bit FP8 floating point processing format. …

There are many interpretations of the word venado, which means deer or stag in Spanish, and this week it gets another one: a supercomputer based on future Nvidia CPU and GPU compute engines and, quite possibly, Nvidia’s interconnect as well, if Los Alamos National Laboratory can convince Hewlett Packard Enterprise to support InfiniBand interconnects in its capability-class “Shasta” Cray EX machines. …

There is increasing competition coming at Nvidia in the AI training and inference market, and at the same time, researchers at Google, Cerebras, and SambaNova are showing off the benefits of porting sections of traditional HPC simulation and modeling code to their matrix math engines, and Intel is probably not far behind with its Habana Gaudi chips. …

To a certain extent, the only thing that really matters in a computing system is what changes in its memory, and to that extent, this is what makes computers like us. …

With each passing generation of GPU accelerator engines from Nvidia, machine learning drives more and more of the architectural choices and changes, and traditional HPC simulation and modeling drives less and less. …

Within a year or so, with the launch of the “Grace” Arm server CPUs, it will not be heresy for anyone at Nvidia to believe, or to say out loud, that not every workload in the datacenter needs to have GPU acceleration. …

It has taken untold thousands of people to make machine learning, and specifically the deep learning variety, the most viable form of artificial intelligence. …

There are a lot of things that compute engine makers have to do if they want to compete in the datacenter, but perhaps the most important thing is to be consistent. …

All Content Copyright The Next Platform