Big Blue Can Still Catch The AI Wave If It Hurries

It has been two and a half decades since we last saw a rapidly expanding universe of a new kind of compute that rivals the current generative AI boom. …

The history of computing teaches us that software always and necessarily lags hardware, and unfortunately that lag can stretch for many years when it comes to wringing the best performance out of iron by tweaking algorithms. …

If Nvidia and AMD are licking their lips thinking about all of the GPUs they can sell to the hyperscalers and cloud builders to support their huge aspirations in generative AI – particularly when it comes to the OpenAI GPT large language model that is the centerpiece of all of the company’s future software and services – they had better think again. …

As we pointed out a year ago when some key silicon experts were hired from Intel and Broadcom to come work for Meta Platforms, the company formerly known as Facebook was always the most obvious place to do custom silicon. …

The best kinds of research are those that test new ideas and that also lead to practical innovations in real products. …

After its acquisition of ATI in 2006, the maturation of its discrete GPUs with the Instinct line over the past few years, and its acquisitions of Xilinx and Pensando here in 2022, AMD is no longer just a second source of X86 processors. …

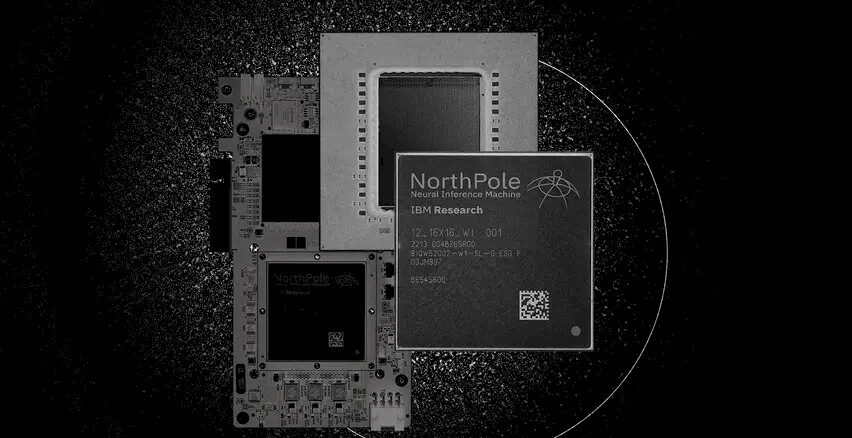

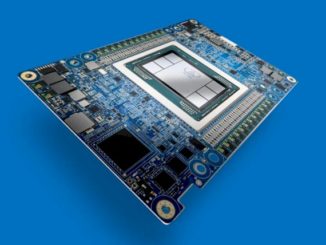

Nvidia is not the only company that has created specialized compute units that are good at the matrix math and tensor processing that underpins AI training and that can be repurposed to run AI inference. …

Industry benchmarks are important because, no matter that comparisons are odious, IT organizations nonetheless have to make them to plot out the architectures of their future systems. …

A number of chip companies, importantly Intel and IBM but also the Arm collective and AMD, have recently come out with new CPU designs that feature native support for artificial intelligence (AI) and its related discipline, machine learning (ML). …

Machine learning inference models have been running on X86 server processors from the very beginning of the latest – and by far the most successful – AI revolution, and the techies that know both hardware and software down to the minutest detail at the hyperscalers, cloud builders, and semiconductor manufacturers have been able to tune the software, jack the hardware, and retune for more than a decade. …

All Content Copyright The Next Platform