Maybe they should have called it DeepFake, or DeepState, or better still Deep Selloff. Or maybe the other obvious deep thing that the indigenous AI vendors in the United States are standing up to their knees in right now.

Call it what you will, but the DeepSeek foundation model has in one short week turned the AI world on its head, proving once again that Chinese researchers can make inferior hardware run a superior algorithm and get results that are commensurate with the best that researchers in the US, either at the national labs running exascale HPC simulations or at hyperscalers running AI training and inference workloads, can deliver.

And for a whole lot less money if the numbers behind the DeepSeek models are not hyperbole or even mere exaggeration. Unfortunately, there may be a bit of that, which will be cold comfort for the investors in Nvidia and other publicly traded companies that are playing in the AI space right now. These companies have lost hundreds of billions of dollars in market capitalization today as we write.

Having seen the paper come out a few days ago about the DeepSeek-V3 training model, we were already set to give it a looksee this morning to start the week, and Wall Street’s panic beat us to the punch. Here is what we know.

DeepSeek-AI was founded by Liang Wenfeng in May 2023 and is effectively a spinout of High-Flyer AI, a hedge fund reportedly with $8 billion in assets under management that was created explicitly to employ AI algorithms to trade in various kinds of financial instruments. It had been largely under the radar until August 2024, when DeepSeek published a paper describing a new kind of load balancer it had created to link the elements of its mixture of experts (MoE) foundation model to each other. Over the holidays, the company published the architectural details of its DeepSeek-V3 foundation model, which spans 671 billion parameters (with only 37 billion parameters activated for any given token generated) and was trained on 14.8 trillion tokens.

And finally, and perhaps most importantly, on January 20, DeepSeek rolled out its DeepSeek-R1 model, which adds two more reinforcement learning stages and two supervised fine tuning stages to enhance the model’s reasoning capabilities. DeepSeek AI is charging 6.5X more for the R1 model than for the base V3 model, as you can see here.

There is much chatter out there on the Intertubes as to why this might be the case. We will get to that. Hold on.

Interestingly, the source code for the V3 and R1 models and their V2 predecessor is all available on GitHub, which is more than you can say for the proprietary models from OpenAI, Google, Anthropic, xAI, and others.

But what we want to know – and what is roiling the tech titans today – is precisely how DeepSeek was able to take a few thousand crippled “Hopper” H800 GPU accelerators from Nvidia, which have some of their performance capped, and create an MoE foundation model that can stand toe-to-toe with the best that OpenAI, Google, and Anthropic can do with their largest models as they are trained on tens of thousands of uncrimped GPU accelerators. If it takes one-tenth to one-twentieth the hardware to train a model, that would seem to imply that the value of the AI market can, in theory, contract by a factor of 10X to 20X. It is no coincidence that Nvidia stock is down 17.2 percent as we write this sentence.

In the DeepSeek-V3 paper, DeepSeek says that it spent 2.66 million GPU-hours on H800 accelerators to do the pretraining, 119,000 GPU-hours on context extension, and a mere 5,000 GPU-hours for supervised fine-tuning and reinforcement learning on the base V3 model, for a total of 2.79 million GPU-hours. At a cost of $2 per GPU-hour – we have no idea if that is actually the prevailing price in China – it cost a mere $5.58 million to train V3.
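
The arithmetic is easy to check. The sketch below uses the unrounded pretraining figure from the paper's cost table (2,664K GPU-hours, which the text above rounds to 2.66 million) and the same $2 per GPU-hour rental rate. The variable names are ours, and the result says nothing about whether that rate reflects what DeepSeek actually paid.

```python
# GPU-hour figures from the V3 paper's training cost table (H800 GPU-hours).
GPU_HOURS = {
    "pretraining": 2_664_000,
    "context_extension": 119_000,
    "post_training": 5_000,  # supervised fine-tuning plus reinforcement learning
}
RENTAL_PRICE_PER_GPU_HOUR = 2  # US dollars; an assumed rental rate, not a measured cost

total_gpu_hours = sum(GPU_HOURS.values())
total_cost = total_gpu_hours * RENTAL_PRICE_PER_GPU_HOUR

print(f"{total_gpu_hours / 1e6:.2f} million GPU-hours")
print(f"${total_cost / 1e6:.2f} million")
```

Note that this counts only the final training run at a rental price. It leaves out research, ablations, data preparation, and the capital cost of the cluster.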

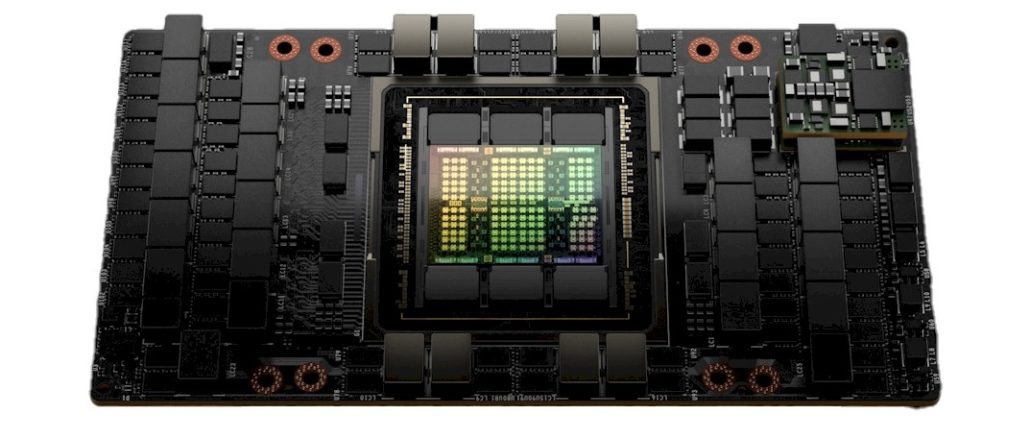

The cluster that DeepSeek says that it used to train the V3 model had a mere 256 server nodes with eight of the H800 GPU accelerators each, for a total of 2,048 GPUs. We presume that they are the H800 SXM5 version of the H800 cards, which have their FP64 floating point performance capped at 1 teraflops and are otherwise the same as the 80 GB version of the H100 card that most of the companies in the world can buy. (The PCI-Express version of the H800 card has some of its CUDA cores deactivated and has its memory bandwidth cut by about 40 percent to 2 TB/sec from the 3.35 TB/sec on the base H100 card announced way back in 2022.) The eight GPUs inside the node are interlinked with NVSwitches to create a shared memory domain across those GPU memories, and the nodes have multiple InfiniBand cards (probably one per GPU) to create high bandwidth links out to other nodes in the cluster. We strongly suspect DeepSeek only had access to 100 Gb/sec InfiniBand adapters and switches, but it could be running at 200 Gb/sec; the company does not say.

We think this is a fairly modest cluster by any modern AI standard, especially given the size of the clusters that OpenAI/Microsoft, Anthropic, and Google have built to train their equivalent GPT-4 and o1, Claude 3.5, and Gemini 1.5 models. We are very skeptical that the V3 model was trained from scratch on such a small cluster. It is simply hard to accept until someone else repeats the task. Luckily, science is repeatable: There are companies with trillions of curated tokens and tens of thousands of GPUs that can test whether what DeepSeek is claiming is true. On 2,048 H100 GPUs, it would take under two months to train DeepSeek-V3 if what the Chinese AI upstart says is true. That’s pocket change for the hyperscalers and cloud builders to prove out.
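
Here is where the "under two months" figure comes from, as a back-of-the-envelope sketch. It assumes the 2.79 million GPU-hour total is spread evenly across all 2,048 GPUs, with no downtime, restarts, or scaling losses, which is our simplification and not something the paper states.

```python
TOTAL_GPU_HOURS = 2.79e6  # total GPU-hours quoted from the V3 paper
GPUS = 2048               # 256 nodes with eight GPUs each

# Assumption: every GPU is busy for the whole run, so wall-clock time
# is simply total GPU-hours divided by the number of GPUs.
wall_clock_hours = TOTAL_GPU_HOURS / GPUS
wall_clock_days = wall_clock_hours / 24

print(f"{wall_clock_hours:.0f} hours, or about {wall_clock_days:.1f} days")
```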

Despite that skepticism, if you comb through the 53 page paper, there are all kinds of clever optimizations and approaches that DeepSeek has taken to make the V3 model, and we do believe that these cut down on inefficiencies and boost the training and inference performance on the iron DeepSeek has to play with.

The key innovation in the approach taken to train the V3 foundation model, we think, is the use of 20 of the 132 streaming multiprocessors (SMs) on the Hopper GPU to work, for lack of better words, as a communication accelerator and scheduler for data as it passes around a cluster as the training run chews through the tokens and generates the weights for the model from the parameter depths set. As far as we can surmise, this “overlap between computation and communication to hide the communication latency during computation,” as the V3 paper puts it, uses SMs to create what is in effect an L3 cache controller and a data aggregator between the GPUs not in the same nodes.

As the paper puts it, this communication accelerator, which is called DualPipe, has the following tasks:

- Forwarding data between the InfiniBand and NVLink domain while aggregating InfiniBand traffic destined for multiple GPUs within the same node from a single GPU.

- Transporting data between RDMA buffers (registered GPU memory regions) and input/output buffers.

- Executing reduce operations for all-to-all combine.

- Managing fine-grained memory layout during chunked data transferring to multiple experts across the InfiniBand and NVLink domain.

In another sense, then, DeepSeek has created its own on-GPU virtual DPU for doing all kinds of SHARP-like processing associated with all-to-all communication in the GPU cluster.

Here is an important paragraph about DualPipe:

“As for the training framework, we design the DualPipe algorithm for efficient pipeline parallelism, which has fewer pipeline bubbles and hides most of the communication during training through computation-communication overlap. This overlap ensures that, as the model further scales up, as long as we maintain a constant computation-to-communication ratio, we can still employ fine-grained experts across nodes while achieving a near-zero all-to-all communication overhead. In addition, we also develop efficient cross-node all-to-all communication kernels to fully utilize InfiniBand and NVLink bandwidths. Furthermore, we meticulously optimize the memory footprint, making it possible to train DeepSeek-V3 without using costly tensor parallelism. Combining these efforts, we achieve high training efficiency.”

The paper does not say how much of a boost this DualPipe feature offers. But suppose a GPU is waiting for data 75 percent of the time because of the inefficiency of communication. If DeepSeek can cut that delay by hiding latency and by using scheduling tricks, much as L3 caches do for CPU and GPU cores, and can push computational efficiency to near 100 percent on those 2,048 GPUs, then the cluster would start acting as if it had 8,192 GPUs (with some of the SMs missing, of course) that were not running as efficiently because they did not have DualPipe. OpenAI’s GPT-4 foundation model was trained on 8,000 of Nvidia’s “Ampere” A100 GPUs, which is like 4,000 H100s (sort of). We are not saying this is the ratio DeepSeek attained, we are just saying this is how you might think about it.
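
To make that thought experiment concrete, here is a minimal sketch of the arithmetic. The 25 percent and 100 percent utilization figures are the hypothetical ones used above, not measurements from the paper, and the SM haircut assumes (crudely) that compute throughput scales linearly with the number of SMs left over for math.

```python
def effective_gpus(physical_gpus, util_before, util_after):
    """Number of GPUs at util_before that match physical_gpus at util_after."""
    return physical_gpus * util_after / util_before

PHYSICAL_GPUS = 2048
TOTAL_SMS = 132          # SMs on a Hopper GPU
COMMUNICATION_SMS = 20   # SMs DeepSeek sets aside for communication

# Hypothetical: GPUs go from waiting 75 percent of the time to never waiting.
equivalent = effective_gpus(PHYSICAL_GPUS, util_before=0.25, util_after=1.0)

# Crude haircut for the SMs that no longer do math (assumes linear scaling).
compute_fraction = (TOTAL_SMS - COMMUNICATION_SMS) / TOTAL_SMS
equivalent_after_haircut = equivalent * compute_fraction

print(f"{equivalent:.0f} equivalent GPUs before the SM haircut")
print(f"{equivalent_after_haircut:.0f} equivalent GPUs after it")
```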

And here is another interesting architectural feature of the DeepSeek model: V3 uses pipeline parallelism and data parallelism, but because the memory is managed so tightly, and because forward and backward propagations are overlapped as the model is being built, V3 does not have to use tensor parallelism at all. Weird, right?

Another key innovation for V3 is that auxiliary loss-free load balancing mentioned above. When you train a MoE model, there has to be some sort of router to know which model to send which tokens, just like you have to know which model to listen to when you query a bunch of the models inherent in the MoE.
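
A toy sketch may help show what "auxiliary loss-free" means in practice. As we read the DeepSeek papers, each expert gets a bias that is added to its routing score only when picking the top experts for a token, and that bias is nudged down when the expert is overloaded and up when it is underloaded, so no extra loss term is needed. The function names, the update step `gamma`, and the toy scores below are all our own illustrative choices, not DeepSeek's code.

```python
def route(token_scores, bias, top_k):
    """Pick top_k experts per token by (score + bias); return per-expert load."""
    load = [0] * len(bias)
    for scores in token_scores:
        adjusted = [s + b for s, b in zip(scores, bias)]
        ranked = sorted(range(len(adjusted)), key=lambda i: adjusted[i], reverse=True)
        for expert in ranked[:top_k]:
            load[expert] += 1
    return load

def update_bias(bias, load, gamma):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - gamma)
        elif n < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias

# Toy batch: eight identical tokens that all prefer experts 0 and 1.
token_scores = [[0.9, 0.5, 0.1, 0.2]] * 8
bias = [0.0, 0.0, 0.0, 0.0]

load = route(token_scores, bias, top_k=2)
bias = update_bias(bias, load, gamma=0.1)
print("load per expert:", load)
print("bias after one update:", bias)
```

Repeating the route-and-update step shifts tokens toward the idle experts over time. In a real model the tokens in a batch have varied scores, so the load spreads out more smoothly than it does in this toy with identical tokens.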

Another performance boost is FP8 low precision processing, which boosts bandwidth through the GPUs at the same time as making the most of the limited 80 GB of memory on the H800 GPU accelerators. The majority of the V3 model kernels are implemented in FP8 format. But certain operations still require 16-bit or 32-bit precision, and master weights, weight gradients, and optimizer states are stored in higher precisions than FP8. DeepSeek has come up with its own way of microscaling the mantissas and exponents of data being processed such that the level of precision and numerical range necessary for any given calculation can be maintained without sacrificing the fidelity of the data in a way that hurts the reliability of the answers that come out of the model.

One neat technique that DeepSeek came up with is to promote higher-precision matrix math operations on intermediate results in the tensor cores to the vector units on the CUDA cores to preserve a semblance of higher precision. (Enough of a semblance to get output that looked like 32-bit math was used for the whole dataset.) Incidentally, DeepSeek uses 4-bit exponents and 3-bit mantissas – a format called E4M3 – on all tensor calculations inside the tensor cores. None of this funny bitness is happening inside there. It is just happening in the CUDA cores. FP16 formats are used inside the optimizer and FP32 formats are used for the master weights of the V3 model.
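
Why promotion helps is easier to see with a toy model. The sketch below is not how Hopper tensor cores work internally. It simply rounds a running sum to a fixed number of significant bits to mimic a narrow accumulator, then compares that against accumulating short runs narrowly and adding each partial sum into a wide accumulator. The 10-bit width is deliberately narrower than real hardware so the effect shows up in a small example, and the 128-element interval and function names are our assumptions.

```python
import math

def round_to_bits(x, bits):
    """Round x to `bits` significant binary digits (a toy narrow accumulator)."""
    if x == 0.0:
        return 0.0
    mantissa, exponent = math.frexp(x)  # x == mantissa * 2**exponent
    return math.ldexp(round(mantissa * 2 ** bits), exponent - bits)

def narrow_sum(products, bits):
    """Accumulate every product in the narrow accumulator."""
    acc = 0.0
    for p in products:
        acc = round_to_bits(acc + p, bits)
    return acc

def promoted_sum(products, bits, interval):
    """Accumulate narrowly for `interval` products, then promote the partial sum."""
    total = 0.0  # a Python float stands in for the wide FP32 register
    for start in range(0, len(products), interval):
        total += narrow_sum(products[start:start + interval], bits)
    return total

products = [1.0] * 4096  # stand-in for 4,096 multiply results
print("narrow only:", narrow_sum(products, bits=10))
print("with promotion:", promoted_sum(products, bits=10, interval=128))
```

In this toy, the narrow accumulator stalls once the running sum is so large that adding 1.0 falls below its resolution, while the promoted version keeps each partial sum small enough to stay exact.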

There are lots of other neat tricks, such as recomputing all RMSNorm operations and recomputing all MLA up-projections during back propagation, which means they do not take up valuable space in the HBM memory on the H800 card. The exponential moving average (EMA) parameters, which are used to estimate the performance of the model and its learning rate decay, are stored in CPU host memory. Memory consumption and communication overhead are cut further by caching model activations and optimizer states in lower precision formats.

After perusing the paper, judge for yourself if all of the clever tweaks can add up to a 10X reduction in hardware. We are skeptical until we see proof.

Interestingly, in the V3 model paper, DeepSeek researchers offered Nvidia – or other AI accelerator providers – a list of needed features.

“Our experiments reveal that it only uses the highest 14 bits of each mantissa product after sign-fill right shifting, and truncates bits exceeding this range. However, for example, to achieve precise FP32 results from the accumulation of 32 FP8×FP8 multiplications, at least 34-bit precision is required. Thus, we recommend that future chip designs increase accumulation precision in Tensor Cores to support full-precision accumulation, or select an appropriate accumulation bit-width according to the accuracy requirements of training and inference algorithms. This approach ensures that errors remain within acceptable bounds while maintaining computational efficiency.”

DeepSeek has developed a method of tile-wise and block-wise quantization, which lets it move the numerical range of numbers at a certain bitness around within a dataset. Nvidia only supports tensor quantization, and DeepSeek wants Nvidia architects to read its paper and see the benefits of its approach. (And even if Nvidia does add such a feature, it might be turned off by the US government.)
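
A rough simulation shows why finer-grained scaling matters. The sketch below rounds values onto a simulated E4M3 grid (largest finite value 448, three mantissa bits) in plain Python and compares one scale for the whole tensor against one scale per 128-value tile. The rounding function is our simplified model of the format, and the 128-value tile width and the toy data with a single outlier are illustrative assumptions.

```python
import math

FP8_E4M3_MAX = 448.0  # largest finite E4M3 value

def round_to_e4m3(x):
    """Round x to the nearest value on a simulated E4M3 grid (simplified)."""
    if x == 0.0:
        return 0.0
    x = max(-FP8_E4M3_MAX, min(FP8_E4M3_MAX, x))
    _, e = math.frexp(abs(x))       # abs(x) == m * 2**e with 0.5 <= m < 1
    exponent = max(e - 1, -6)       # -6 is the smallest normal exponent
    step = 2.0 ** (exponent - 3)    # three mantissa bits: 8 steps per binade
    return round(x / step) * step

def quantize(values, tile):
    """Scale each tile so its largest value maps to 448, round, then unscale."""
    out = []
    for start in range(0, len(values), tile):
        chunk = values[start:start + tile]
        amax = max(abs(v) for v in chunk)
        scale = FP8_E4M3_MAX / amax if amax > 0 else 1.0
        out.extend(round_to_e4m3(v * scale) / scale for v in chunk)
    return out

def mean_abs_error(original, approx):
    return sum(abs(a - b) for a, b in zip(original, approx)) / len(original)

# Toy data: one tile of small values, one tile containing a large outlier.
small_tile = [(i + 1) * 1e-4 for i in range(128)]
outlier_tile = [1000.0] + [1.0] * 127
values = small_tile + outlier_tile

per_tensor = quantize(values, tile=len(values))  # one scale for everything
per_tile = quantize(values, tile=128)            # one scale per 128 values

print("per-tensor error:", mean_abs_error(values, per_tensor))
print("per-tile error:  ", mean_abs_error(values, per_tile))
```

With a single scale, the outlier forces the small values down toward the bottom of the FP8 range, where many of them round to zero. With a scale per tile, the outlier only hurts the tile it lives in.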

DeepSeek also wants support for online quantization, which is also part of the V3 model. To do online quantization, DeepSeek says it has to read 128 BF16 activation values, which is the output of a prior calculation, from HBM memory to quantize them, write them back as FP8 values to the HBM memory, and then read them again to perform MMA operations in the tensor cores. DeepSeek says that future chips should have FP8 cast and tensor memory acceleration in a single, fused operation so the quantization can happen during the transfer of activations from global to shared memory, cutting down on reads and writes. DeepSeek also wants GPU makers to fuse matrix transposition with GEMM operations, which will also cut down on memory operations and make the quantization workflow more streamlined.
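
The memory traffic argument can be tallied per 128-value group. This sketch counts only the activation bytes moving to and from HBM as described above. It ignores scaling factors, caching, and any other traffic, so treat the ratio as a rough illustration and not a performance prediction.

```python
GROUP_ELEMENTS = 128  # activation values quantized together
BF16_BYTES = 2
FP8_BYTES = 1

# Today, per the description above: read BF16, write FP8, read FP8 again.
unfused_bytes = (
    GROUP_ELEMENTS * BF16_BYTES    # read BF16 activations from HBM
    + GROUP_ELEMENTS * FP8_BYTES   # write quantized FP8 values back to HBM
    + GROUP_ELEMENTS * FP8_BYTES   # read FP8 values again for the MMA
)

# Requested fused path: cast to FP8 while moving from global to shared memory.
fused_bytes = GROUP_ELEMENTS * BF16_BYTES  # a single BF16 read

print(f"unfused: {unfused_bytes} bytes, fused: {fused_bytes} bytes")
print(f"HBM traffic ratio: {unfused_bytes / fused_bytes:.1f}x")
```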

Now, here is the kicker, which we alluded to above. DeepSeek trains this V3 model. To create the R1 model, it takes the output of other AI models (according to rumor) and feeds it into reinforcement learning and supervised fine-tuning operations to improve the “reasoning patterns” of V3. And then comes the twist, as outlined in the paper:

“We conduct post-training, including Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) on the base model of DeepSeek-V3, to align it with human preferences and further unlock its potential. During the post-training stage, we distill the reasoning capability from the DeepSeek-R1 series of models, and meanwhile carefully maintain the balance between model accuracy and generation length.”

Later in the paper, DeepSeek says this: “We introduce an innovative methodology to distill reasoning capabilities from the long-Chain-of-Thought (CoT) model, specifically from one of the DeepSeek R1 series models, into standard LLMs, particularly DeepSeek-V3. Our pipeline elegantly incorporates the verification and reflection patterns of R1 into DeepSeek-V3 and notably improves its reasoning performance. Meanwhile, we also maintain control over the output style and length of DeepSeek-V3.”

How much does this snake eating tail described above boost the effectiveness of the V3 model and reduce the training burden? We would love to see that quantified and qualified.

“And here is another side effect: The V3 model uses pipeline parallelism and data parallelism, but because the memory is managed so tightly, and overlaps forward and backward propagations as the model is being built, V3 does not have to use tensor parallelism at all. Weird, right?”

This is mostly because of the small # of GPUs used. They can use expert parallelism as well to eliminate the need for TP. If you used more GPUs, you’d need TP.

If this is indeed “DeepFake”, then it’s one of the best engineered shorts (both in an energy and IT capital sense) in decades.

So it’s all mechanics, and really not a touch of actual theory.

But then, what is the theory behind LLMs? Doh!

Man, that opening paragraph should be typeset in gold and hung on your office wall. Great work, Timothy.

DeepSeek’s innovations in GPU memory management and communication optimization are fascinating, particularly their DualPipe algorithm and their novel approach to FP8 precision. The repurposing of 20 SMs as communication accelerators is especially clever – essentially creating a virtual DPU on the GPU itself to handle all-to-all communication.

I share the writer’s skepticism about achieving equivalent performance to GPT-4 with only 2,048 H800s. Even with their elimination of tensor parallelism and efficient pipeline/data parallelism, a 10x reduction in hardware requirements seems ambitious. I’m particularly interested in seeing independent verification of their E4M3 precision claims and how they maintain numerical stability when promoting calculations from tensor cores to CUDA cores. Their request for increased accumulation precision in future tensor cores is telling – it suggests they’re pushing right up against the limits of what’s possible with current hardware.

Would this DualPipe approach work even better on unified memory like MI300A?

I need to think about that. My gut reaction is yes, since it is really about staging data across GPUs in all-to-all communication. Being an APU doesn’t really change that.

Their neatest trick is getting people to really believe they don’t have a room full of H100s.

What impresses me about DeepSeek-V3 is that it only has 671B parameters and it only activates 37B parameters for each token. Instead of trying to have an equal load across all the experts in a Mixture-of-Experts model, as DeepSeek-V3 does, experts could be specialized to a particular domain of knowledge so that the parameters being activated for one query would not change rapidly. This would allow a chip like Sapphire Rapids Xeon Max to hold the 37B parameters being activated in HBM and the rest of the 671B parameters would be in DIMMs. This would be an ideal inference server for a small/medium size business. Queries would stay behind the company’s firewall. Unlike data center GPUs, this hardware could be used for general-purpose computing when it is not needed for AI. The HBM bandwidth of Sapphire Rapids Xeon Max is only 1.23 TBytes/sec so that needs to be fixed but the overall architecture with both HBM and DIMMs is very cost-effective. The reason it is cost-effective is that there are 18x more total parameters than activated parameters in DeepSeek-V3 so only a small fraction of the parameters need to be in costly HBM. Imagine a Xeon Diamond Rapids with 4.8 TBytes/sec of HBM3E bandwidth. That could generate about 4800B / 37B = 130 tokens/sec using DeepSeek-V3.

NVIDIA’s market cap fell by $589B on Monday. This loss in market cap is about 7x more than Intel’s current market cap ($87.5B). At NVIDIA’s new lower market cap ($2.9T), NVIDIA still has a 33x higher market cap than Intel.

Timothy Prickett Morgan wrote a good article about Xeon Max here:

nextplatform.com/2022/11/15/sapphire-rapids-xeon-sps-plus-hbm-offer-big-performance-boost

Interesting scenario, Sunil. Thanks for that.

Another banger Tim. Nice deepdive on deepseek… ha

Many in and out of Nvidia are claiming that this is actually a validation of the technology; that refinement of the methods of doing AI will make it plausible for more than 3-4 big companies to offer technology based on AI. I don’t know to what degree it’s true, but I really feel it’s necessary. I’m honestly not that impressed by what the AI industry has offered to date, and doubt it will be all that useful to a lot of industries. I think we need a lot more improvement before AI can be widely useful, and I’d rather have dozens of places trying to improve the state of the art, rather than just a handful.

As I said before, more sanctions on China will only lead to more innovation from Chinese engineers. And Trump is going to Make China Great Again.

Rather like having a family with your sister, training one model on the output of another is how the insanity starts.

Commentators testing bias have shown that DeepSeek can generate information relating to subjects censored by China. (They tell the LLM to use substitute characters, bypassing internal censors.) This indicates that the LLM was trained outside the Chinese firewall. This opens the possibility of training outside China on higher spec hardware.

I asked it a simple question about a legal issue in my country, and it succeeded in generating a human-like answer but failed to answer the question correctly. I understand that the potential lies in efficiency, but this is like comparing real-time 3D graphics on a C64 with 3D on modern hardware: they both look like 3D graphics, but one of them can only be used in a highly specialized use case.

Or like comparing 3D graphics to actually driving a car. . . .

Back door to a back door, straight to the CCP.